Image Classification

Overview

Image classification is a fundamental task in computer vision that involves categorizing an image into one of several predefined classes. It’s a cornerstone of artificial intelligence, enabling machines to interpret and understand visual information in a way that mimics human perception.

Image classification refers to assigning a label or category to an entire image based on its visual content. This task is crucial in computer vision and has numerous applications across various industries. Image classification’s importance lies in its ability to automate visual understanding tasks that would otherwise require human intervention.

Applications in Real-World Scenarios

Image classification has found its way into numerous real-world applications, revolutionizing various sectors:

- Healthcare: Assisting in medical image analysis, such as identifying abnormalities in X-rays or MRIs.

- Agriculture: Monitoring crop health and detecting plant diseases through aerial imagery.

- Automotive: Enabling advanced driver assistance systems and autonomous vehicles to recognize road signs, pedestrians, and other vehicles.

- Retail: Powering visual search capabilities and automated inventory management systems.

- Security and Surveillance: Enhancing threat detection and facial recognition systems.

- Environmental Monitoring: Analyzing satellite imagery for deforestation, urban planning, and climate change studies.

Advantages of Running Classification on Edge Devices like Raspberry Pi

Implementing image classification on edge devices such as the Raspberry Pi offers several compelling advantages:

Low Latency: Processing images locally eliminates the need to send data to cloud servers, significantly reducing response times.

Offline Functionality: Classification can be performed without an internet connection, making it suitable for remote or connectivity-challenged environments.

Privacy and Security: Sensitive image data remains on the local device, addressing data privacy concerns and compliance requirements.

Cost-Effectiveness: Eliminates the need for expensive cloud computing resources, especially for continuous or high-volume classification tasks.

Scalability: Enables distributed computing architectures where multiple devices can work independently or in a network.

Energy Efficiency: Optimized models on dedicated hardware can be more energy-efficient than cloud-based solutions, which is crucial for battery-powered or remote applications.

Customization: Deploying specialized or frequently updated models tailored to specific use cases is more manageable.

We can create more responsive, secure, and efficient computer vision solutions by leveraging the power of edge devices like Raspberry Pi for image classification. This approach opens up new possibilities for integrating intelligent visual processing into various applications and environments.

In the following sections, we’ll explore how to implement and optimize image classification on the Raspberry Pi, harnessing these advantages to create powerful and efficient computer vision systems.

Setting Up the Environment

Updating the Raspberry Pi

First, ensure your Raspberry Pi is up to date:

sudo apt update

sudo apt upgrade -yInstalling Required Libraries

Install the necessary libraries for image processing and machine learning:

sudo apt install python3-pip

sudo rm /usr/lib/python3.11/EXTERNALLY-MANAGED

pip3 install --upgrade pipSetting up a Virtual Environment (Optional but Recommended)

Create a virtual environment to manage dependencies:

python3 -m venv ~/tflite

source ~/tflite/bin/activateInstalling TensorFlow Lite

We are interested in performing inference, which refers to executing a TensorFlow Lite model on a device to make predictions based on input data. To perform an inference with a TensorFlow Lite model, we must run it through an interpreter. The TensorFlow Lite interpreter is designed to be lean and fast. The interpreter uses a static graph ordering and a custom (less-dynamic) memory allocator to ensure minimal load, initialization, and execution latency.

We’ll use the TensorFlow Lite runtime for Raspberry Pi, a simplified library for running machine learning models on mobile and embedded devices, without including all TensorFlow packages.

pip install tflite_runtime --no-depsThe wheel installed:

tflite_runtime-2.14.0-cp311-cp311-manylinux_2_34_aarch64.whl

Installing Additional Python Libraries

Install required Python libraries for use with Image Classification:

If you have another version of Numpy installed, first uninstall it.

pip3 uninstall numpyInstall version 1.23.2, which is compatible with the tflite_runtime.

pip3 install numpy==1.23.2pip3 install Pillow matplotlibCreating a working directory:

If you are working on the Raspi-Zero with the minimum OS (No Desktop), you may not have a user-pre-defined directory tree (you can check it with ls. So, let’s create one:

mkdir Documents

cd Documents/

mkdir TFLITE

cd TFLITE/

mkdir IMG_CLASS

cd IMG_CLASS

mkdir models

cd modelsOn the Raspi-5, the /Documents should be there.

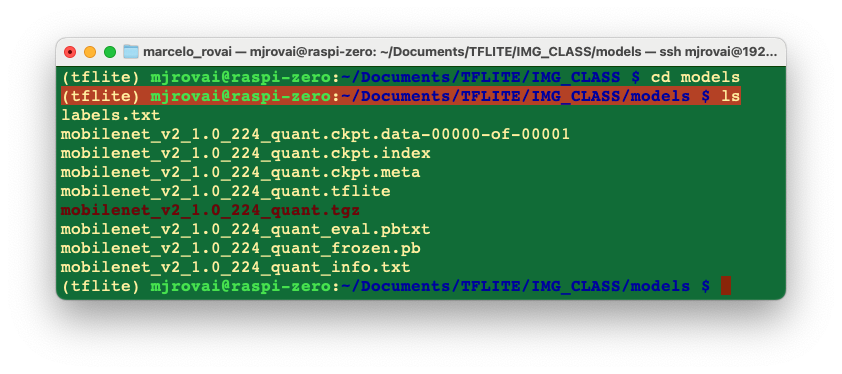

Get a pre-trained Image Classification model:

An appropriate pre-trained model is crucial for successful image classification on resource-constrained devices like the Raspberry Pi. MobileNet is designed for mobile and embedded vision applications with a good balance between accuracy and speed. Versions: MobileNetV1, MobileNetV2, MobileNetV3. Let’s download the V2:

# One long line, split with backslash \

wget https://storage.googleapis.com/download.tensorflow.org/\

models/tflite_11_05_08/mobilenet_v2_1.0_224_quant.tgz

tar xzf mobilenet_v2_1.0_224_quant.tgzGet its labels:

wget https://raw.githubusercontent.com/Mjrovai/EdgeML-with-Raspberry-Pi/refs/heads/\

main/IMG_CLASS/models/labels.txtIn the end, you should have the models in its directory:

We will only need the

mobilenet_v2_1.0_224_quant.tflitemodel and thelabels.txt. You can delete the other files.

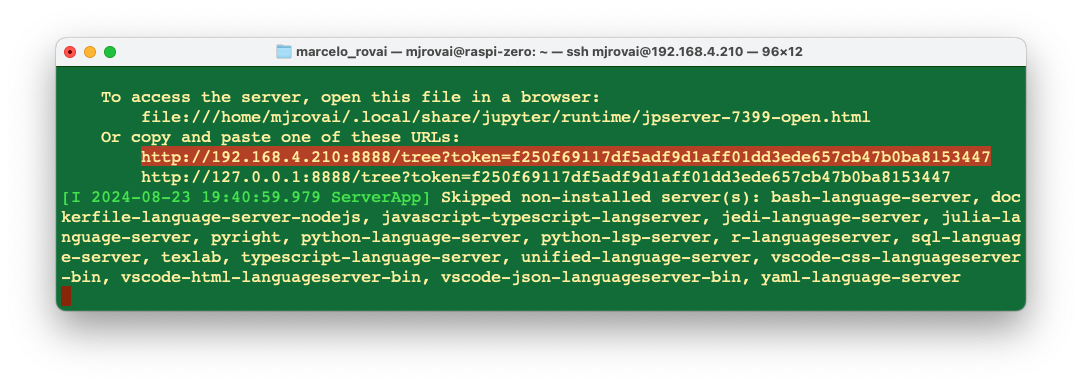

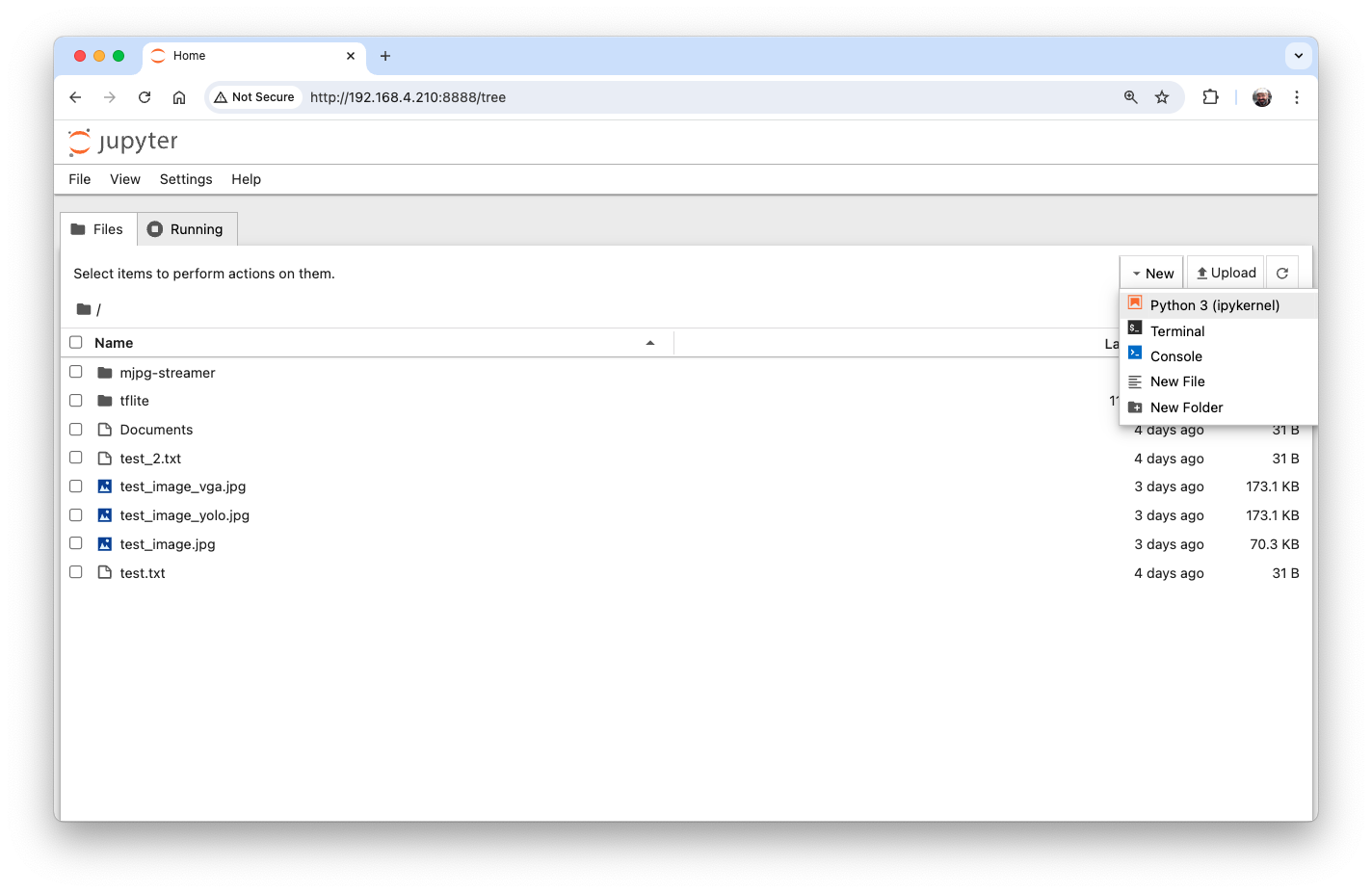

Setting up Jupyter Notebook (Optional)

If you prefer using Jupyter Notebook for development:

pip3 install jupyter

jupyter notebook --generate-configTo run Jupyter Notebook, run the command (change the IP address for yours):

jupyter notebook --ip=192.168.4.210 --no-browserOn the terminal, you can see the local URL address to open the notebook:

You can access it from another device by entering the Raspberry Pi’s IP address and the provided token in a web browser (you can copy the token from the terminal).

Define your working directory in the Raspi and create a new Python 3 notebook.

Verifying the Setup

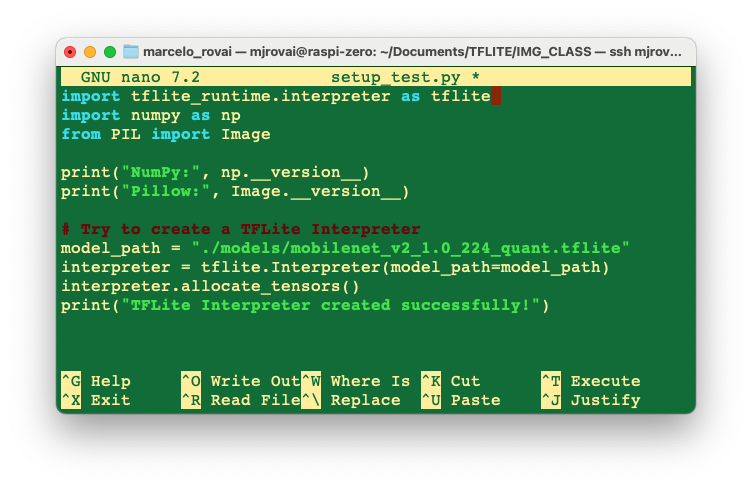

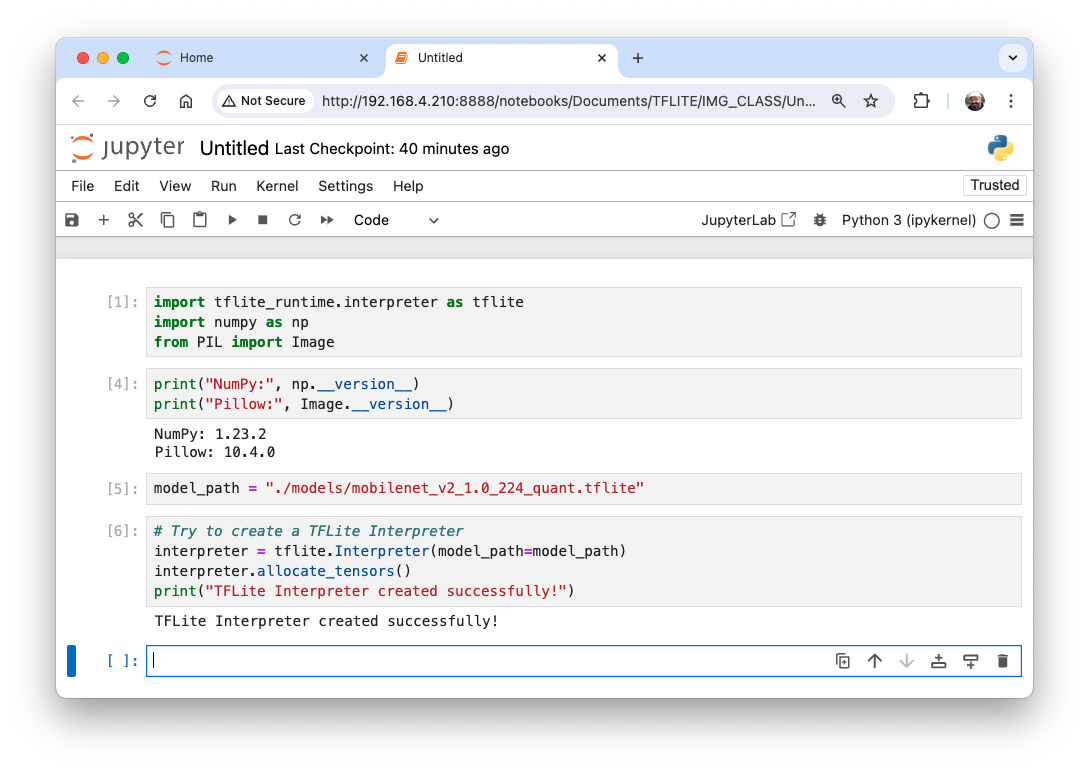

Test your setup by running a simple Python script:

import tflite_runtime.interpreter as tflite

import numpy as np

from PIL import Image

print("NumPy:", np.__version__)

print("Pillow:", Image.__version__)

# Try to create a TFLite Interpreter

model_path = "./models/mobilenet_v2_1.0_224_quant.tflite"

interpreter = tflite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()

print("TFLite Interpreter created successfully!")You can create the Python script using nano on the terminal, saving it with CTRL+0 + ENTER + CTRL+X

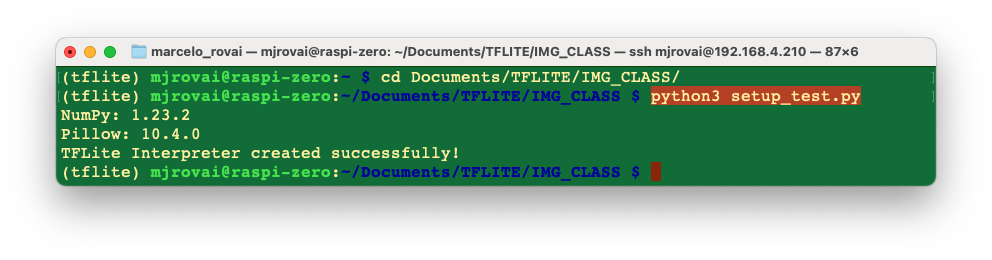

And run it with the command:

Or you can run it directly on the Notebook:

Making inferences with Mobilenet V2

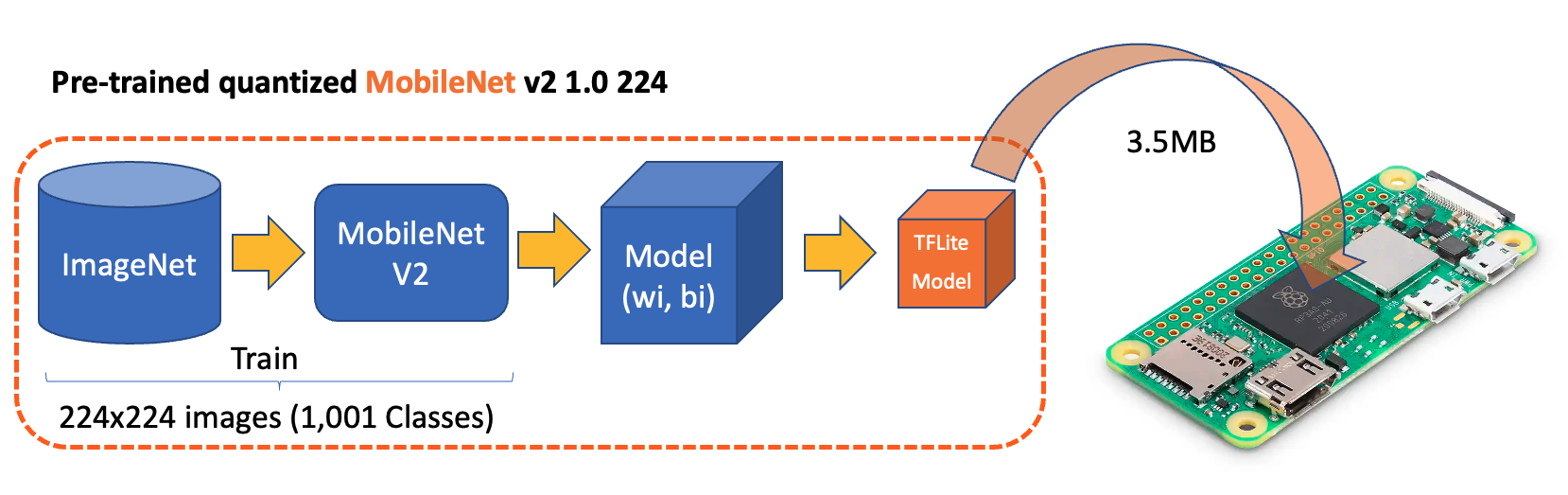

In the last section, we set up the environment, including downloading a popular pre-trained model, Mobilenet V2, trained on ImageNet’s \(224\times 224\) images (1.2 million) for 1,001 classes (1,000 object categories plus 1 background). The model was converted to a compact 3.5 MB TensorFlow Lite format, making it suitable for the limited storage and memory of a Raspberry Pi.

Let’s start a new notebook to follow all the steps to classify one image:

Import the needed libraries:

import time

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import tflite_runtime.interpreter as tfliteLoad the TFLite model and allocate tensors:

model_path = "./models/mobilenet_v2_1.0_224_quant.tflite"

interpreter = tflite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()Get input and output tensors.

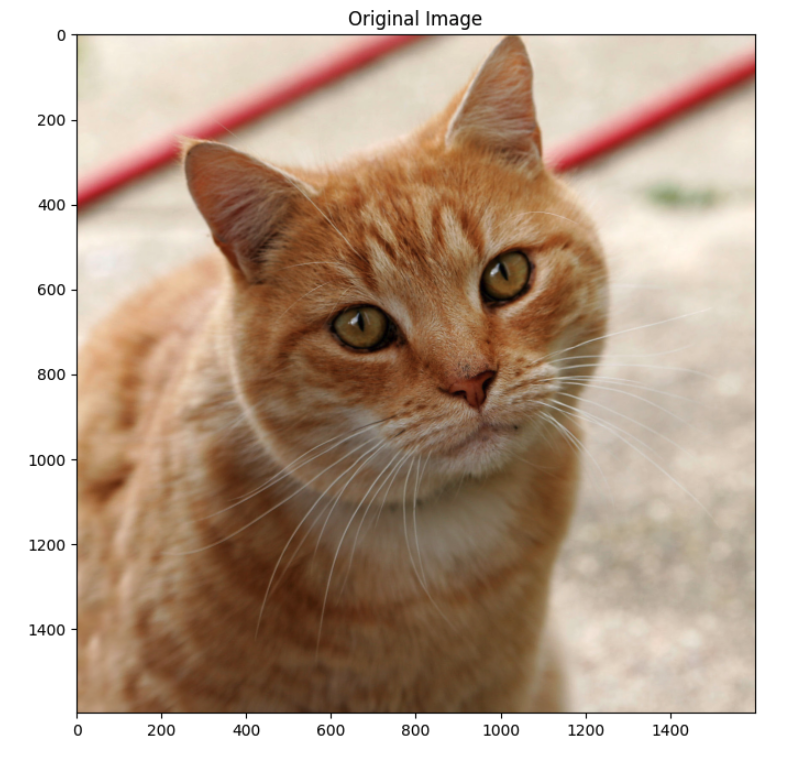

input_details = interpreter.get_input_details()

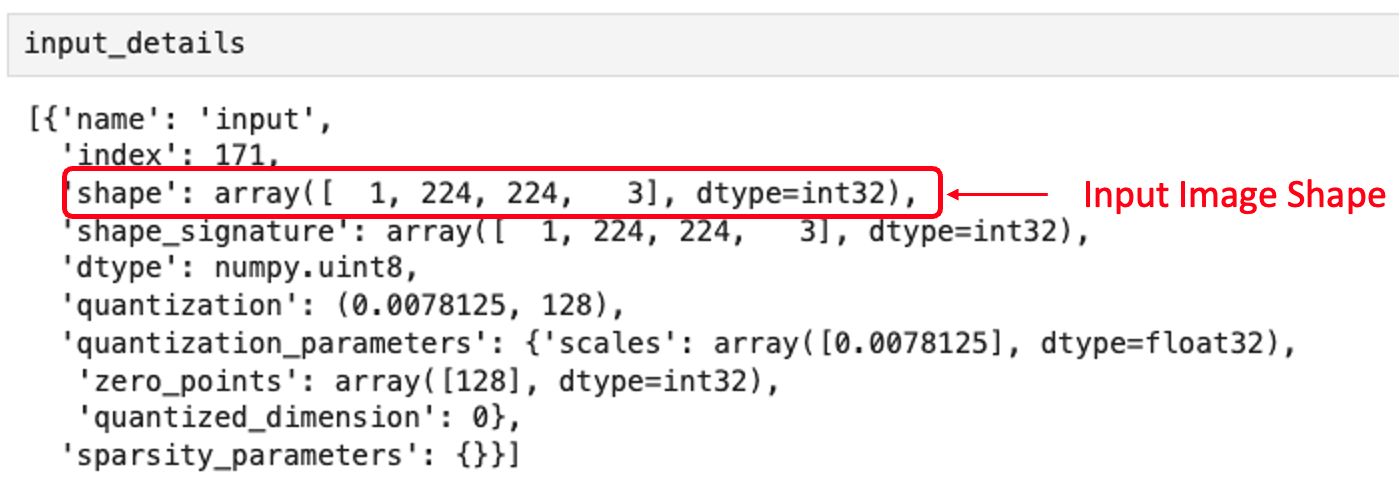

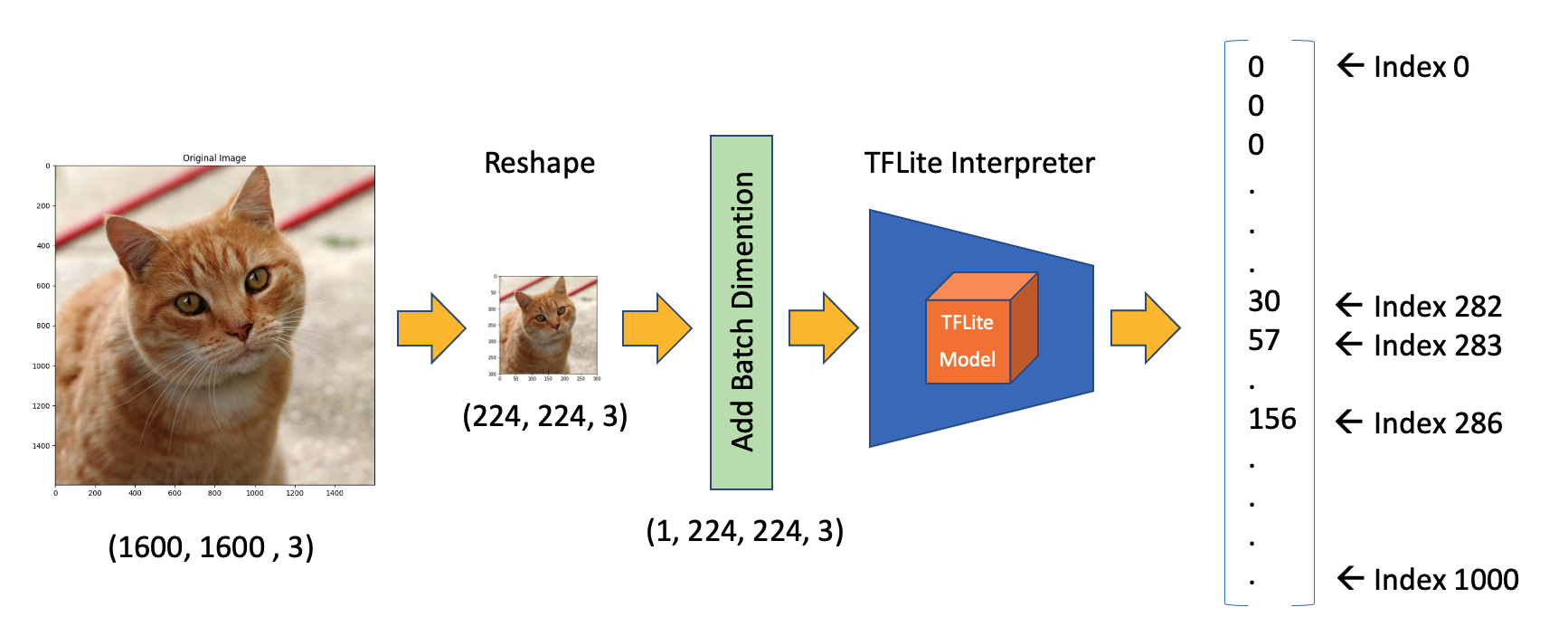

output_details = interpreter.get_output_details()Input details will give us information about how the model should be fed with an image. The shape of (1, 224, 224, 3) informs us that an image with dimensions \((224\times 224\times 3)\) should be input one by one (Batch Dimension: 1).

The output details show that the inference will result in an array of 1,001 integer values. Those values result from the image classification, where each value is the probability of that specific label being related to the image.

Let’s also inspect the dtype of input details of the model

input_dtype = input_details[0]["dtype"]

input_dtypedtype('uint8')This shows that the input image should be raw pixels (0 - 255).

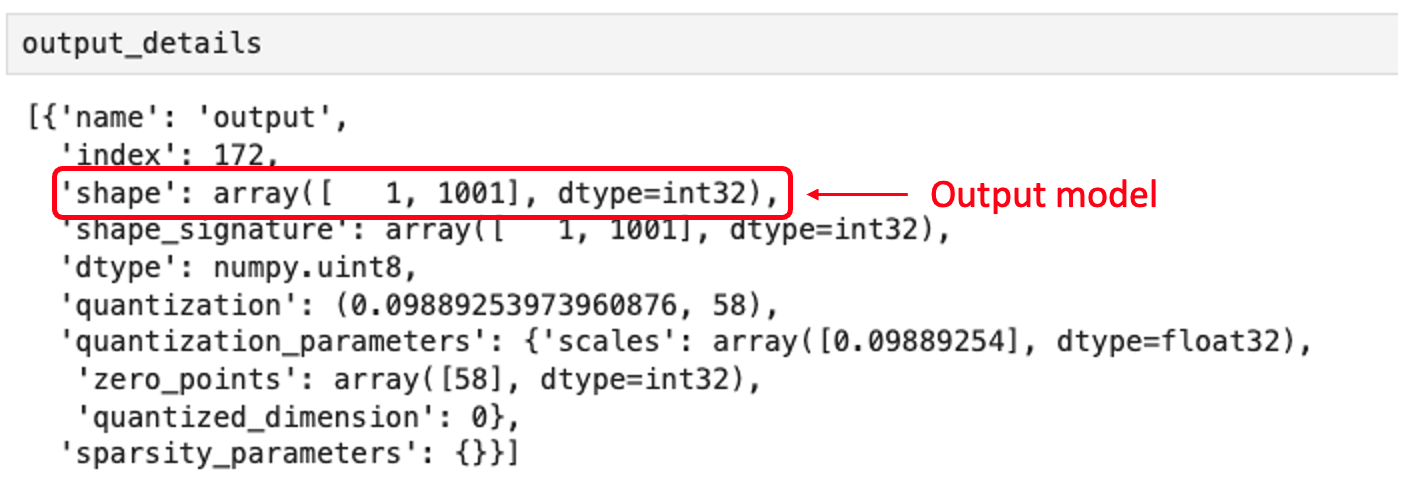

Let’s get a test image. You can transfer it from your computer or download one for testing. Let’s first create a folder under our working directory:

mkdir images

cd images

wget https://upload.wikimedia.org/wikipedia/commons/3/3a/Cat03.jpgLet’s load and display the image:

# Load he image

img_path = "./images/Cat03.jpg"

img = Image.open(img_path)

# Display the image

plt.figure(figsize=(8, 8))

plt.imshow(img)

plt.title("Original Image")

plt.show()

We can see the image size running the command:

width, height = img.sizeThat shows us that the image is an RGB image with a width of 1600 and a height of 1600 pixels. So, to use our model, we should reshape it to (224, 224, 3) and add a batch dimension of 1, as defined in input details: (1, 224, 224, 3). The inference result, as shown in output details, will be an array with a 1001 size, as shown below:

So, let’s reshape the image, add the batch dimension, and see the result:

img = img.resize(

(input_details[0]["shape"][1], input_details[0]["shape"][2])

)

input_data = np.expand_dims(img, axis=0)

input_data.shapeThe input_data shape is as expected: (1, 224, 224, 3)

Let’s confirm the dtype of the input data:

input_data.dtypedtype('uint8')The input data dtype is ‘uint8’, which is compatible with the dtype expected for the model.

Using the input_data, let’s run the interpreter and get the predictions (output):

interpreter.set_tensor(input_details[0]["index"], input_data)

interpreter.invoke()

predictions = interpreter.get_tensor(output_details[0]["index"])[0]The prediction is an array with 1001 elements. Let’s get the Top-5 indices where their elements have high values:

top_k_results = 5

top_k_indices = np.argsort(predictions)[::-1][:top_k_results]

top_k_indicesThe top_k_indices is an array with 5 elements: array([283, 286, 282])

So, 283, 286, 282, 288, and 479 are the image’s most probable classes. Having the index, we must find to what class it appoints (such as car, cat, or dog). The text file downloaded with the model has a label associated with each index from 0 to 1,000. Let’s use a function to load the .txt file as a list:

def load_labels(filename):

with open(filename, "r") as f:

return [line.strip() for line in f.readlines()]And get the list, printing the labels associated with the indexes:

labels_path = "./models/labels.txt"

labels = load_labels(labels_path)

print(labels[286])

print(labels[283])

print(labels[282])

print(labels[288])

print(labels[479])As a result, we have:

Egyptian cat

tiger cat

tabby

lynx

cartonAt least the four top indices are related to felines. The prediction content is the probability associated with each one of the labels. As we saw on output details, those values are quantized and should be dequantized and apply softmax.

scale, zero_point = output_details[0]["quantization"]

dequantized_output = (

predictions.astype(np.float32) - zero_point

) * scale

exp_output = np.exp(dequantized_output - np.max(dequantized_output))

probabilities = exp_output / np.sum(exp_output)Let’s print the top-5 probabilities:

print(probabilities[286])

print(probabilities[283])

print(probabilities[282])

print(probabilities[288])

print(probabilities[479])0.27741462

0.3732285

0.16919471

0.10319158

0.023410844For clarity, let’s create a function to relate the labels with the probabilities:

for i in range(top_k_results):

print(

"\t{:20}: {}%".format(

labels[top_k_indices[i]],

(int(probabilities[top_k_indices[i]] * 100)),

)

)tiger cat : 37%

Egyptian cat : 27%

tabby : 16%

lynx : 10%

carton : 2%Define a general Image Classification function

Let’s create a general function to give an image as input, and we get the Top-5 possible classes:

def image_classification(

img_path, model_path, labels, top_k_results=5

):

# load the image

img = Image.open(img_path)

plt.figure(figsize=(4, 4))

plt.imshow(img)

plt.axis("off")

# Load the TFLite model

interpreter = tflite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()

# Get input and output tensors

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

# Preprocess

img = img.resize(

(input_details[0]["shape"][1], input_details[0]["shape"][2])

)

input_data = np.expand_dims(img, axis=0)

# Inference on Raspi-Zero

interpreter.set_tensor(input_details[0]["index"], input_data)

interpreter.invoke()

# Obtain results and map them to the classes

predictions = interpreter.get_tensor(output_details[0]["index"])[

0

]

# Get indices of the top k results

top_k_indices = np.argsort(predictions)[::-1][:top_k_results]

# Get quantization parameters

scale, zero_point = output_details[0]["quantization"]

# Dequantize the output and apply softmax

dequantized_output = (

predictions.astype(np.float32) - zero_point

) * scale

exp_output = np.exp(

dequantized_output - np.max(dequantized_output)

)

probabilities = exp_output / np.sum(exp_output)

print("\n\t[PREDICTION] [Prob]\n")

for i in range(top_k_results):

print(

"\t{:20}: {}%".format(

labels[top_k_indices[i]],

(int(probabilities[top_k_indices[i]] * 100)),

)

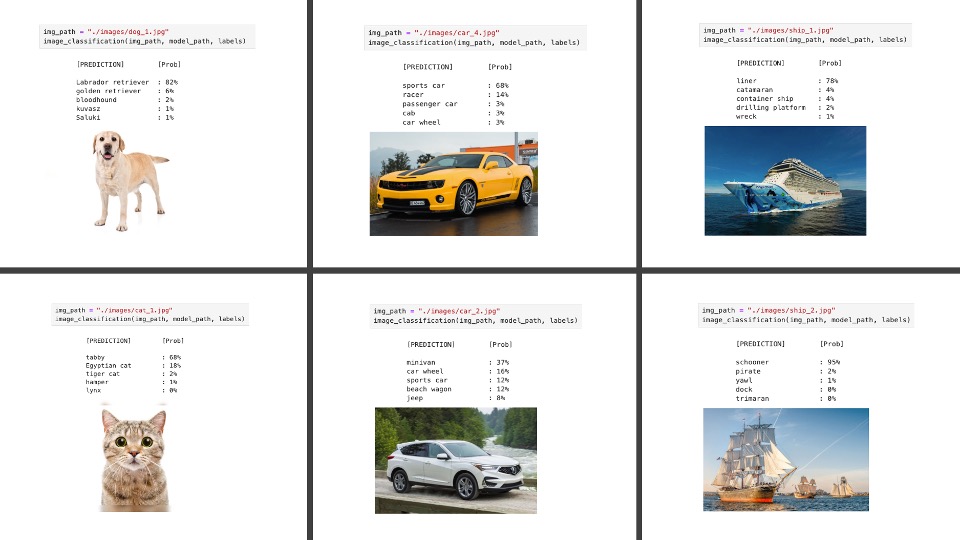

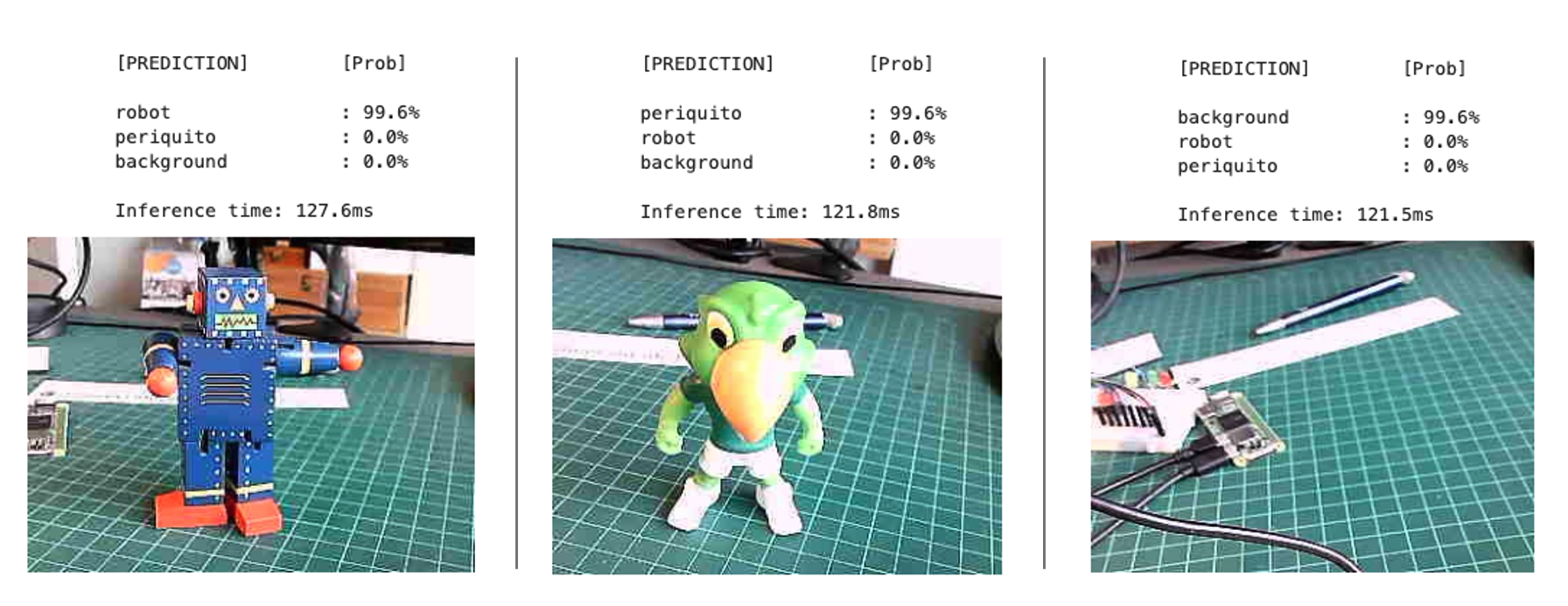

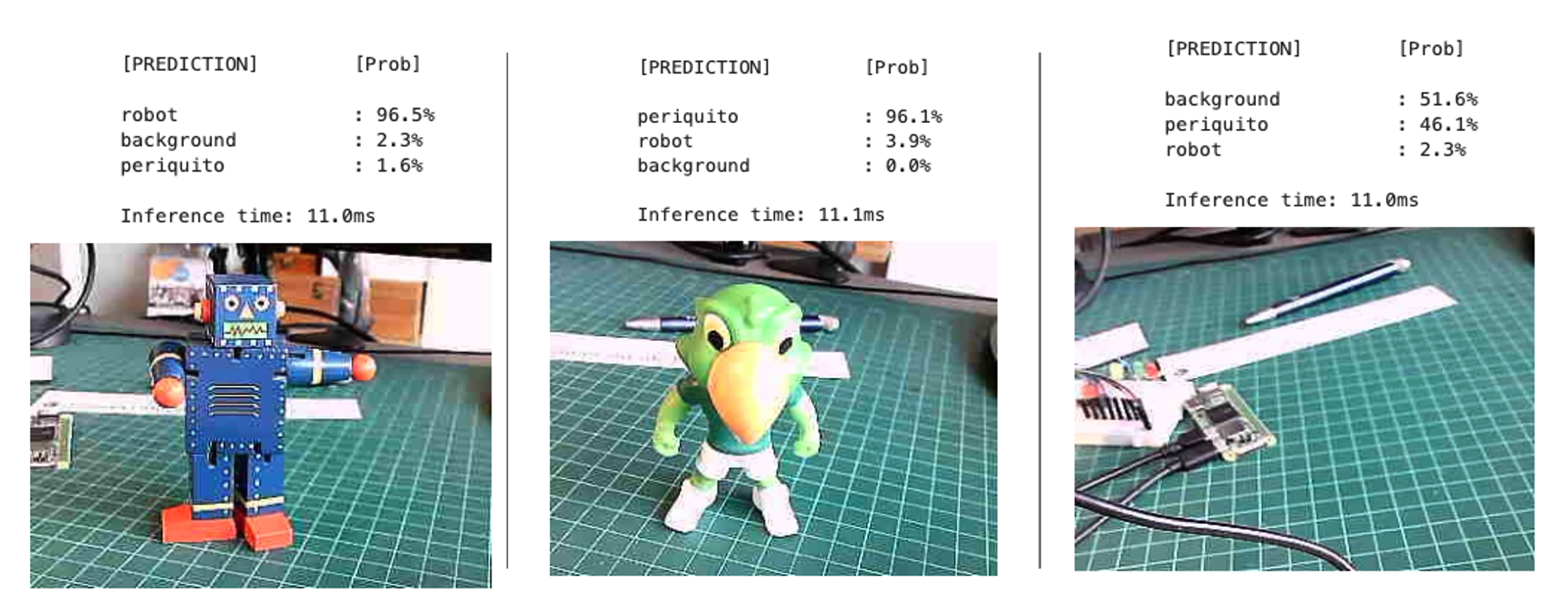

)And loading some images for testing, we have:

Testing with a model trained from scratch

Let’s get a TFLite model trained from scratch. For that, you can follow the Notebook:

CNN to classify Cifar-10 dataset

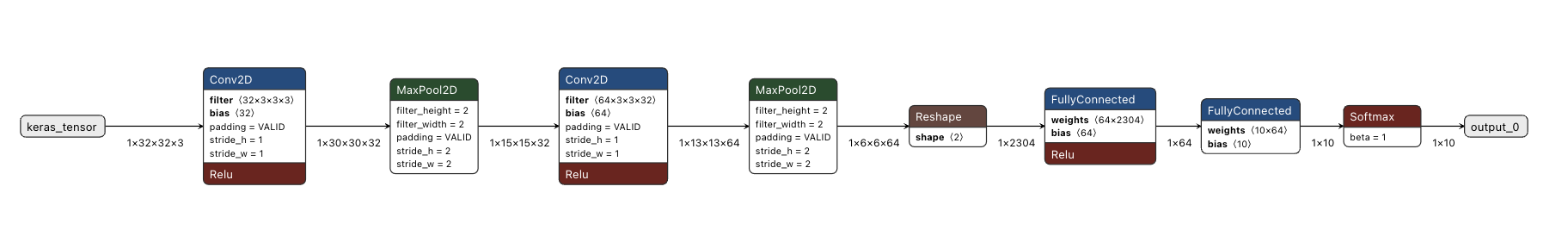

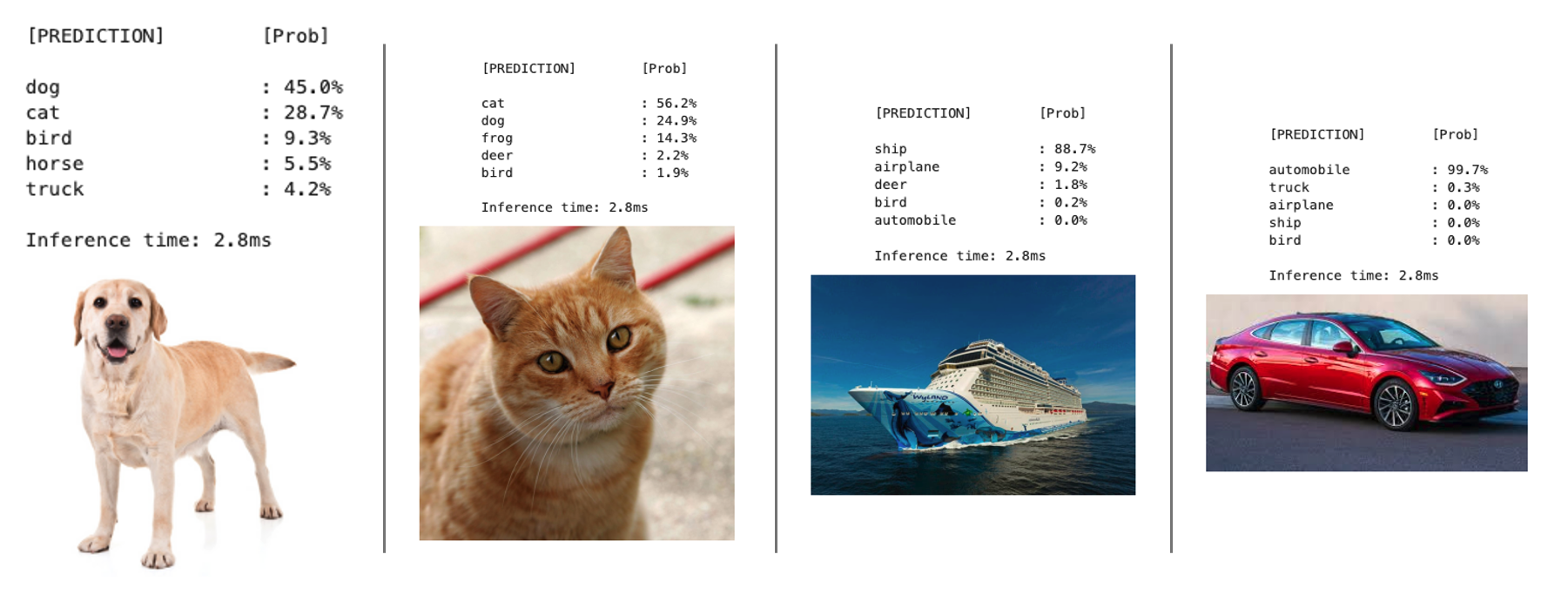

In the notebook, we trained a model using the CIFAR10 dataset, which contains 60,000 images from 10 classes of CIFAR (airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck). CIFAR has \(32\times 32\) color images (3 color channels) where the objects are not centered and can have the object with a background, such as airplanes that might have a cloudy sky behind them! In short, small but real images.

The CNN trained model (cifar10_model.keras) had a size of 2.0MB. Using the TFLite Converter, the model cifar10.tflite became with 674MB (around 1/3 of the original size).

On the notebook Cifar 10 - Image Classification on a Raspi with TFLite (which can be run over the Raspi), we can follow the same steps we did with the mobilenet_v2_1.0_224_quant.tflite. Below are examples of images using the General Function for Image Classification on a Raspi-Zero, as shown in the last section.

Installing Picamera2

Picamera2, a Python library for interacting with Raspberry Pi’s camera, is based on the libcamera camera stack, and the Raspberry Pi foundation maintains it. The Picamera2 library is supported on all Raspberry Pi models, from the Pi Zero to the RPi 5. It is already installed system-wide on the Raspi, but we should make it accessible within the virtual environment.

First, activate the virtual environment if it’s not already activated:

source ~/tflite/bin/activateNow, let’s create a .pth file in your virtual environment to add the system site-packages path:

echo "/usr/lib/python3/dist-packages" > \ $VIRTUAL_ENV/lib/python3.11/ site-packages/system_site_packages.pthNote: If your Python version differs, replace

python3.11with the appropriate version.After creating this file, try importing picamera2 in Python:

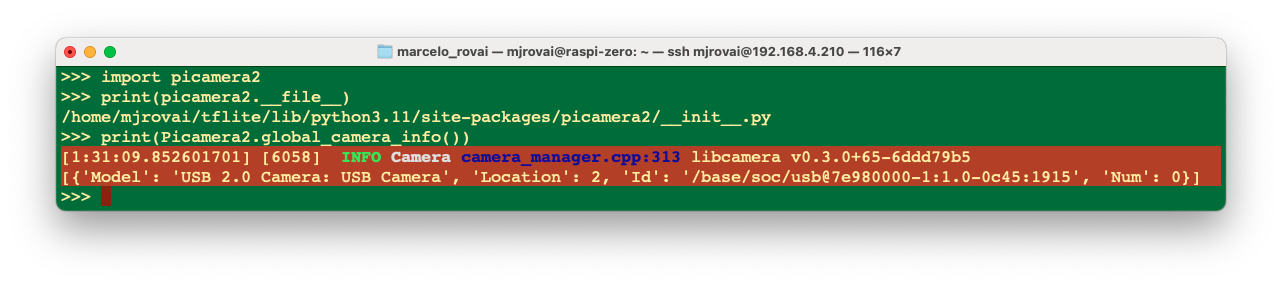

python3 >>> import picamera2 >>> print(picamera2.__file__)

The above code will show the file location of the picamera2 module itself, proving that the library can be accessed from the environment.

/home/mjrovai/tflite/lib/python3.11/site-packages/\

picamera2/__init__.pyYou can also list the available cameras in the system:

>>> print(Picamera2.global_camera_info())In my case, with a USB installed, I got:

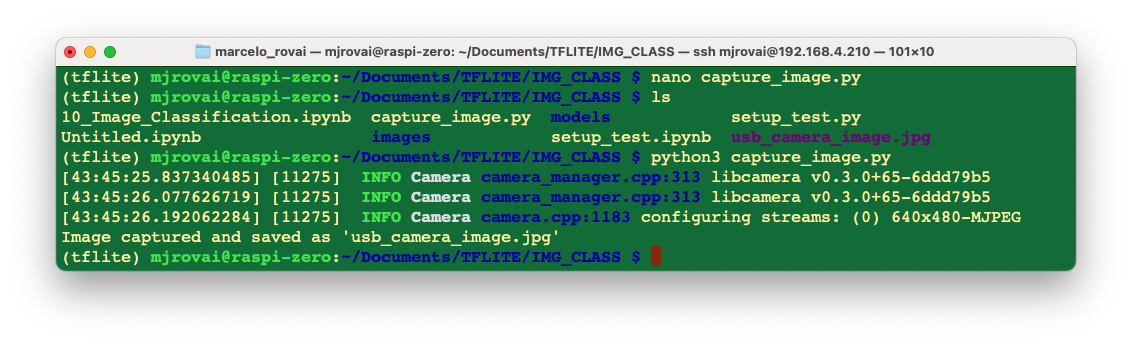

Now that we’ve confirmed picamera2 is working in the environment with an index 0, let’s try a simple Python script to capture an image from your USB camera:

from picamera2 import Picamera2

import time

# Initialize the camera

picam2 = Picamera2() # default is index 0

# Configure the camera

config = picam2.create_still_configuration(main={"size": (640, 480)})

picam2.configure(config)

# Start the camera

picam2.start()

# Wait for the camera to warm up

time.sleep(2)

# Capture an image

picam2.capture_file("usb_camera_image.jpg")

print("Image captured and saved as 'usb_camera_image.jpg'")

# Stop the camera

picam2.stop()Use the Nano text editor, the Jupyter Notebook, or any other editor. Save this as a Python script (e.g., capture_image.py) and run it. This should capture an image from your camera and save it as “usb_camera_image.jpg” in the same directory as your script.

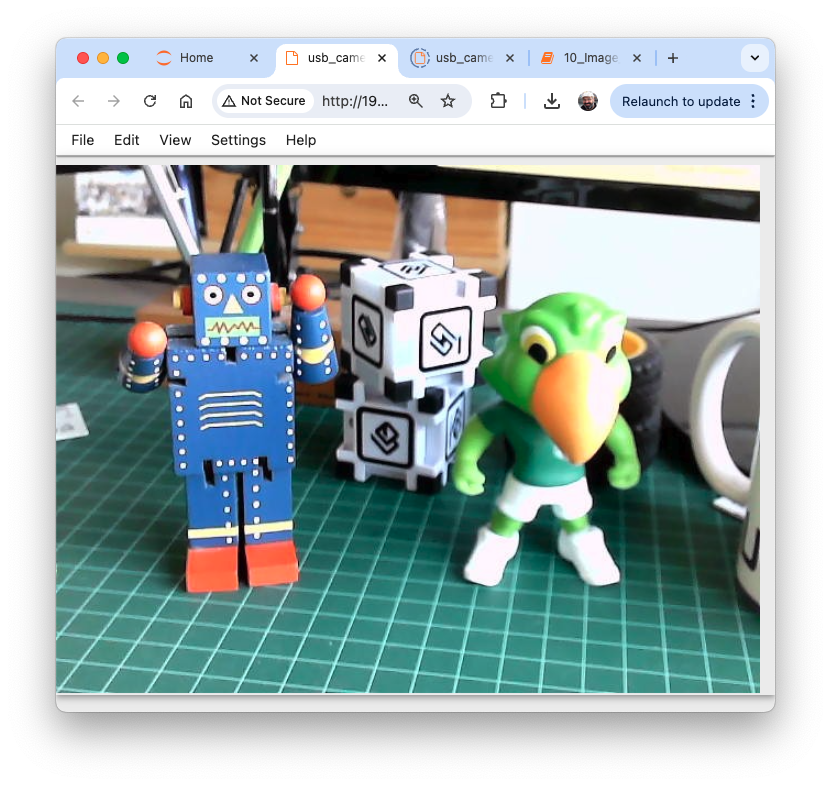

If the Jupyter is open, you can see the captured image on your computer. Otherwise, transfer the file from the Raspi to your computer.

If you are working with a Raspi-5 with a whole desktop, you can open the file directly on the device.

Image Classification Project

Now, we will develop a complete Image Classification project using the Edge Impulse Studio. As we did with the Movilinet V2, the trained and converted TFLite model will be used for inference.

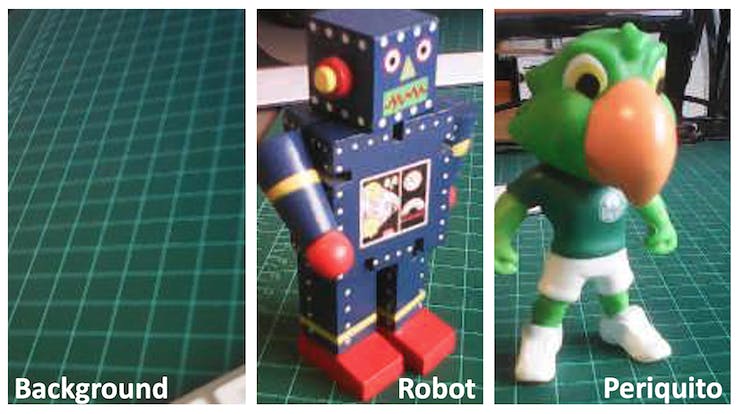

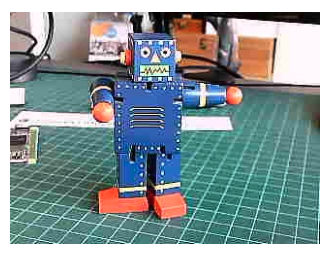

The Goal

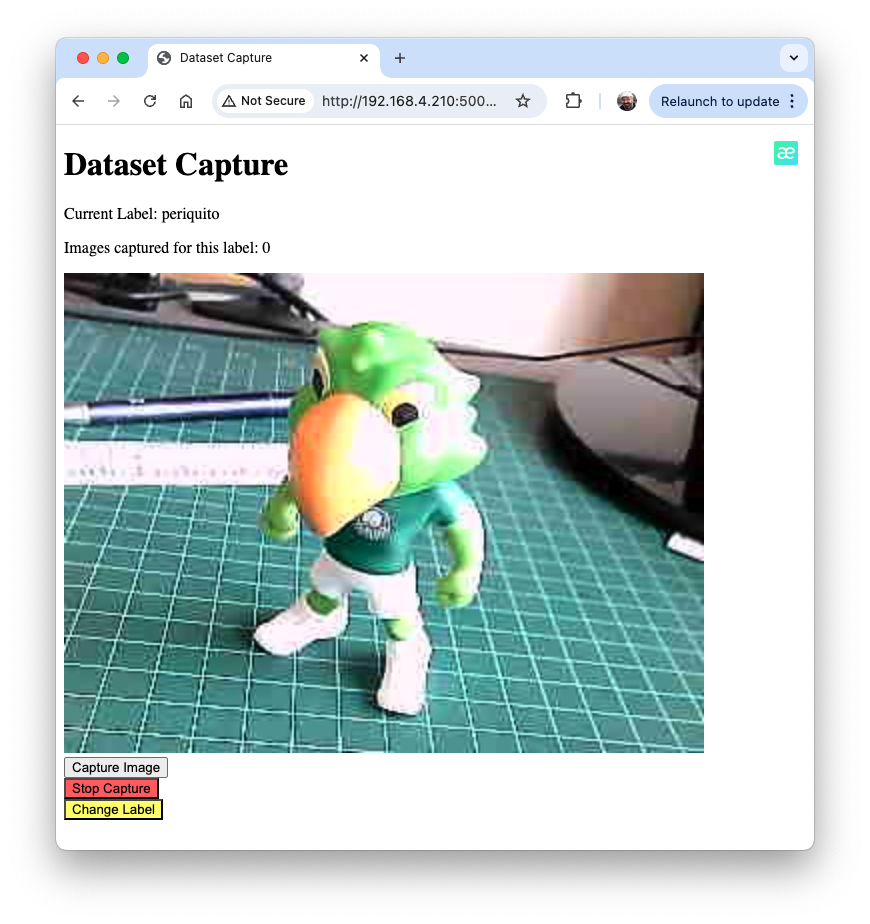

The first step in any ML project is to define its goal. In this case, it is to detect and classify two specific objects present in one image. For this project, we will use two small toys: a robot and a small Brazilian parrot (named Periquito). We will also collect images of a background where those two objects are absent.

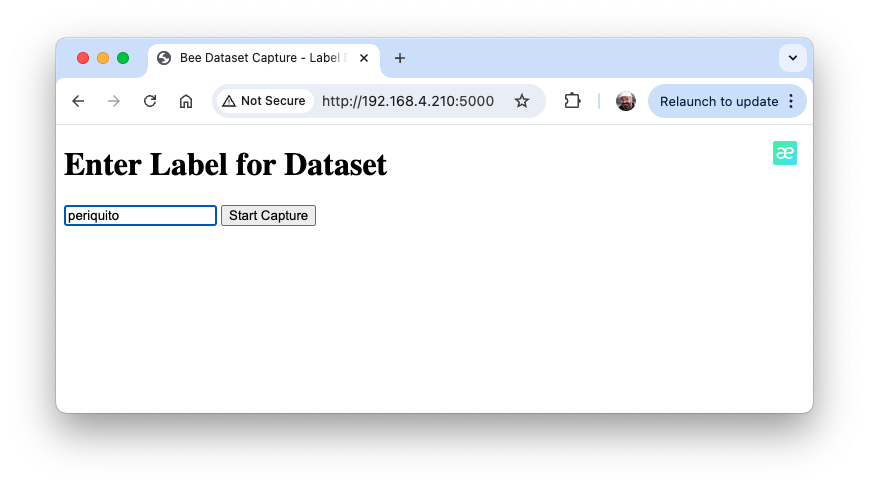

Data Collection

Once we have defined our Machine Learning project goal, the next and most crucial step is collecting the dataset. We can use a phone for the image capture, but we will use the Raspi here. Let’s set up a simple web server on our Raspberry Pi to view the QVGA (320 x 240) captured images in a browser.

First, let’s install Flask, a lightweight web framework for Python:

pip3 install flaskLet’s create a new Python script combining image capture with a web server. We’ll call it

get_img_data.py:

from flask import Flask, Response, render_template_string,

request, redirect, url_for

from picamera2 import Picamera2

import io

import threading

import time

import os

import signal

app = Flask(__name__)

# Global variables

base_dir = "dataset"

picam2 = None

frame = None

frame_lock = threading.Lock()

capture_counts = {}

current_label = None

shutdown_event = threading.Event()

def initialize_camera():

global picam2

picam2 = Picamera2()

config = picam2.create_preview_configuration(

main={"size": (320, 240)}

)

picam2.configure(config)

picam2.start()

time.sleep(2) # Wait for camera to warm up

def get_frame():

global frame

while not shutdown_event.is_set():

stream = io.BytesIO()

picam2.capture_file(stream, format='jpeg')

with frame_lock:

frame = stream.getvalue()

time.sleep(0.1) # Adjust as needed for smooth preview

def generate_frames():

while not shutdown_event.is_set():

with frame_lock:

if frame is not None:

yield (b'--frame\r\n'

b'Content-Type: image/jpeg\r\n\r\n' +

frame + b'\r\n')

time.sleep(0.1) # Adjust as needed for smooth streaming

def shutdown_server():

shutdown_event.set()

if picam2:

picam2.stop()

# Give some time for other threads to finish

time.sleep(2)

# Send SIGINT to the main process

os.kill(os.getpid(), signal.SIGINT)

@app.route('/', methods=['GET', 'POST'])

def index():

global current_label

if request.method == 'POST':

current_label = request.form['label']

if current_label not in capture_counts:

capture_counts[current_label] = 0

os.makedirs(os.path.join(base_dir, current_label),

exist_ok=True)

return redirect(url_for('capture_page'))

return render_template_string('''

<!DOCTYPE html>

<html>

<head>

<title>Dataset Capture - Label Entry</title>

</head>

<body>

<h1>Enter Label for Dataset</h1>

<form method="post">

<input type="text" name="label" required>

<input type="submit" value="Start Capture">

</form>

</body>

</html>

''')

@app.route('/capture')

def capture_page():

return render_template_string('''

<!DOCTYPE html>

<html>

<head>

<title>Dataset Capture</title>

<script>

var shutdownInitiated = false;

function checkShutdown() {

if (!shutdownInitiated) {

fetch('/check_shutdown')

.then(response => response.json())

.then(data => {

if (data.shutdown) {

shutdownInitiated = true;

document.getElementById(

'video-feed').src = '';

document.getElementById(

'shutdown-message')

.style.display = 'block';

}

});

}

}

setInterval(checkShutdown, 1000); // Check

every second

</script>

</head>

<body>

<h1>Dataset Capture</h1>

<p>Current Label: {{ label }}</p>

<p>Images captured for this label: {{ capture_count

}}</p>

<img id="video-feed" src="{{ url_for('video_feed')

}}" width="640"

height="480" />

<div id="shutdown-message" style="display: none;

color: red;">

Capture process has been stopped.

You can close this window.

</div>

<form action="/capture_image" method="post">

<input type="submit" value="Capture Image">

</form>

<form action="/stop" method="post">

<input type="submit" value="Stop Capture"

style="background-color: #ff6666;">

</form>

<form action="/" method="get">

<input type="submit" value="Change Label"

style="background-color: #ffff66;">

</form>

</body>

</html>

''', label=current_label, capture_count=capture_counts.get(

current_label, 0))

@app.route('/video_feed')

def video_feed():

return Response(generate_frames(),

mimetype='multipart/x-mixed-replace;

boundary=frame')

@app.route('/capture_image', methods=['POST'])

def capture_image():

global capture_counts

if current_label and not shutdown_event.is_set():

capture_counts[current_label] += 1

timestamp = time.strftime("%Y%m%d-%H%M%S")

filename = f"image_{timestamp}.jpg"

full_path = os.path.join(base_dir, current_label,

filename)

picam2.capture_file(full_path)

return redirect(url_for('capture_page'))

@app.route('/stop', methods=['POST'])

def stop():

summary = render_template_string('''

<!DOCTYPE html>

<html>

<head>

<title>Dataset Capture - Stopped</title>

</head>

<body>

<h1>Dataset Capture Stopped</h1>

<p>The capture process has been stopped.

You can close this window.</p>

<p>Summary of captures:</p>

<ul>

{% for label, count in capture_counts.items() %}

<li>{{ label }}: {{ count }} images</li>

{% endfor %}

</ul>

</body>

</html>

''', capture_counts=capture_counts)

# Start a new thread to shutdown the server

threading.Thread(target=shutdown_server).start()

return summary

@app.route('/check_shutdown')

def check_shutdown():

return {'shutdown': shutdown_event.is_set()}

if __name__ == '__main__':

initialize_camera()

threading.Thread(target=get_frame, daemon=True).start()

app.run(host='0.0.0.0', port=5000, threaded=True)- Run this script:

python3 get_img_data.pyAccess the web interface:

- On the Raspberry Pi itself (if you have a GUI): Open a web browser and go to

http://localhost:5000 - From another device on the same network: Open a web browser and go to

http://<raspberry_pi_ip>:5000(Replace<raspberry_pi_ip>with your Raspberry Pi’s IP address). For example:http://192.168.4.210:5000/

- On the Raspberry Pi itself (if you have a GUI): Open a web browser and go to

This Python script creates a web-based interface for capturing and organizing image datasets using a Raspberry Pi and its camera. It’s handy for machine learning projects that require labeled image data.

Key Features:

- Web Interface: Accessible from any device on the same network as the Raspberry Pi.

- Live Camera Preview: This shows a real-time feed from the camera.

- Labeling System: Allows users to input labels for different categories of images.

- Organized Storage: Automatically saves images in label-specific subdirectories.

- Per-Label Counters: Keeps track of how many images are captured for each label.

- Summary Statistics: Provides a summary of captured images when stopping the capture process.

Main Components:

- Flask Web Application: Handles routing and serves the web interface.

- Picamera2 Integration: Controls the Raspberry Pi camera.

- Threaded Frame Capture: Ensures smooth live preview.

- File Management: Organizes captured images into labeled directories.

Key Functions:

initialize_camera(): Sets up the Picamera2 instance.get_frame(): Continuously captures frames for the live preview.generate_frames(): Yields frames for the live video feed.shutdown_server(): Sets the shutdown event, stops the camera, and shuts down the Flask serverindex(): Handles the label input page.capture_page(): Displays the main capture interface.video_feed(): Shows a live preview to position the cameracapture_image(): Saves an image with the current label.stop(): Stops the capture process and displays a summary.

Usage Flow:

- Start the script on your Raspberry Pi.

- Access the web interface from a browser.

- Enter a label for the images you want to capture and press

Start Capture.

- Use the live preview to position the camera.

- Click

Capture Imageto save images under the current label.

- Change labels as needed for different categories, selecting

Change Label. - Click

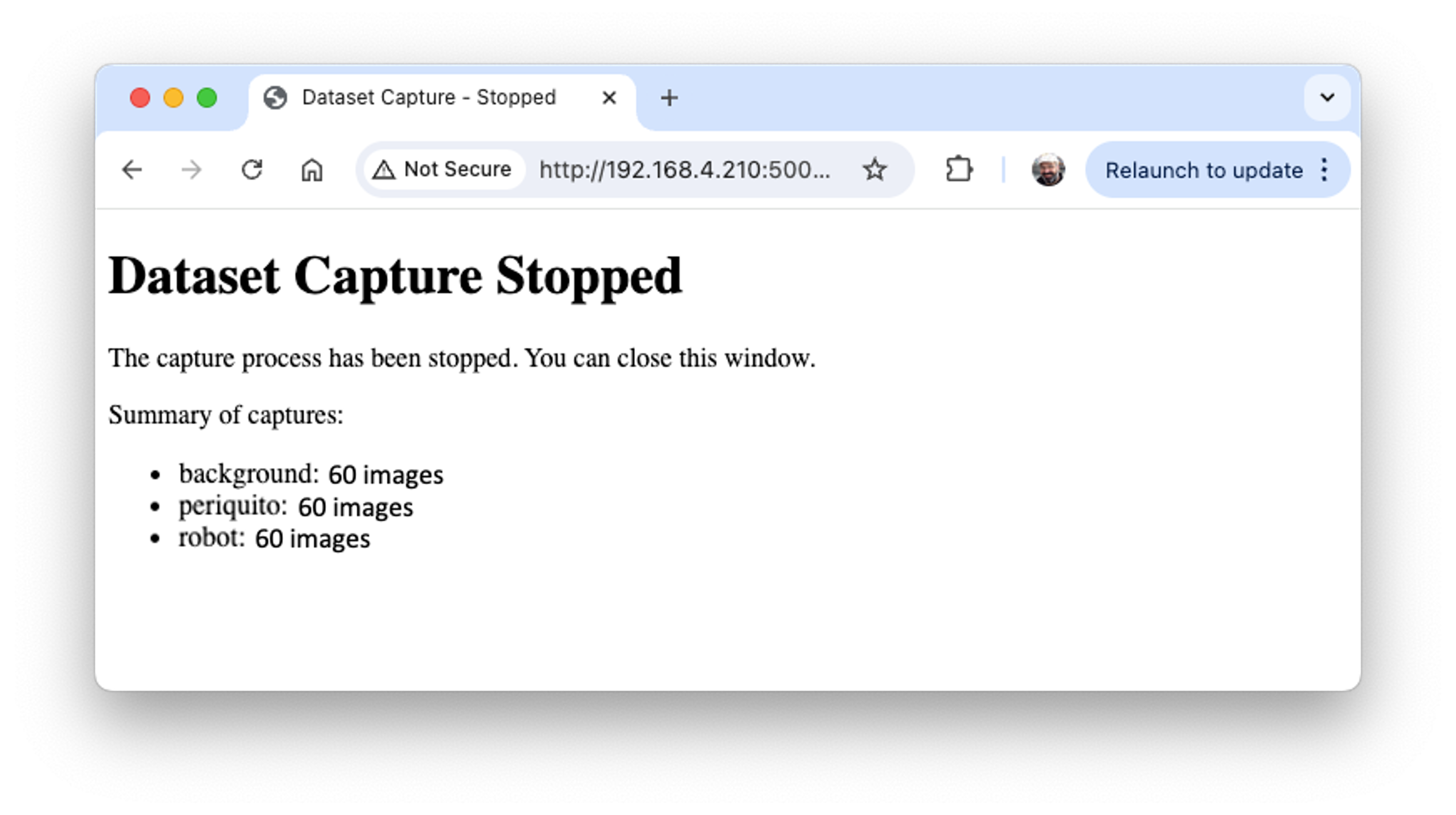

Stop Capturewhen finished to see a summary.

Technical Notes:

- The script uses threading to handle concurrent frame capture and web serving.

- Images are saved with timestamps in their filenames for uniqueness.

- The web interface is responsive and can be accessed from mobile devices.

Customization Possibilities:

- Adjust image resolution in the

initialize_camera()function. Here we used QVGA \((320\times 240)\). - Modify the HTML templates for a different look and feel.

- Add additional image processing or analysis steps in the

capture_image()function.

Number of samples on Dataset:

Get around 60 images from each category (periquito, robot and background). Try to capture different angles, backgrounds, and light conditions. On the Raspi, we will end with a folder named dataset, witch contains 3 sub-folders periquito, robot, and background. one for each class of images.

You can use Filezilla to transfer the created dataset to your main computer.

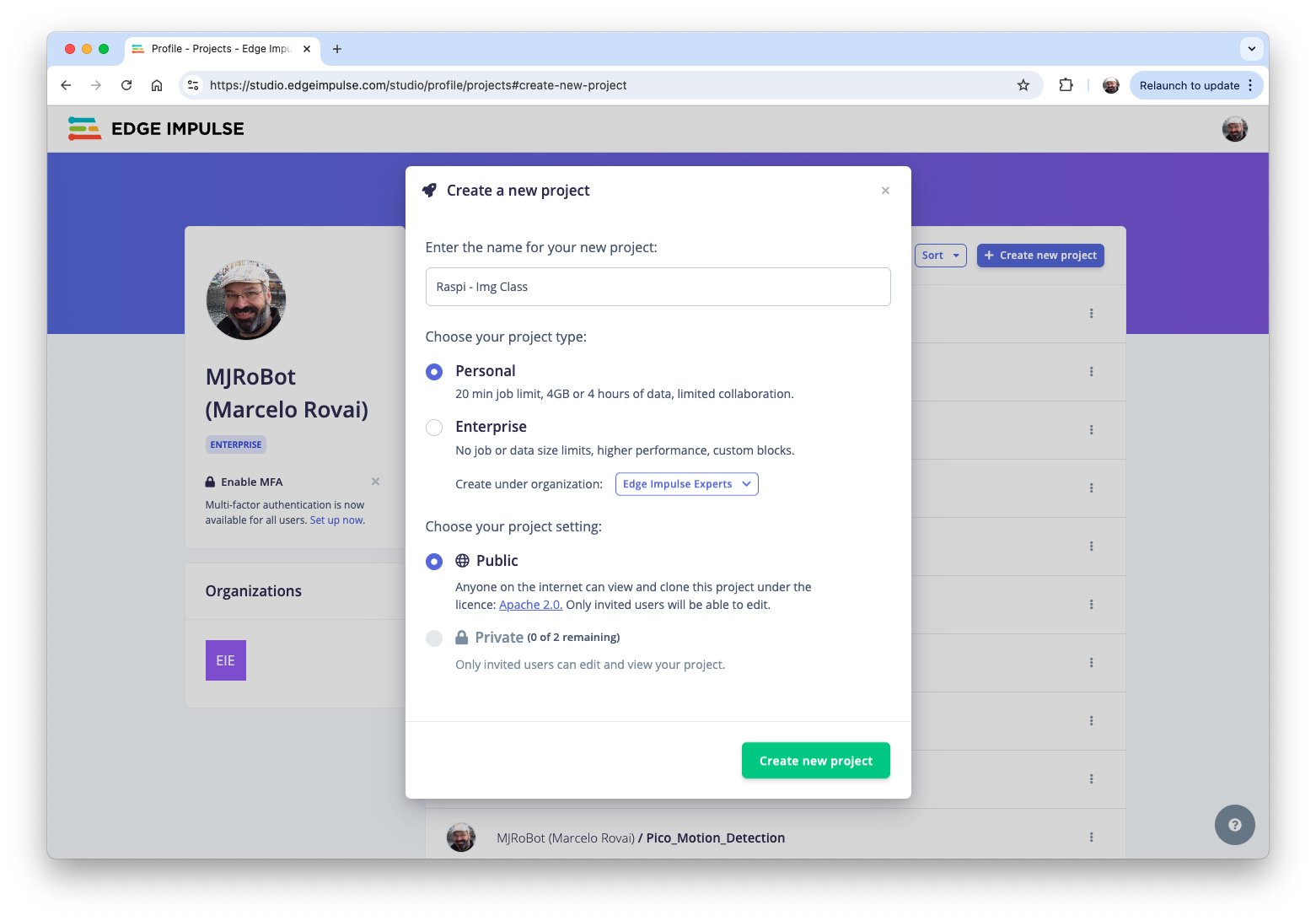

Training the model with Edge Impulse Studio

We will use the Edge Impulse Studio to train our model. Go to the Edge Impulse Page, enter your account credentials, and create a new project:

Here, you can clone a similar project: Raspi - Img Class.

Dataset

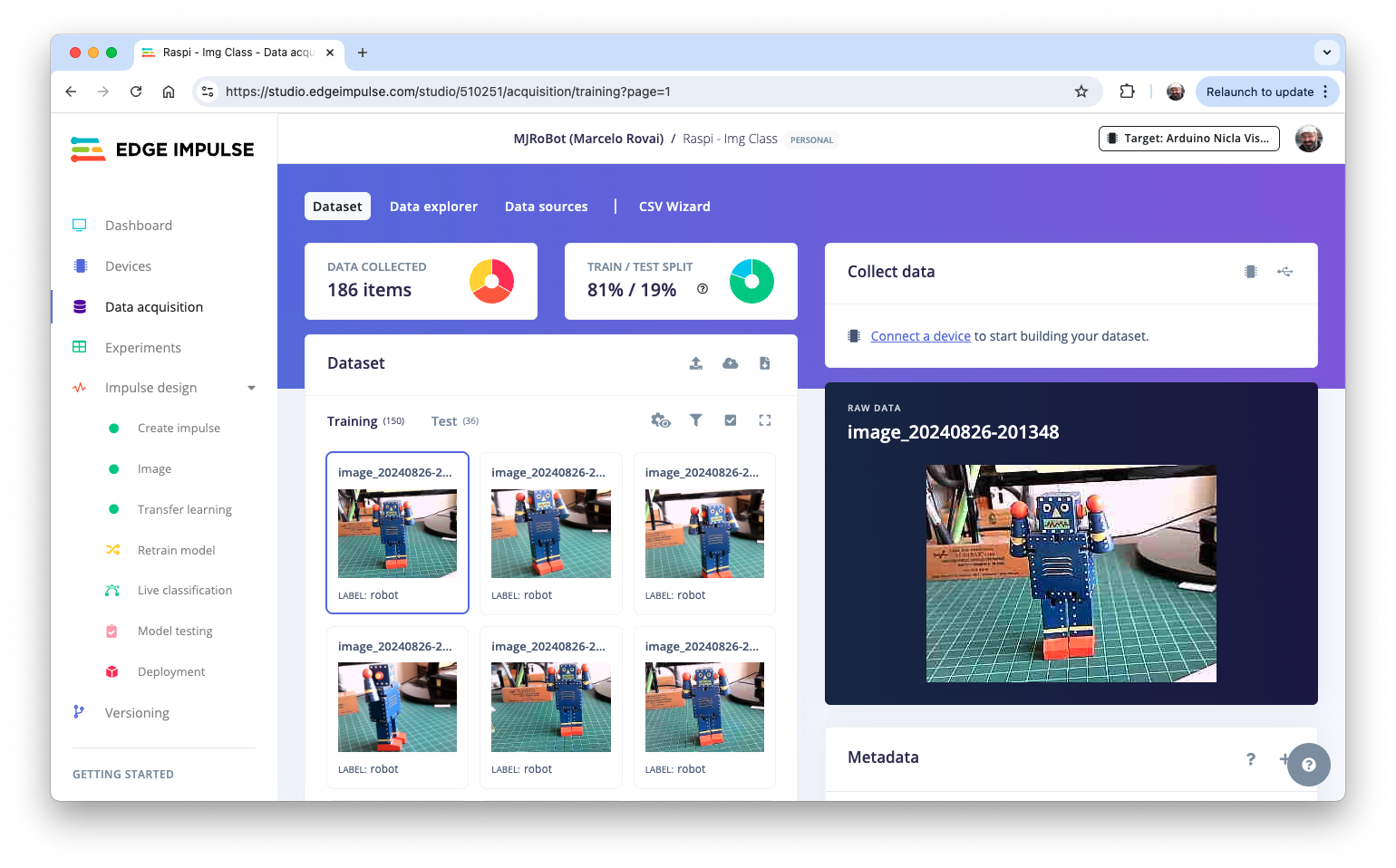

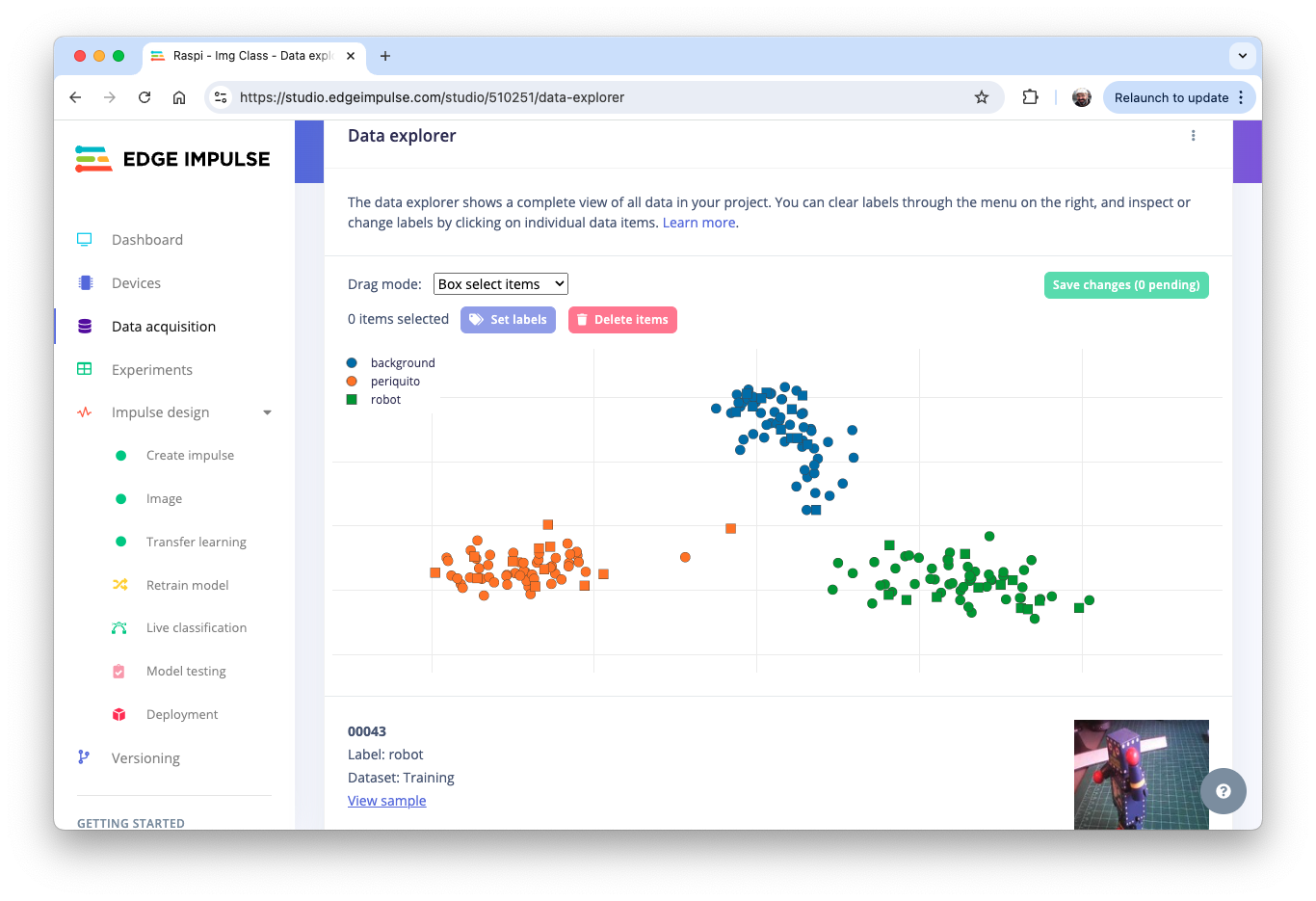

We will walk through four main steps using the EI Studio (or Studio). These steps are crucial in preparing our model for use on the Raspi: Dataset, Impulse, Tests, and Deploy (on the Edge Device, in this case, the Raspi).

Regarding the Dataset, it is essential to point out that our Original Dataset, captured with the Raspi, will be split into Training, Validation, and Test. The Test Set will be separated from the beginning and reserved for use only in the Test phase after training. The Validation Set will be used during training.

On Studio, follow the steps to upload the captured data:

- Go to the

Data acquisitiontab, and in theUPLOAD DATAsection, upload the files from your computer in the chosen categories. - Leave to the Studio the splitting of the original dataset into train and test and choose the label about

- Repeat the procedure for all three classes. At the end, you should see your “raw data” in the Studio:

The Studio allows you to explore your data, showing a complete view of all the data in your project. You can clear, inspect, or change labels by clicking on individual data items. In our case, a straightforward project, the data seems OK.

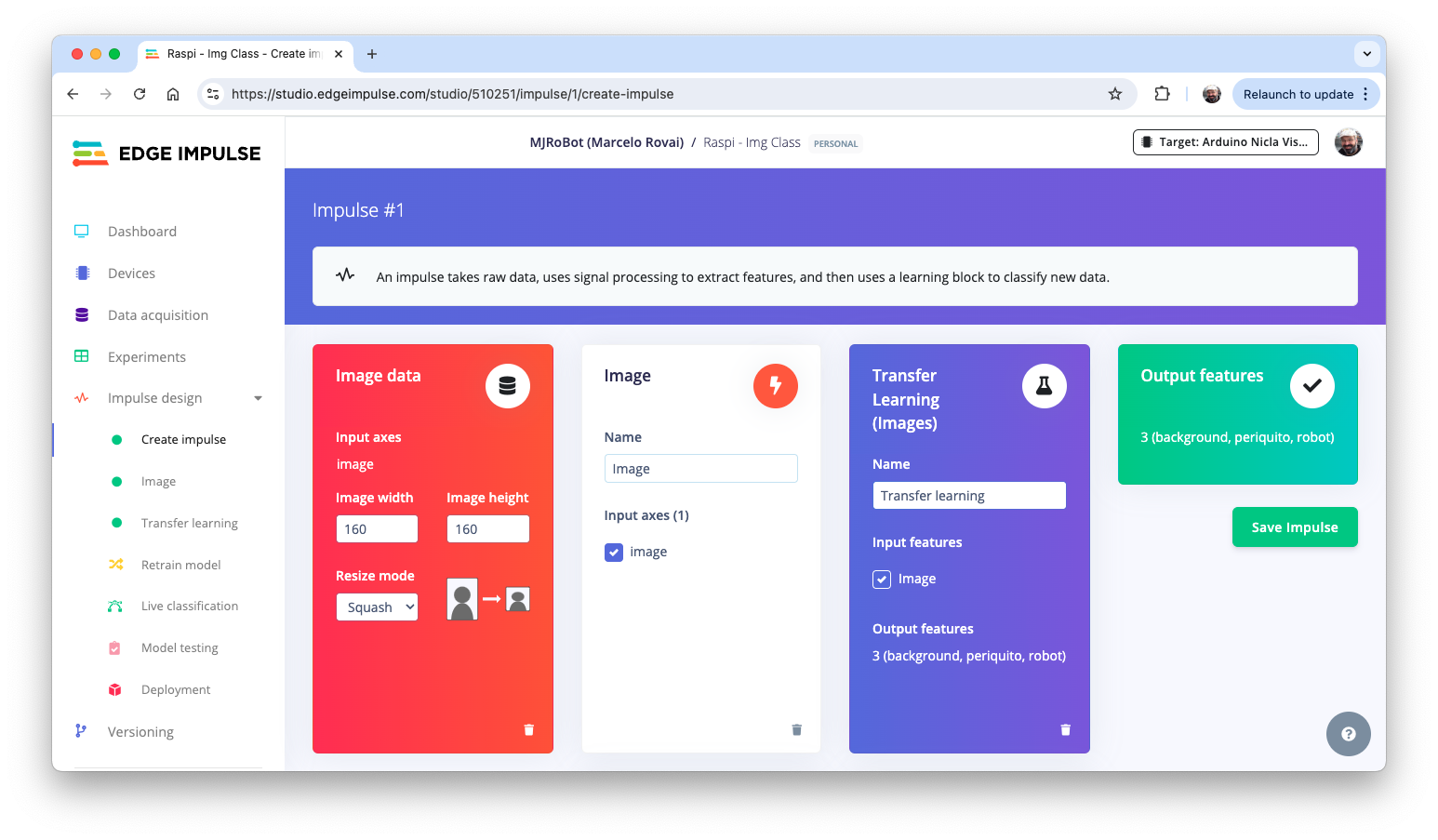

The Impulse Design

In this phase, we should define how to:

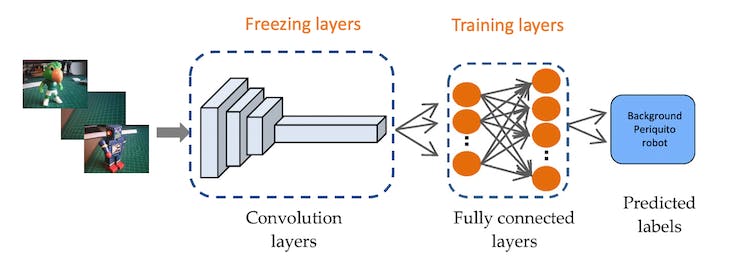

Pre-process our data, which consists of resizing the individual images and determining the

color depthto use (be it RGB or Grayscale) andSpecify a Model. In this case, it will be the

Transfer Learning (Images)to fine-tune a pre-trained MobileNet V2 image classification model on our data. This method performs well even with relatively small image datasets (around 180 images in our case).

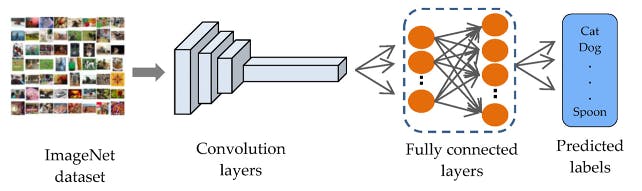

Transfer Learning with MobileNet offers a streamlined approach to model training, which is especially beneficial for resource-constrained environments and projects with limited labeled data. MobileNet, known for its lightweight architecture, is a pre-trained model that has already learned valuable features from a large dataset (ImageNet).

By leveraging these learned features, we can train a new model for your specific task with fewer data and computational resources and achieve competitive accuracy.

This approach significantly reduces training time and computational cost, making it ideal for quick prototyping and deployment on embedded devices where efficiency is paramount.

Go to the Impulse Design Tab and create the impulse, defining an image size of \(160\times 160\) and squashing them (squared form, without cropping). Select Image and Transfer Learning blocks. Save the Impulse.

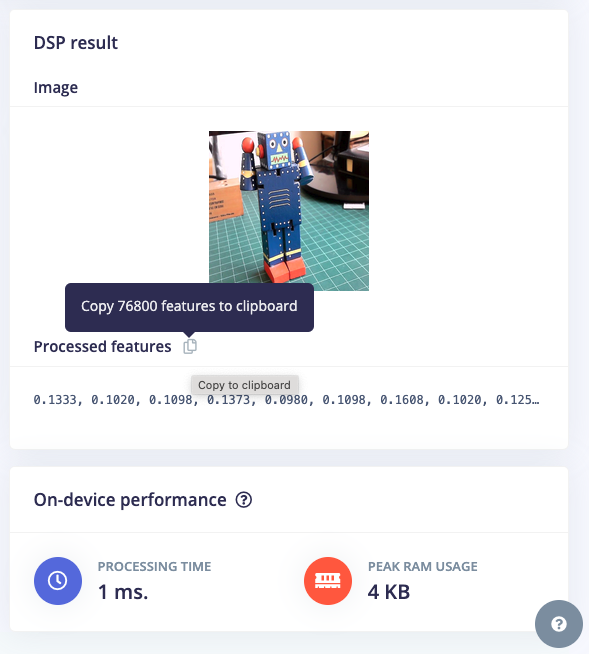

Image Pre-Processing

All the input QVGA/RGB565 images will be converted to 76,800 features \((160\times 160\times 3)\).

Press Save parameters and select Generate features in the next tab.

Model Design

MobileNet is a family of efficient convolutional neural networks designed for mobile and embedded vision applications. The key features of MobileNet are:

- Lightweight: Optimized for mobile devices and embedded systems with limited computational resources.

- Speed: Fast inference times, suitable for real-time applications.

- Accuracy: Maintains good accuracy despite its compact size.

MobileNetV2, introduced in 2018, improves the original MobileNet architecture. Key features include:

- Inverted Residuals: Inverted residual structures are used where shortcut connections are made between thin bottleneck layers.

- Linear Bottlenecks: Removes non-linearities in the narrow layers to prevent the destruction of information.

- Depth-wise Separable Convolutions: Continues to use this efficient operation from MobileNetV1.

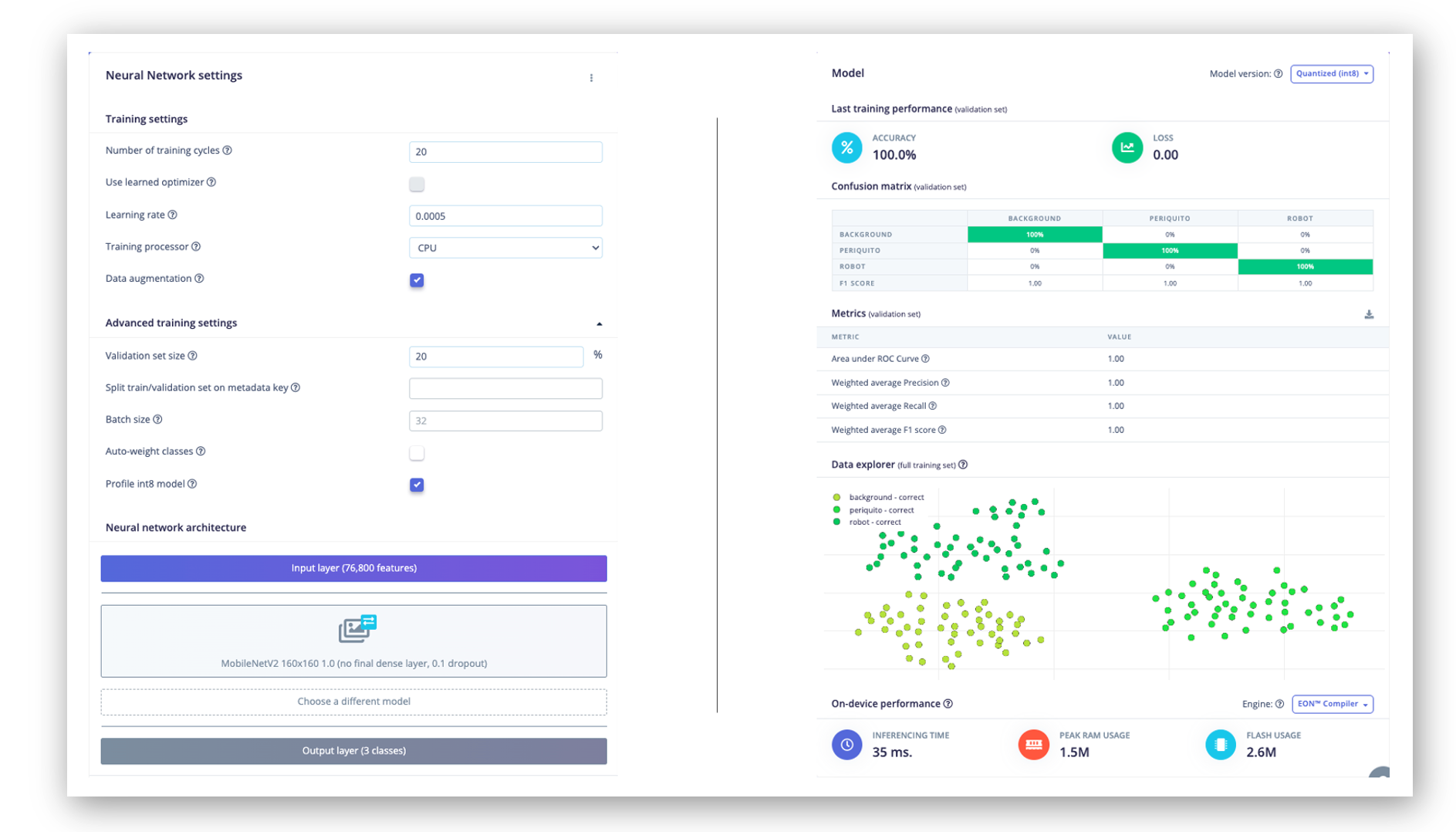

In our project, we will do a Transfer Learning with the MobileNetV2 160x160 1.0, which means that the images used for training (and future inference) should have an input Size of \(160\times 160\) pixels and a Width Multiplier of 1.0 (full width, not reduced). This configuration balances between model size, speed, and accuracy.

Model Training

Another valuable deep learning technique is Data Augmentation. Data augmentation improves the accuracy of machine learning models by creating additional artificial data. A data augmentation system makes small, random changes to the training data during the training process (such as flipping, cropping, or rotating the images).

Looking under the hood, here you can see how Edge Impulse implements a data Augmentation policy on your data:

# Implements the data augmentation policy

def augment_image(image, label):

# Flips the image randomly

image = tf.image.random_flip_left_right(image)

# Increase the image size, then randomly crop it down to

# the original dimensions

resize_factor = random.uniform(1, 1.2)

new_height = math.floor(resize_factor * INPUT_SHAPE[0])

new_width = math.floor(resize_factor * INPUT_SHAPE[1])

image = tf.image.resize_with_crop_or_pad(

image, new_height, new_width

)

image = tf.image.random_crop(image, size=INPUT_SHAPE)

# Vary the brightness of the image

image = tf.image.random_brightness(image, max_delta=0.2)

return image, labelExposure to these variations during training can help prevent your model from taking shortcuts by “memorizing” superficial clues in your training data, meaning it may better reflect the deep underlying patterns in your dataset.

The final dense layer of our model will have 0 neurons with a 10% dropout for overfitting prevention. Here is the Training result:

The result is excellent, with a reasonable 35 ms of latency (for a Raspi-4), which should result in around 30 fps (frames per second) during inference. A Raspi-Zero should be slower, and the Raspi-5, faster.

Trading off: Accuracy versus speed

If faster inference is needed, we should train the model using smaller alphas (0.35, 0.5, and 0.75) or even reduce the image input size, trading with accuracy. However, reducing the input image size and decreasing the alpha (width multiplier) can speed up inference for MobileNet V2, but they have different trade-offs. Let’s compare:

- Reducing Image Input Size:

Pros:

- Significantly reduces the computational cost across all layers.

- Decreases memory usage.

- It often provides a substantial speed boost.

Cons:

- It may reduce the model’s ability to detect small features or fine details.

- It can significantly impact accuracy, especially for tasks requiring fine-grained recognition.

- Reducing Alpha (Width Multiplier):

Pros:

- Reduces the number of parameters and computations in the model.

- Maintains the original input resolution, potentially preserving more detail.

- It can provide a good balance between speed and accuracy.

Cons:

- It may not speed up inference as dramatically as reducing input size.

- It can reduce the model’s capacity to learn complex features.

Comparison:

- Speed Impact:

- Reducing input size often provides a more substantial speed boost because it reduces computations quadratically (halving both width and height reduces computations by about 75%).

- Reducing alpha provides a more linear reduction in computations.

- Accuracy Impact:

- Reducing input size can severely impact accuracy, especially when detecting small objects or fine details.

- Reducing alpha tends to have a more gradual impact on accuracy.

- Model Architecture:

- Changing input size doesn’t alter the model’s architecture.

- Changing alpha modifies the model’s structure by reducing the number of channels in each layer.

Recommendation:

- If our application doesn’t require detecting tiny details and can tolerate some loss in accuracy, reducing the input size is often the most effective way to speed up inference.

- Reducing alpha might be preferable if maintaining the ability to detect fine details is crucial or if you need a more balanced trade-off between speed and accuracy.

- For best results, you might want to experiment with both:

- Try MobileNet V2 with input sizes like \(160\times 160\) or \(92\times 92\)

- Experiment with alpha values like 1.0, 0.75, 0.5 or 0.35.

- Always benchmark the different configurations on your specific hardware and with your particular dataset to find the optimal balance for your use case.

Remember, the best choice depends on your specific requirements for accuracy, speed, and the nature of the images you’re working with. It’s often worth experimenting with combinations to find the optimal configuration for your particular use case.

Model Testing

Now, you should take the data set aside at the start of the project and run the trained model using it as input. Again, the result is excellent (92.22%).

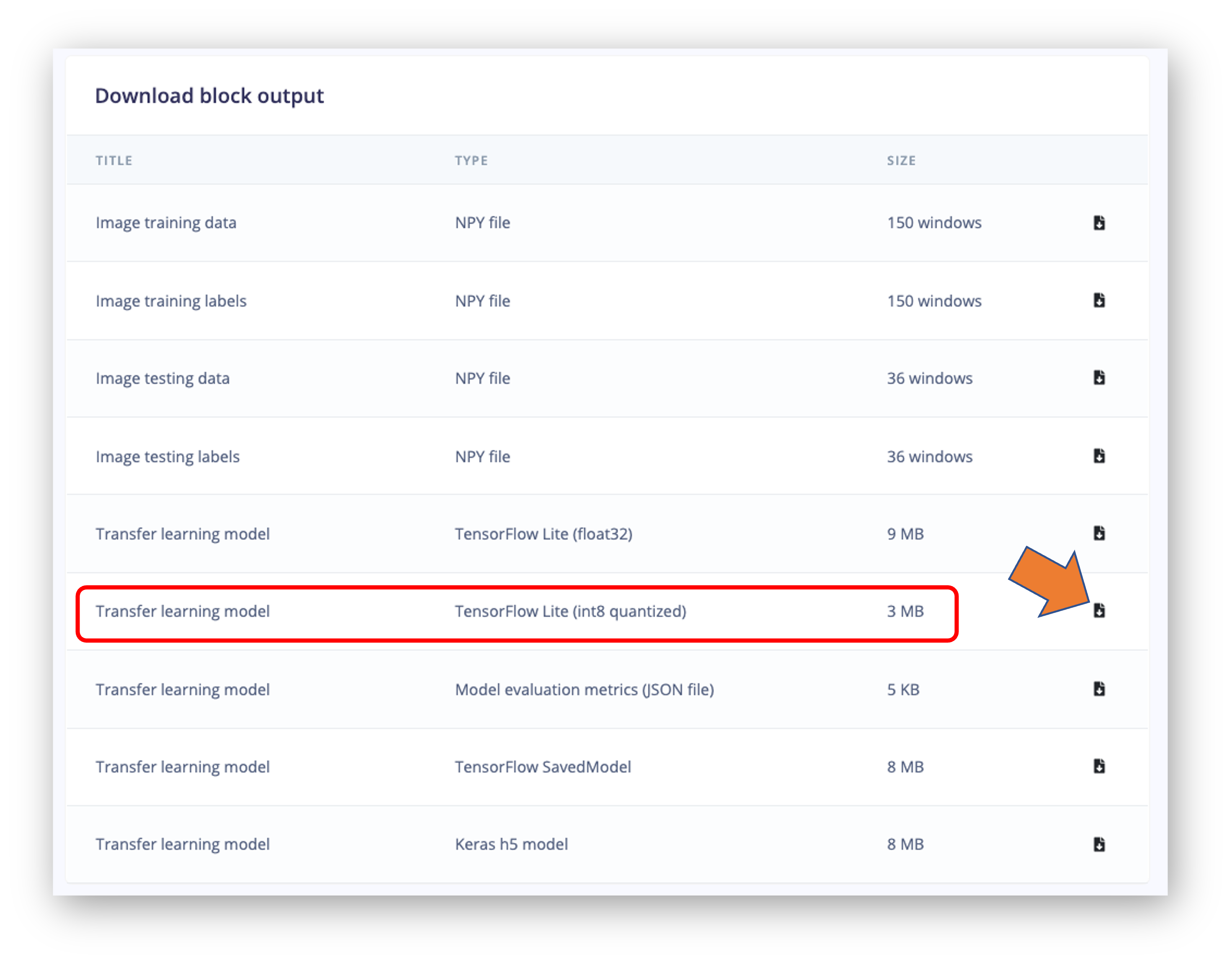

Deploying the model

As we did in the previous section, we can deploy the trained model as .tflite and use Raspi to run it using Python.

On the Dashboard tab, go to Transfer learning model (int8 quantized) and click on the download icon:

Let’s also download the float32 version for comparison

Transfer the model from your computer to the Raspi (./models), for example, using FileZilla. Also, capture some images for inference (./images).

Import the needed libraries:

import time

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import tflite_runtime.interpreter as tfliteDefine the paths and labels:

img_path = "./images/robot.jpg"

model_path = "./models/ei-raspi-img-class-int8-quantized-\

model.tflite"

labels = ["background", "periquito", "robot"]Note that the models trained on the Edge Impulse Studio will output values with index 0, 1, 2, etc., where the actual labels will follow an alphabetic order.

Load the model, allocate the tensors, and get the input and output tensor details:

# Load the TFLite model

interpreter = tflite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()

# Get input and output tensors

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()One important difference to note is that the dtype of the input details of the model is now int8, which means that the input values go from –128 to +127, while each pixel of our image goes from 0 to 255. This means that we should pre-process the image to match it. We can check here:

input_dtype = input_details[0]["dtype"]

input_dtypenumpy.int8So, let’s open the image and show it:

img = Image.open(img_path)

plt.figure(figsize=(4, 4))

plt.imshow(img)

plt.axis("off")

plt.show()

And perform the pre-processing:

scale, zero_point = input_details[0]["quantization"]

img = img.resize(

(input_details[0]["shape"][1], input_details[0]["shape"][2])

)

img_array = np.array(img, dtype=np.float32) / 255.0

img_array = (

(img_array / scale + zero_point).clip(-128, 127).astype(np.int8)

)

input_data = np.expand_dims(img_array, axis=0)Checking the input data, we can verify that the input tensor is compatible with what is expected by the model:

input_data.shape, input_data.dtype((1, 160, 160, 3), dtype('int8'))Now, it is time to perform the inference. Let’s also calculate the latency of the model:

# Inference on Raspi-Zero

start_time = time.time()

interpreter.set_tensor(input_details[0]["index"], input_data)

interpreter.invoke()

end_time = time.time()

inference_time = (end_time - start_time) * 1000 # Convert

# to milliseconds

print("Inference time: {:.1f}ms".format(inference_time))The model will take around 125ms to perform the inference in the Raspi-Zero, which is 3 to 4 times longer than a Raspi-5.

Now, we can get the output labels and probabilities. It is also important to note that the model trained on the Edge Impulse Studio has a softmax in its output (different from the original Movilenet V2), and we should use the model’s raw output as the “probabilities.”

# Obtain results and map them to the classes

predictions = interpreter.get_tensor(output_details[0]["index"])[0]

# Get indices of the top k results

top_k_results = 3

top_k_indices = np.argsort(predictions)[::-1][:top_k_results]

# Get quantization parameters

scale, zero_point = output_details[0]["quantization"]

# Dequantize the output

dequantized_output = (

predictions.astype(np.float32) - zero_point

) * scale

probabilities = dequantized_output

print("\n\t[PREDICTION] [Prob]\n")

for i in range(top_k_results):

print(

"\t{:20}: {:.2f}%".format(

labels[top_k_indices[i]],

probabilities[top_k_indices[i]] * 100,

)

)

Let’s modify the function created before so that we can handle different type of models:

def image_classification(

img_path, model_path, labels, top_k_results=3, apply_softmax=False

):

# Load the image

img = Image.open(img_path)

plt.figure(figsize=(4, 4))

plt.imshow(img)

plt.axis("off")

# Load the TFLite model

interpreter = tflite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()

# Get input and output tensors

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

# Preprocess

img = img.resize(

(input_details[0]["shape"][1], input_details[0]["shape"][2])

)

input_dtype = input_details[0]["dtype"]

if input_dtype == np.uint8:

input_data = np.expand_dims(np.array(img), axis=0)

elif input_dtype == np.int8:

scale, zero_point = input_details[0]["quantization"]

img_array = np.array(img, dtype=np.float32) / 255.0

img_array = (

(img_array / scale + zero_point)

.clip(-128, 127)

.astype(np.int8)

)

input_data = np.expand_dims(img_array, axis=0)

else: # float32

input_data = (

np.expand_dims(np.array(img, dtype=np.float32), axis=0)

/ 255.0

)

# Inference on Raspi-Zero

start_time = time.time()

interpreter.set_tensor(input_details[0]["index"], input_data)

interpreter.invoke()

end_time = time.time()

inference_time = (

end_time - start_time

) * 1000 # Convert to milliseconds

# Obtain results

predictions = interpreter.get_tensor(output_details[0]["index"])[

0

]

# Get indices of the top k results

top_k_indices = np.argsort(predictions)[::-1][:top_k_results]

# Handle output based on type

output_dtype = output_details[0]["dtype"]

if output_dtype in [np.int8, np.uint8]:

# Dequantize the output

scale, zero_point = output_details[0]["quantization"]

predictions = (

predictions.astype(np.float32) - zero_point

) * scale

if apply_softmax:

# Apply softmax

exp_preds = np.exp(predictions - np.max(predictions))

probabilities = exp_preds / np.sum(exp_preds)

else:

probabilities = predictions

print("\n\t[PREDICTION] [Prob]\n")

for i in range(top_k_results):

print(

"\t{:20}: {:.1f}%".format(

labels[top_k_indices[i]],

probabilities[top_k_indices[i]] * 100,

)

)

print("\n\tInference time: {:.1f}ms".format(inference_time))And test it with different images and the int8 quantized model (160x160 alpha =1.0).

Let’s download a smaller model, such as the one trained for the Nicla Vision Lab (int8 quantized model, 96x96, alpha = 0.1), as a test. We can use the same function:

The model lost some accuracy, but it is still OK once our model does not look for many details. Regarding latency, we are around ten times faster on the Raspi-Zero.

Live Image Classification

Let’s develop an app to capture images with the USB camera in real time, showing its classification.

Using the nano on the terminal, save the code below, such as img_class_live_infer.py.

from flask import Flask, Response, render_template_string,

request, jsonify

from picamera2 import Picamera2

import io

import threading

import time

import numpy as np

from PIL import Image

import tflite_runtime.interpreter as tflite

from queue import Queue

app = Flask(__name__)

# Global variables

picam2 = None

frame = None

frame_lock = threading.Lock()

is_classifying = False

confidence_threshold = 0.8

model_path = "./models/ei-raspi-img-class-int8-quantized-\

model.tflite"

labels = ['background', 'periquito', 'robot']

interpreter = None

classification_queue = Queue(maxsize=1)

def initialize_camera():

global picam2

picam2 = Picamera2()

config = picam2.create_preview_configuration(

main={"size": (320, 240)}

)

picam2.configure(config)

picam2.start()

time.sleep(2) # Wait for camera to warm up

def get_frame():

global frame

while True:

stream = io.BytesIO()

picam2.capture_file(stream, format='jpeg')

with frame_lock:

frame = stream.getvalue()

time.sleep(0.1) # Capture frames more frequently

def generate_frames():

while True:

with frame_lock:

if frame is not None:

yield (

b'--frame\r\n'

b'Content-Type: image/jpeg\r\n\r\n'

+ frame + b'\r\n'

)

time.sleep(0.1)

def load_model():

global interpreter

if interpreter is None:

interpreter = tflite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()

return interpreter

def classify_image(img, interpreter):

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

img = img.resize((input_details[0]['shape'][1],

input_details[0]['shape'][2]))

input_data = np.expand_dims(np.array(img), axis=0)\

.astype(input_details[0]['dtype'])

interpreter.set_tensor(input_details[0]['index'], input_data)

interpreter.invoke()

predictions = interpreter.get_tensor(output_details[0]

['index'])[0]

# Handle output based on type

output_dtype = output_details[0]['dtype']

if output_dtype in [np.int8, np.uint8]:

# Dequantize the output

scale, zero_point = output_details[0]['quantization']

predictions = (predictions.astype(np.float32) -

zero_point) * scale

return predictions

def classification_worker():

interpreter = load_model()

while True:

if is_classifying:

with frame_lock:

if frame is not None:

img = Image.open(io.BytesIO(frame))

predictions = classify_image(img, interpreter)

max_prob = np.max(predictions)

if max_prob >= confidence_threshold:

label = labels[np.argmax(predictions)]

else:

label = 'Uncertain'

classification_queue.put({

'label': label,

'probability': float(max_prob)

})

time.sleep(0.1) # Adjust based on your needs

@app.route('/')

def index():

return render_template_string('''

<!DOCTYPE html>

<html>

<head>

<title>Image Classification</title>

<script

src="https://code.jquery.com/jquery-3.6.0.min.js">

</script>

<script>

function startClassification() {

$.post('/start');

$('#startBtn').prop('disabled', true);

$('#stopBtn').prop('disabled', false);

}

function stopClassification() {

$.post('/stop');

$('#startBtn').prop('disabled', false);

$('#stopBtn').prop('disabled', true);

}

function updateConfidence() {

var confidence = $('#confidence').val();

$.post('/update_confidence',

{confidence: confidence}

);

}

function updateClassification() {

$.get('/get_classification', function(data) {

$('#classification').text(data.label + ': '

+ data.probability.toFixed(2));

});

}

$(document).ready(function() {

setInterval(updateClassification, 100);

// Update every 100ms

});

</script>

</head>

<body>

<h1>Image Classification</h1>

<img src="{{ url_for('video_feed') }}"

width="640"

height="480" />

<br>

<button id="startBtn"

onclick="startClassification()">

Start Classification

</button>

<button id="stopBtn"

onclick="stopClassification()"

disabled>

Stop Classification

</button>

<br>

<label for="confidence">Confidence Threshold:</label>

<input type="number"

id="confidence"

name="confidence"

min="0" max="1"

step="0.1"

value="0.8"

onchange="updateConfidence()" />

<br>

<div id="classification">

Waiting for classification...

</div>

</body>

</html>

''')

@app.route('/video_feed')

def video_feed():

return Response(

generate_frames(),

mimetype='multipart/x-mixed-replace; boundary=frame'

)

@app.route('/start', methods=['POST'])

def start_classification():

global is_classifying

is_classifying = True

return '', 204

@app.route('/stop', methods=['POST'])

def stop_classification():

global is_classifying

is_classifying = False

return '', 204

@app.route('/update_confidence', methods=['POST'])

def update_confidence():

global confidence_threshold

confidence_threshold = float(request.form['confidence'])

return '', 204

@app.route('/get_classification')

def get_classification():

if not is_classifying:

return jsonify({'label': 'Not classifying',

'probability': 0})

try:

result = classification_queue.get_nowait()

except Queue.Empty:

result = {'label': 'Processing', 'probability': 0}

return jsonify(result)

if __name__ == '__main__':

initialize_camera()

threading.Thread(target=get_frame, daemon=True).start()

threading.Thread(target=classification_worker,

daemon=True).start()

app.run(host='0.0.0.0', port=5000, threaded=True)On the terminal, run:

python3 img_class_live_infer.pyAnd access the web interface:

- On the Raspberry Pi itself (if you have a GUI): Open a web browser and go to

http://localhost:5000 - From another device on the same network: Open a web browser and go to

http://<raspberry_pi_ip>:5000(Replace<raspberry_pi_ip>with your Raspberry Pi’s IP address). For example:http://192.168.4.210:5000/

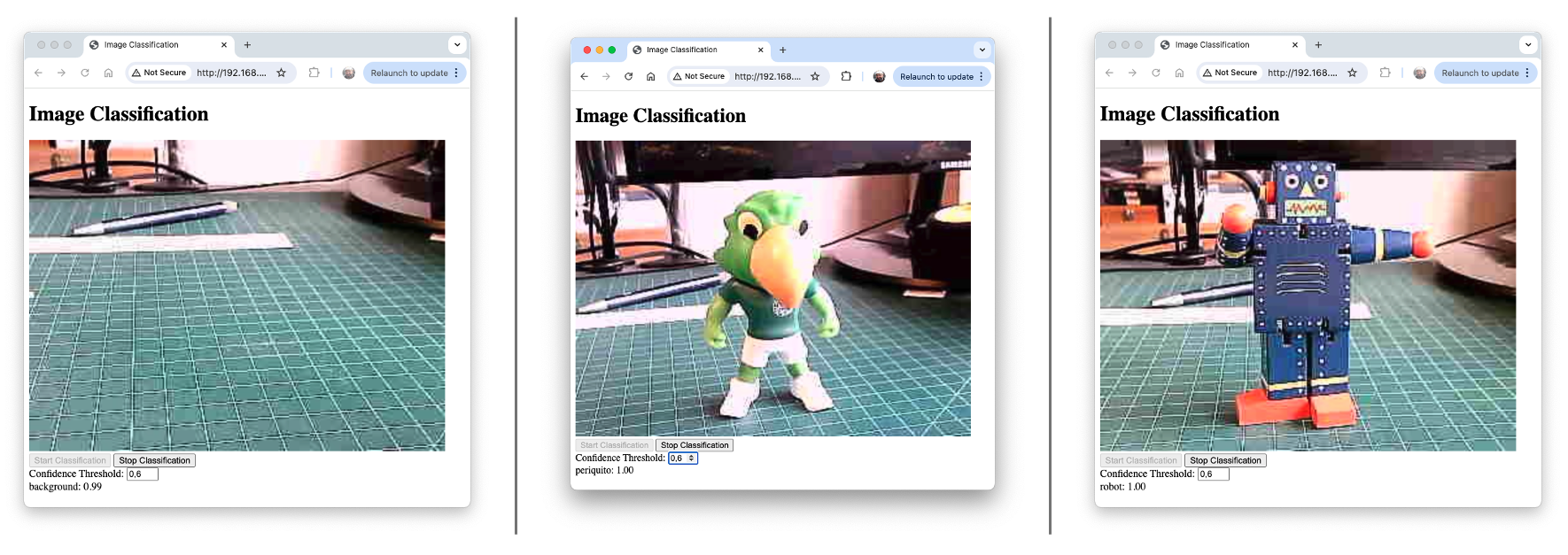

Here are some screenshots of the app running on an external desktop

Here, you can see the app running on the YouTube:

The code creates a web application for real-time image classification using a Raspberry Pi, its camera module, and a TensorFlow Lite model. The application uses Flask to serve a web interface where is possible to view the camera feed and see live classification results.

Key Components:

- Flask Web Application: Serves the user interface and handles requests.

- PiCamera2: Captures images from the Raspberry Pi camera module.

- TensorFlow Lite: Runs the image classification model.

- Threading: Manages concurrent operations for smooth performance.

Main Features:

- Live camera feed display

- Real-time image classification

- Adjustable confidence threshold

- Start/Stop classification on demand

Code Structure:

- Imports and Setup:

- Flask for web application

- PiCamera2 for camera control

- TensorFlow Lite for inference

- Threading and Queue for concurrent operations

- Global Variables:

- Camera and frame management

- Classification control

- Model and label information

- Camera Functions:

initialize_camera(): Sets up the PiCamera2get_frame(): Continuously captures framesgenerate_frames(): Yields frames for the web feed

- Model Functions:

load_model(): Loads the TFLite modelclassify_image(): Performs inference on a single image

- Classification Worker:

- Runs in a separate thread

- Continuously classifies frames when active

- Updates a queue with the latest results

- Flask Routes:

/: Serves the main HTML page/video_feed: Streams the camera feed/startand/stop: Controls classification/update_confidence: Adjusts the confidence threshold/get_classification: Returns the latest classification result

- HTML Template:

- Displays camera feed and classification results

- Provides controls for starting/stopping and adjusting settings

- Main Execution:

- Initializes camera and starts necessary threads

- Runs the Flask application

Key Concepts:

- Concurrent Operations: Using threads to handle camera capture and classification separately from the web server.

- Real-time Updates: Frequent updates to the classification results without page reloads.

- Model Reuse: Loading the TFLite model once and reusing it for efficiency.

- Flexible Configuration: Allowing users to adjust the confidence threshold on the fly.

Usage:

- Ensure all dependencies are installed.

- Run the script on a Raspberry Pi with a camera module.

- Access the web interface from a browser using the Raspberry Pi’s IP address.

- Start classification and adjust settings as needed.

Summary:

Image classification has emerged as a powerful and versatile application of machine learning, with significant implications for various fields, from healthcare to environmental monitoring. This chapter has demonstrated how to implement a robust image classification system on edge devices like the Raspi-Zero and Raspi-5, showcasing the potential for real-time, on-device intelligence.

We’ve explored the entire pipeline of an image classification project, from data collection and model training using Edge Impulse Studio to deploying and running inferences on a Raspi. The process highlighted several key points:

- The importance of proper data collection and preprocessing for training effective models.

- The power of transfer learning, allowing us to leverage pre-trained models like MobileNet V2 for efficient training with limited data.

- The trade-offs between model accuracy and inference speed, especially crucial for edge devices.

- The implementation of real-time classification using a web-based interface, demonstrating practical applications.

The ability to run these models on edge devices like the Raspi opens up numerous possibilities for IoT applications, autonomous systems, and real-time monitoring solutions. It allows for reduced latency, improved privacy, and operation in environments with limited connectivity.

As we’ve seen, even with the computational constraints of edge devices, it’s possible to achieve impressive results in terms of both accuracy and speed. The flexibility to adjust model parameters, such as input size and alpha values, allows for fine-tuning to meet specific project requirements.

Looking forward, the field of edge AI and image classification continues to evolve rapidly. Advances in model compression techniques, hardware acceleration, and more efficient neural network architectures promise to further expand the capabilities of edge devices in computer vision tasks.

This project serves as a foundation for more complex computer vision applications and encourages further exploration into the exciting world of edge AI and IoT. Whether it’s for industrial automation, smart home applications, or environmental monitoring, the skills and concepts covered here provide a solid starting point for a wide range of innovative projects.