Data Engineering

DALL·E 3 Prompt: Create a rectangular illustration visualizing the concept of data engineering. Include elements such as raw data sources, data processing pipelines, storage systems, and refined datasets. Show how raw data is transformed through cleaning, processing, and storage to become valuable information that can be analyzed and used for decision-making.

Purpose

Why does data quality serve as the foundation that determines whether machine learning systems succeed or fail in production environments?

Machine learning systems depend on data quality: no algorithm can overcome poor data, but excellent data engineering enables even simple models to achieve remarkable results. Unlike traditional software where logic is explicit, ML systems derive behavior from data patterns, making quality the primary determinant of system trustworthiness. Understanding data engineering principles provides the foundation for building ML systems that operate consistently across diverse production environments, maintain performance over time, and scale effectively as data volumes and complexity increase.

Apply the four pillars framework (Quality, Reliability, Scalability, Governance) to evaluate data engineering decisions systematically

Calculate infrastructure requirements for ML systems including storage capacity, processing throughput, and labeling costs

Design data pipelines that maintain training-serving consistency to prevent the primary cause of production ML failures

Evaluate acquisition strategies (existing datasets, web scraping, crowdsourcing, synthetic data) based on quality-cost-scale trade-offs

Architect storage systems (databases, data warehouses, data lakes, feature stores) appropriate for different ML workload patterns

Implement data governance practices including lineage tracking, privacy protection, and bias mitigation throughout the data lifecycle

Data Engineering as a Systems Discipline

The systematic methodologies examined in the previous chapter establish the procedural foundations of machine learning development, yet underlying each phase of these workflows exists a fundamental prerequisite: robust data infrastructure. In traditional software, computational logic is defined by code. In machine learning, system behavior is defined by data. This paradigm shift makes data a first-class citizen in the engineering process, akin to source code, requiring a new discipline, data engineering, to manage it with the same rigor we apply to code.

While workflow methodologies provide the organizational framework for constructing ML systems, data engineering provides the technical substrate that enables effective implementation of these methodologies. Advanced modeling techniques and rigorous validation procedures cannot compensate for deficient data infrastructure, whereas well-engineered data systems enable even conventional approaches to achieve substantial performance gains.

This chapter examines data engineering as a systematic engineering discipline focused on the design, construction, and maintenance of infrastructure that transforms heterogeneous raw information into reliable, high-quality datasets suitable for machine learning applications. In contrast to traditional software systems where computational logic remains explicit and deterministic, machine learning systems derive their behavioral characteristics from underlying data patterns, establishing data infrastructure quality as the principal determinant of system efficacy. Consequently, architectural decisions concerning data acquisition, processing, storage, and governance influence whether ML systems achieve expected performance in production environments.

The critical importance of data engineering decisions becomes evident when examining how data quality issues propagate through machine learning systems. Traditional software systems typically generate predictable error responses or explicit rejections when encountering malformed input, enabling developers to implement immediate corrective measures. Machine learning systems present different challenges: data quality deficiencies manifest as subtle performance degradations that accumulate throughout the processing pipeline and frequently remain undetected until catastrophic system failures occur in production environments. While individual mislabeled training instances may appear inconsequential, systematic labeling inconsistencies systematically corrupt model behavior across entire feature spaces. Similarly, gradual data distribution shifts in production environments can progressively degrade system performance until comprehensive model retraining becomes necessary.

These challenges require systematic engineering approaches that transcend ad-hoc solutions and reactive interventions. Effective data engineering demands systematic analysis of infrastructure requirements that parallels the disciplined methodologies applied to workflow design. This chapter develops a principled theoretical framework for data engineering decision-making, organized around four foundational pillars (Quality, Reliability, Scalability, and Governance) that provide systematic guidance for technical choices spanning initial data acquisition through production deployment. We examine how these engineering principles manifest throughout the complete data lifecycle, clarifying the systems-level thinking required to construct data infrastructure that supports current ML workflows while maintaining adaptability and scalability as system requirements evolve.

Rather than analyzing individual technical components in isolation, we examine the systemic interdependencies among engineering decisions, demonstrating the inherently interconnected nature of data infrastructure systems. This integrated analytical perspective is particularly significant as we prepare to examine the computational frameworks that process these carefully engineered datasets, the primary focus of subsequent chapters.

Four Pillars Framework

Building effective ML systems requires understanding not only what data engineering is but also implementing a structured framework for making principled decisions about data infrastructure. Choices regarding storage formats, ingestion patterns, processing architectures, and governance policies require systematic evaluation rather than ad-hoc selection. This framework organizes data engineering around four foundational pillars that ensure systems achieve functionality, robustness, scalability, and trustworthiness.

The Four Foundational Pillars

Every data engineering decision, from choosing storage formats to designing ingestion pipelines, should be evaluated against four foundational principles. Each pillar contributes to system success through systematic decision-making.

First, data quality provides the foundation for system success. Quality issues compound throughout the ML lifecycle through a phenomenon termed “Data Cascades” (Section 1.3), wherein early failures propagate and amplify downstream. Quality includes accuracy, completeness, consistency, and fitness for the intended ML task. High-quality data is essential for model success, with the mathematical foundations of this relationship explored in Chapter 3: Deep Learning Primer and Chapter 4: DNN Architectures.

Building upon this quality foundation, ML systems require consistent, predictable data processing that handles failures gracefully. Reliability means building systems that continue operating despite component failures, data anomalies, or unexpected load patterns. This includes implementing comprehensive error handling, monitoring, and recovery mechanisms throughout the data pipeline.

While reliability ensures consistent operation, scalability addresses the challenge of growth. As ML systems grow from prototypes to production services, data volumes and processing requirements increase dramatically. Scalability involves designing systems that can handle growing data volumes, user bases, and computational demands without requiring complete system redesigns.

Finally, governance provides the framework within which quality, reliability, and scalability operate. Data governance ensures systems operate within legal, ethical, and business constraints while maintaining transparency and accountability. This includes privacy protection, bias mitigation, regulatory compliance, and establishing clear data ownership and access controls.

Integrating the Pillars Through Systems Thinking

Although understanding each pillar individually provides important insights, recognizing their individual importance is only the first step toward effective data engineering. As illustrated in Figure 1, these four pillars are not independent components but interconnected aspects of a unified system where decisions in one area affect all others. Quality improvements must account for scalability constraints, reliability requirements influence governance implementations, and governance policies shape quality metrics. This systems perspective guides our exploration of data engineering, examining how each technical topic supports and balances these foundational principles while managing their inherent tensions.

As Figure 2 illustrates, data scientists spend 60-80% of their time on data preparation tasks according to various industry surveys1. This statistic reflects the current state where data engineering practices are often ad-hoc rather than systematic. By applying the four-pillar framework consistently to address this overhead, teams can reduce data preparation time while building more reliable and maintainable systems.

1 Data Quality Reality: The famous “garbage in, garbage out” principle was first coined by IBM computer programmer George Fuechsel in the 1960s, describing how flawed input data produces nonsense output. This principle remains critically relevant in modern ML systems.

Framework Application Across Data Lifecycle

This four-pillar framework guides our exploration of data engineering systems from problem definition through production operations. We begin by establishing clear problem definitions and governance principles that shape all subsequent technical decisions. The framework then guides us through data acquisition strategies, where quality and reliability requirements determine how we source and validate data. Processing and storage decisions follow naturally from scalability and governance constraints, while operational practices ensure all four pillars are maintained throughout the system lifecycle.

This framework guides our systematic exploration through each major component of data engineering. As we examine data acquisition, ingestion, processing, and storage in subsequent sections, we examine how these pillars manifest in specific technical decisions: sourcing techniques that balance quality with scalability, storage architectures that support performance within governance constraints, and processing pipelines that maintain reliability while handling massive scale.

Table 1 provides a comprehensive view of how each pillar manifests across the major stages of the data pipeline. This matrix serves both as a planning tool for system design and as a reference for troubleshooting when issues arise at different pipeline stages.

| Stage | Quality | Reliability | Scalability | Governance |

|---|---|---|---|---|

| Acquisition | Representative sampling, bias detection | Diverse sources, redundant collection strategies | Web scraping, synthetic data generation | Consent, anonymization, ethical sourcing |

| Ingestion | Schema validation, data profiling | Dead letter queues, graceful degradation | Batch vs stream processing, autoscaling pipelines | Access controls, audit logs, data lineage |

| Processing | Consistency validation, training-serving parity | Idempotent transformations, retry mechanisms | Distributed frameworks, horizontal scaling | Lineage tracking, privacy preservation, bias monitoring |

| Storage | Data validation checks, freshness monitoring | Backups, replication, disaster recovery | Tiered storage, partitioning, compression optimization | Access audits, encryption, retention policies |

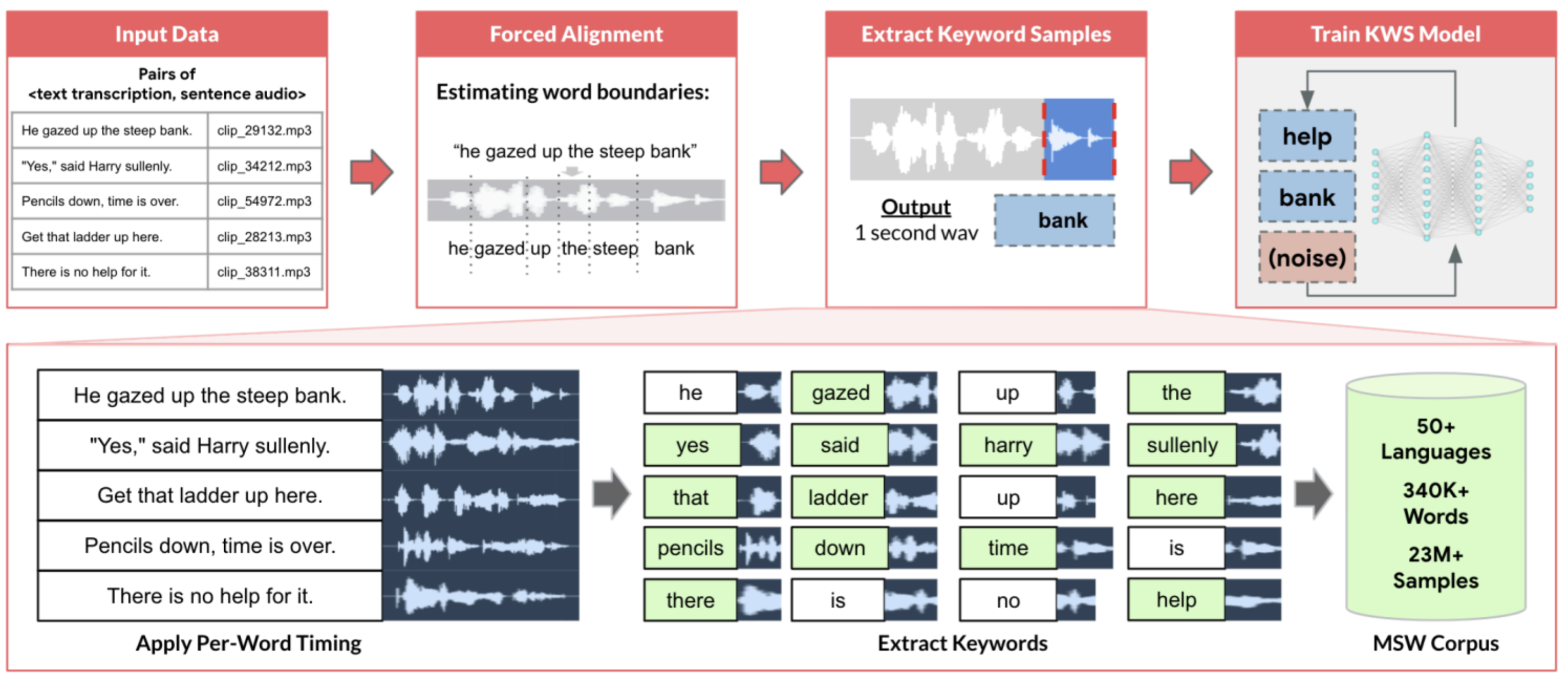

To ground these concepts in practical reality, we follow a Keyword Spotting (KWS) system throughout as our running case study, demonstrating how framework principles translate into engineering decisions.

Data Cascades and the Need for Systematic Foundations

Machine learning systems face a unique failure pattern that distinguishes them from traditional software engineering: “Data Cascades,”2 the phenomenon identified by Sambasivan et al. (2021) where poor data quality in early stages amplifies throughout the entire pipeline, causing downstream model failures, project termination, and potential user harm. Unlike traditional software where bad inputs typically produce immediate errors, ML systems degrade silently until quality issues become severe enough to necessitate complete system rebuilds.

2 Data Cascades: A systems failure pattern unique to ML where poor data quality in early stages amplifies throughout the entire pipeline, causing downstream model failures, project termination, and potential user harm. Unlike traditional software where bad inputs typically produce immediate errors, ML systems degrade silently until quality issues become severe enough to necessitate complete system rebuilds.

Data cascades occur when teams skip establishing clear quality criteria, reliability requirements, and governance principles before beginning data collection and processing work. This fundamental vulnerability motivates our Four Pillars framework: Quality, Reliability, Scalability, and Governance provide the systematic foundation needed to prevent cascade failures and build robust ML systems.

Figure 3 illustrates these potential data pitfalls at every stage and how they influence the entire process down the line. The influence of data collection errors is especially pronounced. As illustrated in the figure, any lapses in this initial stage will become apparent during model evaluation and deployment phases discussed in Chapter 8: AI Training and Chapter 13: ML Operations, potentially leading to costly consequences such as abandoning the entire model and restarting anew. Therefore, investing in data engineering techniques from the onset will help us detect errors early, mitigating these cascading effects.

Establishing Governance Principles Early

With this understanding of how quality issues cascade through ML systems, we must establish governance principles that ensure our data engineering systems operate within ethical, legal, and business constraints. These principles are not afterthoughts to be applied later but foundational requirements that shape every technical decision from the outset.

Central to these governance principles, data systems must protect user privacy and maintain security throughout their lifecycle. This means implementing access controls, encryption, and data minimization practices from the initial system design, not adding them as later enhancements. Privacy requirements directly influence data collection methods, storage architectures, and processing approaches.

Beyond privacy protection, data engineering systems must actively work to identify and mitigate bias in data collection, labeling, and processing. This requires diverse data collection strategies, representative sampling approaches, and systematic bias detection throughout the pipeline. Technical choices about data sources, labeling methodologies, and quality metrics all impact system fairness. Hidden stratification in data—where subpopulations are underrepresented or exhibit different patterns, can cause systematic failures even in well-performing models (Oakden-Rayner et al. 2020), underscoring why demographic balance and representation requires engineering into data collection from the outset.

Complementing these fairness efforts, systems must maintain clear documentation about data sources, processing decisions, and quality criteria. This includes implementing data lineage tracking, maintaining processing logs, and establishing clear ownership and responsibility for data quality decisions.

Finally, data systems must comply with relevant regulations such as GDPR, CCPA, and domain-specific requirements. Compliance requirements influence data retention policies, user consent mechanisms, and cross-border data transfer protocols.

These governance principles work hand-in-hand with our technical pillars of quality, reliability, and scalability. A system cannot be truly reliable if it violates user privacy, and quality metrics are meaningless if they perpetuate unfair outcomes.

Structured Approach to Problem Definition

Building on these governance foundations, we need a systematic approach to problem definition. As Sculley et al. (2021) emphasize, ML systems require problem framing that goes beyond traditional software development approaches. Whether developing recommendation engines processing millions of user interactions, computer vision systems analyzing medical images, or natural language models handling diverse text data, each system brings unique challenges that require careful consideration within our governance and technical framework.

Within this context, establishing clear objectives provides unified direction that guides the entire project, from data collection strategies through deployment operations. These objectives must balance technical performance with governance requirements, creating measurable outcomes that include both accuracy metrics and fairness criteria.

This systematic approach to problem definition ensures that governance principles and technical requirements are integrated from the start rather than retrofitted later. To achieve this integration, we identify the key steps that must precede any data collection effort:

- Identify and clearly state the problem definition

- Set clear objectives to meet

- Establish success benchmarks

- Understand end-user engagement/use

- Understand the constraints and limitations of deployment

- Perform data collection.

- Iterate and refine.

Framework Application Through Keyword Spotting Case Study

To demonstrate how these systematic principles work in practice, Keyword Spotting (KWS) systems provide an ideal case study for applying our four-pillar framework to real-world data engineering challenges. These systems, which power voice-activated devices like smartphones and smart speakers, must detect specific wake words (such as “OK, Google” or “Alexa”) within continuous audio streams while operating under strict resource constraints.

As shown in Figure 4, KWS systems operate as lightweight, always-on front-ends that trigger more complex voice processing systems. These systems demonstrate the interconnected challenges across all four pillars of our framework (Section 1.2): Quality (accuracy across diverse environments), Reliability (consistent battery-powered operation), Scalability (severe memory constraints), and Governance (privacy protection). These constraints explain why many KWS systems support only a limited number of languages: collecting high-quality, representative voice data for smaller linguistic populations proves prohibitively difficult given governance and scalability challenges, demonstrating how all four pillars must work together to achieve successful deployment.

With this framework understanding established, we can apply our problem definition approach to our KWS example, demonstrating how the four pillars guide practical engineering decisions:

Identifying the Problem: KWS detects specific keywords amidst ambient sounds and other spoken words. The primary problem is to design a system that can recognize these keywords with high accuracy, low latency, and minimal false positives or negatives, especially when deployed on devices with limited computational resources. A well-specified problem definition for developing a new KWS model should identify the desired keywords along with the envisioned application and deployment scenario.

Setting Clear Objectives: The objectives for a KWS system must balance multiple competing requirements. Performance targets include achieving high accuracy rates (98% accuracy in keyword detection) while ensuring low latency (keyword detection and response within 200 milliseconds). Resource constraints demand minimizing power consumption to extend battery life on embedded devices and ensuring the model size is optimized for available memory on the device.

Benchmarks for Success: Establish clear metrics to measure the success of the KWS system. Key performance indicators include true positive rate (the percentage of correctly identified keywords relative to all spoken keywords) and false positive rate (the percentage of non-keywords including silence, background noise, and out-of-vocabulary words incorrectly identified as keywords). Detection/error tradeoff curves evaluate KWS on streaming audio representative of real-world deployment scenarios by comparing false accepts per hour (false positives over total evaluation audio duration) against false rejection rate (missed keywords relative to spoken keywords in evaluation audio), as demonstrated by Nayak et al. (2022). Operational metrics track response time (keyword utterance to system response) and power consumption (average power used during keyword detection).

Stakeholder Engagement and Understanding: Engage with stakeholders, which include device manufacturers, hardware and software developers, and end-users. Understand their needs, capabilities, and constraints. Different stakeholders bring competing priorities: device manufacturers might prioritize low power consumption, software developers might emphasize ease of integration, and end-users would prioritize accuracy and responsiveness. Balancing these competing requirements shapes system architecture decisions throughout development.

Understanding the Constraints and Limitations of Embedded Systems: Embedded devices come with their own set of challenges that shape KWS system design. Memory limitations require extremely lightweight models, typically as small as 16 KB to fit in the always-on island of the SoC3, with this constraint covering only model weights while preprocessing code must also fit within tight memory bounds. Processing power constraints from limited computational capabilities (a few hundred MHz of clock speed) demand aggressive model optimization for efficiency. Power consumption becomes critical since most embedded devices run on batteries, requiring the KWS system to achieve sub-milliwatt power consumption during continuous listening. Environmental challenges add another layer of complexity, as devices must function effectively across diverse deployment scenarios ranging from quiet bedrooms to noisy industrial settings.

3 System on Chip (SoC): An integrated circuit that combines all essential computer components (processor, memory, I/O interfaces) on a single chip. Modern SoCs include specialized “always-on” low-power domains that continuously monitor for triggers like wake words while the main processor sleeps, achieving power consumption under 1mW for continuous listening applications.

Data Collection and Analysis: For a KWS system, data quality and diversity determine success. The dataset must capture demographic diversity by including speakers with various accents across age and gender to ensure wide-ranging recognition support. Keyword variations require attention since people pronounce wake words differently, requiring the dataset to capture these pronunciation nuances and slight variations. Background noise diversity proves essential, necessitating data samples that include or are augmented with different ambient noises to train the model for real-world scenarios ranging from quiet environments to noisy conditions.

Iterative Feedback and Refinement: Finally, once a prototype KWS system is developed, teams must ensure the system remains aligned with the defined problem and objectives as deployment scenarios change over time and use-cases evolve. This requires testing in real-world scenarios, gathering feedback about whether some users or deployment scenarios encounter underperformance relative to others, and iteratively refining both the dataset and model based on observed failure patterns.

Building on this problem definition foundation, our KWS system demonstrates how different data collection approaches combine effectively across the project lifecycle. Pre-existing datasets like Google’s Speech Commands (Warden 2018) provide a foundation for initial development, offering carefully curated voice samples for common wake words. However, these datasets often lack diversity in accents, environments, and languages, necessitating additional strategies.

4 Mechanical Turk Origins: Named after the 18th-century chess-playing “automaton” (actually a human chess master hidden inside), Amazon’s MTurk (2005) pioneered human-in-the-loop AI by enabling distributed human computation at scale, ironically reversing the original Turk’s deception of AI capabilities.

To address coverage gaps, web scraping supplements baseline datasets by gathering diverse voice samples from video platforms and speech databases, capturing natural speech patterns and wake word variations. Crowdsourcing platforms like Amazon Mechanical Turk4 enable targeted collection of wake word samples across different demographics and environments, particularly valuable for underrepresented languages or specific acoustic conditions.

Finally, synthetic data generation fills remaining gaps through speech synthesis (Werchniak et al. 2021) and audio augmentation, creating unlimited wake word variations across acoustic environments, speaker characteristics, and background conditions. This comprehensive approach enables KWS systems that perform robustly across diverse real-world conditions while demonstrating how systematic problem definition guides data strategy throughout the project lifecycle.

With our framework principles established through the KWS case study, we now examine how these abstract concepts translate into operational reality through data pipeline architecture.

Data Pipeline Architecture

Data pipelines serve as the systematic implementation of our four-pillar framework, transforming raw data into ML-ready formats while maintaining quality, reliability, scalability, and governance standards. Rather than simple linear data flows, these are complex systems that must orchestrate multiple data sources, transformation processes, and storage systems while ensuring consistent performance under varying load conditions. Pipeline architecture translates our abstract framework principles into operational reality, where each pillar manifests as concrete engineering decisions about validation strategies, error handling mechanisms, throughput optimization, and observability infrastructure.

To illustrate these concepts, our KWS system pipeline architecture must handle continuous audio streams, maintain low-latency processing for real-time keyword detection, and ensure privacy-preserving data handling. The pipeline must scale from development environments processing sample audio files to production deployments handling millions of concurrent audio streams while maintaining strict quality and governance standards.

As shown in the architecture diagram, ML data pipelines consist of several distinct layers: data sources, ingestion, processing, labeling, storage, and ML training (Figure 5). Each layer plays a specific role in the data preparation workflow, and selecting appropriate technologies for each layer requires understanding how our four framework pillars manifest at each stage. Rather than treating these layers as independent components to be optimized separately, we examine how quality requirements at one stage affect scalability constraints at another, how reliability needs shape governance implementations, and how the pillars interact to determine overall system effectiveness.

Central to these design decisions, data pipeline design is constrained by storage hierarchies and I/O bandwidth limitations rather than CPU capacity. Understanding these constraints enables building efficient systems that can handle modern ML workloads. Storage hierarchy trade-offs, ranging from high-latency object storage (ideal for archival) to low-latency in-memory stores (essential for real-time serving), and bandwidth limitations (spinning disks at 100-200 MB/s versus RAM at 50-200 GB/s) shape every pipeline decision. Detailed storage architecture considerations are covered in Section 1.9.

Given these performance constraints, design decisions should align with specific requirements. For streaming data, consider whether you need message durability (ability to replay failed processing), ordering guarantees (maintaining event sequence), or geographic distribution. For batch processing, the key decision factors include data volume relative to memory, processing complexity, and whether computation must be distributed. Single-machine tools suffice for gigabyte-scale data, but terabyte-scale processing needs distributed frameworks that partition work across clusters. The interactions between these layers, viewed through our four-pillar lens, determine the system’s overall effectiveness and guide the specific engineering decisions we examine in the following subsections.

Quality Through Validation and Monitoring

Quality represents the foundation of reliable ML systems, and pipelines implement quality through systematic validation and monitoring at every stage. Production experience shows that data pipeline issues represent a major source of ML failures, with studies citing 30-70% attribution rates for schema changes breaking downstream processing, distribution drift degrading model accuracy, or data corruption silently introducing errors (Sculley et al. 2021). These failures prove particularly insidious because they often don’t cause obvious system crashes but instead slowly degrade model performance in ways that become apparent only after affecting users. The quality pillar demands proactive monitoring and validation that catches issues before they cascade into model failures.

Understanding these metrics in practice requires examining how production teams implement monitoring at scale. Most organizations adopt severity-based alerting systems where different types of failures trigger different response protocols. The most critical alerts indicate complete system failure: the pipeline has stopped processing entirely, showing zero throughput for more than 5 minutes, or a primary data source has become completely unavailable. These situations demand immediate attention because they halt all downstream model training or serving. More subtle degradation patterns require different detection strategies. When throughput drops to 80% of baseline levels, or error rates climb above 5%, or quality metrics drift more than 2 standard deviations from training data characteristics, the system signals degradation requiring urgent but not immediate attention. These gradual failures often prove more dangerous than complete outages because they can persist undetected for hours or days, silently corrupting model inputs and degrading prediction quality.

Consider how these principles apply to a recommendation system processing user interaction events. With a baseline throughput of 50,000 records per second, the monitoring system tracks several interdependent signals. Instantaneous throughput alerts fire if processing drops below 40,000 records per second for more than 10 minutes, accounting for normal traffic variation while catching genuine capacity or processing problems. Each feature in the data stream has its own quality profile: if a feature like user_age shows null values in more than 5% of records when the training data contained less than 1% nulls, something has likely broken in the upstream data source. Duplicate detection runs on sampled data, watching for the same event appearing multiple times—a pattern that might indicate retry logic gone wrong or a database query accidentally returning the same records repeatedly.

These monitoring dimensions become particularly important when considering end-to-end latency. The system must track not just whether data arrives, but how long it takes to flow through the entire pipeline from the moment an event occurs to when the resulting features become available for model inference. When 95th percentile5 latency exceeds 30 seconds in a system with a 10-second service level agreement, the monitoring system needs to pinpoint which pipeline stage introduced the delay: ingestion, transformation, validation, or storage.

5 95th percentile: A statistical measure indicating that 95% of values fall below this threshold, commonly used in performance monitoring to capture typical worst-case behavior while excluding outliers. For latency monitoring, the 95th percentile provides more stable insights than maximum values (which may be anomalies) while revealing performance degradation that averages would hide.

6 Kolmogorov-Smirnov test: A non-parametric statistical test that quantifies whether two datasets come from the same distribution by measuring the maximum distance between their cumulative distribution functions. In ML systems, K-S tests detect data drift by comparing serving data against training baselines, producing p-values where values below 0.05 indicate statistically significant distribution shifts requiring investigation. When the test produces p-values below 0.05, it signals that serving and training data have diverged significantly—perhaps because user behavior has changed, or because an upstream system modification altered how features are computed.

Quality monitoring extends beyond simple schema validation to statistical properties that capture whether serving data resembles training data. Rather than just checking that values fall within valid ranges, production systems track rolling statistics over 24-hour windows. For numerical features like transaction_amount or session_duration, the system computes means and standard deviations continuously, then applies statistical tests like the Kolmogorov-Smirnov test6 to compare serving distributions against training distributions.

Categorical features require different statistical approaches. Instead of comparing means and variances, monitoring systems track category frequency distributions. When new categories appear that never existed in training data, or when existing categories shift substantially in relative frequency—say, the proportion of “mobile” versus “desktop” traffic changes by more than 20%, the system flags potential data quality issues or genuine distribution shifts. This statistical vigilance catches subtle problems that simple schema validation misses entirely: imagine if age values remain in the valid range of 18-95, but the distribution shifts from primarily 25-45 year olds to primarily 65+ year olds, indicating the data source has changed in ways that will affect model performance.

Validation at the pipeline level encompasses multiple strategies working together. Schema validation executes synchronously as data enters the pipeline, rejecting malformed records immediately before they can propagate downstream. Modern tools like TensorFlow Data Validation (TFDV)7 automatically infer schemas from training data, capturing expected data types, value ranges, and presence requirements.

7 TensorFlow Data Validation (TFDV): A production-grade library for analyzing and validating ML data that automatically infers schemas, detects anomalies, and identifies training-serving skew. TFDV computes descriptive statistics, identifies data drift through distribution comparisons, and generates human-readable validation reports, integrating with TFX pipelines for automated data quality monitoring. For a feature vector containing user demographics, the inferred schema might specify that user_age must be a 64-bit integer between 18 and 95 and cannot be null, user_country must be a string from a specific set of country codes, and session_duration must be a floating-point number between 0 and 7200 seconds but is optional. During serving, the validator checks each incoming record against these specifications, rejecting records with null required fields, out-of-range values, or type mismatches before they reach feature computation logic.

This synchronous validation necessarily remains simple and fast, checking properties that can be evaluated on individual records in microseconds. More sophisticated validation that requires comparing serving data against training data distributions or aggregating statistics across many records must run asynchronously to avoid blocking the ingestion pipeline. Statistical validation systems typically sample 1-10% of serving traffic—enough to detect meaningful shifts while avoiding the computational cost of analyzing every record. These samples accumulate in rolling windows, commonly 1 hour, 24 hours, and 7 days, with different windows revealing different patterns. Hourly windows detect sudden shifts like a data source failing over to a backup with different characteristics, while weekly windows reveal gradual drift in user populations or behavior.

Perhaps the most insidious validation challenge arises from training-serving skew8, where the same features get computed differently in training versus serving environments. This typically happens when training pipelines process data in batch using one set of libraries or logic, while serving systems compute features in real-time using different implementations. A recommendation system might compute “user_lifetime_purchases” in training by joining user profiles against complete transaction histories, while the serving system inadvertently uses a cached materialized view9 updated only weekly.

8 Training-Serving Skew: A ML systems failure where identical features are computed differently during training versus serving, causing silent model degradation. Occurs when training uses batch processing with one implementation while serving uses real-time processing with different libraries, creating subtle differences that compound to degrade accuracy significantly without obvious errors.

9 Materialized view: A database optimization that pre-computes and stores query results as physical tables, trading storage space for query performance. Unlike standard views that compute results on-demand, materialized views cache expensive join and aggregation operations but require refresh strategies to maintain data freshness, creating potential training-serving skew when refresh schedules differ between environments. The resulting 15% discrepancy between training and serving features directly explains seemingly mysterious 12% accuracy drops observed in production A/B tests. Detecting training-serving skew requires infrastructure that can recompute training features on serving data for comparison. Production systems implement periodic validation where they sample raw serving data, process it through both training and serving feature pipelines, and measure discrepancies.

Reliability Through Graceful Degradation

While quality monitoring detects issues, reliability ensures systems continue operating effectively when problems occur. Pipelines face constant challenges: data sources become temporarily unavailable, network partitions separate components, upstream schema changes break parsing logic, or unexpected load spikes exhaust resources. The reliability pillar demands systems that handle these failures gracefully rather than cascading into complete outage. This resilience comes from systematic failure analysis, intelligent error handling, and automated recovery strategies that maintain service continuity even under adverse conditions.

Systematic failure mode analysis for ML data pipelines reveals predictable patterns that require specific engineering countermeasures. Data corruption failures occur when upstream systems introduce subtle format changes, encoding issues, or field value modifications that pass basic validation but corrupt model inputs. A date field switching from “YYYY-MM-DD” to “MM/DD/YYYY” format might not trigger schema validation but will break any date-based feature computation. Schema evolution10 failures happen when source systems add fields, rename columns, or change data types without coordination, breaking downstream processing assumptions that expected specific field names or types. Resource exhaustion manifests as gradually degrading performance when data volume growth outpaces capacity planning, eventually causing pipeline failures during peak load periods.

10 Schema Evolution: The challenge of managing changes to data structure over time as source systems add fields, rename columns, or modify data types. Critical for ML systems because model training expects consistent feature schemas, and schema changes can silently break feature computation or introduce training-serving skew.

11 Dead Letter Queue: A separate storage system for messages that fail processing after exhausting retry attempts, enabling later analysis and reprocessing without blocking the main pipeline. Essential for ML systems where data loss is unacceptable—malformed training examples can be fixed and reprocessed, while failed inference requests can be debugged to improve system robustness.

Building on this failure analysis, effective error handling strategies ensure problems are contained and recovered from systematically. Implementing intelligent retry logic for transient errors, such as network interruptions or temporary service outages, requires exponential backoff strategies to avoid overwhelming recovering services. A simple linear retry that attempts reconnection every second would flood a struggling service with connection attempts, potentially preventing its recovery. Exponential backoff—retrying after 1 second, then 2 seconds, then 4 seconds, doubling with each attempt—gives services breathing room to recover while still maintaining persistence. Many ML systems employ the concept of dead letter queues11, using separate storage for data that fails processing after multiple retry attempts. This allows for later analysis and potential reprocessing of problematic data without blocking the main pipeline (Kleppmann 2016). A pipeline processing financial transactions that encounters malformed data can route it to a dead letter queue rather than losing critical records or halting all processing.

Moving beyond ad-hoc error handling, cascade failure prevention requires circuit breaker12 patterns and bulkhead isolation to prevent single component failures from propagating throughout the system. When a feature computation service fails, the circuit breaker pattern stops calling that service after detecting repeated failures, preventing the caller from waiting on timeouts that would cascade into its own failure.

12 Circuit Breaker: A reliability pattern that monitors service failures and automatically stops calling a failing service after a threshold is reached, preventing cascade failures and system overload. Like an electrical circuit breaker, it has three states: closed (normal operation), open (failing service blocked), and half-open (testing if service has recovered).

Automated recovery engineering implements sophisticated strategies beyond simple retry logic. Progressive timeout increases prevent overwhelming struggling services while maintaining rapid recovery for transient issues—initial requests timeout after 1 second, but after detecting service degradation, timeouts extend to 5 seconds, then 30 seconds, giving the service time to stabilize. Multi-tier fallback systems provide degraded service when primary data sources fail: serving slightly stale cached features when real-time computation fails, or using approximate features when exact computation times out. A recommendation system unable to compute user preferences from the past 30 days might fall back to preferences from the past 90 days, providing somewhat less accurate but still useful recommendations rather than failing entirely. Comprehensive alerting and escalation procedures ensure human intervention occurs when automated recovery fails, with sufficient diagnostic information captured during the failure to enable rapid debugging.

These concepts become concrete when considering a financial ML system ingesting market data. Error handling might involve falling back to slightly delayed data sources if real-time feeds fail, while simultaneously alerting the operations team to the issue. Dead letter queues capture malformed price updates for investigation rather than dropping them silently. Circuit breakers prevent the system from overwhelming a struggling market data provider during recovery. This comprehensive approach to error management ensures that downstream processes have access to reliable, high-quality data for training and inference tasks, even in the face of the inevitable failures that occur in distributed systems at scale.

Scalability Patterns

While quality and reliability ensure correct system operation, scalability addresses a different challenge: how systems evolve as data volumes grow and ML systems mature from prototypes to production services. Pipelines that work effectively at gigabyte scale often break at terabyte scale without architectural changes that enable distributed processing. Scalability involves designing systems that handle growing data volumes, user bases, and computational demands without requiring complete redesigns. The key insight is that scalability constraints manifest differently across pipeline stages, requiring different architectural patterns for ingestion, processing, and storage.

ML systems typically follow two primary ingestion patterns, each with distinct scalability characteristics. Batch ingestion involves collecting data in groups over a specified period before processing. This method proves appropriate when real-time data processing is not critical and data can be processed at scheduled intervals. A retail company might use batch ingestion to process daily sales data overnight, updating ML models for inventory prediction each morning. Batch processing enables efficient use of computational resources by amortizing startup costs across large data volumes—a job processing one terabyte might use 100 machines for 10 minutes, achieving better resource efficiency than maintaining always-on infrastructure.

In contrast to this scheduled approach, stream ingestion processes data in real-time as it arrives. This pattern proves crucial for applications requiring immediate data processing, scenarios where data loses value quickly, and systems that need to respond to events as they occur. A financial institution might use stream ingestion for real-time fraud detection, processing each transaction as it occurs to flag suspicious activity immediately. However, stream processing must handle backpressure13 when downstream systems cannot keep pace—when a sudden traffic spike produces data faster than processing capacity, the system must either buffer data (requiring memory), sample (losing some data), or push back to producers (potentially causing failures). Data freshness Service Level Agreements (SLAs)14 formalize these requirements, specifying maximum acceptable delays between data generation and availability for processing.

13 Backpressure: A flow control mechanism in streaming systems where downstream components signal upstream producers to slow data transmission when processing capacity is exceeded. Critical for preventing memory overflow and system crashes during traffic spikes, backpressure can be implemented through buffering, sampling, or direct producer throttling—each with different trade-offs between data loss and system stability.

14 Service Level Agreement (SLA): A formal contract specifying measurable service quality metrics like latency (95th percentile response time under 100ms), availability (99.9% uptime), and throughput (process 50,000 records/second). In ML systems, SLAs often include data freshness (features available within 5 minutes of event), model accuracy (precision above 85%), and inference latency (predictions returned under 200ms).

Recognizing the limitations of either approach alone, many modern ML systems employ hybrid approaches, combining both batch and stream ingestion to handle different data velocities and use cases. This flexibility allows systems to process both historical data in batches and real-time data streams, providing a comprehensive view of the data landscape. Production systems must balance cost versus latency trade-offs: real-time processing can cost 10-100x more than batch processing. This cost differential arises from several factors: streaming systems require always-on infrastructure rather than schedulable resources, maintain redundant processing for fault tolerance, need low-latency networking and storage, and cannot benefit from the economies of scale that batch processing achieves by amortizing startup costs across large data volumes. Techniques for managing streaming systems at scale, including backpressure handling and cost optimization, are detailed in Chapter 13: ML Operations.

Beyond ingestion patterns, distributed processing becomes necessary when single machines cannot handle data volumes or processing complexity. The challenge in distributed systems is that data must be partitioned across multiple computing resources, which introduces coordination overhead. Distributed coordination is limited by network round-trip times: local operations complete in microseconds while network coordination requires milliseconds, creating a 1000x latency difference. This constraint explains why operations requiring global coordination, like computing normalization statistics across 100 machines, create bottlenecks. Each partition computes local statistics quickly, but combining them requires information from all partitions, and the round-trip time for gathering results dominates total execution time.

Data locality becomes critical at this scale. Moving one terabyte of training data across the network takes 100+ seconds at 10GB/s, while local SSD access requires only 200 seconds at 5GB/s. This similar performance between network transfer and local storage drives ML system design toward compute-follows-data architectures where processing moves to data rather than data moving to processing. When processing nodes access local data at RAM speeds (50-200 GB/s) but must coordinate over networks limited to 1-10 GB/s, the bandwidth mismatch creates fundamental bottlenecks. Geographic distribution amplifies these challenges: cross-datacenter coordination must handle network latency (50-200ms between regions), partial failures during network partitions, and regulatory constraints preventing data from crossing borders. Understanding which operations parallelize easily versus those requiring expensive coordination determines system architecture and performance characteristics.

For our KWS system, these scalability patterns manifest concretely through quantitative capacity planning that dimensions infrastructure appropriately for workload requirements. Development uses batch processing on sample datasets to iterate on model architectures rapidly. Training scales to distributed processing across GPU clusters when model complexity or dataset size (23 million examples) exceeds single-machine capacity. Production deployment requires stream processing for real-time wake word detection on millions of concurrent devices. The system must handle traffic spikes when news events trigger synchronized usage—millions of users simultaneously asking about breaking news.

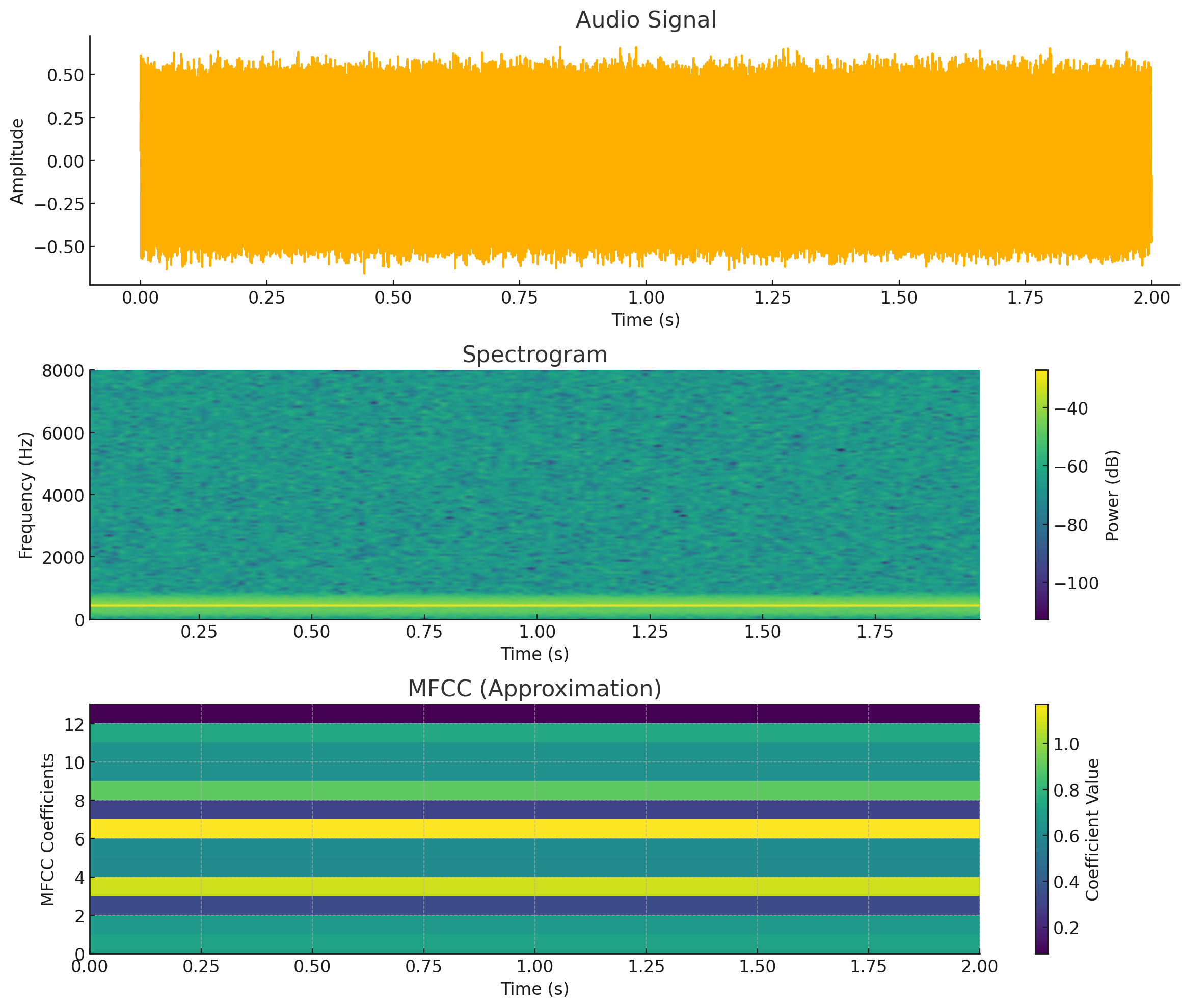

To make these scaling challenges concrete, consider the engineering calculations required to dimension our KWS training infrastructure. With 23 million audio samples averaging 1 second each at 16 kHz sampling rate (16-bit PCM15), raw storage requires approximately:

15 Pulse Code Modulation (PCM): The standard digital audio representation that samples analog waveforms at regular intervals and quantizes amplitudes to discrete values. 16-bit PCM at 16 kHz captures speech adequately for recognition tasks, storing each sample as a 16-bit integer (65,536 possible values) sampled 16,000 times per second, yielding 32 KB/second of uncompressed audio data.

\[ \text{Storage} = 23 \times 10^6 \text{ samples} \times 1 \text{ sec} \times 16,000 \text{ samples/sec} \times 2 \text{ bytes} = 736 \text{ GB} \]

Processing these samples into MFCC features (13 coefficients, 100 frames per second) reduces storage but increases computational requirements. Feature extraction on a modern CPU processes approximately 100x real-time (100 seconds of audio per second of computation), requiring:

\[\text{Processing time} = \frac{23 \times 10^6 \text{ sec of audio}}{100 \text{ speedup}} = 230,000 \text{ sec} \approx 64 \text{ hours on single core}\]

Distributing across 64 cores reduces this to one hour, demonstrating how parallelization enables rapid iteration. Network bandwidth becomes the bottleneck when transferring training data from storage to GPU servers—at 10 GB/s network throughput, transferring 736 GB requires 74 seconds, comparable to the training epoch time itself. This analysis reveals why high-throughput storage (NVMe SSDs achieving 5-7 GB/s) and network infrastructure (25-100 Gbps interconnects) prove essential for ML workloads where data movement time rivals computation time.

Scalability architecture enables this range from development through production while maintaining efficiency at each stage, with capacity planning ensuring infrastructure appropriately dimensions for workload requirements.

Governance Through Observability

Having addressed functional requirements through quality, reliability, and scalability, we turn to the governance pillar. The governance pillar manifests in pipelines as comprehensive observability—the ability to understand what data flows through the system, how it transforms, and who accesses it. Effective governance requires tracking data lineage from sources through transformations to final datasets, maintaining audit trails for compliance, and implementing access controls that enforce organizational policies. Unlike the other pillars that focus primarily on system functionality, governance ensures operations occur within legal, ethical, and business constraints while maintaining transparency and accountability.

Data lineage tracking captures the complete provenance of every dataset: which raw sources contributed data, what transformations were applied, when processing occurred, and what version of processing code executed. For ML systems, lineage becomes essential for debugging model behavior and ensuring reproducibility. When a model prediction proves incorrect, engineers need to trace back through the pipeline: which training data contributed to this prediction, what quality metrics did that data have, what transformations were applied, and can we recreate this exact scenario for investigation? Modern lineage systems like Apache Atlas, Amundsen, or commercial offerings instrument pipelines to automatically capture this flow. Each pipeline stage annotates data with metadata describing its provenance, creating an audit trail that enables both debugging and compliance.

Audit trails complement lineage by recording who accessed data and when. Regulatory frameworks like GDPR require organizations to demonstrate appropriate data handling, including tracking access to personal information. ML pipelines implement audit logging at data access points: when training jobs read datasets, when serving systems retrieve features, or when engineers query data for analysis. These logs typically capture user identity, timestamp, data accessed, and purpose. For a healthcare ML system, audit trails demonstrate compliance by showing that only authorized personnel accessed patient data, that access occurred for legitimate medical purposes, and that data wasn’t retained longer than allowed. The scale of audit logging in production systems can be substantial—a high-traffic recommendation system might generate millions of audit events daily—requiring efficient log storage and querying infrastructure.

Access controls enforce policies about who can read, write, or transform data at each pipeline stage. Rather than simple read/write permissions, ML systems often implement attribute-based access control where policies consider data sensitivity, user roles, and access context. A data scientist might access anonymized training data freely but require approval for raw data containing personal information. Production serving systems might read feature data but never write it, preventing accidental corruption. Access controls integrate with data catalogs that maintain metadata about data sensitivity, compliance requirements, and usage restrictions, enabling automated policy enforcement as data flows through pipelines.

Provenance metadata enables reproducibility essential for both debugging and compliance. When a model trained six months ago performed better than current models, teams need to recreate that training environment: exact data version, transformation parameters, and code versions. ML systems implement this through comprehensive metadata capture: training jobs record dataset checksums, transformation parameter values, random seeds for reproducibility, and code version hashes. Feature stores maintain historical feature values, enabling point-in-time reconstruction of training conditions. For our KWS system, this means tracking which version of forced alignment generated labels, what audio normalization parameters were applied, what synthetic data generation settings were used, and which crowdsourcing batches contributed to training data.

The integration of these governance mechanisms transforms pipelines from opaque data transformers into auditable, reproducible systems that can demonstrate appropriate data handling. This governance infrastructure proves essential not just for regulatory compliance but for maintaining trust in ML systems as they make increasingly consequential decisions affecting users’ lives.

With comprehensive pipeline architecture established—quality through validation and monitoring, reliability through graceful degradation, scalability through appropriate patterns, and governance through observability—we must now determine what actually flows through these carefully designed systems. The data sources we choose shape every downstream characteristic of our ML systems.

Strategic Data Acquisition

Data acquisition represents more than simply gathering training examples. It is a strategic decision that determines our system’s capabilities and limitations. The approaches we choose for sourcing training data directly shape our quality foundation, reliability characteristics, scalability potential, and governance compliance. Rather than treating data sources as independent options to be selected based on convenience or familiarity, we examine them as strategic choices that must align with our established framework requirements. Each sourcing strategy (existing datasets, web scraping, crowdsourcing, synthetic generation) offers different trade-offs across quality, cost, scale, and ethical considerations. The key insight is that no single approach satisfies all requirements; successful ML systems typically combine multiple strategies, balancing their complementary strengths against competing constraints.

Returning to our KWS system, data source decisions have profound implications across all our framework pillars, as demonstrated in our integrated case study in Section 1.3.3. Achieving 98% accuracy across diverse acoustic environments (quality pillar) requires representative data spanning accents, ages, and recording conditions. Maintaining consistent detection despite device variations (reliability pillar) demands data from varied hardware. Supporting millions of concurrent users (scalability pillar) requires data volumes that manual collection cannot economically provide. Protecting user privacy in always-listening systems (governance pillar) constrains collection methods and requires careful anonymization. These interconnected requirements demonstrate why acquisition strategy must be evaluated systematically rather than through ad-hoc source selection.

Data Source Evaluation and Selection

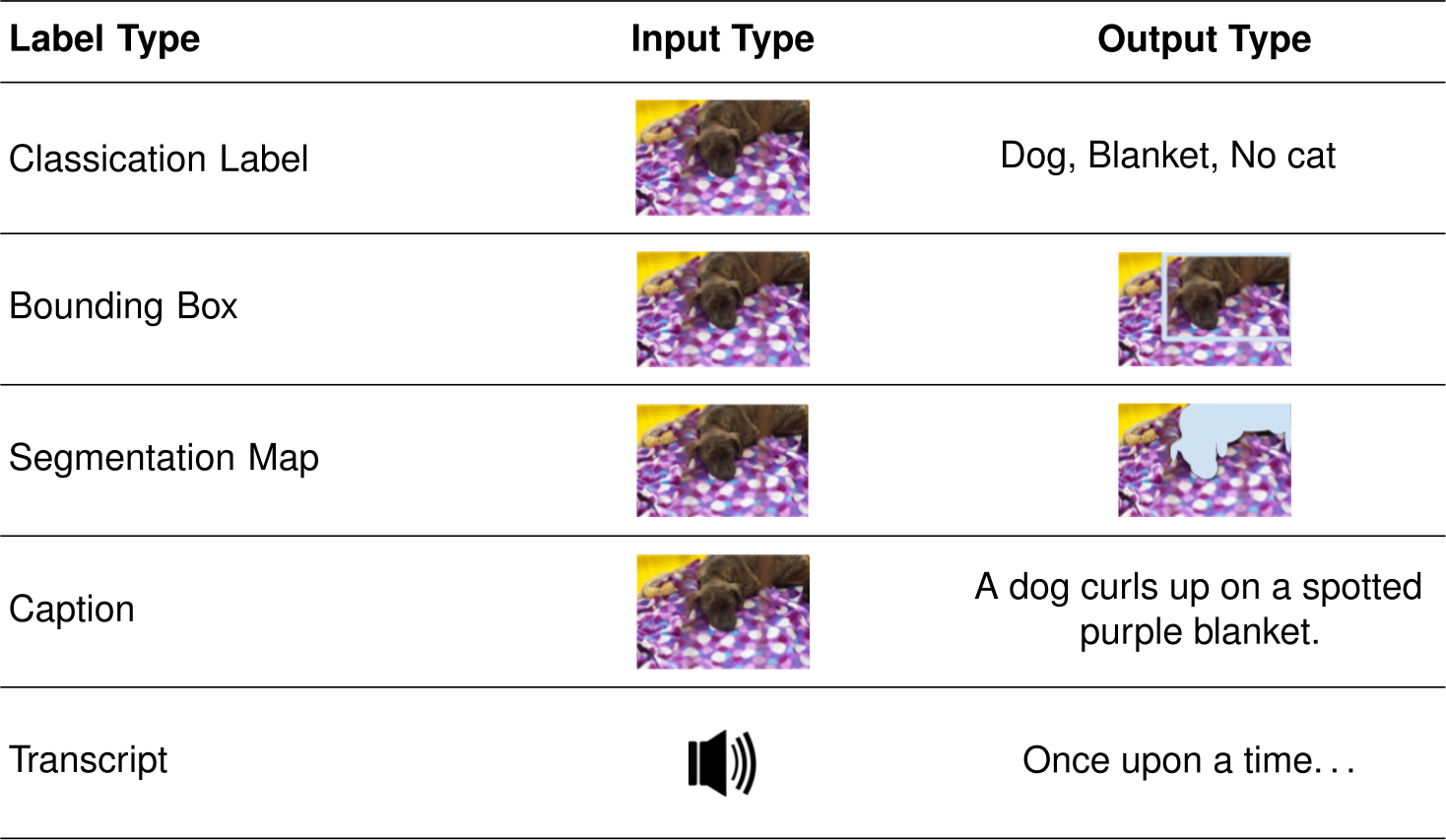

Having established the strategic importance of data acquisition, we begin with quality as the primary driver. When quality requirements dominate acquisition decisions, the choice between curated datasets, expert crowdsourcing, and controlled web scraping depends on the accuracy targets, domain expertise needed, and benchmark requirements that guide model development. The quality pillar demands understanding not just that data appears correct but that it accurately represents the deployment environment and provides sufficient coverage of edge cases that might cause failures.

Platforms like Kaggle and UCI Machine Learning Repository provide ML practitioners with ready-to-use datasets that can jumpstart system development. These pre-existing datasets are particularly valuable when building ML systems as they offer immediate access to cleaned, formatted data with established benchmarks. One of their primary advantages is cost efficiency, as creating datasets from scratch requires significant time and resources, especially when building production ML systems that need large amounts of high-quality training data. Building on this cost efficiency, many of these datasets, such as ImageNet, have become standard benchmarks in the machine learning community, enabling consistent performance comparisons across different models and architectures. For ML system developers, this standardization provides clear metrics for evaluating model improvements and system performance. The immediate availability of these datasets allows teams to begin experimentation and prototyping without delays in data collection and preprocessing.

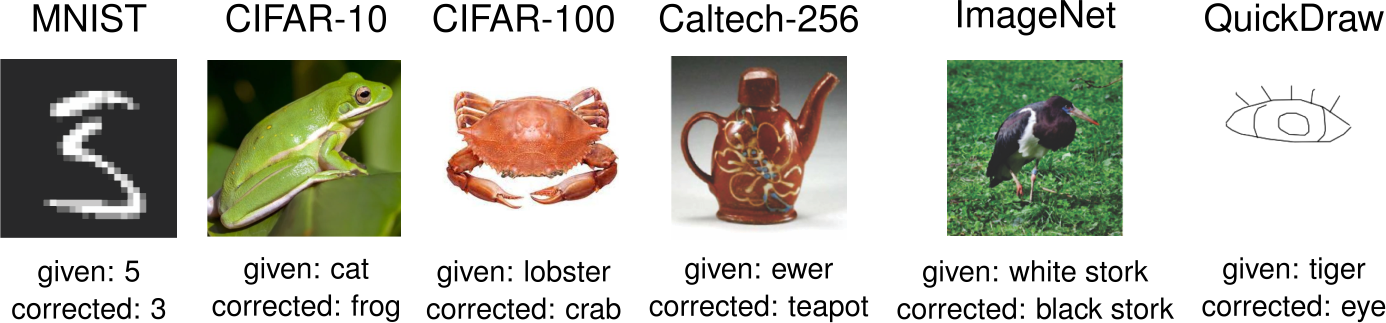

Despite these advantages, ML practitioners must carefully consider the quality assurance aspects of pre-existing datasets. For instance, the ImageNet dataset was found to have label errors on 3.4% of the validation set (Northcutt, Athalye, and Mueller 2021). While popular datasets benefit from community scrutiny that helps identify and correct errors and biases, most datasets remain “untended gardens” where quality issues can significantly impact downstream system performance if not properly addressed. As (Gebru et al. 2021) highlighted in her paper, simply providing a dataset without documentation can lead to misuse and misinterpretation, potentially amplifying biases present in the data.

Beyond quality concerns, supporting documentation accompanying existing datasets is invaluable, yet is often only present in widely-used datasets. Good documentation provides insights into the data collection process and variable definitions and sometimes even offers baseline model performances. This information not only aids understanding but also promotes reproducibility in research, a cornerstone of scientific integrity; currently, there is a crisis around improving reproducibility in machine learning systems (Pineau et al. 2021; Henderson et al. 2018). When other researchers have access to the same data, they can validate findings, test new hypotheses, or apply different methodologies, thus allowing us to build on each other’s work more rapidly. The challenges of data quality extend particularly to big data scenarios where volume and variety compound quality concerns (Gudivada, Rao, et al. 2017), requiring systematic approaches to quality validation at scale.

Even with proper documentation, understanding the context in which the data was collected becomes necessary. Researchers must avoid potential overfitting when using popular datasets such as ImageNet (Beyer et al. 2020), which can lead to inflated performance metrics. Sometimes, these datasets do not reflect the real-world data.

Central to these contextual concerns, a key consideration for ML systems is how well pre-existing datasets reflect real-world deployment conditions. Relying on standard datasets can create a concerning disconnect between training and production environments. This misalignment becomes particularly problematic when multiple ML systems are trained on the same datasets (Figure 6), potentially propagating biases and limitations throughout an entire ecosystem of deployed models.

For our KWS system, pre-existing datasets like Google’s Speech Commands (Warden 2018) provide essential starting points, offering carefully curated voice samples for common wake words. These datasets enable rapid prototyping and establish baseline performance metrics. However, evaluating them against our quality requirements immediately reveals coverage gaps: limited accent diversity, predominantly quiet recording environments, and support for only major languages. Quality-driven acquisition strategy recognizes these limitations and plans complementary approaches to address them, demonstrating how framework-based thinking guides source selection beyond simply choosing available datasets.

Scalability and Cost Optimization

While quality-focused approaches excel at creating accurate, well-curated datasets, they face inherent scaling limitations. When scale requirements dominate—needing millions or billions of examples that manual curation cannot economically provide—web scraping and synthetic generation offer paths to massive datasets. The scalability pillar demands understanding the economic models underlying different acquisition strategies: cost per labeled example, throughput limitations, and how these scale with data volume. What proves cost-effective at thousand-example scale often becomes prohibitive at million-example scale, while approaches that require high setup costs amortize favorably across large volumes.

Web scraping offers a powerful approach to gathering training data at scale, particularly in domains where pre-existing datasets are insufficient. This automated technique for extracting data from websites has become essential for modern ML system development, enabling teams to build custom datasets tailored to their specific needs. When human-labeled data is scarce, web scraping demonstrates its value. Consider computer vision systems: major datasets like ImageNet and OpenImages were built through systematic web scraping, significantly advancing the field of computer vision.

Expanding beyond these computer vision applications, the impact of web scraping extends well beyond image recognition systems. In natural language processing, web-scraped data has enabled the development of increasingly sophisticated ML systems. Large language models, such as ChatGPT and Claude, rely on vast amounts of text scraped from the public internet and media to learn language patterns and generate responses (Groeneveld et al. 2024). Similarly, specialized ML systems like GitHub’s Copilot demonstrate how targeted web scraping, in this case of code repositories, can create powerful domain-specific assistants (Chen et al. 2021).

Building on these foundational developments, production ML systems often require continuous data collection to maintain relevance and performance. Web scraping facilitates this by gathering structured data like stock prices, weather patterns, or product information for analytical applications. This continuous collection introduces unique challenges for ML systems. Data consistency becomes crucial, as variations in website structure or content formatting can disrupt the data pipeline and affect model performance. Proper data management through databases or warehouses becomes essential not just for storage, but for maintaining data quality and enabling model updates.

However, alongside these powerful capabilities, web scraping presents several challenges that ML system developers must carefully consider. Legal and ethical constraints can limit data collection, as not all websites permit scraping, and violating these restrictions can have serious consequences. When building ML systems with scraped data, teams must carefully document data sources and ensure compliance with terms of service and copyright laws. Privacy considerations become important when dealing with user-generated content, often requiring systematic anonymization procedures.

Complementing these legal and ethical constraints, technical limitations also affect the reliability of web-scraped training data. Rate limiting by websites can slow data collection, while the dynamic nature of web content can introduce inconsistencies that impact model training. As shown in Figure 7, web scraping can yield unexpected or irrelevant data, for example, historical images appearing in contemporary image searches, that can pollute training datasets and degrade model performance. These issues highlight the importance of thorough data validation and cleaning processes in ML pipelines built on web-scraped data.

Crowdsourcing offers another scalable approach, leveraging distributed human computation to accelerate dataset creation. Platforms like Amazon Mechanical Turk exemplify how crowdsourcing facilitates this process by distributing annotation tasks to a global workforce. This enables rapid collection of labels for complex tasks such as sentiment analysis, image recognition, and speech transcription, significantly expediting the data preparation phase. One of the most impactful examples of crowdsourcing in machine learning is the creation of the ImageNet dataset. ImageNet, which revolutionized computer vision, was built by distributing image labeling tasks to contributors via Amazon Mechanical Turk. The contributors categorized millions of images into thousands of classes, enabling researchers to train and benchmark models for a wide variety of visual recognition tasks.

Building on this massive labeling effort, the dataset’s availability spurred advancements in deep learning, including the breakthrough AlexNet model in 2012 (Krizhevsky, Sutskever, and Hinton 2017) that demonstrated the power of large-scale neural networks and showed how large-scale, crowdsourced datasets could drive innovation. ImageNet’s success highlights how leveraging a diverse group of contributors for annotation can enable machine learning systems to achieve unprecedented performance. Extending beyond academic research, another example of crowdsourcing’s potential is Google’s Crowdsource, a platform where volunteers contribute labeled data to improve AI systems in applications like language translation, handwriting recognition, and image understanding.

Beyond these static dataset creation efforts, crowdsourcing has also been instrumental in applications beyond traditional dataset annotation. For instance, the navigation app Waze uses crowdsourced data from its users to provide real-time traffic updates, route suggestions, and incident reporting. These diverse applications highlight one of the primary advantages of crowdsourcing: its scalability. By distributing microtasks to a large audience, projects can process enormous volumes of data quickly and cost-effectively. This scalability is particularly beneficial for machine learning systems that require extensive datasets to achieve high performance. The diversity of contributors introduces a wide range of perspectives, cultural insights, and linguistic variations, enriching datasets and improving models’ ability to generalize across populations.

Complementing this scalability advantage, flexibility is a key benefit of crowdsourcing. Tasks can be adjusted dynamically based on initial results, allowing for iterative improvements in data collection. For example, Google’s reCAPTCHA system uses crowdsourcing to verify human users while simultaneously labeling datasets for training machine learning models.

Moving beyond human-generated data entirely, synthetic data generation represents the ultimate scalability solution, creating unlimited training examples through algorithmic generation rather than manual collection. This approach changes the economics of data acquisition by removing human labor from the equation. As Figure 8 illustrates, synthetic data combines with historical datasets to create larger, more diverse training sets that would be impractical to collect manually.

Building on this foundation, advancements in generative modeling techniques have greatly enhanced the quality of synthetic data. Modern AI systems can produce data that closely resembles real-world distributions, making it suitable for applications ranging from computer vision to natural language processing. For example, generative models have been used to create synthetic images for object recognition tasks, producing diverse datasets that closely match real-world images. Similarly, synthetic data has been leveraged to simulate speech patterns, enhancing the robustness of voice recognition systems.

Beyond these quality improvements, synthetic data has become particularly valuable in domains where obtaining real-world data is either impractical or costly. The automotive industry has embraced synthetic data to train autonomous vehicle systems; there are only so many cars you can physically crash to get crash-test data that might help an ML system know how to avoid crashes in the first place. Capturing real-world scenarios, especially rare edge cases such as near-accidents or unusual road conditions, is inherently difficult. Synthetic data allows researchers to simulate these scenarios in a controlled virtual environment, ensuring that models are trained to handle a wide range of conditions. This approach has proven invaluable for advancing the capabilities of self-driving cars.

Complementing these safety-critical applications, another important application of synthetic data lies in augmenting existing datasets. Introducing variations into datasets enhances model robustness by exposing the model to diverse conditions. For instance, in speech recognition, data augmentation techniques like SpecAugment (Park et al. 2019) introduce noise, shifts, or pitch variations, enabling models to generalize better across different environments and speaker styles. This principle extends to other domains as well, where synthetic data can fill gaps in underrepresented scenarios or edge cases.

For our KWS system, the scalability pillar drove the need for 23 million training examples across 50 languages—a volume that manual collection cannot economically provide. Web scraping supplements baseline datasets with diverse voice samples from video platforms. Crowdsourcing enables targeted collection for underrepresented languages. Synthetic data generation fills remaining gaps through speech synthesis (Werchniak et al. 2021) and audio augmentation, creating unlimited wake word variations across acoustic environments, speaker characteristics, and background conditions. This comprehensive multi-source strategy demonstrates how scalability requirements shape acquisition decisions, with each approach contributing specific capabilities to the overall data ecosystem.

Reliability Across Diverse Conditions

Beyond quality and scale considerations, the reliability pillar addresses a critical question: will our collected data enable models that perform consistently across the deployment environment’s full range of conditions? A dataset might achieve high quality by established metrics yet fail to support reliable production systems if it doesn’t capture the diversity encountered during deployment. Coverage requirements for robust models extend beyond simple volume to encompass geographic diversity, demographic representation, temporal variation, and edge case inclusion that stress-test model behavior.

Understanding coverage requirements requires examining potential failure modes. Geographic bias occurs when training data comes predominantly from specific regions, causing models to underperform in other areas. A study of image datasets found significant geographic skew, with image recognition systems trained on predominantly Western imagery performing poorly on images from other regions (Wang et al. 2019). Demographic bias emerges when training data doesn’t represent the full user population, potentially causing discriminatory outcomes. Temporal variation matters when phenomena change over time—a fraud detection model trained only on historical data may fail against new fraud patterns. Edge case collection proves particularly challenging yet critical, as rare scenarios often represent high-stakes situations where failures cause the most harm.

The challenge of edge case collection becomes apparent in autonomous vehicle development. While normal driving conditions are easy to capture through test fleet operation, near-accidents, unusual pedestrian behavior, or rare weather conditions occur infrequently. Synthetic data generation helps address this by simulating rare scenarios, but validating that synthetic examples accurately represent real edge cases requires careful engineering. Some organizations employ targeted data collection where test drivers deliberately create edge cases or where engineers identify scenarios from incident reports that need better coverage.

Dataset convergence, illustrated in Figure 6 earlier, represents another reliability challenge. When multiple systems train on identical datasets, they inherit identical blind spots and biases. An entire ecosystem of models may fail on the same edge cases because all trained on data with the same coverage gaps. This systemic risk motivates diverse data sourcing strategies where each organization collects supplementary data beyond common benchmarks, ensuring their models develop different strengths and weaknesses rather than shared failure modes.

For our KWS system, reliability manifests as consistent wake word detection across acoustic environments from quiet bedrooms to noisy streets, across accents from various geographic regions, and across age ranges from children to elderly speakers. The data sourcing strategy explicitly addresses these diversity requirements: web scraping captures natural speech variation from diverse video sources, crowdsourcing targets underrepresented demographics and environments, and synthetic data systematically explores the parameter space of acoustic conditions. Without this deliberate diversity in sourcing, the system might achieve high accuracy on test sets while failing unreliably in production deployment.

Governance and Ethics in Sourcing