Security & Privacy

DALL·E 3 Prompt: An illustration on privacy and security in machine learning systems. The image shows a digital landscape with a network of interconnected nodes and data streams, symbolizing machine learning algorithms. In the foreground, there’s a large lock superimposed over the network, representing privacy and security. The lock is semi-transparent, allowing the underlying network to be partially visible. The background features binary code and digital encryption symbols, emphasizing the theme of cybersecurity. The color scheme is a mix of blues, greens, and grays, suggesting a high-tech, digital environment.

Purpose

Why do privacy and security determine whether machine learning systems achieve widespread adoption and societal trust?

Machine learning systems require unprecedented access to personal data, institutional knowledge, and behavioral patterns to function effectively, creating tension between utility and protection that determines societal acceptance. Unlike traditional software that processes data transiently, ML systems learn from sensitive information and embed patterns into persistent models that can inadvertently reveal private details. This capability creates systemic risks extending beyond individual privacy violations to threaten institutional trust, competitive advantages, and democratic governance. Success of machine learning deployment across critical domains (healthcare, finance, education, and public services) depends entirely on establishing robust security and privacy foundations enabling beneficial use while preventing harmful exposure. Without these protections, even the most capable systems remain unused due to legal, ethical, and practical concerns. Understanding privacy and security principles enables engineers to design systems achieving both technical excellence and societal acceptance.

Distinguish between security and privacy concerns in machine learning systems using formal definitions and threat models

Analyze historical security incidents to extract principles applicable to ML system vulnerabilities

Classify ML threats across model, data, and hardware attack surfaces

Evaluate privacy-preserving techniques including differential privacy, federated learning, and synthetic data generation for specific use cases

Design layered defense architectures that integrate data protection, model security, and hardware trust mechanisms

Implement basic security controls including access management, encryption, and input validation for ML systems

Assess trade-offs between security measures and system performance using quantitative cost-benefit analysis

Apply the three-phase security roadmap to prioritize defenses based on organizational threat models and risk tolerance

Security and Privacy in ML Systems

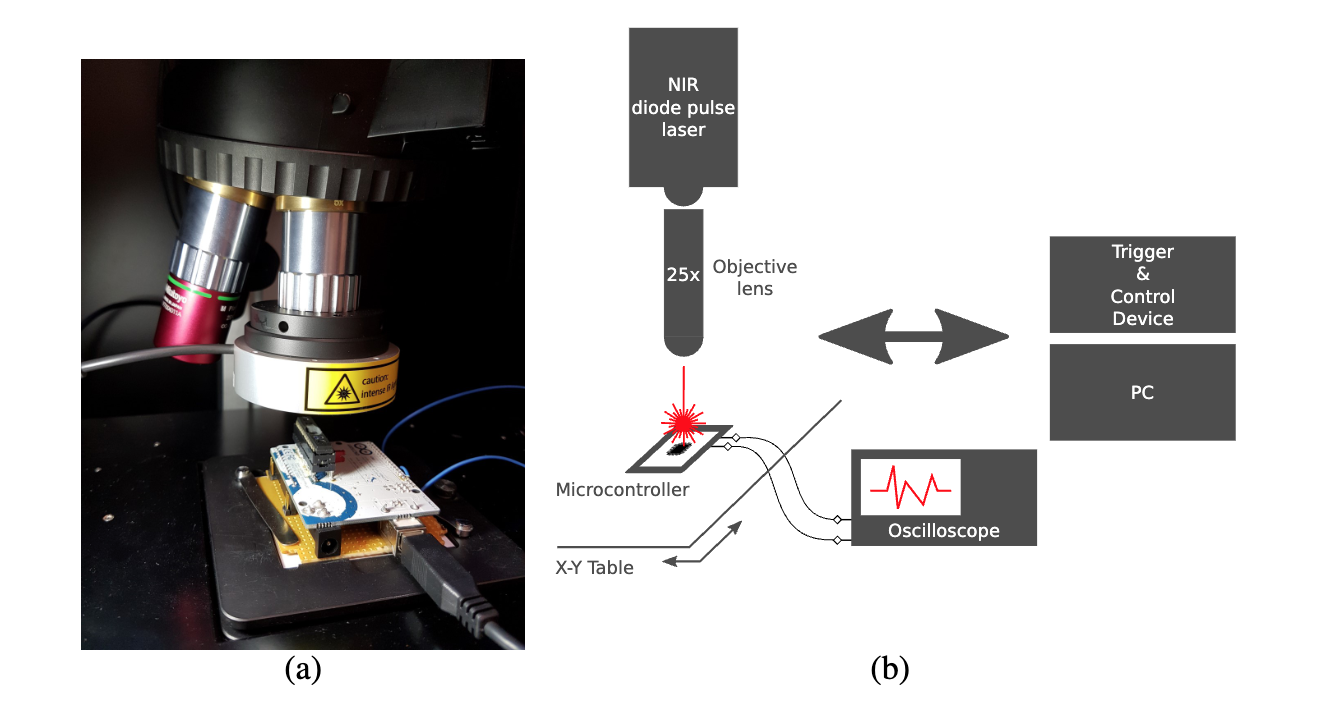

The shift from centralized training architectures to distributed, adaptive machine learning systems has altered the threat landscape and security requirements for modern ML infrastructure. Contemporary machine learning systems, as examined in Chapter 14: On-Device Learning, increasingly operate across heterogeneous computational environments spanning edge devices, federated networks, and hybrid cloud deployments. This architectural evolution enables new capabilities in adaptive intelligence but introduces attack vectors and privacy vulnerabilities that traditional cybersecurity frameworks cannot adequately address.

Machine learning systems exhibit different security characteristics compared to conventional software applications. Traditional software systems process data transiently and deterministically, whereas machine learning systems extract and encode patterns from training data into persistent model parameters. This learned knowledge representation creates unique vulnerabilities where sensitive information can be inadvertently memorized and later exposed through model outputs or systematic interrogation. Such risks manifest across domains from healthcare systems that may leak patient information to proprietary models that can be reverse-engineered through strategic query patterns, threatening both individual privacy and organizational intellectual property.

The architectural complexity of machine learning systems, as detailed in Chapter 2: ML Systems, compounds these security challenges through multi-layered attack surfaces. Contemporary ML deployments include data ingestion pipelines, distributed training infrastructure, model serving systems, and continuous monitoring frameworks. Each architectural component introduces distinct vulnerabilities while privacy concerns affect the entire computational stack. The distributed nature of modern deployments, with continuous adaptation at edge nodes and federated coordination protocols, expands the attack surface while complicating comprehensive security implementation.

Addressing these challenges requires systematic approaches that integrate security and privacy considerations throughout the machine learning system lifecycle. This chapter establishes the foundations and methodologies necessary for engineering ML systems that achieve both computational effectiveness and trustworthy operation. We examine the application of established security principles to machine learning contexts, identify threat models specific to learning systems, and present comprehensive defense strategies that include data protection mechanisms, secure model architectures, and hardware-based security implementations.

Our investigation proceeds through four interconnected frameworks. We begin by establishing distinctions between security and privacy within machine learning contexts, then examine evidence from historical security incidents to inform contemporary threat assessment. We analyze vulnerabilities that emerge from the learning process itself, before presenting layered defense architectures that span cryptographic data protection, adversarial-robust model design, and hardware security mechanisms. Throughout this analysis, we emphasize implementation guidance that enables practitioners to develop systems meeting both technical performance requirements and the trust standards necessary for societal deployment.

Foundational Concepts and Definitions

Security and privacy are core concerns in machine learning system design, but they are often misunderstood or conflated. Both aim to protect systems and data, yet they do so in different ways, address different threat models, and require distinct technical responses. For ML systems, distinguishing between the two helps guide the design of robust and responsible infrastructure.

Security Defined

Security in machine learning focuses on defending systems from adversarial behavior. This includes protecting model parameters, training pipelines, deployment infrastructure, and data access pathways from manipulation or misuse.

Example: A facial recognition system deployed in public transit infrastructure may be targeted with adversarial inputs that cause it to misidentify individuals or fail entirely. This is a runtime security vulnerability that threatens both accuracy and system availability.

Privacy Defined

While security addresses adversarial threats, privacy focuses on limiting the exposure and misuse of sensitive information within ML systems. This includes protecting training data, inference inputs, and model outputs from leaking personal or proprietary information, even when systems operate correctly and no explicit attack is taking place.

Example: A language model trained on medical transcripts may inadvertently memorize snippets of patient conversations. If a user later triggers this content through a public-facing chatbot, it represents a privacy failure, even in the absence of an attacker.

Security versus Privacy

Although they intersect in some areas (encrypted storage supports both), security and privacy differ in their objectives, threat models, and typical mitigation strategies. Table 1 below summarizes these distinctions in the context of machine learning systems.

| Aspect | Security | Privacy |
|---|---|---|
| Primary Goal | Prevent unauthorized access or disruption | Limit exposure of sensitive information |
| Threat Model | Adversarial actors (external or internal) | Honest-but-curious observers or passive leaks |
| Typical Concerns | Model theft, poisoning, evasion attacks | Data leakage, re-identification, memorization |
| Example Attack | Adversarial inputs cause misclassification | Model inversion reveals training data |
| Representative Defenses | Access control, adversarial training | Differential privacy, federated learning |
| Relevance to Regulation | Emphasized in cybersecurity standards | Central to data protection laws (e.g., GDPR) |

Security-Privacy Interactions and Trade-offs

Security and privacy are deeply interrelated but not interchangeable. A secure system helps maintain privacy by restricting unauthorized access to models and data. Privacy-preserving designs can, in turn, improve security by reducing the attack surface. For example, minimizing the retention of sensitive data reduces the risk of exposure if a system is compromised.

However, they can also be in tension. Techniques like differential privacy1 reduce memorization risks but may lower model utility. Similarly, encryption enhances security but may obscure transparency and auditability, complicating privacy compliance. In machine learning systems, designers must reason about these trade-offs holistically. Systems that serve sensitive domains, including healthcare, finance, and public safety, must simultaneously protect against both misuse (security) and overexposure (privacy). Understanding the boundaries between these concerns is essential for building systems that are performant, trustworthy, and legally compliant.

1 Differential Privacy Origins: Cynthia Dwork coined the term differential privacy at Microsoft Research in 2006, but the concept emerged from her frustration with the “anonymization myth” (the false belief that removing names from data guaranteed privacy). Her groundbreaking insight was that privacy should be mathematically provable, not just plausible, leading to the rigorous framework that now protects billions of users’ data in products from Apple to Google.
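The utility cost of differential privacy mentioned above can be made concrete with a small sketch. It assumes the standard Laplace mechanism applied to a counting query (sensitivity 1). The function names `laplace_noise` and `private_count` and the chosen epsilon values are illustrative assumptions, not part of this chapter's material.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count satisfying epsilon-differential privacy.

    A counting query changes by at most 1 when one record is added or
    removed, so its sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)          # fixed seed only to make the demo repeatable
    ages = list(range(100))         # hypothetical dataset
    for epsilon in (0.1, 1.0, 10.0):
        noisy = private_count(ages, lambda a: a < 40, epsilon, rng)
        print(f"epsilon={epsilon:>4}: noisy count = {noisy:.2f} (true count = 40)")
```

Smaller epsilon values give stronger privacy but add more noise on average, which is the utility trade-off the paragraph describes.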

Learning from Security Breaches

Having established the conceptual foundations of security and privacy, we now examine how these principles manifest in real-world systems through landmark security incidents. These historical cases provide concrete illustrations of the abstract concepts we’ve defined, showing how security vulnerabilities emerge and propagate through complex systems. More importantly, they reveal universal patterns (supply chain compromise, insufficient isolation, and weaponized endpoints) that directly apply to modern machine learning deployments.

Valuable lessons can be drawn from well-known security breaches across a range of computing systems. These incidents demonstrate how weaknesses in system design can lead to widespread, and sometimes physical, consequences. Although the examples discussed in this section do not all involve machine learning directly, they provide important insights for securing machine learning applications, which increasingly operate across cloud, edge, and embedded environments.

Supply Chain Compromise: Stuxnet

In 2010, security researchers discovered a highly sophisticated computer worm later named Stuxnet2, which targeted industrial control systems used in Iran’s Natanz nuclear facility (Farwell and Rohozinski 2011). Stuxnet exploited four previously unknown “zero-day”3 vulnerabilities in Microsoft Windows, allowing it to spread undetected through networked and isolated systems.

2 Stuxnet Discovery: Stuxnet was first detected by VirusBlokAda, a small Belarusian antivirus company, when their client computers began crashing unexpectedly. What seemed like a routine malware investigation turned into one of the most significant cybersecurity discoveries in history: the first confirmed cyberweapon designed to cause physical destruction.

3 Zero-Day Term Origin: The term “zero-day” originated in software piracy circles, referring to the “zero days” since a program’s release when pirated copies appeared. In security, it describes the “zero days” defenders have to patch a vulnerability before attackers exploit it, representing the ultimate race between attack and defense.

4 Air-Gapped Systems: Air-gapped systems are networks physically isolated from external connections, originally developed for military systems in the 1960s. Despite seeming impenetrable, studies show 90% of air-gapped systems can be breached through supply chain compromise, infected removable media, or hidden channels (acoustic, electromagnetic, thermal) (Farwell and Rohozinski 2011).

5 USB Attacks: USB interfaces, introduced in 1996, became a primary attack vector for crossing air gaps. The 2008 Operation Olympic Games reportedly used infected USB drives to penetrate secure facilities, with some estimates suggesting 60% of organizations remain vulnerable to USB-based attacks (Farwell and Rohozinski 2011).

Unlike typical malware designed to steal information or perform espionage, Stuxnet was engineered to cause physical damage. Its objective was to disrupt uranium enrichment by sabotaging the centrifuges used in the process. Despite the facility being air-gapped4 from external networks, the malware is believed to have entered the system via an infected USB device5, demonstrating how physical access can compromise isolated environments.

The worm specifically targeted programmable logic controllers (PLCs), industrial computers that automate electromechanical processes such as controlling the speed of centrifuges. By exploiting vulnerabilities in the Windows operating system and the Siemens Step7 software used to program the PLCs, Stuxnet achieved highly targeted, real-world disruption. This represents a landmark in cybersecurity, demonstrating how malicious software can bridge the digital and physical worlds to manipulate industrial infrastructure.

The lessons from Stuxnet directly apply to modern ML systems. Training pipelines and model repositories face persistent supply chain risks analogous to those exploited by Stuxnet. Just as Stuxnet compromised industrial systems through infected USB devices and software vulnerabilities, modern ML systems face multiple attack vectors: compromised dependencies (malicious packages in PyPI/conda repositories), malicious training data (poisoned datasets on HuggingFace, Kaggle), backdoored model weights (trojan models in model repositories), and tampered hardware drivers (compromised NVIDIA CUDA libraries, firmware backdoors in AI accelerators).

A concrete ML attack scenario illustrates these risks: an attacker uploads a backdoored image classification model to a popular model repository, trained to misclassify specific patterns while maintaining normal accuracy on clean data. When deployed in autonomous vehicles, this backdoored model correctly identifies most objects but fails to detect pedestrians wearing specific patterns, creating safety risks. The attack propagates through automated model deployment pipelines, affecting thousands of vehicles before detection.

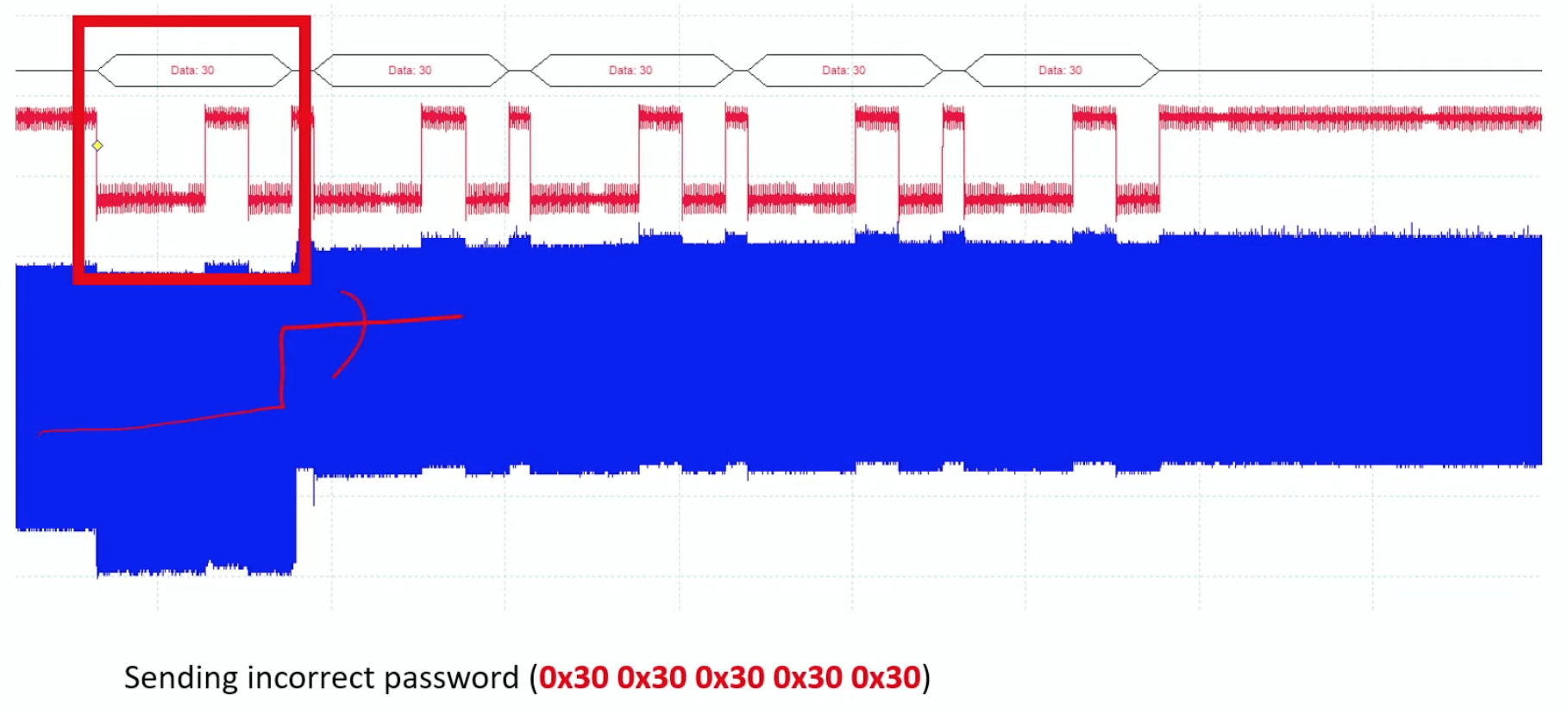

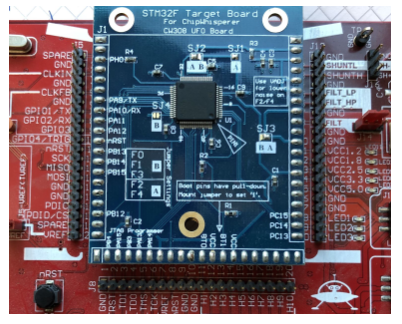

Defending against such supply chain attacks requires end-to-end security measures: (1) cryptographic verification that signs all model artifacts, datasets, and dependencies and checks those signatures before use; (2) provenance tracking that maintains immutable logs of all training data sources, code versions, and infrastructure used; (3) integrity validation that runs automated scans for model backdoors, dependency vulnerabilities, and dataset poisoning before deployment; (4) air-gapped training that isolates sensitive model training in secure environments with controlled dependency management. Figure 1 illustrates how these supply chain compromise patterns apply across both industrial and ML systems.
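The cryptographic verification step can be sketched as follows. This is a minimal illustration, not a production design: it records SHA-256 digests of artifacts in a manifest and authenticates the manifest with an HMAC under a shared secret. A real pipeline would more likely use asymmetric signatures so that verifiers never hold the signing key. The function names and the manifest layout are assumptions made for this example.

```python
import hashlib
import hmac
import json
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large model weights fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths) -> dict:
    """Map each artifact's file name to its SHA-256 digest."""
    return {p.name: sha256_file(p) for p in paths}


def sign_manifest(manifest: dict, key: bytes) -> str:
    """Authenticate the manifest with HMAC-SHA256 (stand-in for a signature)."""
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_artifact(path: Path, manifest: dict, tag: str, key: bytes) -> bool:
    """Accept an artifact only if the manifest is authentic and the hash matches."""
    if not hmac.compare_digest(sign_manifest(manifest, key), tag):
        return False  # manifest was altered or signed with a different key
    recorded = manifest.get(path.name)
    if recorded is None:
        return False  # artifact is not listed in the manifest
    return hmac.compare_digest(recorded, sha256_file(path))
```

A deployment pipeline following this pattern would call `verify_artifact` before loading any model weights and refuse to proceed on a `False` result.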

Insufficient Isolation: Jeep Cherokee Hack

The 2015 Jeep Cherokee hack demonstrated how connectivity in everyday products creates new vulnerabilities. Security researchers publicly demonstrated a remote cyberattack on a Jeep Cherokee that exposed important vulnerabilities in automotive system design (Miller and Valasek 2015; Miller 2019). Conducted as a controlled experiment, the researchers exploited a vulnerability in the vehicle’s Uconnect entertainment system, which was connected to the internet via a cellular network. By gaining remote access to this system, they sent commands that affected the vehicle’s engine, transmission, and braking systems without physical access to the car.

This demonstration served as a wake-up call for the automotive industry, highlighting the risks posed by the growing connectivity of modern vehicles. Traditionally isolated automotive control systems, such as those managing steering and braking, were shown to be vulnerable when exposed through externally accessible software interfaces. The ability to remotely manipulate safety-critical functions raised serious concerns about passenger safety, regulatory oversight, and industry best practices.

The incident also led to a recall of over 1.4 million vehicles to patch the vulnerability6, highlighting the need for manufacturers to prioritize cybersecurity in their designs. The National Highway Traffic Safety Administration (NHTSA)7 issued guidelines for automakers to improve vehicle cybersecurity, including recommendations for secure software development practices and incident response protocols.

6 Automotive Cybersecurity Recalls: The Jeep Cherokee hack triggered the first-ever automotive cybersecurity recall in 2015. Since then, cybersecurity recalls have affected over 15 million vehicles globally, costing manufacturers an estimated $2.4 billion in remediation efforts and spurring new regulations.

7 NHTSA Cybersecurity Guidance: NHTSA, established in 1970, issued its first cybersecurity guidance in 2016 following the Jeep hack. The agency now mandates that connected vehicles include cybersecurity by design, affecting 99% of new vehicles sold in the US that contain 100+ onboard computers.

The Jeep Cherokee hack offers critical lessons for ML system security. Connected ML systems require strict isolation between external interfaces and safety-critical components, as this incident dramatically illustrated. The architectural flaw (allowing external interfaces to reach safety-critical functions) directly threatens modern ML deployments where inference APIs often connect to physical actuators or critical systems.

Modern ML attack vectors exploit these same isolation failures across multiple domains: (1) Autonomous vehicles where compromised infotainment system ML APIs (voice recognition, navigation) gain access to perception models controlling steering and braking; (2) Smart home systems where exploited voice assistant wake-word detection models provide backdoor access to security systems, door locks, and cameras; (3) Industrial IoT where compromised edge ML inference endpoints (predictive maintenance, anomaly detection) manipulate actuator control logic in manufacturing systems; (4) Medical devices where attacked diagnostic ML models influence treatment recommendations and drug delivery systems.

Consider a concrete attack scenario: a smart home voice assistant processes user commands through cloud-based NLP models. An attacker exploits a vulnerability in the voice processing API to inject malicious commands that bypass authentication. Through insufficient network segmentation, the compromised voice system gains access to the home security ML model responsible for facial recognition door unlocking, allowing unauthorized physical access.

Effective defense requires comprehensive isolation architecture: (1) network segmentation that isolates ML inference networks from actuator control networks using firewalls and VPNs; (2) API authentication that requires cryptographic authentication for all ML API calls, combined with rate limiting and anomaly detection; (3) privilege separation that runs inference models in sandboxed environments with minimal system permissions; (4) fail-safe defaults under which actuator control logic reverts to safe states (locked doors, stopped motors) when ML systems detect anomalies or lose connectivity; (5) monitoring that provides real-time logging and alerting for suspicious ML API usage patterns.
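Three of these controls (API authentication, rate limiting, and fail-safe defaults) can be combined in a single gate in front of an actuator. The sketch below is illustrative only: `TokenBucket`, `authorize_command`, the `SAFE_STATE` value, and the confidence threshold are hypothetical names and parameters chosen for this example, and HMAC with a shared key stands in for whatever authentication scheme a real deployment uses.

```python
import hashlib
import hmac
from dataclasses import dataclass, field

SAFE_STATE = "locked"  # the state the actuator falls back to on any doubt


@dataclass
class TokenBucket:
    """Simple token-bucket rate limiter driven by caller-supplied timestamps."""
    capacity: float
    refill_per_sec: float
    tokens: float = field(init=False)
    last: float = field(default=0.0, init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start with a full bucket

    def allow(self, now: float) -> bool:
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def authorize_command(body: bytes, tag: str, key: bytes, bucket: TokenBucket,
                      now: float, model_confidence: float,
                      min_confidence: float = 0.99) -> str:
    """Return "unlocked" only if every check passes; otherwise the safe state."""
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):
        return SAFE_STATE  # unauthenticated request
    if not bucket.allow(now):
        return SAFE_STATE  # caller exceeded its request budget
    if model_confidence < min_confidence:
        return SAFE_STATE  # model is unsure, so fail closed
    return "unlocked"
```

The design choice worth noting is that every failure path returns the same safe state, so an attacker who bypasses one check still cannot move the actuator into an unsafe position.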

Weaponized Endpoints: Mirai Botnet

While the Jeep Cherokee hack demonstrated targeted exploitation of connected systems, the Mirai botnet revealed how poor security practices could be weaponized at massive scale. In 2016, the Mirai botnet8 launched some of the most disruptive distributed denial-of-service (DDoS)9 attacks in internet history (Antonakakis et al. 2017). The botnet infected hundreds of thousands of networked devices, including digital cameras, DVRs, and other consumer electronics. These devices, often deployed with factory-default usernames and passwords, were easily compromised by the Mirai malware and enlisted into a large-scale attack network.

8 Mirai Botnet Scale: At its peak, Mirai controlled over 600,000 infected IoT devices, generating peak attacks of 1.2 Tbps (1,200 Gbps) against OVH hosting provider, making it one of the first terabit-scale DDoS attacks. The attack revealed that IoT devices with default credentials (admin/admin, root/12345) could be weaponized at unprecedented scale.

9 DDoS Attacks: Distributed Denial-of-Service (DDoS) attacks overwhelm targets with traffic from multiple sources, first demonstrated in 1999. Modern DDoS attacks can exceed 3.47 Tbps (terabits per second), enough to take down entire internet infrastructures and costing businesses $2.3 million per incident on average.

The Mirai botnet was used to overwhelm major internet infrastructure providers, disrupting access to popular online services across the United States and beyond. The scale of the attack demonstrated how vulnerable consumer and industrial devices can become a platform for widespread disruption when security is not prioritized in their design and deployment.

The Mirai botnet’s lessons apply directly to modern ML deployments. Edge-deployed ML devices with weak authentication become weaponized attack infrastructure at unprecedented scale, precisely as the Mirai botnet demonstrated with traditional IoT devices. Modern ML edge devices (smart cameras running object detection, voice assistants performing wake-word detection, autonomous drones with navigation models, industrial IoT sensors with anomaly detection algorithms) face identical vulnerability patterns but with amplified consequences due to their AI capabilities and access to sensitive data.

The attack escalation with ML devices differs significantly from traditional IoT compromises. Unlike simple IoT devices that provided only computing power for DDoS attacks, compromised ML devices offer sophisticated capabilities: (1) Data exfiltration where smart cameras leak facial recognition databases, voice assistants extract conversation transcripts, and health monitors steal biometric data; (2) Model weaponization where hijacked autonomous drones coordinate swarm attacks and compromised traffic cameras misreport vehicle counts to manipulate traffic systems; (3) AI-powered reconnaissance where compromised edge ML devices use their trained models to identify high-value targets (facial recognition for VIP identification, voice analysis for emotion detection) and coordinate sophisticated multi-stage attacks.

Consider a concrete attack scenario where attackers compromise 50,000 smart security cameras with default passwords, each running ML object detection models. Rather than traditional DDoS attacks, they use the compromised cameras to: (1) extract facial recognition databases from residential and commercial buildings; (2) coordinate physical surveillance of targeted individuals using distributed camera networks; (3) inject false object detection alerts to trigger emergency responses and create chaos; (4) use the cameras’ computing power to train adversarial examples against other security systems.

Comprehensive defense against such weaponization requires zero-trust edge security: (1) Secure manufacturing that eliminates default credentials, implements hardware security modules (HSMs) for device-unique keys, and enables secure boot with cryptographic verification; (2) Encrypted communications that mandate TLS 1.3+ for all ML API communications with certificate pinning and mutual authentication; (3) Behavioral monitoring that deploys anomaly detection systems to identify unusual inference patterns, unexpected network traffic, and suspicious computational loads; (4) Automated response that implements kill switches to disable compromised devices remotely and quarantine them from networks; (5) Update security that enforces cryptographically signed firmware updates with automatic security patching and version rollback capabilities.
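The behavioral monitoring item can be illustrated with a simple statistical baseline. The sketch below flags an edge device whose inference rate deviates sharply from its recent history, using a z-score threshold. The class name `InferenceRateMonitor`, the window size, and the threshold are assumptions for illustration; production systems would typically track several signals (network traffic, compute load) with more robust detectors.

```python
import statistics
from collections import deque


class InferenceRateMonitor:
    """Flag inference rates that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 60, z_threshold: float = 3.0,
                 min_samples: int = 5) -> None:
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, rate: float) -> bool:
        """Return True if `rate` looks anomalous; otherwise add it to the baseline."""
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            deviation = abs(rate - mean)
            # With a perfectly flat baseline, any change counts as anomalous.
            anomalous = (deviation > self.z_threshold * stdev) if stdev > 0 else deviation > 0
            if anomalous:
                return True  # keep anomalous samples out of the baseline
        self.history.append(rate)
        return False
```

A `True` result would feed the automated response step, for example by quarantining the device until it is re-attested.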

Systematic Threat Analysis and Risk Assessment

The historical incidents reveal universal security patterns that translate directly to ML system vulnerabilities. Supply chain compromise, as demonstrated by Stuxnet, enables persistent compromise and manifests in ML through poisoned training data, compromised training pipelines, and backdoored model repositories. Insufficient isolation, exemplified by the Jeep Cherokee hack, allows privilege escalation and appears wherever externally reachable ML APIs or inference endpoints can reach safety-critical components. Weaponized endpoints, illustrated by the Mirai botnet, create attack infrastructure at scale and emerge through hijacked ML edge devices that can exfiltrate inference data or participate in coordinated attacks.

The key insight is that traditional cybersecurity patterns amplify in ML systems because models learn from data and make autonomous decisions. While Stuxnet required sophisticated malware to manipulate industrial controllers, ML systems can be compromised through data poisoning that appears statistically normal but embeds hidden behaviors. This characteristic makes ML systems both more vulnerable to subtle attacks and more dangerous when compromised, as they can make decisions affecting physical systems autonomously. Understanding these historical patterns helps recognize how familiar attack vectors manifest in ML contexts, while the unique properties of learning systems (statistical learning, decision autonomy, and data dependency) create new attack surfaces requiring specialized defenses.

Machine learning systems introduce attack vectors that extend beyond traditional computing vulnerabilities. The data-driven nature of learning creates new opportunities for adversaries: training data can be manipulated to embed backdoors, input perturbations can exploit learned decision boundaries, and systematic API queries can extract proprietary model knowledge. These ML-specific threats require specialized defenses that account for the statistical and probabilistic foundations of learning systems, complementing traditional infrastructure hardening.

Threat Prioritization Framework

With the wide range of potential threats facing ML systems, practitioners need a framework to prioritize their defensive efforts effectively. Not all threats are equally likely or impactful, and security resources are always constrained. A simple prioritization matrix based on likelihood and impact helps focus attention where it matters most.

Consider these threat priority categories:

High Likelihood / High Impact: Data poisoning in federated learning systems where training data comes from untrusted sources. These attacks are relatively easy to execute but can severely compromise model behavior.

High Likelihood / Medium Impact: Model extraction attacks against public APIs. These are common and technically simple but may only affect competitive advantage rather than safety or privacy.

Low Likelihood / High Impact: Hardware side-channel attacks on cloud-deployed models. These require sophisticated adversaries and physical access but could expose all model parameters and user data.

Medium Likelihood / Medium Impact: Membership inference attacks against models trained on sensitive data. These require some technical skill but mainly threaten individual privacy rather than system integrity.

This framework guides resource allocation throughout this chapter. We begin with the most common and accessible threats (model theft, data poisoning, and adversarial attacks) before examining more specialized hardware and infrastructure vulnerabilities. Understanding these priority levels helps practitioners implement defenses in a logical sequence that maximizes security benefit per invested effort.
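The prioritization matrix can be expressed as a small scoring routine. The sketch below is one possible encoding: the numeric levels and the multiplicative score (likelihood times impact) are assumptions chosen for illustration, since the text specifies only the qualitative categories.

```python
# Assumed ordinal encoding of the qualitative levels used in the matrix.
LEVELS = {"low": 1, "medium": 2, "high": 3}

# (threat, likelihood, impact), taken from the four categories listed above.
THREATS = [
    ("Data poisoning in federated learning", "high", "high"),
    ("Model extraction against public APIs", "high", "medium"),
    ("Hardware side-channel attacks", "low", "high"),
    ("Membership inference attacks", "medium", "medium"),
]


def risk_score(likelihood: str, impact: str) -> int:
    """Combine likelihood and impact into a single score (assumed: product)."""
    return LEVELS[likelihood] * LEVELS[impact]


def prioritize(threats):
    """Sort threats from highest to lowest risk score."""
    return sorted(threats, key=lambda t: risk_score(t[1], t[2]), reverse=True)


if __name__ == "__main__":
    for name, likelihood, impact in prioritize(THREATS):
        print(f"{risk_score(likelihood, impact)}  {name} ({likelihood}/{impact})")
```

Other scoring rules, such as weighting impact more heavily for safety-critical systems, would change the ordering, so the scoring function should reflect the organization's own risk tolerance.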

Model-Specific Attack Vectors

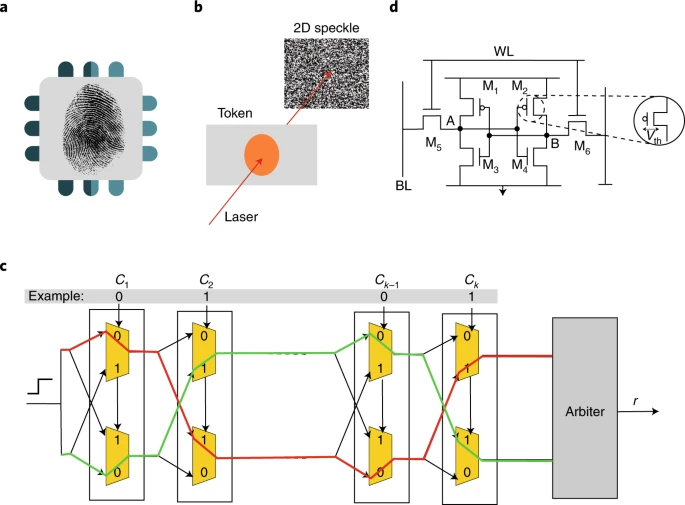

Machine learning systems face threats spanning the entire ML lifecycle, from training-time manipulations to inference-time evasion. These threats fall into three broad categories: threats to model confidentiality (model theft), threats to training integrity (data poisoning10), and threats to inference robustness (adversarial examples11). Each category targets different vulnerabilities and requires distinct defensive strategies.

10 Data Poisoning Attacks: Data poisoning is an attack technique where adversaries inject malicious data during training, first formalized in 2012 (Biggio, Nelson, and Laskov 2012). Studies show that poisoning just 0.1% of training data can reduce model accuracy by 10-50%, making it a highly efficient attack vector against ML systems.

11 Adversarial Examples: Adversarial examples are inputs crafted to deceive ML models, first described by Szegedy et al. (2013). These attacks can fool state-of-the-art image classifiers with perturbations invisible to humans (changing <0.01% of pixel values), affecting 99%+ of deep learning models.

Understanding when and where different attacks occur in the ML lifecycle helps prioritize defenses and understand attacker motivations. Figure 2 maps the primary attack vectors to their target stages in the machine learning pipeline, revealing how adversaries exploit different system vulnerabilities at different times.

During Data Collection: Attackers can inject malicious samples or manipulate labels in training datasets, particularly in federated learning or crowdsourced data scenarios where data sources are less controlled.

During Training: This stage faces backdoor insertion attacks, where adversaries embed hidden behaviors that activate only under specific trigger conditions, and label manipulation attacks that systematically corrupt the learning process.

During Deployment: Model theft attacks target this stage because trained models become accessible through APIs, file downloads, or reverse engineering of mobile applications. This is where intellectual property is most vulnerable.

During Inference: Adversarial attacks occur at runtime, where attackers craft inputs designed to fool deployed models into making incorrect predictions while appearing normal to human observers.

This lifecycle perspective reveals that different threats require different defensive strategies. Data validation protects the collection phase, secure training environments protect the training phase, access controls and API design protect deployment, and input validation protects inference. By understanding which attacks target which lifecycle stages, security teams can implement appropriate defenses at the right architectural layers.
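To make the inference-stage threat concrete, the sketch below crafts an adversarial input against a toy logistic regression classifier using the fast gradient sign method (FGSM, Goodfellow et al. 2015). FGSM is named here as one well-known technique and is not prescribed by this chapter; the weights, input, and epsilon are made-up values chosen so the effect is easy to see.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x) -> float:
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def predict(w, b, x) -> int:
    return 1 if predict_proba(w, b, x) >= 0.5 else 0


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon: float):
    """Perturb x by epsilon in the direction that increases the loss.

    For binary cross-entropy on logistic regression, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


if __name__ == "__main__":
    w, b = [4.0, -6.0], 0.0          # hypothetical trained weights
    x, y = [0.6, 0.3], 1             # clean input and its true label
    x_adv = fgsm(w, b, x, y, epsilon=0.1)
    print("clean prediction:      ", predict(w, b, x))
    print("adversarial prediction:", predict(w, b, x_adv))
```

Each feature moves by at most epsilon, yet the predicted class changes, which is why input validation alone is rarely a sufficient defense at this stage.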

Machine learning models are not solely passive victims of attack; in some cases, they can be employed as components of an attack strategy. Pretrained models, particularly large generative or discriminative networks, may be adapted to automate tasks such as adversarial example generation, phishing content synthesis12, or protocol subversion. Open-source or publicly accessible models can be fine-tuned for malicious purposes, including impersonation, surveillance, or reverse-engineering of secure systems. This dual-use potential necessitates a broader security perspective that considers models not only as assets to defend but also as possible instruments of attack.

12 AI-Generated Phishing: Large language models can generate convincing phishing emails with 99%+ grammatical accuracy, compared to 19% for traditional phishing. Security firms report dramatic increases in AI-generated phishing attacks since 2022; some studies cite 1,265% growth (though methodologies and baselines vary significantly), and some campaigns achieve 30%+ success rates.

Model Theft

The first category of model-specific threats targets confidentiality. Threats to model confidentiality arise when adversaries gain access to a trained model’s parameters, architecture, or output behavior. These attacks can undermine the economic value of machine learning systems, allow competitors to replicate proprietary functionality, or expose private information encoded in model weights.

Such threats arise across a range of deployment settings, including public APIs13, cloud-hosted services, on-device inference engines, and shared model repositories14. Machine learning models may be vulnerable due to exposed interfaces, insecure serialization formats15, or insufficient access controls, factors that create opportunities for unauthorized extraction or replication (Ateniese et al. 2015).

13 Machine Learning APIs: Machine learning APIs (Application Programming Interfaces) were popularized by Google’s Prediction API (2010). Today’s ML APIs handle billions of requests daily, with major providers processing billions of tokens monthly, creating vast attack surfaces for model extraction.

14 Model Repositories: Model repositories are centralized platforms for sharing ML models, led by Hugging Face (2016) which hosts 500,000+ models. While democratizing AI access, these repositories have become targets for supply chain attacks, with researchers finding malicious models in 5% of popular repositories (Oliynyk, Mayer, and Rauber 2023).

15 Model Serialization: Model serialization is the process of converting trained models into portable formats like ONNX (2017), TensorFlow SavedModel (2016), or PyTorch’s .pth files. Insecure serialization can expose model weights and enable arbitrary code execution, affecting 80%+ of deployed ML systems (Ateniese et al. 2015; Tramèr et al. 2016).

The severity of these threats is underscored by high-profile legal cases that have highlighted the strategic and economic value of machine learning models. For example, former Google engineer Anthony Levandowski was accused of stealing proprietary designs from Waymo, including critical components of its autonomous vehicle technology, before founding a competing startup. Such cases illustrate the potential for insider threats to bypass technical protections and gain access to sensitive intellectual property.

The consequences of model theft extend beyond economic loss. Stolen models can be used to extract sensitive information, replicate proprietary algorithms, or enable further attacks. The economic impact can be substantial: research estimates suggest that aspects of large language models can be approximated through systematic API queries at costs orders of magnitude lower than original training, though full model replication remains economically and technically challenging (Tramèr et al. 2016; Carlini et al. 2024). For instance, a competitor who obtains a stolen recommendation model from an e-commerce platform might gain insights into customer behavior, business analytics, and embedded trade secrets. This knowledge can also be used to conduct model inversion attacks16, where an attacker attempts to infer private details about the model’s training data (Fredrikson, Jha, and Ristenpart 2015).

16 Model Inversion Attacks: Model inversion attacks were first demonstrated in 2015 against face recognition systems, when researchers reconstructed recognizable faces from neural network outputs using only confidence scores. The attack revealed that models trained on 40 individuals could leak identifiable facial features, proving that “black-box” API access isn’t sufficient privacy protection.

17 Netflix Deanonymization: In 2008, researchers re-identified Netflix users by correlating the “anonymous” Prize dataset with public IMDb ratings. Using as few as 8 movie ratings with dates, they identified 99% of users, leading Netflix to cancel a second competition and highlighting the futility of naive anonymization.

In a model inversion attack, the adversary queries the model through a legitimate interface, such as a public API, and observes its outputs. By analyzing confidence scores or output probabilities, the attacker can optimize inputs to reconstruct data resembling the model’s training set. For example, a facial recognition model used for secure access could be manipulated to reveal statistical properties of the employee photos on which it was trained. Similar vulnerabilities have been demonstrated in studies on the Netflix Prize dataset17, where researchers inferred individual movie preferences from anonymized data (Narayanan and Shmatikov 2006).
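The query-and-optimize loop described above can be sketched in a few lines, under strong simplifying assumptions. Here the "model" is a hypothetical logistic scorer with secret weights `_w`, `confidence_api` stands in for a public endpoint that returns a single confidence score, and the attacker estimates gradients by finite differences. None of these names refer to a real service, and attacks on real image models are considerably more involved.

```python
import numpy as np

rng = np.random.default_rng(0)
_w = rng.normal(size=10)  # secret weights; the attacker never reads these


def confidence_api(x: np.ndarray) -> float:
    """Hypothetical endpoint: confidence that x belongs to the target class."""
    return float(1.0 / (1.0 + np.exp(-(x @ _w))))


def invert(api, dim, steps=200, lr=0.5, delta=1e-3):
    """Climb the confidence score using finite-difference gradients."""
    x = np.zeros(dim)
    for _ in range(steps):
        base = api(x)
        # One extra query per input dimension approximates the gradient.
        grad = np.array([(api(x + delta * e) - base) / delta
                         for e in np.eye(dim)])
        x = np.clip(x + lr * grad, -1.0, 1.0)  # stay in a valid input range
    return x


recovered = invert(confidence_api, dim=10)
# Alignment between the reconstructed input and the hidden class direction.
cosine = float(recovered @ _w
               / (np.linalg.norm(recovered) * np.linalg.norm(_w)))
```

In this toy setting the reconstructed input ends up pointing in roughly the same direction as the hidden weights, which is the sense in which confidence scores alone can leak what the model has learned about a class.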

Model theft can target two distinct objectives: extracting exact model properties, such as architecture and parameters, or replicating approximate model behavior to produce similar outputs without direct access to internal representations. Understanding neural network architectures helps recognize which architectural patterns are most vulnerable to extraction attacks. The specific architectural vulnerabilities vary by model type, as discussed in Chapter 4: DNN Architectures. Both forms of theft undermine the security and value of machine learning systems, as explored in the following subsections.

These two attack paths are illustrated in Figure 3. In exact model theft, the attacker gains access to the model’s internal components, including serialized files, weights, and architecture definitions, and reproduces the model directly. In contrast, approximate model theft relies on observing the model’s input-output behavior, typically through a public API. By repeatedly querying the model and collecting responses, the attacker trains a surrogate that mimics the original model’s functionality. The first approach compromises the model’s internal design and training investment, while the second threatens its predictive value and can facilitate further attacks such as adversarial example transfer or model inversion.

Exact Model Theft

Exact model property theft refers to attacks aimed at extracting the internal structure and learned parameters of a machine learning model. These attacks often target deployed models that are exposed through APIs, embedded in on-device inference engines, or shared as downloadable model files on collaboration platforms. Exploiting weak access control, insecure model packaging, or unprotected deployment interfaces, attackers can recover proprietary model assets without requiring full control of the underlying infrastructure.

These attacks typically seek three types of information. The first is the model’s learned parameters, such as weights and biases. By extracting these parameters, attackers can replicate the model’s functionality without incurring the cost of training. This replication allows them to benefit from the model’s performance while bypassing the original development effort.

The second target is the model’s fine-tuned hyperparameters, including training configurations such as learning rate, batch size, and regularization settings. These hyperparameters significantly influence model performance, and stealing them allows attackers to reproduce high-quality results with minimal additional experimentation.

Finally, attackers may seek to reconstruct the model’s architecture. This includes the sequence and types of layers, activation functions, and connectivity patterns that define the model’s behavior. Architecture theft may be accomplished through side-channel attacks18, reverse engineering, or analysis of observable model behavior.

18 ML Side-Channel Attacks: Side-channel attacks on ML were first demonstrated against neural networks in 2018, when researchers showed that power consumption patterns during inference could reveal sensitive model information. This extended traditional cryptographic side-channel attacks into the ML domain, creating new vulnerabilities for edge AI devices.

Revealing the architecture not only compromises intellectual property but also gives competitors strategic insights into the design choices that provide competitive advantage.

System designers must account for these risks by securing model serialization formats, restricting access to runtime APIs, and hardening deployment pipelines. Protecting models requires a combination of software engineering practices, including access control, encryption, and obfuscation techniques, to reduce the risk of unauthorized extraction (Tramèr et al. 2016).
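One narrow piece of hardening a deployment pipeline is verifying that a serialized model file has not been altered before it is loaded. The sketch below uses only the Python standard library; the file name, its contents, and the idea of a digest "recorded at release time" are illustrative assumptions. An integrity check of this kind detects tampering but does not by itself prevent extraction, so it complements access control and encryption rather than replacing them.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_digest: str) -> bool:
    """Compare the file digest to a trusted value in constant time."""
    return hmac.compare_digest(sha256_of_file(path), expected_digest)


# Demonstration with a stand-in "model file" (hypothetical contents).
with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "model.bin"
    artifact.write_bytes(b"pretend these are serialized weights")
    trusted = sha256_of_file(artifact)          # recorded at release time
    ok_before = verify_model_artifact(artifact, trusted)
    artifact.write_bytes(b"tampered weights")   # simulated tampering
    ok_after = verify_model_artifact(artifact, trusted)
```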

Approximate Model Theft

While some attackers seek to extract a model’s exact internal properties, others focus on replicating its external behavior. Approximate model behavior theft refers to attacks that attempt to recreate a model’s decision-making capabilities without directly accessing its parameters or architecture. Instead, attackers observe the model’s inputs and outputs to build a substitute model that performs similarly on the same tasks.

This type of theft often targets models deployed as services, where the model is exposed through an API or embedded in a user-facing application. By repeatedly querying the model and recording its responses, an attacker can train their own model to mimic the behavior of the original. This process, often called model distillation19 or knockoff modeling, allows attackers to achieve comparable functionality without access to the original model’s proprietary internals (Orekondy, Schiele, and Fritz 2019).

19 Model Distillation: Model distillation is a knowledge transfer technique developed by Hinton, Vinyals, and Dean (2015) in which a smaller “student” model learns from a larger “teacher” model. Although it was designed for model compression, attackers exploit it to create stolen models with 95%+ accuracy using only 1% of the original training data.

Attackers may evaluate the success of behavior replication in two ways. The first is by measuring the effectiveness of the substitute model, that is, whether the cloned model achieves similar accuracy, precision, recall, or other performance metrics on benchmark tasks. By aligning the substitute’s performance with that of the original, attackers can build a model that is practically indistinguishable in effectiveness, even if its internal structure differs.

The second is by testing prediction consistency. This involves checking whether the substitute model produces the same outputs as the original model when presented with the same inputs. Matching not only correct predictions but also the original model’s mistakes can provide attackers with a high-fidelity reproduction of the target model’s behavior. This poses particular concern in applications such as natural language processing, where attackers might replicate sentiment analysis models to gain competitive insights or bypass proprietary systems.
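Both evaluation criteria can be illustrated with a toy extraction experiment. The sketch below assumes a hypothetical victim (a linear classifier hidden behind `victim_api`), an attacker who only sees predicted labels, and a logistic-regression surrogate trained by plain gradient descent. All names and sizes are invented for illustration. "Accuracy" is measured against ground-truth labels, and "fidelity" is measured as agreement with the victim, mistakes included.

```python
import numpy as np

rng = np.random.default_rng(0)

# Ground truth and a slightly imperfect victim model (both hypothetical).
w_true = rng.normal(size=5)
_secret_w = w_true + 0.3 * rng.normal(size=5)


def victim_api(x: np.ndarray) -> np.ndarray:
    """Black-box endpoint: returns only predicted labels (0 or 1)."""
    return (x @ _secret_w > 0).astype(int)


def train_surrogate(x, y, steps=500, lr=0.5):
    """Fit logistic regression by gradient descent on query/response pairs."""
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
        grad = p - y
        w -= lr * (x.T @ grad) / len(y)
        b -= lr * grad.mean()
    return w, b


# Attacker: send queries, record responses, train a substitute.
queries = rng.normal(size=(2000, 5))
w_hat, b_hat = train_surrogate(queries, victim_api(queries))

# Evaluate both models on fresh inputs.
x_test = rng.normal(size=(1000, 5))
y_test = (x_test @ w_true > 0).astype(int)
victim_pred = victim_api(x_test)
surrogate_pred = (x_test @ w_hat + b_hat > 0).astype(int)

victim_acc = float((victim_pred == y_test).mean())        # effectiveness
surrogate_acc = float((surrogate_pred == y_test).mean())  # effectiveness
fidelity = float((surrogate_pred == victim_pred).mean())  # consistency
```

A surrogate with high fidelity reproduces the victim’s errors as well as its correct predictions, which is why its accuracy tends to track the victim’s rather than exceed it.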

Approximate behavior theft proves challenging to defend against in open-access deployment settings, such as public APIs or consumer-facing applications. Limiting the rate of queries, detecting automated extraction patterns, and watermarking model outputs are among the techniques that can help mitigate this risk. However, these defenses must be balanced with usability and performance considerations, especially in production environments.
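Query rate limiting, the first mitigation mentioned above, can be sketched as a per-client sliding window. The class name, parameters, and client identifier below are hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow. A production service would typically combine such a limiter with authentication and extraction-pattern detection.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `max_queries` per client within `window_seconds`."""

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client_id -> query timestamps

    def allow(self, client_id: str, now: float) -> bool:
        """Record a query at time `now` and report whether it is allowed."""
        timestamps = self._history[client_id]
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_queries:
            return False
        timestamps.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_queries=3, window_seconds=60.0)
decisions = [limiter.allow("client-a", t) for t in (0.0, 1.0, 2.0, 3.0, 61.0)]
```

The fourth request is rejected because three queries already sit inside the 60-second window; by the fifth request, the earliest entries have expired. Tight limits slow extraction but also constrain legitimate heavy users, which is the usability trade-off noted above.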

One demonstration of approximate model theft extracts internal components of black-box language models via public APIs. Carlini et al. (2024) show how to reconstruct the final embedding projection matrix of several OpenAI models, including ada, babbage, and gpt-3.5-turbo, using only public API access. By exploiting the low-rank structure of the output projection layer and making carefully crafted queries, they recover the model’s hidden dimensionality and replicate the weight matrix up to affine transformations.

The attack does not reconstruct the full model, but reveals internal architecture parameters and sets a precedent for future, deeper extractions. This work demonstrated that even partial model theft poses risks to confidentiality and competitive advantage, especially when model behavior can be probed through rich API responses such as logit bias and log-probabilities.

| Model | Size (Dimension Extraction) | Number of Queries | RMS (Weight Matrix Extraction) | Cost (USD) |
|---|---|---|---|---|
| OpenAI ada | 1024 ✓ | \(< 2 \cdot 10^{6}\) | \(5 \cdot 10^{-4}\) | $1 / $4 |
| OpenAI babbage | 2048 ✓ | \(< 4 \cdot 10^{6}\) | \(7 \cdot 10^{-4}\) | $2 / $12 |
| OpenAI babbage-002 | 1536 ✓ | \(< 4 \cdot 10^{6}\) | Not implemented | $2 / $12 |
| OpenAI gpt-3.5-turbo-instruct | Not disclosed | \(< 4 \cdot 10^{7}\) | Not implemented | $200 / ~$2,000 (estimated) |
| OpenAI gpt-3.5-turbo-1106 | Not disclosed | \(< 4 \cdot 10^{7}\) | Not implemented | $800 / ~$8,000 (estimated) |

As shown in their empirical evaluation, reproduced in Table 2, model parameters could be extracted with root mean square errors on the order of \(10^{-4}\), confirming that high-fidelity approximation is achievable at scale. These findings raise important implications for system design, suggesting that seemingly innocuous API features, like returning top-k logits, can serve as significant leakage vectors if not tightly controlled.
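The low-rank observation behind the dimension-extraction step can be illustrated with a toy model. The sketch below is not the procedure from Carlini et al. (2024): it assumes a hypothetical API (`toy_logits_api`) that returns full logit vectors directly, whereas the real attack had to reconstruct logits from restricted outputs such as logit bias and log-probabilities. The vocabulary size, hidden size, and query count are arbitrary toy values.

```python
import numpy as np

rng = np.random.default_rng(0)

vocab_size, hidden_dim = 200, 16  # toy sizes, chosen for illustration
W_out = rng.normal(size=(vocab_size, hidden_dim))  # secret projection


def toy_logits_api(num_queries: int) -> np.ndarray:
    """Stand-in for an API returning full logit vectors, one row per query."""
    hidden_states = rng.normal(size=(num_queries, hidden_dim))
    return hidden_states @ W_out.T


# Attacker: collect more logit vectors than the suspected hidden size.
logits = toy_logits_api(num_queries=64)
singular_values = np.linalg.svd(logits, compute_uv=False)

# Every logit vector lies in a hidden_dim-dimensional subspace, so the
# singular values collapse to numerical noise after hidden_dim entries.
threshold = 1e-8 * singular_values[0]
estimated_dim = int(np.sum(singular_values > threshold))
```

Because every logit vector is a linear image of a lower-dimensional hidden state, counting the non-negligible singular values reveals the hidden width even though the attacker never sees `W_out`.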

Case Study: Tesla IP Theft

In 2018, Tesla filed a lawsuit against the self-driving car startup Zoox, alleging that former Tesla employees had stolen proprietary data and trade secrets related to Tesla’s autonomous driving technology. According to the lawsuit, several employees transferred over 10 gigabytes of confidential files, including machine learning models and source code, before leaving Tesla to join Zoox.

Among the stolen materials was a key image recognition model used for object detection in Tesla’s self-driving system. By obtaining this model, Zoox could have bypassed years of research and development, giving the company a competitive advantage. Beyond the economic implications, there were concerns that the stolen model could expose Tesla to further security risks, such as model inversion attacks aimed at extracting sensitive data from the model’s training set.

The Zoox employees denied any wrongdoing, and the case was ultimately settled out of court. The incident highlights the real-world risks of model theft, especially in industries where machine learning models represent significant intellectual property. The theft of models not only undermines competitive advantage but also raises broader concerns about privacy, safety, and the potential for downstream exploitation.

This case demonstrates that model theft is not limited to theoretical attacks conducted over APIs or public interfaces. Insider threats, supply chain vulnerabilities, and unauthorized access to development infrastructure pose equally serious risks to machine learning systems deployed in commercial environments.

Data Poisoning

While model theft targets confidentiality, the second category of threats focuses on training integrity. Training integrity threats stem from the manipulation of data used to train machine learning models. These attacks aim to corrupt the learning process by introducing examples that appear benign but induce harmful or biased behavior in the final model.

Data poisoning attacks are a prominent example, in which adversaries inject carefully crafted data points into the training set to influence model behavior in targeted or systemic ways (Biggio, Nelson, and Laskov 2012). Poisoned data may cause a model to make incorrect predictions, degrade its generalization ability, or embed failure modes that remain dormant until triggered post-deployment.

20 Crowdsourcing Risks: Platforms like Amazon Mechanical Turk (2005) and Prolific democratized data labeling but introduced poisoning risks. Studies show 15-30% of crowdsourced labels contain errors or bias (Biggio, Nelson, and Laskov 2012; Oprea, Singhal, and Vassilev 2022), with coordinated attacks capable of poisoning entire datasets at costs under $1,000.

Data poisoning is a security threat because it involves intentional manipulation of the training data by an adversary, with the goal of embedding vulnerabilities or subverting model behavior. These attacks pose concern in applications where models retrain on data collected from external sources, including user interactions, crowdsourced annotations20, and online scraping, since attackers can inject poisoned data without direct access to the training pipeline.

These attacks occur across diverse threat models. From a security perspective, poisoning attacks vary depending on the attacker’s level of access and knowledge. In white-box scenarios, the adversary may have detailed insight into the model architecture or training process, enabling more precise manipulation. In contrast, black-box or limited-access attacks exploit open data submission channels or indirect injection vectors. Poisoning can target different stages of the ML pipeline, ranging from data collection and preprocessing to labeling and storage, making the attack surface both broad and system-dependent. The relative priority of data poisoning threats varies by deployment context as analyzed in Section 1.4.1.

Poisoning attacks typically follow a three-stage process. First, the attacker injects malicious data into the training set. These examples are often designed to appear legitimate but introduce subtle distortions that alter the model’s learning process. Second, the model trains on this compromised data, embedding the attacker’s intended behavior. Finally, once the model is deployed, the attacker may exploit the altered behavior to cause mispredictions, bypass safety checks, or degrade overall reliability.

To understand these attack mechanisms precisely, data poisoning can be viewed as a bilevel optimization problem: the inner problem is ordinary training on the combined dataset, and the outer problem is the attacker’s choice of poisoning data \(D_p\). In the untargeted case, the attacker selects \(D_p\) to maximize the model’s loss on a validation or target dataset \(D_{\text{test}}\). Let \(D\) represent the original training data. The attacker’s objective is to solve:

\[ \max_{D_p} \ \mathcal{L}(f_{D \cup D_p}, D_{\text{test}}) \]

where \(f_{D \cup D_p}\) represents the model trained on the combined dataset of original and poisoned data. For targeted attacks, the objective focuses on specific inputs \(x_t\) and attacker-chosen target labels \(y_t\). Because the attacker wants the model to predict \(y_t\) for \(x_t\), the loss with respect to that label is minimized rather than maximized:

\[ \min_{D_p} \ \mathcal{L}(f_{D \cup D_p}, x_t, y_t) \]

This formulation captures the adversary’s goal of introducing carefully crafted data points to manipulate the model’s decision boundaries.

For example, consider a traffic sign classification model trained to distinguish between stop signs and speed limit signs. An attacker might inject a small number of stop sign images labeled as speed limit signs into the training data. The attacker’s goal is to subtly shift the model’s decision boundary so that future stop signs are misclassified as speed limit signs. In this case, the poisoning data \(D_p\) consists of mislabeled stop sign images, and the attacker’s objective is to maximize the misclassification of legitimate stop signs \(x_t\) as speed limit signs \(y_t\), following the targeted attack formulation above. Even if the model performs well on other types of signs, the poisoned training process creates a predictable and exploitable vulnerability.
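The stop-sign scenario can be made concrete with a toy targeted attack. The sketch below is an illustration under stated assumptions, not a realistic vision pipeline: signs are represented by invented 2-D feature vectors, the classifier is a small k-nearest-neighbor vote written in NumPy, and the attacker is assumed to be able to add five mislabeled points near the target input `x_t`.

```python
import numpy as np

rng = np.random.default_rng(0)
STOP, SPEED_LIMIT = 0, 1

# Toy 2-D "features" for the two sign classes (illustrative, not real images).
x_clean = np.vstack([rng.normal([-2, 0], 0.5, size=(100, 2)),
                     rng.normal([2, 0], 0.5, size=(100, 2))])
y_clean = np.array([STOP] * 100 + [SPEED_LIMIT] * 100)


def knn_predict(x_train, y_train, x_query, k=3):
    """Majority vote over the k nearest training points (Euclidean)."""
    dists = np.linalg.norm(x_train[None, :, :] - x_query[:, None, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return (y_train[nearest].mean(axis=1) > 0.5).astype(int)


# Target input x_t: a stop sign the attacker wants read as SPEED_LIMIT (y_t).
x_t = np.array([[-2.0, 0.0]])

# Poison set D_p: five stop-like points, deliberately mislabeled.
x_poison = x_t + rng.normal(0, 0.01, size=(5, 2))
y_poison = np.full(5, SPEED_LIMIT)

x_train = np.vstack([x_clean, x_poison])
y_train = np.concatenate([y_clean, y_poison])

clean_pred = knn_predict(x_clean, y_clean, x_t)[0]
poisoned_pred = knn_predict(x_train, y_train, x_t)[0]

# Accuracy on fresh clean data barely moves, which is what makes
# targeted poisoning hard to notice from aggregate metrics.
x_test = np.vstack([rng.normal([-2, 0], 0.5, size=(200, 2)),
                    rng.normal([2, 0], 0.5, size=(200, 2))])
y_test = np.array([STOP] * 200 + [SPEED_LIMIT] * 200)
poisoned_acc = float((knn_predict(x_train, y_train, x_test) == y_test).mean())
```

A nearest-neighbor model is used here because it makes the effect of a handful of poisoned points easy to see; parametric models generally require more carefully optimized poison, as the bilevel formulation suggests.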

Data poisoning attacks can be classified based on their objectives and scope of impact. Availability attacks degrade overall model performance by introducing noise or label flips that reduce accuracy across tasks. Targeted attacks manipulate a specific input or class, leaving general performance intact but causing consistent misclassification in select cases. Backdoor attacks21 embed hidden triggers, which are often imperceptible patterns, that elicit malicious behavior only when the trigger is present. Subpopulation attacks degrade performance on a specific group defined by shared features, making them particularly dangerous in fairness-sensitive applications.
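The data-preparation step of a backdoor attack can be sketched as follows. This is a simplified illustration rather than a reproduction of any published attack: the trigger is a bright 3×3 corner patch, the images are random toy arrays, and the function names, target label, and poisoning fraction are all hypothetical. Training a model on the resulting set is omitted.

```python
import numpy as np


def stamp_trigger(images: np.ndarray, patch: int = 3) -> np.ndarray:
    """Return a copy with a bright square in the bottom-right corner."""
    stamped = images.copy()
    stamped[:, -patch:, -patch:] = 1.0
    return stamped


def make_backdoor_set(images, labels, target_label, fraction, rng):
    """Stamp the trigger on a random fraction of samples and relabel them."""
    n_poison = int(round(fraction * len(images)))
    idx = rng.choice(len(images), size=n_poison, replace=False)
    poisoned_images = images.copy()
    poisoned_labels = labels.copy()
    poisoned_images[idx] = stamp_trigger(images[idx])
    poisoned_labels[idx] = target_label
    return poisoned_images, poisoned_labels, idx


rng = np.random.default_rng(0)
images = rng.uniform(0.0, 0.5, size=(100, 8, 8))  # toy grayscale images
labels = rng.integers(0, 10, size=100)
bd_images, bd_labels, poisoned_idx = make_backdoor_set(
    images, labels, target_label=7, fraction=0.05, rng=rng)
```

Only the selected samples change; the rest of the dataset is untouched, which is why a model trained on it can keep normal accuracy on clean inputs while associating the patch with the target label.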

21 Backdoor Attacks: Backdoor attacks involve hidden triggers embedded in ML models during training, first demonstrated in 2017. These attacks achieve 99%+ success rates while maintaining normal accuracy, with triggers as subtle as single-pixel modifications. BadNets, the seminal backdoor attack, affected 100% of tested models.

22 Perspective API: Google’s Perspective API is a toxicity detection model launched in 2017, now processing 500+ million comments daily across platforms like The New York Times and Wikipedia. Despite sophisticated training, the API demonstrates how even billion-parameter models remain vulnerable to targeted poisoning attacks.

23 Perspective Vulnerability: After retraining, the poisoned model exhibited a significantly higher false negative rate, allowing offensive language to bypass filters. This demonstrates how poisoned data can exploit feedback loops in user-generated content systems, creating long-term vulnerabilities in content moderation pipelines.

A notable real-world example of a targeted poisoning attack was demonstrated against Perspective, Google’s widely-used online toxicity detection model22 that helps platforms identify harmful content (Hosseini et al. 2017). By injecting synthetically generated toxic comments with subtle misspellings and grammatical errors into the model’s training set, researchers degraded its ability to detect harmful content23.

Mitigating data poisoning threats requires end-to-end security of the data pipeline, encompassing collection, storage, labeling, and training. Preventative measures include input validation checks, integrity verification of training datasets, and anomaly detection to flag suspicious patterns. In parallel, robust training algorithms can limit the influence of mislabeled or manipulated data by down-weighting or filtering out anomalous instances. While no single technique guarantees immunity, combining proactive data governance, automated monitoring, and robust learning practices is important for maintaining model integrity in real-world deployments.
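As one hedged example of the anomaly-detection step, the sketch below flags training samples whose label disagrees with most of their nearest neighbors. The data, the simulated label flips, and the function name are invented for illustration. This heuristic catches scattered label flips in well-separated data; a tight cluster of consistent poisoned points can evade it, so it should be read as one layer among several rather than a complete defense.

```python
import numpy as np

rng = np.random.default_rng(1)

# Toy two-class feature data (illustrative only).
x = np.vstack([rng.normal([-2, 0], 0.5, size=(100, 2)),
               rng.normal([2, 0], 0.5, size=(100, 2))])
y_true = np.array([0] * 100 + [1] * 100)

# Simulated attack: flip the labels of five class-0 samples.
flipped_idx = rng.choice(100, size=5, replace=False)
y_observed = y_true.copy()
y_observed[flipped_idx] = 1


def flag_label_outliers(x, y, k=7):
    """Flag samples whose label disagrees with most of their k neighbors."""
    dists = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # ignore self-matches
    neighbors = np.argsort(dists, axis=1)[:, :k]
    disagree = (y[neighbors] != y[:, None]).mean(axis=1)
    return np.flatnonzero(disagree > 0.5)


suspects = flag_label_outliers(x, y_observed)
```

Flagged samples would typically be routed to manual review or down-weighted during training rather than silently deleted.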

Adversarial Attacks

Moving from training-time to inference-time threats, the third category targets model robustness during deployment. Inference robustness threats occur when attackers manipulate inputs at test time to induce incorrect predictions. Unlike data poisoning, which compromises the training process, these attacks exploit vulnerabilities in the model’s decision surface during inference.

A central class of such threats is adversarial attacks, where carefully constructed inputs are designed to cause incorrect predictions while remaining nearly indistinguishable from legitimate data. As detailed in Chapter 16: Robust AI, these attacks highlight vulnerabilities in ML models’ sensitivity to small, targeted perturbations that can drastically alter output confidence or classification results.

These attacks create significant real-world risks in domains such as autonomous driving, biometric authentication, and content moderation. The effectiveness can be striking: research demonstrates that adversarial examples can achieve 99%+ attack success rates against state-of-the-art image classifiers while modifying less than 0.01% of pixel values, changes virtually imperceptible to humans (Szegedy et al. 2013; Goodfellow, Shlens, and Szegedy 2014). In physical-world attacks, printed adversarial patches as small as 2% of an image can cause autonomous vehicles to misclassify stop signs as speed limit signs with 80%+ success rates under varying lighting conditions (Eykholt et al. 2017).

Unlike data poisoning, which corrupts the model during training, adversarial attacks manipulate the model’s behavior at test time, often without requiring any access to the training data or model internals. The attack surface thus shifts from upstream data pipelines to real-time interaction, demanding robust defense mechanisms capable of detecting or mitigating malicious inputs at the point of inference.

The mathematical foundations of adversarial example generation and comprehensive taxonomies of attack algorithms, including gradient-based, optimization-based, and transfer-based techniques, are covered in detail in Chapter 16: Robust AI, which explores robust approaches to building adversarially resistant systems.

Adversarial attacks vary based on the attacker’s level of access to the model. In white-box attacks, the adversary has full knowledge of the model’s architecture, parameters, and training data, allowing them to craft highly effective adversarial examples. In black-box attacks, the adversary has no internal knowledge and must rely on querying the model and observing its outputs. Grey-box attacks fall between these extremes, with the adversary possessing partial information, such as access to the model architecture but not its parameters.
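A white-box attack can be sketched with the fast gradient sign method from Goodfellow, Shlens, and Szegedy (2014), applied here to a deliberately tiny model. The logistic-regression weights, the input, and the step size `epsilon` are hypothetical values chosen so the effect is easy to verify by hand; attacks on deep networks follow the same idea but obtain the gradient by backpropagation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical white-box model: logistic regression with known weights.
w = rng.normal(size=20)
b = 0.0


def predict_proba(x):
    """Probability of class 1 under the logistic model."""
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))


def fgsm(x, y, epsilon):
    """One signed-gradient step that increases the loss for true label y."""
    grad_x = (predict_proba(x) - y) * w  # gradient of cross-entropy w.r.t. x
    return x + epsilon * np.sign(grad_x)


# A clean input that the model assigns to class 1 with a modest margin.
x = 0.05 * np.sign(w)
y = 1.0
x_adv = fgsm(x, y, epsilon=0.1)

clean_class = int(predict_proba(x) > 0.5)
adv_class = int(predict_proba(x_adv) > 0.5)
max_change = float(np.max(np.abs(x_adv - x)))
```

Each feature moves by at most `epsilon`, yet the prediction flips because every small change is aligned with the direction that most increases the loss. A black-box attacker lacks this gradient and would have to estimate it through queries or borrow it from a surrogate model.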

These attacker models can be summarized along a spectrum of knowledge levels. Table 3 highlights the differences in model access, data access, typical attack strategies, and common deployment scenarios. Such distinctions help characterize the practical challenges of securing ML systems across different deployment environments.

Common attack strategies include surrogate model construction, transfer attacks exploiting adversarial transferability, and GAN-based perturbation generation. The technical details of these approaches and their mathematical formulations are thoroughly covered in Chapter 16: Robust AI.

| Adversary Knowledge Level | Model Access | Training Data Access | Attack Example | Common Scenario |
|---|---|---|---|---|
| White-box | Full access to architecture and parameters | Full access | Crafting adversarial examples using gradients | Insider threats, open-source model reuse |
| Grey-box | Partial access (e.g., architecture only) | Limited or no access | Attacks based on surrogate model approximation | Known model family, unknown fine-tuning |
| Black-box | No internal access; only query-response view | No access | Query-based surrogate model training and transfer attacks | Public APIs, model-as-a-service deployments |

One illustrative example involves the manipulation of traffic sign recognition systems (Eykholt et al. 2017). Researchers demonstrated that placing small stickers on stop signs could cause machine learning models to misclassify them as speed limit signs. While the altered signs remained easily recognizable to humans, the model consistently misinterpreted them. Such attacks pose serious risks in applications like autonomous driving, where reliable perception is important for safety.

Adversarial attacks highlight the need for robust defenses that go beyond improving model accuracy. Securing ML systems against adversarial threats requires runtime defenses such as input validation, anomaly detection, and monitoring for abnormal patterns during inference. Training-time robustness methods (e.g., adversarial training) complement these strategies and are explored in Chapter 16: Robust AI. The training methodologies that support robust model development are detailed in Chapter 8: AI Training. These defenses aim to enhance model resilience against adversarial examples, ensuring that machine learning systems can operate reliably even in the presence of malicious inputs.

Case Study: Traffic Sign Attack

In 2017, researchers conducted experiments by placing small black and white stickers on stop signs (Eykholt et al. 2017). As shown in Figure 4, these stickers were designed to look inconspicuous to human observers, yet they significantly altered how machine learning models perceived the stop sign. To a human observer, the stickers did not obscure the sign or prevent it from being read. However, when images of the stickered stop signs were fed into standard traffic sign classification models, they were misclassified as speed limit signs over 85% of the time.

This demonstration showed how simple adversarial stickers could trick ML systems into misreading important road signs. If deployed realistically, these attacks could endanger public safety, causing autonomous vehicles to misinterpret stop signs as speed limit signs. Researchers warned this could potentially cause dangerous rolling stops or acceleration into intersections.

This case study provides a concrete illustration of how adversarial examples exploit the pattern recognition mechanisms of ML models. By subtly altering the input data, attackers can induce incorrect predictions and pose significant risks to safety-critical applications like self-driving cars. The attack’s simplicity demonstrates how even minor, inconspicuous changes can lead models astray. Consequently, developers must implement robust defenses against such threats.

These threat types span different stages of the ML lifecycle and demand distinct defensive strategies. Table 4 below summarizes their key characteristics.

| Threat Type | Lifecycle Stage | Attack Vector | Example Impact |
|---|---|---|---|
| Model Theft | Deployment | API access, insider leaks | Stolen IP, model inversion, behavioral clone |
| Data Poisoning | Training | Label flipping, backdoors | Targeted misclassification, degraded accuracy |
| Adversarial Attacks | Inference | Input perturbation | Real-time misclassification, safety failure |

The appropriate defense for a given threat depends on its type, attack vector, and where it occurs in the ML lifecycle. Figure 5 provides a simplified decision flow that connects common threat categories, such as model theft, data poisoning, and adversarial examples, to corresponding defensive strategies. While real-world deployments may require more nuanced combinations of defenses as discussed in our layered defense framework, this flowchart serves as a conceptual guide for aligning threat models with practical mitigation techniques.

While ML models themselves present important attack surfaces, they ultimately run on hardware that can introduce vulnerabilities beyond the model’s control. The transition from software-based threats to hardware-based vulnerabilities represents a significant shift in the security landscape. Where software attacks target code logic and data flows, hardware attacks exploit the physical properties of the computing substrate itself.

The specialized computing infrastructure that powers machine learning workloads creates a layered attack surface that extends far beyond traditional software vulnerabilities. This includes the processors that execute instructions, the memory systems that store data, and the interconnects that move information between components. Understanding these hardware-level risks is essential because they can bypass conventional software security mechanisms and remain difficult to detect. These risks are addressed through the hardware-based security mechanisms detailed in Section 1.8.7.

In the next section, we examine how adversaries can target the physical infrastructure that executes machine learning workloads through hardware bugs, physical tampering, side channels, and supply chain risks.

Hardware-Level Security Vulnerabilities

As machine learning systems move from research prototypes to large-scale, real-world deployments, their security depends on the hardware platforms they run on. Whether deployed in data centers, on edge devices, or in embedded systems, machine learning applications rely on a layered stack of processors, accelerators, memory, and communication interfaces. These hardware components, while essential for enabling efficient computation, introduce unique security risks that go beyond traditional software-based vulnerabilities.

Unlike general-purpose software systems, machine learning workflows often process high-value models and sensitive data in performance-constrained environments. This makes them attractive targets not only for software attacks but also for hardware-level exploitation. Vulnerabilities in hardware can expose models to theft, leak user data, disrupt system reliability, or allow adversaries to manipulate inference results. Because hardware operates below the software stack, such attacks can bypass conventional security mechanisms and remain difficult to detect.

Understanding hardware security threats requires considering how computing substrates implement machine learning operations. At the hardware level, CPU components like arithmetic logic units, registers, and caches execute the instructions that drive model inference and training. Memory hierarchies determine how quickly models can access parameters and intermediate results. The hardware-software interface, mediated by firmware and bootloaders, establishes the initial trust foundation for system operation. The physical properties of computation—including power consumption, timing characteristics, and electromagnetic emissions—create observable signals that attackers can exploit to extract sensitive information.

Hardware threats arise from multiple sources that span the entire system lifecycle. Design flaws in processor architectures, exemplified by vulnerabilities like Meltdown and Spectre, can compromise security guarantees. Physical tampering enables direct manipulation of components and data flows. Side-channel attacks exploit unintended information leakage through power traces, timing variations, and electromagnetic radiation. Supply chain compromises introduce malicious components or modifications during manufacturing and distribution. Together, these threats form a critical attack surface that must be addressed to build trustworthy machine learning systems. For readers focused on practical deployment, the key lessons center on supply chain verification, physical access controls, and hardware trust anchors. The defensive strategies in Section 1.8 provide actionable guidance that does not require deep architectural expertise.

Table 5 summarizes the major categories of hardware security threats, describing their origins, methods, and implications for machine learning system design and deployment.

| Threat Type | Description | Relevance to ML Hardware Security |
|---|---|---|
| Hardware Bugs | Intrinsic flaws in hardware designs that can compromise system integrity. | Foundation of hardware vulnerability. |
| Physical Attacks | Direct exploitation of hardware through physical access or manipulation. | Basic and overt threat model. |
| Fault-injection Attacks | Induction of faults to cause errors in hardware operation, leading to potential system crashes. | Systematic manipulation leading to failure. |
| Side-Channel Attacks | Exploitation of leaked information from hardware operation to extract sensitive data. | Indirect attack via environmental observation. |
| Leaky Interfaces | Vulnerabilities arising from interfaces that expose data unintentionally. | Data exposure through communication channels. |
| Counterfeit Hardware | Use of unauthorized hardware components that may have security flaws. | Compounded vulnerability issues. |
| Supply Chain Risks | Risks introduced through the hardware lifecycle, from production to deployment. | Cumulative & multifaceted security challenges. |

Hardware Bugs

The first category of hardware threats stems from design vulnerabilities. Hardware is not immune to the pervasive issue of design flaws or bugs. Attackers can exploit these vulnerabilities to access, manipulate, or extract sensitive data, breaching the confidentiality and integrity that users and services depend on. One of the most notable examples came with the discovery of Meltdown and Spectre24—two vulnerabilities in modern processors that allow malicious programs to bypass memory isolation and read the data of other applications and the operating system (Kocher et al. 2019a, 2019b).

24 Meltdown/Spectre Impact: Disclosed in January 2018, these vulnerabilities affected virtually every processor made since 1995 (billions of devices). The disclosure triggered emergency patches across all major operating systems, causing 5-30% performance degradation in some workloads, and led to a fundamental rethinking of processor security.

25 Speculative Execution: Introduced in the Intel Pentium Pro (1995), this technique executes instructions before confirming they’re needed, improving performance by 10-25%. However, it creates a 20+ year attack window where speculated operations leak data through cache timing, affecting ML accelerators that rely on similar optimizations.

These attacks exploit speculative execution25, a performance optimization in CPUs that executes instructions out of order before safety checks are complete. While improving computational speed, this optimization inadvertently exposes sensitive data through microarchitectural side channels, such as CPU caches. The technical sophistication of these attacks highlights the difficulty of eliminating vulnerabilities even with extensive hardware validation.
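The leakage mechanism can be illustrated with a toy simulation. The sketch below is not an exploit and does not touch real hardware: `ToyCache`, `victim`, and `recover_byte`, along with the latency constants, are hypothetical names and values introduced here. It models only the core idea, that work performed speculatively is discarded architecturally but still leaves a footprint in the cache that an attacker can read back through access timing.

```python
class ToyCache:
    """A simulated cache: a set of resident line numbers with fake latencies."""
    HIT_CYCLES = 4      # assumed latency for a cached line
    MISS_CYCLES = 200   # assumed latency for an uncached line

    def __init__(self):
        self.lines = set()

    def flush(self):
        self.lines.clear()

    def access(self, line):
        """Return the simulated latency, then leave the line resident."""
        latency = self.HIT_CYCLES if line in self.lines else self.MISS_CYCLES
        self.lines.add(line)
        return latency


def victim(cache, memory, index, public_len):
    """Bounds-checked read whose speculative path runs before the check resolves."""
    speculated = memory[index]   # speculative load, may be out of bounds
    cache.access(speculated)     # touches a line selected by the loaded value
    if index >= public_len:      # the check resolves: result is discarded,
        return None              # but the cache footprint remains
    return speculated


def recover_byte(cache, memory, index, public_len):
    """Flush, trigger the victim, then time all 256 lines and pick the fastest."""
    cache.flush()
    victim(cache, memory, index, public_len)
    return min(range(256), key=cache.access)


if __name__ == "__main__":
    memory = b"ok" + b"K"        # two public bytes followed by one secret byte
    cache = ToyCache()
    print(victim(cache, memory, 2, public_len=2))               # None
    print(chr(recover_byte(cache, memory, 2, public_len=2)))    # K
```

The victim never returns the out-of-bounds byte, yet the attacker recovers it from timing alone. This is the sense in which the optimization exposes data through microarchitectural state rather than through program logic.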

Further research has revealed that these were not isolated incidents. Variants such as Foreshadow, ZombieLoad, and RIDL target different microarchitectural elements, ranging from secure enclaves to CPU internal buffers, demonstrating that speculative execution flaws are a systemic hardware risk. This systemic nature means that while these attacks were first demonstrated on general-purpose CPUs, their implications extend to machine learning accelerators and specialized hardware. ML systems often rely on heterogeneous compute platforms that combine CPUs with GPUs, TPUs, FPGAs, or custom accelerators. These components process sensitive data such as personal information, medical records, or proprietary models. Vulnerabilities in any part of this stack could expose such data to attackers.

For example, an edge device like a smart camera running a face recognition model on an accelerator could be vulnerable if the hardware lacks proper cache isolation. An attacker might exploit this weakness to extract intermediate computations, model parameters, or user data. Similar risks exist in cloud inference services, where hardware multi-tenancy increases the chances of cross-tenant data leakage.

Such vulnerabilities are of particular concern in privacy-sensitive domains like healthcare, where ML systems routinely handle patient data. A breach could violate privacy regulations such as the Health Insurance Portability and Accountability Act (HIPAA)26, leading to significant legal and ethical consequences. Similar regulatory risks apply globally, with GDPR27 allowing fines of up to 4% of global annual turnover for organizations that fail to implement appropriate technical measures to protect the personal data of people in the EU.

26 HIPAA Violations: Since enforcement began in 2003, HIPAA has generated over $130 million in fines, with individual penalties reaching $16 million. The largest healthcare data breach affected 78.8 million patients at Anthem Inc. in 2015, highlighting the massive scale of exposure when ML systems handling medical data are compromised.

27 General Data Protection Regulation (GDPR): In force across the EU since May 2018, GDPR allows fines of up to €20 million or 4% of global annual turnover, whichever is higher, for the most serious violations. Since enforcement began, over €4.5 billion in fines have been levied, including €746 million against Amazon in 2021, driving substantial investment in privacy-preserving ML technologies.

These examples illustrate that hardware security is not solely about preventing physical tampering. It also requires architectural safeguards to prevent data leakage through the hardware itself. As new vulnerabilities continue to emerge across processors, accelerators, and memory systems, addressing these risks requires continuous mitigation efforts, often involving performance trade-offs, especially in compute- and memory-intensive ML workloads. Proactive solutions, such as confidential computing and trusted execution environments (TEEs), offer promising architectural defenses. However, achieving robust hardware security requires attention at every stage of the system lifecycle, from design to deployment.

Physical Attacks

Beyond design flaws, the second category involves direct physical manipulation. Physical tampering refers to the direct, unauthorized manipulation of computing hardware to undermine the integrity of machine learning systems. This type of attack is particularly concerning because it bypasses traditional software security defenses, directly targeting the physical components on which machine learning depends. ML systems are especially vulnerable to such attacks because they rely on hardware sensors, accelerators, and storage to process large volumes of data and produce reliable outcomes in real-world environments.

While software security measures, including encryption, authentication, and access control, protect ML systems against remote attacks, they offer little defense against adversaries with physical access to devices. Physical tampering can range from simple actions, like inserting a malicious USB device into an edge server, to highly sophisticated manipulations such as embedding hardware trojans during chip manufacturing. These threats are particularly relevant for machine learning systems deployed at the edge or in physically exposed environments, where attackers may have opportunities to interfere with the hardware directly.

To understand how such attacks affect ML systems in practice, consider the example of an ML-powered drone used for environmental mapping or infrastructure inspection. The drone’s navigation depends on machine learning models that process data from GPS, cameras, and inertial measurement units. If an attacker gains physical access to the drone, they could replace or modify its navigation module, embedding a hidden backdoor that alters flight behavior or reroutes data collection. Such manipulation not only compromises the system’s reliability but also opens the door to misuse, such as surveillance or smuggling operations.

Physical attacks are not limited to mobility systems. Biometric access control systems, which rely on ML models to process face or fingerprint data, are also vulnerable. These systems typically use embedded hardware to capture and process biometric inputs. An attacker could physically replace a biometric sensor with a modified component designed to capture and transmit personal identification data to an unauthorized receiver. Such a replacement both exposes the captured data and enables future impersonation attacks.

Attackers can also degrade a system without replacing any component. For example, the sensors used in autonomous vehicles, including cameras, LiDAR, and radar, supply the inputs that ML models use to interpret the surrounding environment. A malicious actor could physically misalign or obstruct these sensors, degrading the model’s perception capabilities and creating safety hazards.

Hardware trojans pose another serious risk. Malicious modifications introduced during chip fabrication or assembly can embed dormant circuits in ML accelerators or inference chips. These trojans may remain inactive under normal conditions but trigger malicious behavior when specific inputs are processed or system states are reached. Such hidden vulnerabilities can disrupt computations, leak model outputs, or degrade system performance in ways that are extremely difficult to diagnose post-deployment.
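To see why such trojans are hard to catch, consider a toy software model of a multiply-accumulate unit. Everything in this sketch is hypothetical, including the `TRIGGER` pattern, the sign-flip payload, and the structured test vectors. It does not describe any real chip. It only illustrates how a unit can pass a validation suite and still misbehave on one rare input.

```python
TRIGGER = (0xDE, 0xAD, 0xBE, 0xEF)  # hypothetical activation pattern


def clean_mac(inputs, weights):
    """Reference multiply-accumulate: the behavior the designer intended."""
    return sum(x * w for x, w in zip(inputs, weights))


def trojaned_mac(inputs, weights):
    """Identical to clean_mac except when the inputs match the trigger."""
    result = clean_mac(inputs, weights)
    if tuple(inputs) == TRIGGER:
        return -result  # hypothetical payload: silently flip the sign
    return result


def passes_validation(unit, weights):
    """Compare a unit against the reference on 256 structured test vectors."""
    vectors = [(v, v, v, v) for v in range(256)]
    return all(unit(vec, weights) == clean_mac(vec, weights) for vec in vectors)


if __name__ == "__main__":
    weights = (1, 2, 3, 4)
    print(passes_validation(trojaned_mac, weights))  # True: the suite misses it
    print(clean_mac(TRIGGER, weights), trojaned_mac(TRIGGER, weights))
```

With four 8-bit inputs there are 256^4 possible vectors, so a single trigger pattern is very unlikely to appear in any practical test set. The same reasoning applies, at much larger scale, to dormant circuits in real accelerators.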

Memory subsystems are also attractive targets. Attackers with physical access to edge devices or embedded ML accelerators could manipulate memory chips to extract encrypted model parameters or training data. Fault injection techniques, including voltage manipulation and electromagnetic interference, can further degrade system reliability by corrupting model weights or forcing incorrect computations during inference.
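The effect of a single corrupted bit on a stored weight can be worked through directly. The following sketch assumes weights are stored as IEEE 754 single-precision floats. The `flip_bit` helper is a name introduced here for illustration, and it models the outcome of a fault rather than how a fault is physically induced.

```python
import struct


def flip_bit(value, bit):
    """Flip one bit (0 = mantissa LSB, 31 = sign) of value's float32 encoding."""
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", bits ^ (1 << bit)))
    return flipped


if __name__ == "__main__":
    weight = 0.5
    print(flip_bit(weight, 0))   # mantissa LSB: change of about 6e-8
    print(flip_bit(weight, 31))  # sign bit: -0.5
    print(flip_bit(weight, 30))  # top exponent bit: 2**127, about 1.7e38
```

The location of the fault matters far more than the number of faults. A flip in the low mantissa bits is lost in numerical noise, while a flip in the exponent turns an ordinary weight into a value large enough to dominate every activation it feeds.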

Physical access threats extend to data center and cloud environments as well. Attackers with sufficient access could install hardware implants, such as keyloggers or data interceptors, to capture administrative credentials or monitor data streams. Such implants can provide persistent backdoor access, enabling long-term surveillance or data exfiltration from ML training and inference pipelines.

In summary, physical attacks on machine learning systems threaten both security and reliability across a wide range of deployment environments. Addressing these risks requires a combination of hardware-level protections, tamper detection mechanisms, and supply chain integrity checks. Without these safeguards, even the most secure software defenses may be undermined by vulnerabilities introduced through direct physical manipulation.
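One of the simplest integrity checks is to compare a stored artifact, such as firmware or a model file, against a digest recorded when the device was provisioned. The sketch below uses Python's standard `hashlib` and `hmac` modules. The function names and the sample byte strings are assumptions made for illustration. The check only helps if the reference digest is kept somewhere an attacker with physical access cannot rewrite, and it does not detect hardware implants.

```python
import hashlib
import hmac


def sha256_hex(blob: bytes) -> str:
    """Return the SHA-256 digest of an artifact as a hex string."""
    return hashlib.sha256(blob).hexdigest()


def verify_artifact(blob: bytes, expected_hex: str) -> bool:
    """Check an artifact against a trusted digest using a constant-time compare."""
    return hmac.compare_digest(sha256_hex(blob), expected_hex)


if __name__ == "__main__":
    firmware = b"example firmware image"    # placeholder contents
    trusted_digest = sha256_hex(firmware)   # recorded at provisioning time

    tampered = firmware + b"\x00"           # a single appended byte
    print(verify_artifact(firmware, trusted_digest))   # True
    print(verify_artifact(tampered, trusted_digest))   # False
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not depend on how many leading characters match.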

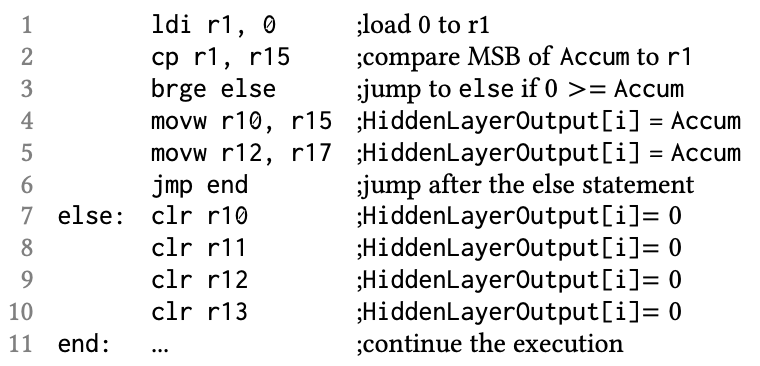

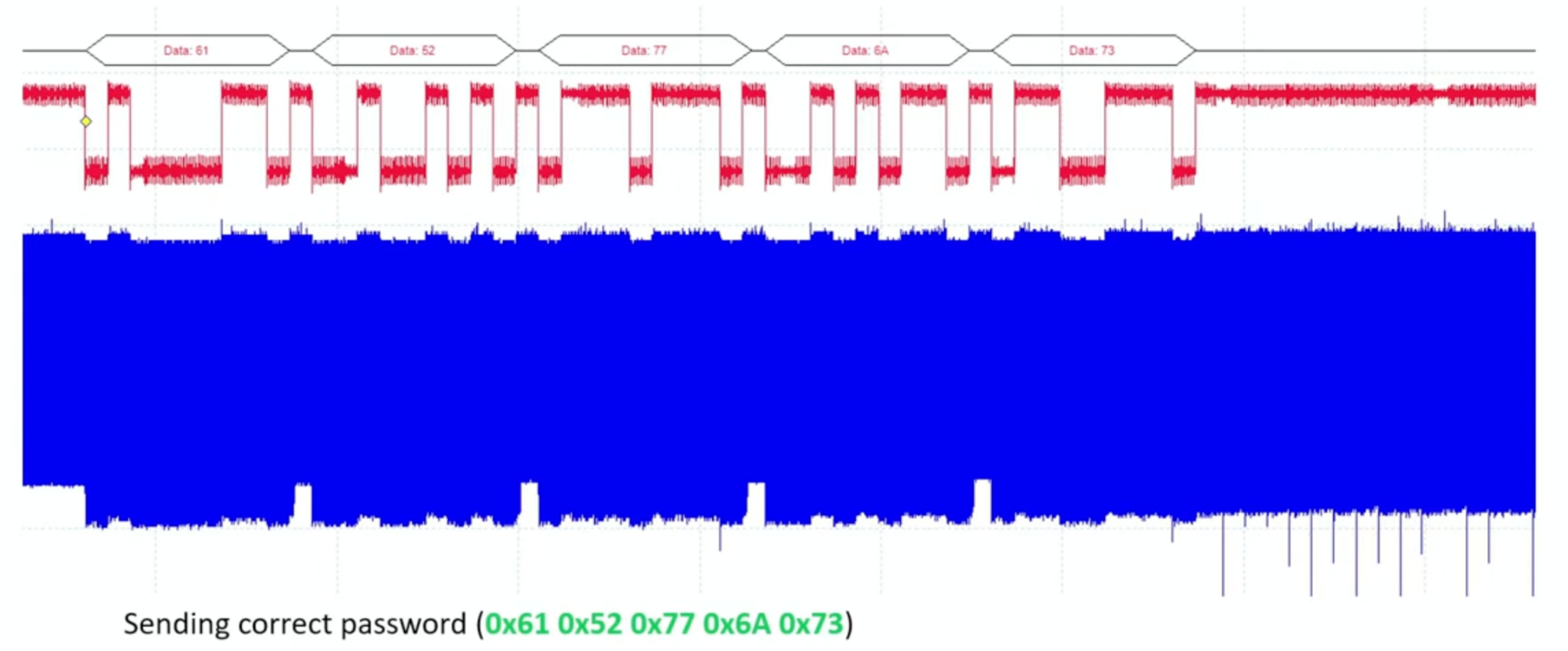

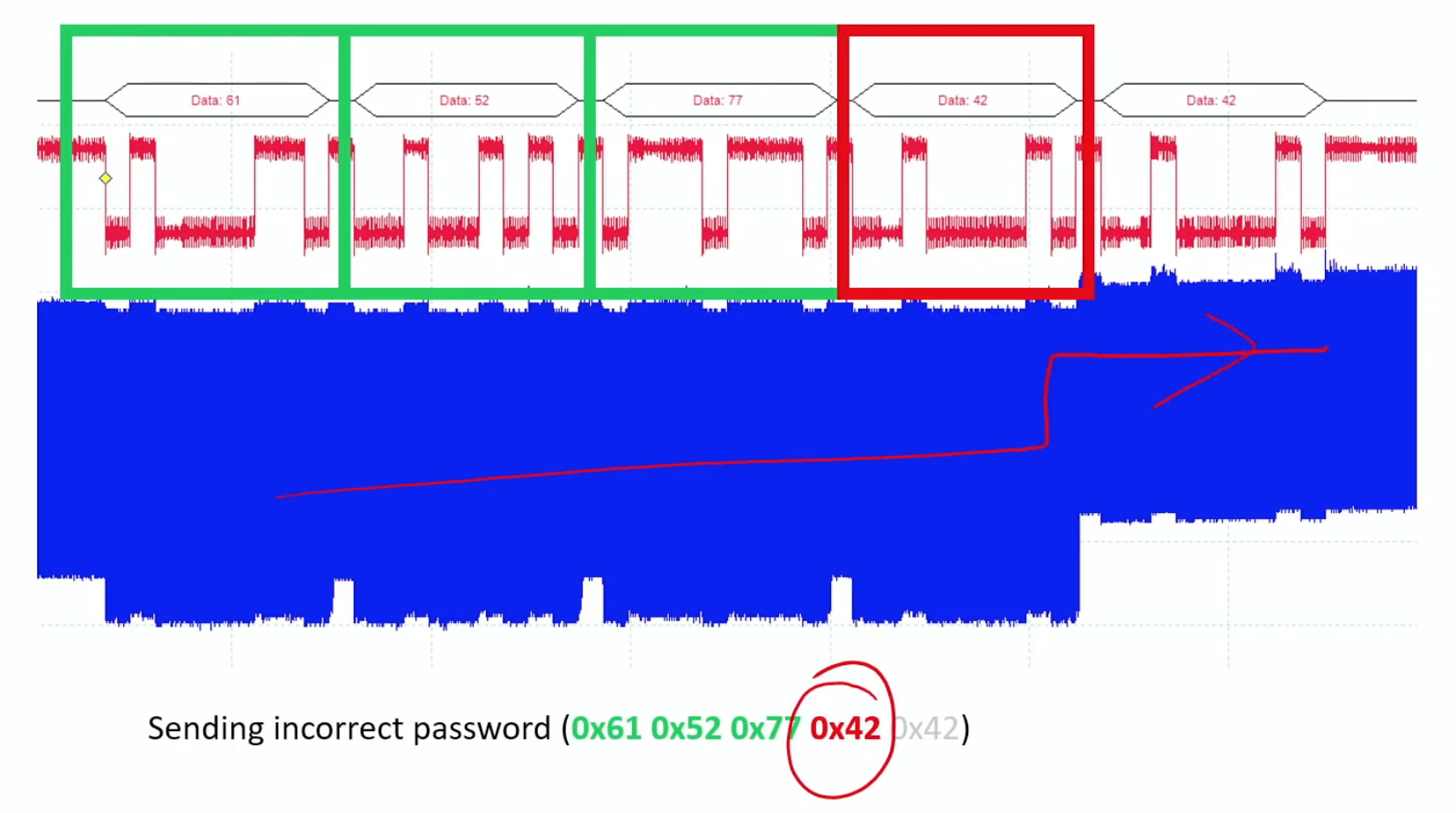

Fault Injection Attacks

Building on physical tampering techniques, fault injection represents a more sophisticated approach to hardware exploitation. Fault injection is a powerful class of physical attack that deliberately disrupts hardware operations to induce errors in computation. These induced faults can compromise the integrity of machine learning models by causing them to produce incorrect outputs, behave unreliably, or leak sensitive information. For ML systems, such faults not only disrupt inference but also expose models to deeper exploitation, including reverse engineering and bypass of security protocols (Joye and Tunstall 2012).