Model Optimizations

DALL·E 3 Prompt: Illustration of a neural network model represented as a busy construction site, with a diverse group of construction workers, both male and female, of various ethnicities, labeled as ‘pruning’, ‘quantization’, and ‘sparsity’. They are working together to make the neural network more efficient and smaller, while maintaining high accuracy. The ‘pruning’ worker, a Hispanic female, is cutting unnecessary connections from the middle of the network. The ‘quantization’ worker, a Caucasian male, is adjusting or tweaking the weights all over the place. The ‘sparsity’ worker, an African female, is removing unnecessary nodes to shrink the model. Construction trucks and cranes are in the background, assisting the workers in their tasks. The neural network is visually transforming from a complex and large structure to a more streamlined and smaller one.

Purpose

How does the mismatch between research-optimized models and production deployment constraints create critical engineering challenges in machine learning systems?

Machine learning research often prioritizes accuracy above other considerations, producing models with remarkable performance that cannot be deployed where they are needed most: resource-constrained mobile devices, cost-sensitive cloud environments, or latency-critical edge applications. Model optimization bridges theoretical capability and practical deployment, transforming computationally intensive research models into efficient systems that preserve performance while meeting stringent constraints on memory, energy, latency, and cost. Without systematic optimization techniques, advanced AI capabilities remain trapped in research laboratories. Understanding optimization principles enables engineers to democratize AI capabilities by making sophisticated models accessible across diverse deployment contexts, from billion-parameter language models running on mobile devices to compact models running on embedded sensors.

Compare model optimization techniques including pruning, quantization, knowledge distillation, and neural architecture search in terms of their mechanisms and applications

Evaluate trade-offs between numerical precision levels and their effects on model accuracy, energy consumption, and hardware compatibility

Apply the tripartite optimization framework (model representation, numerical precision, architectural efficiency) to design deployment strategies for specific hardware constraints

Analyze how hardware-aware design principles influence model architecture decisions and computational efficiency across different deployment platforms

Implement sparsity exploitation and dynamic computation techniques to improve inference performance while managing accuracy preservation

Design integrated optimization pipelines that combine multiple techniques to achieve specific deployment objectives within resource constraints

Assess automated optimization approaches and their role in discovering novel optimization strategies beyond manual tuning

Model Optimization Fundamentals

Successful deployment of machine learning systems requires addressing the tension between model sophistication and computational feasibility. Contemporary research in machine learning has produced increasingly powerful models whose resource demands often exceed the practical constraints of real-world deployment environments. This represents the classic engineering challenge of translating theoretical advances into viable systems, affecting the accessibility and scalability of machine learning applications.

The magnitude of this resource gap is substantial and multifaceted. State-of-the-art language models may require several hundred gigabytes of memory for full-precision parameter storage (Brown et al. 2020; Chowdhery et al. 2022), while target deployment platforms such as mobile devices typically provide only a few gigabytes of available memory. This disparity extends beyond memory constraints to encompass computational throughput, energy consumption, and latency requirements. The challenge is further compounded by the heterogeneous nature of deployment environments, each imposing distinct constraints and performance requirements.
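The size of this gap follows from simple arithmetic: parameter storage is the parameter count multiplied by the bytes used per parameter. The sketch below is illustrative only. The helper name `param_memory_gb` and the precision table are assumptions made for this example, and the calculation covers weights alone, ignoring activations, optimizer state, and runtime overhead.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def param_memory_gb(num_params, precision):
    """Parameter storage in gigabytes (1 GB = 1e9 bytes), weights only."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


gpt3_params = 175e9  # parameter count reported for GPT-3 (Brown et al. 2020)
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {param_memory_gb(gpt3_params, precision):.1f} GB")
```

At FP32 a 175B-parameter model needs 700 GB for its weights, and even at 4 bits per weight it needs 87.5 GB, still far beyond the few gigabytes available on a typical mobile device.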

Production machine learning systems operate within a complex optimization landscape characterized by multiple, often conflicting, performance objectives. Real-time applications impose strict latency bounds, mobile deployments require energy efficiency to preserve battery life, embedded systems must operate within thermal constraints, and cloud services demand cost-effective resource utilization at scale. These constraints collectively define a multi-objective optimization problem that requires systematic approaches to achieve satisfactory solutions across all relevant performance dimensions.

The engineering discipline of model optimization has evolved to address these challenges through systematic methodologies that integrate algorithmic innovation with hardware-aware design principles. Effective optimization strategies require deep understanding of the interactions between model architecture, numerical precision, computational patterns, and target hardware characteristics. This interdisciplinary approach transforms optimization from an ad hoc collection of techniques into a principled engineering discipline guided by theoretical foundations and empirical validation.

This chapter establishes a comprehensive theoretical and practical framework for model optimization organized around three interconnected dimensions: structural efficiency in model representation, numerical efficiency through precision optimization, and computational efficiency via hardware-aware implementation. Through this framework, we examine how established techniques such as quantization achieve memory reduction and inference acceleration, how pruning methods eliminate parameter redundancy while preserving model accuracy, and how knowledge distillation enables capability transfer from complex models to efficient architectures. The overarching objective transcends simple performance metrics to enable the deployment of sophisticated machine learning capabilities across the complete spectrum of computational environments and application domains.

Optimization Framework

The optimization process operates through three interconnected dimensions that bridge software algorithms and hardware execution, as illustrated in Figure 1. Understanding these dimensions and their relationships provides the conceptual foundation for all techniques explored in this chapter.

The interactions among these dimensions reveal the systematic nature of optimization engineering. Model representation techniques (pruning, distillation, structured approximations) reduce computational complexity while creating opportunities for numerical precision optimization. Quantization and reduced-precision arithmetic exploit hardware capabilities for faster execution, while architectural efficiency techniques align computation patterns with processor designs. Software optimizations establish the foundation for hardware acceleration by creating structured, predictable workloads that specialized processors can execute efficiently.

This chapter examines each optimization layer through an engineering lens, providing specific algorithms for quantization (post-training and quantization-aware training), pruning strategies (magnitude-based, structured, and dynamic), and distillation procedures (temperature scaling, feature transfer). We explore how these techniques combine synergistically and how their effectiveness depends on target hardware characteristics. The framework guides systematic optimization decisions, ensuring that model transformations align with deployment constraints while preserving essential capabilities.

This chapter transforms the efficiency concepts from earlier foundations into actionable engineering practices through systematic application of optimization principles. Mastery of quantization, pruning, and distillation techniques provides practitioners with the essential tools for deploying sophisticated machine learning models across diverse computational environments. The optimization framework presented bridges the gap between theoretical model capabilities and practical deployment requirements, enabling machine learning systems that deliver both performance and efficiency in real-world applications.

Deployment Context

Machine learning models operate as part of larger systems with complex constraints, dependencies, and trade-offs. Model optimization cannot be treated as a purely algorithmic problem; it must be viewed as a systems-level challenge that considers computational efficiency, scalability, deployment feasibility, and overall system performance. Operational principles from Chapter 13: ML Operations provide the foundation for understanding the systems perspective on model optimization, highlighting why optimization is important, the key constraints that drive optimization efforts, and the principles that define an effective optimization strategy.

Practical Deployment

Modern machine learning models often achieve impressive accuracy on benchmark datasets, but making them practical for real-world use is far from trivial. Machine learning systems operate under computational, memory, latency, and energy constraints that significantly impact both training and inference (Choudhary et al. 2020). Models that perform well in research settings may prove impractical when integrated into broader systems, whether the target is a cloud environment, a smartphone, or a microcontroller.

1 Microcontroller Constraints: Arduino Uno has 2KB SRAM vs. 32KB flash storage. ARM Cortex-M4 implementations typically have 256KB flash, 64KB RAM, running at up to 168MHz vs. modern GPUs with 3000+ MHz clocks and 16-80GB memory, representing a 10,000x+ resource gap.

Beyond these deployment complexities, real-world feasibility encompasses efficiency in training, storage, and execution rather than accuracy alone.1

Efficiency requirements manifest differently across deployment contexts. In large-scale cloud ML settings, optimizing models helps minimize training time, computational cost, and power consumption, making large-scale AI workloads more efficient (Dean, Patterson, and Young 2018). In contrast, edge ML2 requires models to run with limited compute resources, necessitating optimizations that reduce memory footprint and computational complexity. Mobile ML introduces additional constraints, such as battery life and real-time responsiveness, while tiny ML3 pushes efficiency to the extreme, requiring models to fit within the memory and processing limits of ultra-low-power devices (Banbury et al. 2020).

2 Edge ML: Computing paradigm where ML inference occurs on local devices (smartphones, IoT sensors, autonomous vehicles) rather than cloud servers. Reduces latency from 100-500ms cloud round-trip to <10ms local processing, but constrains models to 10-500MB vs. multi-GB cloud models.

3 Tiny ML: Ultra-low-power ML systems operating under 1mW power budget with <1MB memory. Enables always-on AI in hearing aids, smart sensors, and wearables. Models typically 10-100KB vs. GB-scale cloud models, representing 10,000x size reduction.

Optimization contributes to sustainable and accessible AI deployment, following sustainability principles established in Chapter 18: Sustainable AI. Reducing a model’s energy footprint is important as AI workloads scale, helping mitigate the environmental impact of large-scale ML training and inference (Patterson et al. 2021). At the same time, optimized models can expand the reach of machine learning, supporting applications in low-resource environments, from rural healthcare to autonomous systems operating in the field.

Balancing Trade-offs

The tension between accuracy and efficiency drives optimization decisions across all dimensions. Increasing model capacity generally enhances predictive performance while increasing computational cost, resulting in slower, more resource-intensive inference. These improvements introduce challenges related to memory footprint4, inference latency, power consumption, and training efficiency. As machine learning systems are deployed across a wide range of hardware platforms, balancing accuracy and efficiency becomes a key challenge in model optimization.

4 Memory Bandwidth: Modern GPUs achieve 3.0 TB/s memory bandwidth (H100 SXM5) vs. 25-50 GB/s for high-end mobile SoCs. Large language models require 1-2x model size in GPU memory for training (16GB model needs 32GB+ GPU memory), creating the “memory wall” bottleneck.

This tension manifests differently across deployment contexts. Training requires computational resources that scale with model size, while inference demands strict latency and power constraints in real-time applications.

Optimization Dimensions

Each optimization dimension merits detailed examination. As shown in Figure 1, model representation optimization reduces what computations are performed, numerical precision optimization changes how computations are executed, and architectural efficiency optimization ensures operations run efficiently on target hardware.

Model Representation

The first dimension, model representation optimization, focuses on eliminating redundancy in the structure of machine learning models. Large models often contain excessive parameters5 that contribute little to overall performance but significantly increase memory footprint and computational cost. Optimizing model representation involves techniques that remove unnecessary components while maintaining predictive accuracy. These include pruning, knowledge distillation, and automated architecture search methods that refine model structures to balance efficiency and accuracy. These optimizations primarily impact how models are designed at an algorithmic level, ensuring that they remain effective while being computationally manageable.

5 Overparameterization: Modern neural networks typically have 10-100x more parameters than theoretically needed. GPT-3’s 175B parameters could theoretically be compressed to 1-10B while maintaining 95% performance, but overparameterization enables faster training convergence and better generalization during the learning process.

Numerical Precision

The second dimension, numerical precision optimization, addresses how numerical values are represented and processed within machine learning models. While representation techniques modify what computations are performed, precision optimization changes how those computations are executed by reducing the numerical fidelity of weights, activations, and arithmetic operations. Quantization techniques map high-precision weights and activations to lower-bit representations, enabling efficient execution on hardware accelerators such as GPUs, TPUs, and specialized AI chips (Chapter 11: AI Acceleration). Mixed-precision training6 dynamically adjusts precision levels during training to strike a balance between efficiency and accuracy.

6 Mixed-Precision Training: Performs most forward- and backward-pass arithmetic in FP16 while keeping an FP32 master copy of the weights for updates, typically with loss scaling to prevent gradient underflow. This achieves 1.5-2x training speedup with ~50% memory reduction. NVIDIA’s automatic mixed precision (AMP) maintains FP32 accuracy while delivering approximately 1.6x speedup on V100 and up to 2.2x on A100 GPUs.

Careful numerical precision optimization enables significant computational cost reductions while maintaining acceptable accuracy levels, making sophisticated models usable in resource-constrained environments.
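To make the idea of mapping high-precision values to lower-bit representations concrete, the following is a minimal sketch of symmetric per-tensor INT8 quantization. It is one common scheme among several and is not presented as the method used by any particular framework. The function names and the sample weights are assumptions chosen for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integer codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # real value of one integer step
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]


weights = [0.8, 0.1, -0.7, 0.05, -0.9]  # illustrative values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("codes:", codes)
print("scale:", scale, "max reconstruction error:", max_error)
```

Each weight is stored in one byte instead of four, and the reconstruction error of any single value is bounded by half a quantization step (`scale / 2`). That bound is why accuracy often degrades gracefully at 8 bits.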

Architectural Efficiency

The third dimension, architectural efficiency, addresses how efficiently computations are performed during training and inference. A well-designed model structure is insufficient if its execution remains suboptimal. Many machine learning models contain redundancies in their computational graphs, leading to inefficiencies in how operations are scheduled and executed. Sparsity7 represents a key architectural efficiency technique where models exploit zero-valued parameters to reduce computation.

7 Sparsity: Fraction of zero-valued parameters in a model. 90% sparse models have only 10% non-zero weights, reducing memory and computation by up to 10x (with compact sparse storage and specialized hardware). Modern transformers naturally exhibit 80-95% activation sparsity during inference.

8 Matrix Factorization: Decomposes large weight matrices (e.g., 4096×4096) into smaller matrices (4096×256 × 256×4096), reducing parameters from 16M to 2M (8x reduction). SVD and low-rank approximations maintain 95%+ accuracy while enabling 3-5x speedup on mobile hardware.

Architectural efficiency involves techniques that exploit sparsity in both model weights and activations, factorize large computational components into more efficient forms8, and dynamically adjust computation based on input complexity.
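The parameter savings from factorization can be checked with a few lines of arithmetic. This sketch reproduces the 4096×4096 example with rank 256 from the matrix factorization footnote. The helper names are assumptions for illustration, and the calculation counts parameters only, saying nothing about the accuracy of the approximation.

```python
def dense_params(rows, cols):
    """Parameters in a full rows x cols weight matrix."""
    return rows * cols


def low_rank_params(rows, cols, rank):
    """Parameters when W (rows x cols) is replaced by A (rows x rank) @ B (rank x cols)."""
    return rows * rank + rank * cols


full = dense_params(4096, 4096)
factored = low_rank_params(4096, 4096, 256)
print(full, factored, full / factored)
```

The factored form holds about 2.1M parameters against about 16.8M for the dense matrix, an 8x reduction. Factorization only saves parameters when the rank is below `rows * cols / (rows + cols)`, which is 2048 for this square matrix.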

These architectural optimization methods improve execution efficiency across different hardware platforms, reducing latency and power consumption. These efficiency principles extend naturally to training scenarios, where techniques such as gradient checkpointing and low-rank adaptation9 help reduce memory overhead and computational demands.

9 LoRA (Low-Rank Adaptation): Fine-tuning technique that freezes pretrained weights and adds small trainable matrices, reducing trainable parameters by over 99% (from 175B to approximately 1.2M for GPT-3 scale). Achieves comparable performance while reducing training memory and computation by 3x.

Three-Dimensional Optimization Framework

The interconnected nature of this three-dimensional framework emerges when examining technique interactions. Pruning primarily addresses model representation but also affects architectural efficiency by reducing inference operations. Quantization focuses on numerical precision but impacts memory footprint and execution efficiency. Understanding these interdependencies enables effective combinations of optimization techniques.

This interconnected nature means that the choice of optimizations is driven by system constraints, which define the practical limitations within which models must operate. A machine learning model deployed in a data center has different constraints from one running on a mobile device or an embedded system. Computational cost, memory usage, inference latency, and energy efficiency all influence which optimizations are most appropriate for a given scenario. A model that is too large for a resource-constrained device may require aggressive pruning and quantization, while a latency-sensitive application may benefit from operator fusion10 and hardware-aware scheduling.

10 Operator Fusion: Graph-level optimization that combines multiple operations into single kernels, reducing memory bandwidth by 30-50%. In ResNet-50, fusing Conv+BatchNorm+ReLU operations achieves 1.8x speedup on V100 GPUs, while BERT transformer blocks show 25% latency reduction through attention fusion.
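One ingredient of Conv+BatchNorm+ReLU fusion is folding the inference-time batch normalization transform into the preceding layer's weights and bias, so that three operations collapse into one multiply-accumulate followed by a clamp. The sketch below shows that algebra for a single output unit, using a dot product as a stand-in for a convolution. All names and numeric values are hypothetical, and production compilers perform this rewrite at the graph and kernel level rather than in Python.

```python
import math


def linear(x, w, b):
    """One output unit: dot product plus bias (stand-in for one conv output)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch norm using fixed running statistics."""
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


def fold_batch_norm(w, b, gamma, beta, mean, var, eps=1e-5):
    """Return weights and bias that compute linear + batch norm in one step."""
    factor = gamma / math.sqrt(var + eps)
    return [wi * factor for wi in w], (b - mean) * factor + beta


# Hypothetical layer parameters and input.
x = [1.0, 2.0, -1.0]
w, b = [0.5, -0.25, 0.1], 0.2
gamma, beta, mean, var = 1.5, -0.1, -0.3, 0.04

unfused = max(0.0, batch_norm(linear(x, w, b), gamma, beta, mean, var))
w_folded, b_folded = fold_batch_norm(w, b, gamma, beta, mean, var)
fused = max(0.0, linear(x, w_folded, b_folded))
print(unfused, fused)
```

Both paths produce the same value up to floating-point rounding, but the fused path reads and writes the intermediate activation once instead of three times, which is where the memory bandwidth savings come from.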

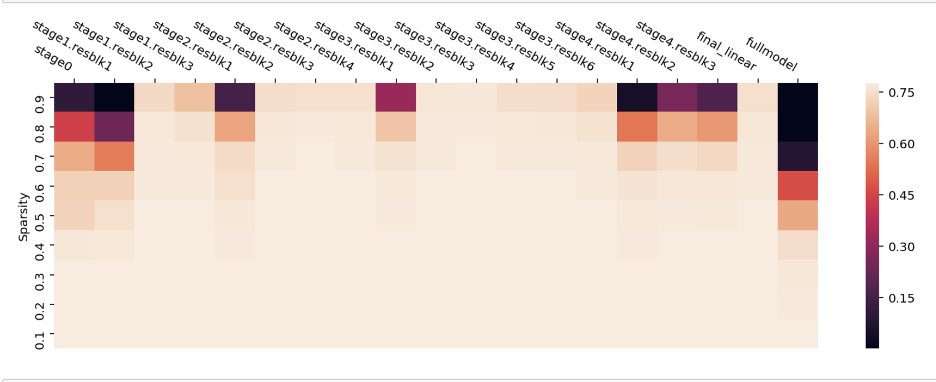

The constraint-dimension mapping established in Table 1 demonstrates interdependence between optimization strategies and real-world constraints. These relationships extend beyond one-to-one correspondence, as many optimization techniques affect multiple constraints simultaneously.

Systematic examination of each dimension begins with model representation optimization, encompassing techniques that modify neural network structure and parameters to eliminate redundancy while preserving accuracy.

Structural Model Optimization Methods

Model representation optimization modifies neural network structure and parameters to improve efficiency while preserving accuracy. Modern models often prioritize accuracy over efficiency, containing excessive parameters that increase costs and slow inference. This optimization addresses inefficiencies through two objectives: eliminating redundancy (exploiting overparameterization where models achieve similar performance with fewer parameters) and structuring computations for efficient hardware execution through techniques like gradient checkpointing11 and parallel processing patterns12.

11 Gradient Checkpointing: Memory optimization technique that trades computation for memory by recomputing intermediate activations during backpropagation instead of storing them. Reduces memory usage by 20-50% in transformer models, enabling larger batch sizes or model sizes within same GPU memory.

12 Parallel Processing in ML: High-end datacenter GPUs have 5,000-10,000+ cores vs. CPU’s 8-64 cores. NVIDIA H100 delivers 989 TFLOPS tensor performance vs. Intel Xeon 3175-X’s ~1.5 TFLOPS (double precision), representing a 650x compute density advantage for parallelizable ML workloads.

13 Pareto Frontier: In model optimization, the curve where improving one metric (speed) requires sacrificing another (accuracy). EfficientNet family demonstrates optimal accuracy-FLOPS trade-offs: EfficientNet-B0 (77.1% ImageNet, 390M FLOPs) to B7 (84.4%, 37B FLOPs), showing diminishing returns at scale.

The optimization challenge lies in balancing competing constraints13. Aggressive compression risks accuracy degradation that renders models unreliable for production use, while insufficient optimization leaves models too large or slow for target deployment environments. Selecting appropriate techniques requires understanding trade-offs between model size, computational complexity, and generalization performance.
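The Pareto frontier mentioned in the footnote can be computed directly: a candidate stays on the frontier if no other candidate is at least as accurate and at least as cheap while being strictly better on one of the two. In this sketch the two EfficientNet entries use the figures quoted in the footnote, while the other two candidates are hypothetical values added only to exercise the logic.

```python
def pareto_frontier(candidates):
    """Names of candidates not dominated on (higher accuracy, lower FLOPs)."""
    frontier = []
    for name, acc, flops in candidates:
        dominated = any(
            o_acc >= acc and o_flops <= flops and (o_acc > acc or o_flops < flops)
            for _, o_acc, o_flops in candidates
        )
        if not dominated:
            frontier.append(name)
    return frontier


candidates = [
    ("EfficientNet-B0", 77.1, 0.39e9),  # figures from the footnote
    ("EfficientNet-B7", 84.4, 37e9),    # figures from the footnote
    ("hypothetical-A", 76.0, 1.0e9),    # less accurate and costlier than B0
    ("hypothetical-B", 80.0, 5.0e9),    # sits between B0 and B7
]
frontier = pareto_frontier(candidates)
print(frontier)
```

Here `hypothetical-A` drops out because EfficientNet-B0 beats it on both axes, while the remaining three represent genuine trade-offs that a deployment constraint must choose between.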

Three key techniques address this challenge: pruning eliminates low-impact parameters, knowledge distillation transfers capabilities to smaller models, and NAS automates architecture design for specific constraints. Each technique offers distinct optimization pathways while maintaining model performance.

These three techniques represent distinct but complementary approaches within our optimization framework. Pruning and knowledge distillation reduce redundancy in existing models, while NAS addresses building optimized architectures from the ground up. In many cases, they can be combined to achieve even greater optimization.

Pruning

The memory wall constrains system performance: as models grow larger, memory bandwidth becomes the bottleneck rather than computational capacity. Pruning directly addresses this constraint by lowering memory requirements through parameter elimination. State-of-the-art machine learning models often contain millions or billions of parameters, many of which contribute minimally to final predictions. While large models enhance representational power and generalization, they also introduce inefficiencies in memory footprint, computational cost, and scalability that impact both training and deployment across cloud, edge, and mobile environments.

Parameter necessity for accuracy maintenance varies considerably. Many weights contribute minimally to decision-making processes, enabling significant efficiency improvements through removal without substantial performance degradation. This redundancy exists because modern neural networks are heavily overparameterized, meaning they have far more weights than are strictly necessary to solve a task. This overparameterization serves important purposes during training by providing multiple optimization paths and helping avoid poor local minima, but it creates opportunities for compression during deployment. Model compression preserves performance through information-theoretic principles from Chapter 3: Deep Learning Primer, where neural networks’ overparameterization creates compression opportunities. This observation motivates pruning, a class of optimization techniques that systematically removes redundant parameters while preserving model accuracy.

Pruning enables models to become smaller, faster, and more efficient without requiring architectural redesign. By eliminating redundancy, pruning directly addresses the memory, computation, and scalability constraints of machine learning systems, making it essential for deploying models across diverse hardware platforms.

Modern frameworks provide built-in APIs that make these optimization techniques readily accessible. PyTorch offers torch.nn.utils.prune for pruning operations, while TensorFlow provides the Model Optimization Toolkit14 with functions like tfmot.sparsity.keras.prune_low_magnitude(). These tools transform complex research algorithms into practical function calls, making optimization achievable for practitioners at all levels.

14 TensorFlow Model Optimization: TensorFlow Model Optimization Toolkit provides production-ready quantization (achieving 4x model size reduction), pruning (up to 90% sparsity), and clustering techniques. Used by YouTube, Gmail, and Google Photos to deploy models on 4+ billion devices worldwide.

Pruning Example

Pruning can be illustrated through a systematic example. Pruning identifies weights contributing minimally to model predictions and removes them while maintaining accuracy. The most intuitive approach examines weight magnitudes, as weights with small absolute values typically have minimal impact on outputs, making them candidates for removal.

Listing 1 demonstrates magnitude-based pruning on a 3×3 weight matrix, showing how a simple threshold rule creates sparsity.

```python
import torch
import torch.nn.utils.prune as prune

# Original dense weight matrix
weights = torch.tensor(
    [[0.8, 0.1, -0.7], [0.05, -0.9, 0.03], [-0.6, 0.02, 0.4]]
)

# Simple magnitude-based pruning: remove weights with magnitude < 0.5
threshold = 0.5
mask = torch.abs(weights) >= threshold
pruned_weights = weights * mask

print("Original:", weights)
print("Pruned:", pruned_weights)
# Result: 4 out of 9 weights remain (56% sparsity)
```

This example illustrates the core pruning objective: minimize the number of parameters while maintaining model performance. We reduced nonzero parameters from 9 to 4 (keeping only 4 weights, hence a budget of \(k=4\)). The weights with smallest magnitudes (0.4, 0.1, 0.05, 0.03, 0.02) were removed, while the four largest magnitude weights (0.8, -0.7, -0.9, -0.6) were preserved.

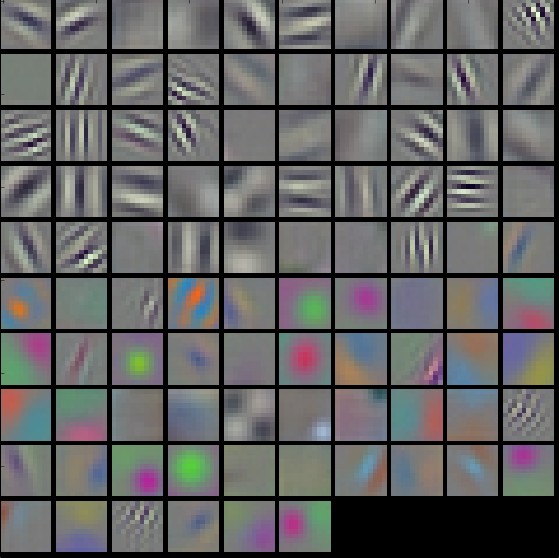

Extending this intuition to full neural networks requires considering both how many parameters to remove (the sparsity level) and which parameters to remove (the selection criterion). The next visualization shows this applied to larger weight matrices.

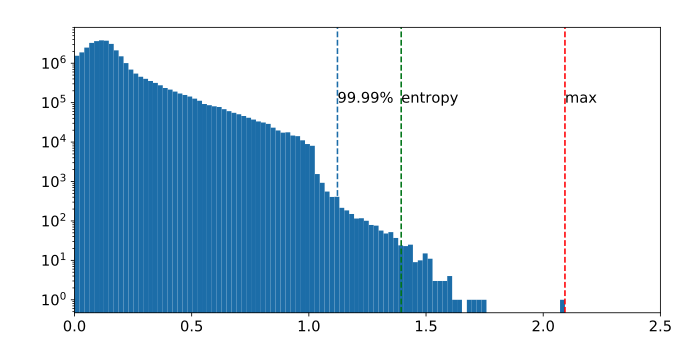

As illustrated in Figure 2, pruning reduces the number of nonzero weights by eliminating small-magnitude values, transforming a dense weight matrix into a sparse representation. This explicit enforcement of sparsity aligns with the \(\ell_0\)-norm constraint in our optimization formulation.

Mathematical Formulation

The goal of pruning can be stated simply: we want to find the version of our model that has the fewest non-zero weights (the smallest size) while causing the smallest possible increase in the prediction error (the loss). This intuitive goal translates into a mathematical optimization problem that guides practical pruning algorithms.

The pruning process can be formalized as an optimization problem. Given a trained model with parameters \(W\), we seek a sparse version \(\hat{W}\) that retains only the most important parameters. The objective is expressed as:

\[ \min_{\hat{W}} \mathcal{L}(\hat{W}) \quad \text{subject to} \quad \|\hat{W}\|_0 \leq k \]

where \(\mathcal{L}(\hat{W})\) represents the model’s loss function after pruning, \(\hat{W}\) denotes the pruned model’s parameters, \(\|\hat{W}\|_0\) is the L0-norm (number of nonzero parameters), and \(k\) is the parameter budget constraining maximum model size.

The L0-norm directly measures model size by counting nonzero parameters, which determines memory usage and computational cost. However, L0-norm minimization is NP-hard, making this optimization challenging. Practical pruning algorithms use heuristics like magnitude-based selection, gradient-based importance, or second-order sensitivity to approximate solutions efficiently.

In Listing 1, this constraint becomes concrete: we reduced \(\|\hat{W}\|_0\) from 9 to 4 (satisfying \(k=4\)), with the magnitude threshold acting as our selection heuristic. Alternative formulations using L1 or L2 norms encourage small weights but don’t guarantee exact zeros, failing to reduce actual memory or computation without explicit thresholding.

To make pruning computationally feasible, practical methods replace the hard constraint with a soft regularization term: \[ \min_W \mathcal{L}(W) + \lambda \| W \|_1 \] where \(\lambda\) controls sparsity degree. The \(\ell_1\)-norm encourages smaller weight values and promotes sparsity but does not strictly enforce zero values. Other methods use iterative heuristics, where parameters with smallest magnitudes are pruned in successive steps, followed by fine-tuning to recover lost accuracy (Gale, Elsen, and Hooker 2019a; Labarge, n.d.).
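The difference between the hard \(\ell_0\) constraint and the soft \(\ell_1\) penalty can be seen numerically on the weights from Listing 1. This is a small sketch under stated assumptions: the task loss of 0.25 and \(\lambda = 0.01\) are hypothetical values, and the helper names are chosen for this example only.

```python
def l0_norm(weights):
    """Number of nonzero parameters."""
    return sum(1 for w in weights if w != 0.0)


def l1_norm(weights):
    """Sum of absolute parameter values."""
    return sum(abs(w) for w in weights)


def regularized_objective(task_loss, weights, lam):
    """Soft-constrained objective: L(W) + lambda * ||W||_1."""
    return task_loss + lam * l1_norm(weights)


# Flattened weights from Listing 1.
weights = [0.8, 0.1, -0.7, 0.05, -0.9, 0.03, -0.6, 0.02, 0.4]

objective = regularized_objective(0.25, weights, lam=0.01)  # hypothetical loss, lambda
thresholded = [w if abs(w) >= 0.5 else 0.0 for w in weights]
print("L1 norm:", l1_norm(weights), "objective:", objective)
print("L0 before:", l0_norm(weights), "L0 after thresholding:", l0_norm(thresholded))
```

The penalty makes small weights cheap to shrink, yet the \(\ell_0\) count stays at 9 until an explicit thresholding step sets entries exactly to zero, which is why regularization is usually paired with a pruning step.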

Target Structures

Pruning methods vary based on which structures within a neural network are removed. The primary targets include neurons, channels, and layers, each with distinct implications for the model’s architecture and performance.

Neuron pruning removes entire neurons along with their associated weights and biases, reducing the width of a layer. This technique is often applied to fully connected layers.

Channel pruning (or filter pruning), commonly used in convolutional neural networks, eliminates entire channels or filters. This reduces the depth of feature maps, which impacts the network’s ability to extract certain features. Channel pruning is particularly valuable in image-processing tasks where computational efficiency is a priority.

Layer pruning removes entire layers from the network, significantly reducing depth. While this approach can yield significant efficiency gains, it requires careful balance to ensure the model retains sufficient capacity to capture complex patterns.

Figure 3 illustrates the differences between channel pruning and layer pruning. When a channel is pruned, the model’s architecture must be adjusted to accommodate the structural change. Specifically, the number of input channels in subsequent layers must be modified, requiring alterations to the depths of the filters applied to the layer with the removed channel. In contrast, layer pruning removes all channels within a layer, necessitating more significant architectural modifications. In this case, connections between remaining layers must be reconfigured to bypass the removed layer. Regardless of the pruning approach, fine-tuning is important to adapt the remaining network and restore performance.
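The same bookkeeping applies in the simpler fully connected case: removing a hidden neuron deletes its row of incoming weights and its bias, and the next layer must drop the matching input column. The sketch below shows this for a two-layer network stored as nested lists. The function name, the layout convention, and the weight values are assumptions made for illustration, and the index to prune is simply given rather than chosen by an importance score.

```python
def prune_neuron(w1, b1, w2, idx):
    """Remove hidden neuron `idx` from a two-layer fully connected network.

    w1: hidden x inputs (one row per hidden neuron)
    b1: one bias per hidden neuron
    w2: outputs x hidden (one column per hidden neuron)
    """
    new_w1 = [row for i, row in enumerate(w1) if i != idx]
    new_b1 = [b for i, b in enumerate(b1) if i != idx]
    # The next layer loses the input that the removed neuron used to feed.
    new_w2 = [[v for j, v in enumerate(row) if j != idx] for row in w2]
    return new_w1, new_b1, new_w2


# Hypothetical network: 2 inputs, 3 hidden neurons, 1 output.
w1 = [[0.8, -0.7], [0.05, 0.03], [-0.6, 0.4]]
b1 = [0.1, 0.0, -0.2]
w2 = [[0.5, -0.2, 0.3]]

w1_p, b1_p, w2_p = prune_neuron(w1, b1, w2, idx=1)
print(len(w1_p), "hidden neurons remain; next layer weights:", w2_p)
```

The result is a smaller dense network rather than a sparse one, which is why fine-tuning afterward operates on ordinary dense layers.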

Unstructured Pruning

Unstructured pruning removes individual weights while preserving the overall network architecture. During training, some connections become redundant, contributing little to the final computation. Pruning these weak connections reduces memory requirements while preserving most of the model’s accuracy.

Mathematically, unstructured pruning introduces sparsity into the weight matrices of a neural network. Let \(W \in \mathbb{R}^{m \times n}\) represent a weight matrix in a given layer of a network. Pruning removes a subset of weights by applying a binary mask \(M \in \{0,1\}^{m \times n}\), yielding a pruned weight matrix: \[ \hat{W} = M \odot W \] where \(\odot\) represents the element-wise Hadamard product. The mask \(M\) is constructed based on a pruning criterion, typically weight magnitude. A common approach is magnitude-based pruning, which removes a fraction \(s\) of the lowest-magnitude weights. This is achieved by defining a threshold \(\tau\) such that: \[ M_{i,j} = \begin{cases} 1, & \text{if } |W_{i,j}| > \tau \\ 0, & \text{otherwise} \end{cases} \] where \(\tau\) is chosen to ensure that only the largest \((1 - s)\) fraction of weights remain. This method assumes that larger-magnitude weights contribute more to the network’s function, making them preferable for retention.
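The mask construction can be sketched by choosing \(\tau\) from a target sparsity \(s\) rather than fixing it by hand. The version below uses plain Python lists to stay self-contained. The function names are assumptions, the fraction is rounded down to a whole number of weights, and ties at the threshold may prune slightly more than requested.

```python
def magnitude_mask(weights, sparsity):
    """Build the binary mask M and threshold tau for a target sparsity s."""
    magnitudes = sorted(abs(w) for row in weights for w in row)
    num_pruned = int(sparsity * len(magnitudes))  # rounds down
    # tau is the largest magnitude that gets pruned; -1.0 prunes nothing.
    tau = magnitudes[num_pruned - 1] if num_pruned > 0 else -1.0
    mask = [[1 if abs(w) > tau else 0 for w in row] for row in weights]
    return mask, tau


def apply_mask(weights, mask):
    """Element-wise (Hadamard) product M * W."""
    return [[w * m for w, m in zip(w_row, m_row)]
            for w_row, m_row in zip(weights, mask)]


# Weight matrix from Listing 1.
weights = [[0.8, 0.1, -0.7], [0.05, -0.9, 0.03], [-0.6, 0.02, 0.4]]
mask, tau = magnitude_mask(weights, sparsity=0.5)
pruned = apply_mask(weights, mask)
print("tau:", tau)
print("pruned:", pruned)
```

With \(s = 0.5\) on nine weights, four are pruned, so \(\tau = 0.1\) and the weight 0.4 survives, unlike in Listing 1 where the fixed threshold of 0.5 removed it.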

The primary advantage of unstructured pruning is memory efficiency. By reducing the number of nonzero parameters, pruned models require less storage, which is particularly beneficial when deploying models to embedded or mobile devices with limited memory.

However, unstructured pruning does not necessarily improve computational efficiency on modern machine learning hardware. Standard GPUs and TPUs are optimized for dense matrix multiplications, and a sparse weight matrix often cannot fully utilize hardware acceleration unless specialized sparse computation kernels are available. Therefore, unstructured pruning primarily benefits model storage rather than inference acceleration. While unstructured pruning improves model efficiency at the parameter level, it does not alter the structural organization of the network.

Structured Pruning

While unstructured pruning removes individual weights from a neural network, structured pruning15 eliminates entire computational units, such as neurons, filters, channels, or layers. This approach is particularly beneficial for hardware efficiency, as it produces smaller dense models that can be directly mapped to modern machine learning accelerators. Unlike unstructured pruning, which results in sparse weight matrices that require specialized execution kernels to exploit computational benefits, structured pruning leads to more efficient inference on general-purpose hardware by reducing the overall size of the network architecture.

15 Structured Pruning: Filter pruning in ResNet-34 achieves 50% FLOP reduction with only 1.0% accuracy loss on CIFAR-10. Channel pruning in MobileNetV2 reduces parameters by 73% while maintaining 96.5% of original accuracy, enabling 3.2x faster inference on ARM processors.

Structured pruning is motivated by the observation that not all neurons, filters, or layers contribute equally to a model’s predictions. Some units primarily carry redundant or low-impact information, and removing them does not significantly degrade model performance. The challenge lies in identifying which structures can be pruned while preserving accuracy.

Figure 4 illustrates the key differences between unstructured and structured pruning. On the left, unstructured pruning removes individual weights (depicted as dashed connections), creating a sparse weight matrix. This can disrupt the original network structure, as shown in the fully connected network where certain connections have been randomly pruned. While this reduces the number of active parameters, the resulting sparsity requires specialized execution kernels to fully utilize computational benefits.

In contrast, structured pruning (depicted in the middle and right sections of the figure) removes entire neurons or filters while preserving the network’s overall structure. In the middle section, a pruned fully connected network retains its fully connected nature but with fewer neurons. On the right, structured pruning is applied to a CNN by removing convolutional kernels or entire channels (dashed squares). This method maintains the CNN’s core convolutional operations while reducing the computational load, making it more compatible with hardware accelerators.

A common approach to structured pruning is magnitude-based pruning, where entire neurons or filters are removed based on the magnitude of their associated weights. The intuition behind this method is that parameters with smaller magnitudes contribute less to the model’s output, making them prime candidates for elimination. The importance of a neuron or filter is often measured using a norm function, such as the \(\ell_1\)-norm or \(\ell_2\)-norm, applied to the weights associated with that unit. If the norm falls below a predefined threshold, the corresponding neuron or filter is pruned. This method is straightforward to implement and does not require additional computational overhead beyond computing norms across layers.
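As a minimal sketch of this criterion, the following example scores each filter by the \(\ell_1\)-norm of its weights and keeps only those at or above a threshold. The flattened filter representation, the threshold value, and the function names are assumptions made for illustration.

```python
def l1_norm(filter_weights):
    """Sum of absolute weight values for one filter (flattened)."""
    return sum(abs(w) for w in filter_weights)


def prune_filters_by_norm(filters, threshold):
    """Keep filters whose l1-norm is at least `threshold`; prune the rest."""
    kept = [i for i, f in enumerate(filters) if l1_norm(f) >= threshold]
    return kept, [filters[i] for i in kept]


# Hypothetical layer with four filters of three weights each.
filters = [
    [0.5, -0.4, 0.3],
    [0.01, -0.02, 0.01],
    [-0.6, 0.2, 0.1],
    [0.03, 0.0, -0.01],
]
kept_idx, pruned_filters = prune_filters_by_norm(filters, threshold=0.1)
print(kept_idx)  # [0, 2]
```

Unlike the element-wise mask used in unstructured pruning, the result here is a smaller dense layer: two whole filters are gone rather than scattered individual weights.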

Another strategy is activation-based pruning, which evaluates the average activation values of neurons or filters over a dataset. Neurons that consistently produce low activations contribute less information to the network’s decision process and can be safely removed. This method captures the dynamic behavior of the network rather than relying solely on static weight values. Activation-based pruning requires profiling the model over a representative dataset to estimate the average activation magnitudes before making pruning decisions.
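The profiling step can be sketched as follows. The example assumes that activations have already been recorded for a small calibration set and reduced to one value per channel per sample; the data and function names are hypothetical.

```python
def mean_abs_activation(activations):
    """activations[sample][channel] -> per-channel mean |activation|."""
    n_samples = len(activations)
    n_channels = len(activations[0])
    return [
        sum(abs(sample[c]) for sample in activations) / n_samples
        for c in range(n_channels)
    ]


def lowest_activation_channels(activations, num_to_prune):
    """Indices of the channels with the smallest average activation."""
    scores = mean_abs_activation(activations)
    order = sorted(range(len(scores)), key=lambda c: scores[c])
    return sorted(order[:num_to_prune])


# Hypothetical calibration data: three samples, four channels.
calibration_activations = [
    [0.9, 0.01, 0.4, 0.0],
    [1.1, 0.02, 0.3, 0.0],
    [0.8, 0.00, 0.5, 0.1],
]
print(lowest_activation_channels(calibration_activations, num_to_prune=2))  # [1, 3]
```

The quality of the ranking depends on how representative the calibration data is, which is the extra cost this method carries relative to weight-magnitude criteria.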

Gradient-based pruning uses information from the model’s training process to identify less significant neurons or filters. The key idea is that units with smaller gradient magnitudes contribute less to reducing the loss function, making them less important for learning. By ranking neurons based on their gradient values, structured pruning can remove those with the least impact on model optimization. Unlike magnitude-based or activation-based pruning, which rely on static properties of the trained model, gradient-based pruning requires access to gradient computations and is typically applied during training rather than as a post-processing step.
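To illustrate the ranking step, the sketch below uses a deliberately tiny model so that gradients can be written by hand: a single linear unit \(y = \sum_j w_j x_j\) with squared-error loss, for which \(\partial L / \partial w_j = (y - t)\,x_j\). The model, the data, and the use of mean absolute gradient as the score are assumptions for illustration; real networks would obtain gradients from automatic differentiation.

```python
def gradient_scores(weights, dataset):
    """Mean |dL/dw_j| over the dataset for a linear unit with
    squared-error loss L = 0.5 * (y - t) ** 2."""
    scores = [0.0] * len(weights)
    for x, t in dataset:
        y = sum(w * xi for w, xi in zip(weights, x))
        for j, xi in enumerate(x):
            scores[j] += abs((y - t) * xi)   # |gradient| for weight j
    return [s / len(dataset) for s in scores]


weights = [0.5, -0.3, 0.8]
dataset = [
    ([1.0, 0.0, 2.0], 1.0),
    ([0.5, 0.0, 1.0], 0.0),
    ([2.0, 0.1, 0.5], 2.0),
]
scores = gradient_scores(weights, dataset)
least_important = min(range(len(scores)), key=lambda j: scores[j])
print(least_important)  # 1
```

The second input is almost always zero in this toy dataset, so its weight receives almost no gradient and is ranked as the first candidate for removal.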

Each of these methods presents trade-offs in terms of computational complexity and effectiveness. Magnitude-based pruning is computationally inexpensive and easy to implement but does not account for how neurons behave across different data distributions. Activation-based pruning provides a more data-driven pruning approach but requires additional computations to estimate neuron importance. Gradient-based pruning leverages training dynamics but may introduce additional complexity if applied to large-scale models. The choice of method depends on the specific constraints of the target deployment environment and the performance requirements of the pruned model.

Dynamic Pruning

Traditional pruning methods, whether unstructured or structured, typically involve static pruning, where parameters are permanently removed after training or at fixed intervals during training. However, this approach assumes that the importance of parameters is fixed, which is not always the case. In contrast, dynamic pruning adapts pruning decisions based on the input data or training dynamics, allowing the model to adjust its structure in real time.

Dynamic pruning can be implemented using runtime sparsity techniques, where the model actively determines which parameters to utilize based on input characteristics. Activation-conditioned pruning exemplifies this approach by selectively deactivating neurons or channels that exhibit low activation values for specific inputs (J. Hu et al. 2023). This method introduces input-dependent sparsity patterns, effectively reducing the computational workload during inference without permanently modifying the model architecture.

For instance, consider a convolutional neural network processing images with varying complexity. During inference of a simple image containing mostly uniform regions, many convolutional filters may produce negligible activations. Dynamic pruning identifies these low-impact filters and temporarily excludes them from computation, improving efficiency while maintaining accuracy for the current input. This adaptive behavior is particularly advantageous in latency-sensitive applications, where computational resources must be allocated judiciously based on input complexity. Evaluating such input-dependent savings relies on the performance measurement strategies discussed in Chapter 12: Benchmarking AI.
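The input-dependent behavior can be sketched with a simple gate. This example assumes one summary activation per channel and a fixed threshold, and it gates only the downstream use of activations that have already been computed; practical systems typically rely on a cheap predictor to decide which channels to skip before computing them. All names and values are hypothetical.

```python
def dynamic_channel_gate(channel_activations, threshold):
    """Per-channel gates for one input: 1 = use downstream, 0 = skip."""
    return [1 if abs(a) >= threshold else 0 for a in channel_activations]


def gated_output(channel_activations, next_weights, threshold):
    """Weighted sum over active channels only, plus the active-channel count."""
    gates = dynamic_channel_gate(channel_activations, threshold)
    total = sum(
        a * w
        for a, w, g in zip(channel_activations, next_weights, gates)
        if g  # skipped channels cost no multiply
    )
    return total, sum(gates)


next_weights = [1.0, 1.0, 1.0, 1.0]
simple_image = [0.9, 0.01, 0.0, 0.02]   # mostly uniform input
complex_image = [0.9, 0.5, 0.7, 0.4]    # detailed input

_, active_simple = gated_output(simple_image, next_weights, threshold=0.05)
_, active_complex = gated_output(complex_image, next_weights, threshold=0.05)
print(active_simple, active_complex)  # 1 4
```

The same model spends one channel's worth of work on the simple input and four on the complex one, and no weights are permanently removed.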

Another class of dynamic pruning operates during training, where sparsity is gradually introduced and adjusted throughout the optimization process. Methods such as gradual magnitude pruning start with a dense network and progressively increase the fraction of pruned parameters as training progresses. Instead of permanently removing parameters, these approaches allow the network to recover from pruning-induced capacity loss by regrowing connections that prove to be important in later stages of training.
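One way to express the progressive increase in sparsity is a schedule that maps the training step to a target sparsity. The sketch below assumes a cubic ramp, a form commonly used for gradual magnitude pruning; the text above does not prescribe a particular schedule, so the function, its parameters, and the example values are illustrative. It covers only the sparsity ramp, not the regrowth of connections.

```python
def gradual_sparsity(step, start_step, end_step, initial_sparsity, final_sparsity):
    """Target sparsity at `step`, rising quickly at first and then flattening."""
    if step <= start_step:
        return initial_sparsity
    if step >= end_step:
        return final_sparsity
    progress = (step - start_step) / (end_step - start_step)
    return final_sparsity + (initial_sparsity - final_sparsity) * (1 - progress) ** 3


# Hypothetical schedule: ramp from 0% to 90% sparsity over 100 steps.
schedule = [gradual_sparsity(t, 0, 100, 0.0, 0.9) for t in (0, 25, 50, 75, 100)]
print([round(s, 4) for s in schedule])
```

Most of the pruning happens early, while the network still has many redundant weights, and the rate slows as the remaining weights become more important.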

Dynamic pruning presents several advantages over static pruning. It allows models to adapt to different workloads, potentially improving efficiency while maintaining accuracy. Unlike static pruning, which risks over-pruning and degrading performance, dynamic pruning provides a mechanism for selectively reactivating parameters when necessary. However, implementing dynamic pruning requires additional computational overhead, as pruning decisions must be made in real time, either during training or inference. This makes it more complex to integrate into standard machine learning pipelines than static pruning, and it calls for the production deployment strategies and monitoring frameworks covered in Chapter 13: ML Operations.

Despite its challenges, dynamic pruning is particularly useful in edge computing and adaptive AI systems (Chapter 14: On-Device Learning), where resource constraints and real-time efficiency requirements vary across different inputs. The next section explores the practical considerations and trade-offs involved in choosing the right pruning method for a given machine learning system.

Pruning Trade-offs

Pruning techniques offer different trade-offs in terms of memory efficiency, computational efficiency, accuracy retention, hardware compatibility, and implementation complexity. The choice of pruning strategy depends on the specific constraints of the machine learning system and the deployment environment, as well as on the operational considerations discussed in Chapter 13: ML Operations.

Unstructured pruning is particularly effective in reducing model size and memory footprint, as it removes individual weights while keeping the overall model architecture intact. However, since machine learning accelerators are optimized for dense matrix operations, unstructured pruning does not always translate to significant computational speed-ups unless specialized sparse execution kernels are used.

Structured pruning, in contrast, eliminates entire neurons, channels, or layers, leading to a more hardware-friendly model. This technique provides direct computational savings, as it reduces the number of floating-point operations (FLOPs)16 required during inference.

16 FLOPs (Floating-Point Operations): Computational complexity metric counting multiply-add operations. ResNet-50 requires approximately 3.8 billion FLOPs per inference (K. He et al. 2015), GPT-3 training required an estimated 3.14E23 FLOPs (Patterson et al. 2021). Modern GPUs achieve 100-300 TFLOPS (trillion FLOPs/second), making FLOP reduction important for efficiency.

The downside is that modifying the network structure can lead to a greater accuracy drop, requiring careful fine-tuning to recover lost performance.
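The computational savings from structured pruning can be estimated with simple arithmetic. The sketch below counts multiply-accumulate operations for a convolutional layer as output height × output width × input channels × output channels × kernel area, ignoring biases and activations. The layer sizes are hypothetical.

```python
def conv_macs(h_out, w_out, c_in, c_out, k):
    """Multiply-accumulate count for one k x k convolutional layer."""
    return h_out * w_out * c_in * c_out * k * k


# Hypothetical block: two 3x3 conv layers on 32x32 feature maps, 64 channels each.
baseline = conv_macs(32, 32, 64, 64, 3) + conv_macs(32, 32, 64, 64, 3)

# Prune 16 of the 64 channels produced by the first layer. The first layer
# has fewer filters, and the second layer has fewer input channels.
pruned = conv_macs(32, 32, 64, 48, 3) + conv_macs(32, 32, 48, 64, 3)

print(baseline, pruned, pruned / baseline)  # 75497472 56623104 0.75
```

Removing 25% of one layer's channels reduces the cost of both that layer and the layer that consumes its output, and the result is still a dense computation that standard hardware executes efficiently.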

Dynamic pruning introduces adaptability into the pruning process by adjusting which parameters are pruned at runtime based on input data or training dynamics. This allows for a better balance between accuracy and efficiency, as the model retains the flexibility to reintroduce previously pruned parameters if needed. However, dynamic pruning increases implementation complexity, as it requires additional computations to determine which parameters to prune on the fly.

Table 2 summarizes the key structural differences between these pruning approaches, outlining how each method modifies the model and impacts its execution.

| Aspect | Unstructured Pruning | Structured Pruning | Dynamic Pruning |
|---|---|---|---|
| What is removed? | Individual weights in the model | Entire neurons, channels, filters, or layers | Adjusts pruning based on runtime conditions |
| Model structure | Sparse weight matrices; original architecture remains unchanged | Model architecture is modified; pruned layers are fully removed | Structure adapts dynamically |
| Impact on memory | Reduces model storage by zeroing individual weights, leaving fewer nonzero values to store | Reduces model storage by removing entire components | Varies based on real-time pruning |
| Impact on computation | Limited; dense matrix operations still required unless specialized sparse computation is used | Directly reduces FLOPs and speeds up inference | Balances accuracy and efficiency dynamically |
| Hardware compatibility | Sparse weight matrices require specialized execution support for efficiency | Works efficiently with standard deep learning hardware | Requires adaptive inference engines |
| Fine-tuning required? | Often necessary to recover accuracy after pruning | More likely to require fine-tuning due to larger structural modifications | Adjusts dynamically, reducing the need for retraining |
| Use cases | Memory-efficient model compression, particularly for cloud deployment | Real-time inference optimization, mobile/edge AI, and efficient training | Adaptive AI applications, real-time systems |

Pruning Strategies

Beyond the broad categories of unstructured, structured, and dynamic pruning, different pruning workflows can impact model efficiency and accuracy retention. Two widely used pruning strategies are iterative pruning and one-shot pruning, each with its own benefits and trade-offs.

Iterative Pruning

Iterative pruning implements a gradual approach to structure removal through multiple cycles of pruning followed by fine-tuning. During each cycle, the algorithm removes a small subset of structures based on predefined importance metrics. The model then undergoes fine-tuning to adapt to these structural modifications before proceeding to the next pruning iteration. This methodical approach helps prevent sudden drops in accuracy while allowing the network to progressively adjust to reduced complexity.

To illustrate this process, consider pruning six channels from a convolutional neural network as shown in Figure 5. Rather than removing all channels simultaneously, iterative pruning eliminates two channels per iteration over three cycles. Following each pruning step, the model undergoes fine-tuning to recover performance. The first iteration, which removes two channels, results in an accuracy decrease from 0.995 to 0.971, but subsequent fine-tuning restores accuracy to 0.992. After completing two additional pruning-tuning cycles, the final model achieves 0.991 accuracy, which represents only a 0.4% reduction from the original, while operating with 27% fewer channels. By distributing structural modifications across multiple iterations, the network maintains its performance capabilities while achieving improved computational efficiency.
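The prune-then-fine-tune cycle can be sketched as a loop. The example assumes a layer with 22 channels, which is consistent with six removed channels being roughly 27% of the total but is not stated in the text. The importance scores and the `fine_tune_stub` placeholder are hypothetical; a real workflow would retrain the model at that point.

```python
def iterative_prune(channel_scores, per_cycle, cycles, fine_tune):
    """Remove the `per_cycle` least important channels in each cycle,
    fine-tuning after every pruning step."""
    channels = dict(enumerate(channel_scores))   # channel id -> importance
    history = []
    for _ in range(cycles):
        victims = sorted(channels, key=channels.get)[:per_cycle]
        for c in victims:
            del channels[c]
        channels = fine_tune(channels)           # recover accuracy before the next cut
        history.append(len(channels))
    return channels, history


def fine_tune_stub(channels):
    """Placeholder for retraining; returns the channels unchanged."""
    return channels


scores = [0.05 * (i + 1) for i in range(22)]     # hypothetical importance scores
remaining, history = iterative_prune(scores, per_cycle=2, cycles=3,
                                     fine_tune=fine_tune_stub)
print(history)  # [20, 18, 16]
```

Setting `per_cycle=6` and `cycles=1` in the same loop yields a single large pruning step followed by one fine-tuning phase, which makes the cost difference between the two schedules explicit: three fine-tuning runs versus one.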

One-shot Pruning

One-shot pruning removes multiple architectural components in a single step, followed by an extensive fine-tuning phase to recover model accuracy. This aggressive approach compresses the model quickly but risks greater accuracy degradation, as the network must adapt to significant structural changes simultaneously.

Consider applying one-shot pruning to the same network from the iterative pruning example. Instead of removing two channels at a time over multiple iterations, one-shot pruning eliminates all six channels simultaneously, as illustrated in Figure 6. Removing 27% of the network’s channels simultaneously causes the accuracy to drop significantly, from 0.995 to 0.914. Even after fine-tuning, the network only recovers to an accuracy of 0.943, which is a 5% degradation from the original unpruned network. While both iterative and one-shot pruning ultimately produce identical network structures, the gradual approach of iterative pruning better preserves model performance.

The choice of pruning strategy requires careful consideration of several key factors that influence both model efficiency and performance. The desired level of parameter reduction, or sparsity target, directly impacts strategy selection. Higher reduction targets often necessitate iterative approaches to maintain accuracy, while moderate sparsity goals may be achievable through simpler one-shot methods.

Available computational resources significantly influence strategy choice. Iterative pruning demands significant resources for multiple fine-tuning cycles, whereas one-shot approaches require fewer resources but may sacrifice accuracy. This resource consideration connects to performance requirements, where applications with strict accuracy requirements typically benefit from gradual, iterative pruning to carefully preserve model capabilities. Use cases with more flexible performance constraints may accommodate more aggressive one-shot approaches.

Development timeline also impacts pruning decisions. One-shot methods enable faster deployment when time is limited, though iterative approaches generally achieve superior results given sufficient optimization periods. Finally, target platform capabilities significantly influence strategy selection, as certain hardware architectures may better support specific sparsity patterns, making particular pruning approaches more advantageous for deployment.

The choice between pruning strategies requires careful evaluation of project requirements and constraints. One-shot pruning enables rapid model compression by removing multiple parameters simultaneously, making it suitable for scenarios where deployment speed is prioritized over accuracy. However, this aggressive approach often results in greater performance degradation compared to more gradual methods. Iterative pruning, on the other hand, while computationally intensive and time-consuming, typically achieves superior accuracy retention through structured parameter reduction across multiple cycles. This methodical approach enables the network to adapt progressively to structural modifications, preserving important connections that maintain model performance. The trade-off is increased optimization time and computational overhead. By evaluating these factors systematically, practitioners can select a pruning approach that optimally balances efficiency gains with model performance for their specific use case.

Lottery Ticket Hypothesis

Pruning is widely used to reduce the size and computational cost of neural networks, but the process of determining which parameters to remove is not always straightforward. While traditional pruning methods eliminate weights based on magnitude, structure, or dynamic conditions, recent research suggests that pruning is not just about reducing redundancy; it may also reveal inherently efficient subnetworks that exist within the original model.

This perspective leads to the Lottery Ticket Hypothesis17 (LTH), which challenges conventional pruning workflows by proposing that within large neural networks, there exist small, well-initialized subnetworks, referred to as ‘winning tickets’, that can achieve comparable accuracy to the full model when trained in isolation. Rather than viewing pruning as just a post-training compression step, LTH suggests it can serve as a discovery mechanism to identify these efficient subnetworks early in training.

17 Lottery Ticket Hypothesis: The lottery ticket hypothesis (Frankle and Carbin 2018) demonstrates that ResNet-18 subnetworks at 10-20% original size achieve 93.2% accuracy vs. 94.1% for full model on CIFAR-10. BERT-base winning tickets retain 97% performance with 90% fewer parameters, requiring 5-8x less training time to converge.

LTH is validated through an iterative pruning process, illustrated in Figure 7. A large network is first trained to convergence. The lowest-magnitude weights are then pruned, and the remaining weights are reset to their original initialization rather than being re-randomized. This process is repeated iteratively, gradually reducing the network’s size while preserving performance. After multiple iterations, the remaining subnetwork, referred to as the ‘winning ticket’, proves capable of training to the same or higher accuracy as the original full model.
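The procedure can be outlined in code. The sketch below is an illustration under simplifying assumptions: weights are a flat list, `toy_train` is a hypothetical stand-in for training to convergence, and a fixed fraction of the surviving weights is pruned in each round. The essential detail is that every round restarts from the original initialization with the current mask applied.

```python
def find_winning_ticket(init_weights, train, rounds, prune_fraction):
    """Iterative magnitude pruning with weights reset to initialization."""
    mask = [1] * len(init_weights)
    for _ in range(rounds):
        # 1. Train the masked network starting from the ORIGINAL initialization.
        start = [w * m for w, m in zip(init_weights, mask)]
        trained = train(start, mask)
        # 2. Prune the lowest-magnitude weights among those still alive.
        alive = [i for i, m in enumerate(mask) if m]
        n_prune = int(prune_fraction * len(alive))
        for i in sorted(alive, key=lambda j: abs(trained[j]))[:n_prune]:
            mask[i] = 0
        # 3. The next round resets survivors to init_weights (step 1 above).
    ticket = [w * m for w, m in zip(init_weights, mask)]
    return ticket, mask


def toy_train(weights, mask):
    """Hypothetical stand-in for training: scales weights, keeps pruned ones at zero."""
    return [2.0 * w * m for w, m in zip(weights, mask)]


init = [0.5, -0.1, 0.3, 0.05, -0.8, 0.2, -0.4, 0.02]
ticket, mask = find_winning_ticket(init, toy_train, rounds=2, prune_fraction=0.5)
print(mask)  # [1, 0, 0, 0, 1, 0, 0, 0]
```

The surviving entries of `ticket` hold their original initial values rather than trained or re-randomized ones, which is the property the hypothesis identifies as essential.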

The implications of the Lottery Ticket Hypothesis extend beyond conventional pruning techniques. Instead of training large models and pruning them later, LTH suggests that compact, high-performing subnetworks could be trained directly from the start, eliminating the need for overparameterization. This insight challenges the traditional assumption that model size is necessary for effective learning. It also emphasizes the importance of initialization, as winning tickets only retain their performance when reset to their original weight values. This finding raises deeper questions about the role of initialization in shaping a network’s learning trajectory.

The hypothesis further reinforces the effectiveness of iterative pruning over one-shot pruning. Gradually refining the model structure allows the network to adapt at each stage, preserving accuracy more effectively than removing large portions of the model in a single step. This process aligns well with practical pruning strategies used in deployment, where preserving accuracy while reducing computation is important.

Despite its promise, applying LTH in practice remains computationally expensive, as identifying winning tickets requires multiple cycles of pruning and retraining. Ongoing research explores whether winning subnetworks can be detected early without full training, potentially leading to more efficient sparse training techniques. If such methods become practical, LTH could fundamentally reshape how machine learning models are trained, shifting the focus from pruning large networks after training to discovering and training only the important components from the beginning.

While LTH presents a compelling theoretical perspective on pruning, practical implementations rely on established framework-level tools to integrate structured and unstructured pruning techniques.

Pruning Practice

Several machine learning frameworks provide built-in tools to apply structured and unstructured pruning, fine-tune pruned models, and optimize deployment for cloud, edge, and mobile environments.

PyTorch and TensorFlow offer dedicated pruning utilities, while ONNX provides a common representation for exporting pruned models, allowing practitioners to implement these techniques efficiently while ensuring compatibility with deployment hardware.

In PyTorch, pruning is available through the torch.nn.utils.prune module, which provides functions to apply magnitude-based pruning to individual layers or the entire model. Users can perform unstructured pruning by setting a fraction of the smallest-magnitude weights to zero or apply structured pruning to remove entire neurons or filters. PyTorch also allows for custom pruning strategies, where users define pruning criteria beyond weight magnitude, such as activation-based or gradient-based pruning. Once a model is pruned, it can be fine-tuned to recover lost accuracy before being exported for inference.

TensorFlow provides pruning support through the TensorFlow Model Optimization Toolkit (TF-MOT). This toolkit integrates pruning directly into the training process by masking low-magnitude weights according to a sparsity schedule. TensorFlow’s pruning API supports global and layer-wise pruning, dynamically selecting parameters for removal based on weight magnitudes. Unlike PyTorch, TensorFlow’s pruning is typically applied during training, allowing models to learn sparse representations as they train rather than being pruned post-training. TF-MOT also provides export tools to convert pruned models into TFLite format, making them compatible with mobile and edge devices.

ONNX18, an open standard for model representation, does not implement pruning directly but provides export and compatibility support for pruned models from PyTorch and TensorFlow. Since ONNX is designed to be hardware-agnostic, it allows models that have undergone pruning in different frameworks to be optimized for inference engines such as TensorRT19, OpenVINO, and EdgeTPU. These inference engines can further leverage structured and dynamic pruning for execution efficiency, particularly on specialized hardware accelerators.

18 ONNX Deployment: ONNX Runtime achieves 1.3-2.9x speedup over TensorFlow and 1.1-1.7x over PyTorch across various models on CPU platforms. ResNet-50 inference drops from 7.2ms to 2.8ms on CPU, while BERT-Base reduces from 45ms to 23ms with ONNX Runtime optimizations including graph fusion and memory pooling.

19 TensorRT Optimization: NVIDIA TensorRT delivers up to 40x speedup for inference compared to CPU baselines, with typical GPU optimization improvements of 5-8x on V100. ResNet-50 INT8 inference achieves 1.2ms vs. 4.8ms FP32, while BERT-Large drops from 10.4ms to 2.1ms. Layer fusion reduces kernel launches by 80%, memory bandwidth by 50%.

Although framework-level support for pruning has advanced significantly, applying pruning in practice requires careful consideration of hardware compatibility and software optimizations. Standard CPUs and GPUs often do not natively accelerate sparse matrix operations, meaning that unstructured pruning may reduce memory usage without providing significant computational speed-ups. In contrast, structured pruning is more widely supported in inference engines, as it directly reduces the number of computations needed during execution. Dynamic pruning, when properly integrated with inference engines, can optimize execution based on workload variations and hardware constraints, making it particularly beneficial for adaptive AI applications.

At a practical level, choosing the right pruning strategy depends on several key trade-offs, including memory efficiency, computational performance, accuracy retention, and implementation complexity. These trade-offs impact how pruning methods are applied in real-world machine learning workflows, influencing deployment choices based on resource constraints and system requirements.

To help guide these decisions, Table 3 provides a high-level comparison of these trade-offs, summarizing the key efficiency and usability factors that practitioners must consider when selecting a pruning method.

These trade-offs underscore the importance of aligning pruning methods with practical deployment needs. Frameworks such as PyTorch, TensorFlow, and ONNX enable developers to implement these strategies, but the effectiveness of a pruning approach depends on the underlying hardware and application requirements.

| Criterion | Unstructured Pruning | Structured Pruning | Dynamic Pruning |
|---|---|---|---|
| Memory Efficiency | ↑↑ High | ↑ Moderate | ↑ Moderate |
| Computational Efficiency | → Neutral | ↑↑ High | ↑ High |
| Accuracy Retention | ↑ Moderate | ↓↓ Low | ↑↑ High |
| Hardware Compatibility | ↓ Low | ↑↑ High | → Neutral |
| Implementation Complexity | → Neutral | ↑ Moderate | ↓↓ High |

For example, structured pruning is commonly used in mobile and edge applications because of its compatibility with standard inference engines, whereas dynamic pruning is better suited for adaptive AI workloads that need to adjust sparsity levels on the fly. Unstructured pruning, while useful for reducing memory footprints, requires specialized sparse execution kernels to fully realize computational savings.

Understanding these trade-offs is important when deploying pruned models in real-world settings. Several high-profile models have successfully integrated pruning to optimize performance. MobileNet, a lightweight convolutional neural network designed for mobile and embedded applications, has been pruned to reduce inference latency while preserving accuracy (Howard et al. 2017). BERT20, a widely used transformer model for natural language processing, has undergone structured pruning of attention heads and intermediate layers to create efficient versions such as DistilBERT21 and TinyBERT, which retain much of the original performance while reducing computational overhead (Sanh et al. 2019). In computer vision, EfficientNet22 has been pruned to remove unnecessary filters, optimizing it for deployment in resource-constrained environments (Tan and Le 2019a).

20 BERT Compression: BERT-Base (110M params) can be compressed to 67M params (39% reduction) with only 1.2% GLUE score drop. Attention head pruning removes 144 of 192 heads with minimal impact, while layer pruning reduces 12 layers to 6 layers maintaining 97.8% performance.

21 DistilBERT: Achieves 97% of BERT-Base performance with 40% fewer parameters (66M vs. 110M) and 60% faster inference. On SQuAD v1.1, DistilBERT scores 86.9 F1 vs. BERT’s 88.5 F1, while reducing memory from 1.35GB to 0.54GB and latency from 85ms to 34ms.

22 EfficientNet Pruning: EfficientNet-B0 with 70% structured pruning maintains 75.8% ImageNet accuracy (vs. 77.1% original) with 2.8x speedup on mobile devices. Channel pruning reduces FLOPs from 390M to 140M while keeping inference under 20ms on Pixel 4.

Knowledge Distillation

Imagine a world-class professor (the teacher model) who has read thousands of books and has a deep, nuanced understanding of a subject. Now, imagine a bright student (the student model) who needs to learn the subject quickly. Instead of just giving the student the textbook answers (the hard labels), the professor provides rich explanations, pointing out why one answer is better than another and how different concepts relate (the soft labels). The student learns much more effectively from this rich guidance than from the textbook alone. This is the essence of knowledge distillation.

Knowledge distillation trains a smaller student model using guidance from a larger pre-trained teacher, learning from the teacher’s rich output distributions rather than simple correct/incorrect labels. This distinction matters because teacher models provide richer learning signals than ground-truth labels. Consider image classification: a ground-truth label might say “this is a dog” (one-hot encoding: [0, 1, 0, 0, …]). But a trained teacher model might output [0.02, 0.85, 0.08, 0.05, …], revealing that while “dog” is most likely, the image shares some features with “wolf” (0.08) and “fox” (0.05). This inter-class similarity information helps the student learn feature relationships that hard labels cannot convey.

Knowledge distillation differs from pruning. While pruning removes parameters from an existing model, distillation trains a separate, smaller architecture using guidance from a larger pre-trained teacher (Gou et al. 2021). The student model optimizes to match the teacher’s soft predictions (probability distributions over classes) rather than simply learning from labeled data (Jiong Lin et al. 2020).

Figure 8 illustrates the distillation process. The teacher model produces probability distributions using a softened softmax function with temperature \(T\), and the student model trains using both these soft targets and ground truth labels.

The training process for the student model incorporates two loss terms:

- Distillation loss: A loss function (often based on Kullback-Leibler (KL) divergence23) that minimizes the difference between the student’s and teacher’s soft label distributions.

- Student loss: A standard cross-entropy loss that ensures the student model correctly classifies the hard labels.

23 Kullback-Leibler (KL) Divergence: Information-theoretic measure quantifying difference between probability distributions, introduced by Kullback & Leibler (Kullback and Leibler 1951). In knowledge distillation, typical KL divergence values range 0.1-2.0 nats; values >3.0 indicate poor teacher-student alignment requiring temperature adjustment or architecture modification.

The combination of these two loss functions enables the student model to absorb both structured knowledge from the teacher and label supervision from the dataset. This approach allows smaller models to reach accuracy levels close to their larger teacher models, making knowledge distillation a key technique for model compression and efficient deployment.
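The two loss terms can be combined as in the following sketch. The weighting factor `alpha`, the default temperature, and the function names are assumptions made for illustration. Multiplying the distillation term by \(T^2\) follows the common practice of keeping its gradient scale comparable to that of the hard-label term.

```python
import math


def softmax(logits, T=1.0):
    """Softmax over logits scaled by temperature T (numerically stabilized)."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label, T=4.0, alpha=0.5):
    """alpha * distillation loss + (1 - alpha) * student (cross-entropy) loss."""
    soft_teacher = softmax(teacher_logits, T)
    soft_student = softmax(student_logits, T)
    distill = kl_divergence(soft_teacher, soft_student) * T * T
    student = -math.log(softmax(student_logits)[label])   # hard-label cross-entropy
    return alpha * distill + (1 - alpha) * student


teacher_logits = [0.5, 4.0, 1.5]
student_logits = [1.0, 3.0, 0.5]
loss = distillation_loss(student_logits, teacher_logits, label=1)
print(round(loss, 4))
```

Setting `alpha=0` recovers ordinary supervised training on hard labels, while `alpha=1` trains the student purely to imitate the teacher.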

Knowledge distillation allows smaller models to reach a level of accuracy that would be difficult to achieve through standard training alone. This makes it particularly useful in ML systems where inference efficiency is a priority, such as real-time applications, cloud-to-edge model compression, and low-power AI systems (Sun et al. 2019).

Distillation Theory

Knowledge distillation is based on the idea that a well-trained teacher model encodes more information about the data distribution than just the correct class labels. In conventional supervised learning, a model is trained to minimize the cross-entropy loss24 between its predictions and the ground truth labels. However, this approach only provides a hard decision boundary for each class, discarding potentially useful information about how the model relates different classes to one another (Hinton, Vinyals, and Dean 2015).

24 Cross-Entropy Loss: Standard loss function for classification tasks measuring difference between predicted and true probability distributions. Ranges 0-∞ (lower is better). Uniform random guessing over \(K\) classes gives a loss of \(\ln K\): about 0.69 for binary classification and about 2.3 for 10 classes, so values above \(\ln K\) indicate performance worse than random guessing. Computed as -log(predicted probability for true class).

Knowledge distillation addresses this limitation by transferring additional information through the soft probability distributions produced by the teacher model. Instead of training the student model to match only the correct label, it is trained to match the teacher’s full probability distribution over all possible classes. This is achieved by introducing a temperature-scaled softmax function, which smooths the probability distribution, making it easier for the student model to learn from the teacher’s outputs (Gou et al. 2021).

Distillation Mathematics

To formalize this temperature-based approach, let \(z_i\) be the logits (pre-softmax outputs) of the model for class \(i\). The standard softmax function computes class probabilities as: \[ p_i = \frac{\exp(z_i)}{\sum_j \exp(z_j)} \] where higher logits correspond to higher confidence in a class prediction.

In knowledge distillation, we introduce a temperature parameter25 \(T\) that scales the logits before applying softmax: \[ p_i(T) = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)} \] where a higher temperature produces a softer probability distribution, revealing more information about how the model distributes uncertainty across different classes.

25 Temperature Parameter: Controls softness of probability distributions in knowledge distillation. T=1 gives standard softmax, T=3-5 typical for distillation (revealing inter-class relationships), T=20+ creates nearly uniform distributions. Suitable temperatures vary by task and are tuned per teacher-student pair; example settings: T=3 for CIFAR-10, T=4 for ImageNet, T=6 for language models like BERT.
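The effect of temperature on the softmax output can be sketched in a few lines of plain Python. This is a minimal illustration, not a library implementation: the function name `softmax_with_temperature` and the example logits are assumptions chosen for the demonstration.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Return softmax(logits / temperature) as a list of probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtracting the maximum before exponentiating avoids overflow
    # and leaves the resulting probabilities unchanged.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three classes (illustrative values only).
logits = [5.0, 2.0, 0.5]
for T in (1.0, 4.0, 20.0):
    probs = softmax_with_temperature(logits, T)
    print(f"T={T:>4}: " + ", ".join(f"{p:.3f}" for p in probs))
```

As \(T\) grows, probability mass moves from the top class toward the others while the ranking of classes stays the same, which is what exposes the inter-class structure the student learns from.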

The student model is then trained using a loss function that minimizes the difference between its output distribution and the teacher’s softened output distribution. The most common formulation combines two loss terms: \[ \mathcal{L}_{\text{distill}} = (1 - \alpha) \mathcal{L}_{\text{CE}}(y_s, y) + \alpha T^2 \sum_i p_i^T \log \frac{p_i^T}{p_{i, s}^T} \] Here the superscript \(T\) marks probabilities computed with the temperature-scaled softmax (it is not an exponent), and:

- \(\mathcal{L}_{\text{CE}}(y_s, y)\) is the standard cross-entropy loss between the student’s predictions \(y_s\) and the ground truth labels \(y\).

- The second term minimizes the Kullback-Leibler (KL) divergence between the teacher’s softened predictions \(p_i^T\) and the student’s predictions \(p_{i, s}^T\).

- The factor \(T^2\) compensates for the fact that gradients of the soft-target term shrink roughly as \(1/T^2\), so the two loss terms stay comparably weighted when high-temperature values are used.

- The hyperparameter \(\alpha\) balances the importance of the standard training loss versus the distillation loss.
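The role of the \(T^2\) factor can be made concrete with a short derivation, following the argument in Hinton, Vinyals, and Dean (2015). The symbols \(z_{i,s}\) and \(z_{i,t}\) for the student and teacher logits, and \(K\) for the number of classes, are introduced here for illustration only. Differentiating the soft-target term with respect to a student logit gives \[ \frac{\partial}{\partial z_{i,s}} \sum_j p_j^T \log \frac{p_j^T}{p_{j,s}^T} = \frac{1}{T}\left(p_{i,s}^T - p_i^T\right). \] When \(T\) is large compared with the logits, and assuming the logits of each example are zero-mean, \(\exp(z/T) \approx 1 + z/T\), so \(p_{i,s}^T \approx (1 + z_{i,s}/T)/K\) and likewise for the teacher. Substituting yields \[ \frac{\partial}{\partial z_{i,s}} \sum_j p_j^T \log \frac{p_j^T}{p_{j,s}^T} \approx \frac{1}{K T^2}\left(z_{i,s} - z_{i,t}\right). \] Under these assumptions the soft-target gradient shrinks roughly as \(1/T^2\), and multiplying by \(T^2\) keeps its magnitude comparable to that of the hard-label term as the temperature changes.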

By learning from both hard labels and soft teacher outputs, the student model benefits from the generalization power of the teacher, improving its ability to distinguish between similar classes even with fewer parameters.
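The two-term objective can be sketched for a single training example as follows, with the soft term written as a KL divergence between teacher and student at temperature \(T\). This is a minimal sketch in plain Python rather than a framework implementation; the names `log_softmax` and `distillation_loss`, the default values of `temperature` and `alpha`, and the example logits are all assumptions made for illustration.

```python
import math

def log_softmax(logits, temperature=1.0):
    """Log-probabilities of softmax(logits / temperature), computed stably."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_norm = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_norm for s in scaled]

def distillation_loss(student_logits, teacher_logits, true_class,
                      temperature=4.0, alpha=0.5):
    """(1 - alpha) * CE(student, label) + alpha * T^2 * KL(teacher || student)."""
    # Hard-label term: cross-entropy of the student at T = 1.
    ce = -log_softmax(student_logits)[true_class]
    # Soft term: KL divergence between temperature-softened distributions.
    log_pt = log_softmax(teacher_logits, temperature)
    log_ps = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_pt, log_ps))
    return (1 - alpha) * ce + alpha * temperature ** 2 * kl

# Hypothetical logits for a three-class example whose true class is index 0.
teacher = [4.0, 2.0, 0.0]
close_student = [3.5, 1.8, 0.2]   # roughly agrees with the teacher
far_student = [0.0, 2.0, 4.0]     # ranks the classes in reverse order

print("close student loss:", distillation_loss(close_student, teacher, 0))
print("far student loss:  ", distillation_loss(far_student, teacher, 0))
```

In a real training loop the same quantity would be averaged over a batch and differentiated by the framework, with the teacher's logits treated as constants.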

Distillation Intuition

Unlike conventional training, where a model learns only from binary correctness signals, knowledge distillation allows the student to absorb a richer understanding of the data distribution from the teacher’s predictions.

A key advantage of soft targets is that they provide relative confidence levels rather than just a single correct answer. Consider an image classification task where the goal is to distinguish between different animal species. A standard model trained with hard labels will only receive feedback on whether its prediction is right or wrong. If an image contains a cat, the correct label is “cat,” and all other categories, such as “dog” and “fox,” are treated as equally incorrect. However, a well-trained teacher model naturally understands that a cat is more visually similar to a dog than to a fox, and its soft output probabilities might look like Figure 9, where the relative confidence levels indicate that while “cat” is the most likely category, “dog” is still a plausible alternative, whereas “fox” is much less likely.

Rather than simply forcing the student model to classify the image strictly as a cat, the teacher model provides a more nuanced learning signal, indicating that while “dog” is incorrect, it is a more reasonable mistake than “fox.” This subtle information helps the student model build better decision boundaries between similar classes, making it more robust to ambiguity in real-world data.
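The difference between the two learning signals can be illustrated numerically. In the sketch below, all probabilities are hypothetical values chosen for the cat/dog/fox example and are not taken from the figure. Two students assign the same probability to "cat" but place their remaining mass differently.

```python
import math

def cross_entropy(target, predicted):
    """Cross-entropy between a target distribution and a predicted one."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)

classes = ["cat", "dog", "fox"]
hard_label = [1.0, 0.0, 0.0]        # one-hot label for "cat"
teacher_soft = [0.80, 0.15, 0.05]   # hypothetical teacher soft targets

student_a = [0.60, 0.30, 0.10]      # leftover mass mostly on "dog"
student_b = [0.60, 0.10, 0.30]      # leftover mass mostly on "fox"

for name, pred in (("student A", student_a), ("student B", student_b)):
    hard = cross_entropy(hard_label, pred)
    soft = cross_entropy(teacher_soft, pred)
    print(f"{name}: hard-label loss = {hard:.4f}, soft-target loss = {soft:.4f}")
```

The hard-label loss cannot tell the two students apart, because it only looks at the probability of "cat". The soft-target loss is lower for the student that treats "dog" as the more plausible alternative, which is the extra signal the teacher provides.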

This effect is particularly useful in cases where training data is limited or noisy. A large teacher model trained on extensive data has already learned to generalize well, capturing patterns that might be difficult to discover with smaller datasets. The student benefits by inheriting this structured knowledge, acting as if it had access to a larger training signal than what is explicitly available.

Another key benefit of knowledge distillation is its regularization effect. Because soft targets distribute probability mass across multiple classes, they discourage the student model from overfitting to specific hard labels. Instead of confidently assigning a probability of 1.0 to the correct class and 0.0 to all others, the student learns to make more calibrated predictions, which improves generalization and can reduce sensitivity to adversarial inputs. This is especially important when the student model has fewer parameters, as smaller networks are more prone to overfitting.

Finally, distillation helps compress large models into smaller, more efficient versions without major performance loss. This compression capability directly supports the sustainable AI practices discussed in Chapter 18: Sustainable AI by reducing the environmental impact of model deployment while maintaining performance standards. Training a small model from scratch often results in lower accuracy because the model lacks the capacity to learn the complex representations that a larger network can capture. However, by using the knowledge of a well-trained teacher, the student can reach a higher accuracy than it would have on its own, making it a more practical choice for real-world ML deployments, particularly in edge computing, mobile applications, and other resource-constrained environments explored in Chapter 14: On-Device Learning.

Efficiency Gains

Knowledge distillation’s efficiency benefits span three key areas: memory efficiency, computational efficiency, and deployment flexibility. Unlike pruning, which modifies trained models, distillation trains compact models from the start using teacher guidance, enabling accuracy levels difficult to achieve through standard training alone (Sanh et al. 2019). These gains can be quantified with the structured evaluation approaches in Chapter 12: Benchmarking AI.

Memory and Model Compression

A key advantage of knowledge distillation is that it enables smaller models to retain much of the predictive power of larger models, significantly reducing memory footprint. This is particularly useful in resource-constrained environments such as mobile and embedded AI systems, where model size directly impacts storage requirements and load times.

For instance, models such as DistilBERT (Sanh et al. 2019) in NLP and MobileNet distillation variants (Howard et al. 2017) in computer vision have been shown to retain most of the accuracy of their larger teacher models with substantially fewer parameters. DistilBERT, for example, retains about 97% of BERT’s language-understanding performance with roughly 40% fewer parameters. This level of compression can compare favorably with pruning, where aggressive parameter reduction can lead to deterioration in representational power.
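As a rough illustration of the memory arithmetic, the sketch below estimates weight storage from parameter counts. The counts used (about 110 million for BERT-base and about 66 million for DistilBERT) are approximate published figures. The helper name `model_size_mb` and the assumption of 4 bytes per parameter (FP32 weights, ignoring activations and runtime overhead) are illustrative choices.

```python
def model_size_mb(num_params, bytes_per_param=4):
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6

# Approximate parameter counts (assumed for illustration).
teacher_params = 110_000_000   # BERT-base, roughly
student_params = 66_000_000    # DistilBERT, roughly

teacher_mb = model_size_mb(teacher_params)
student_mb = model_size_mb(student_params)
reduction = 1 - student_params / teacher_params

print(f"teacher weights: {teacher_mb:.0f} MB")
print(f"student weights: {student_mb:.0f} MB")
print(f"parameter reduction: {reduction:.0%}")
```

Changing `bytes_per_param` to 2 or 1 gives a first estimate of how the same student would shrink further under 16-bit or 8-bit weight storage.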

Another key benefit of knowledge distillation is its ability to transfer robustness and generalization from the teacher to the student. Large models are often trained with extensive datasets and develop strong generalization capabilities, meaning they are less sensitive to noise and data shifts. A well-trained student model inherits these properties, making it less prone to overfitting and more stable across diverse deployment conditions. This is particularly useful in low-data regimes, where training a small model from scratch may result in poor generalization due to insufficient training examples.

Computation and Inference Speed

By training the student model to approximate the teacher’s knowledge in a more compact representation, distillation results in models that require fewer FLOPs per inference, leading to faster execution times. Unlike unstructured pruning, which may require specialized hardware support for sparse computation, a distilled model remains densely structured, making it more compatible with existing machine learning accelerators such as GPUs, TPUs, and edge AI chips (Jiao et al. 2020).
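The FLOP savings of a smaller dense student can be estimated with simple arithmetic. The sketch below counts roughly two FLOPs (one multiply and one add) per weight in each fully connected layer and ignores biases and activations. The layer sizes are hypothetical, chosen only to illustrate the calculation.

```python
def dense_flops(layer_sizes):
    """Approximate FLOPs for one forward pass through a stack of dense layers.

    Each layer with n_in inputs and n_out outputs costs about
    2 * n_in * n_out FLOPs (multiply-accumulate counted as two operations).
    """
    return sum(2 * n_in * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical multilayer perceptrons: input 784, output 10 classes.
teacher_layers = [784, 1024, 1024, 10]
student_layers = [784, 256, 10]

teacher_flops = dense_flops(teacher_layers)
student_flops = dense_flops(student_layers)

print(f"teacher: {teacher_flops:,} FLOPs per inference")
print(f"student: {student_flops:,} FLOPs per inference")
print(f"ratio:   {teacher_flops / student_flops:.1f}x")
```

Because both networks are dense, this reduction translates directly into fewer operations on standard accelerators, without relying on sparse kernels.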

In real-world deployments, this translates to:

- Reduced inference latency, which is important for real-time AI applications such as speech recognition, recommendation systems, and self-driving perception models.

- Lower energy consumption, making distillation particularly relevant for low-power AI on mobile devices and IoT systems.

- Higher throughput in cloud inference, where serving a distilled model allows large-scale AI applications to reduce computational cost while maintaining model quality.

For example, when deploying transformer models for NLP, organizations often use teacher-student distillation to create models that achieve similar accuracy at 2-4\(\times\) lower latency, making it feasible to serve billions of requests per day with significantly lower computational overhead.

Deployment and System Considerations

Knowledge distillation is also effective in multi-task learning scenarios, where a single teacher model can guide multiple student models for different tasks. For example, in multi-lingual NLP models, a large teacher trained on multiple languages can transfer language-specific knowledge to smaller, task-specific student models, enabling efficient deployment across different languages without retraining from scratch. Similarly, in computer vision, a teacher trained on diverse object categories can distill knowledge into specialized students optimized for tasks such as face recognition, medical imaging, or autonomous driving.

Once a student model is distilled, it can be further optimized for hardware-specific acceleration using techniques such as pruning, quantization, and graph optimization. This ensures that compressed models remain inference-efficient across multiple hardware environments, particularly in edge AI and mobile deployments (Gordon, Duh, and Andrews 2020).

Despite its advantages, knowledge distillation has some limitations. The effectiveness of distillation depends on the quality of the teacher model: a poorly trained teacher may transfer incorrect biases to the student. Distillation also introduces an additional training phase in which both the teacher and student must be used together, increasing computational costs during training. In some cases, designing an appropriate student model architecture that can fully benefit from the teacher’s knowledge remains a challenge, as overly small student models may not have enough capacity to absorb all the relevant information.

Trade-offs

Compared to pruning, knowledge distillation typically preserves accuracy better but adds training complexity, because it trains a new model rather than modifying an existing one. Pruning, in contrast, provides a more direct computational efficiency gain, especially when structured pruning is used. In practice, combining the two often yields the best trade-off: pruning removes unnecessary parameters, and distillation from the original model helps the compressed student recover accuracy. Table 4 summarizes the key trade-offs between knowledge distillation and pruning.

| Criterion | Knowledge Distillation | Pruning |
|---|---|---|
| Accuracy retention | High – Student learns from teacher, better generalization | Varies – Can degrade accuracy if over-pruned |
| Training cost | Higher – Requires training both teacher and student | Lower – Only fine-tuning needed |
| Inference speed | High – Produces dense, optimized models | Depends – Structured pruning is efficient, unstructured needs special support |
| Hardware compatibility | High – Works on standard accelerators | Limited – Sparse models may need specialized execution |
| Ease of implementation | Complex – Requires designing a teacher-student pipeline | Simple – Applied post-training |

Knowledge distillation remains an important technique in ML systems optimization, often used alongside pruning and quantization for deployment-ready models. Understanding how distillation interacts with these complementary techniques is essential for building effective multi-stage optimization pipelines.

Structured Approximations

Approximation-based compression techniques restructure model representations to reduce complexity while maintaining expressive power, complementing the pruning and distillation methods discussed earlier.

Rather than eliminating individual parameters, approximation methods decompose large weight matrices and tensors into lower-dimensional components, allowing models to be stored and executed more efficiently. These techniques leverage the observation that many high-dimensional representations can be well-approximated by lower-rank structures, thereby reducing the number of parameters without a significant loss in performance. Unlike pruning, which selectively removes connections, or distillation, which transfers learned knowledge, factorization-based approaches optimize the internal representation of a model through structured approximations.
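The parameter savings from a low-rank structure can be illustrated with a small sketch. It assumes a weight matrix that is exactly the product of two thin factors; real weight matrices are only approximately low-rank, and the factors would come from a decomposition such as a truncated SVD. The helper names and all numeric values are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def low_rank_param_count(m, n, r):
    """Parameters needed to store an m x n matrix as (m x r) times (r x n)."""
    return r * (m + n)

# Hypothetical rank-2 factors of a 4 x 4 weight matrix W = U V.
U = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]   # 4 x 2
V = [[1.0, 2.0, 0.0, -1.0], [0.5, 0.0, 1.0, 3.0]]       # 2 x 4
W = matmul(U, V)

x = [1.0, -2.0, 0.5, 3.0]
dense_out = matvec(W, x)                 # uses all 16 entries of W
factored_out = matvec(U, matvec(V, x))   # uses only the 16 entries of U and V

print("dense output:   ", dense_out)
print("factored output:", factored_out)

# At realistic sizes the savings become significant.
m, n, r = 1024, 1024, 64
print("dense parameters:   ", m * n)
print("factored parameters:", low_rank_param_count(m, n, r))
```

For the tiny 4 x 4 case the factored form saves nothing, which shows that factorization only pays off when the rank \(r\) is much smaller than the matrix dimensions, as in the 1024 x 1024 example.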

Among the most widely used approximation techniques are: