On-Device Learning

DALL·E 3 Prompt: Drawing of a smartphone with its internal components exposed, revealing diverse miniature engineers of different genders and skin tones actively working on the ML model. The engineers, including men, women, and non-binary individuals, are tuning parameters, repairing connections, and enhancing the network on the fly. Data flows into the ML model, being processed in real-time, and generating output inferences.

Purpose

Why does on-device learning represent the most fundamental architectural shift in machine learning systems since the separation of training and inference, and what makes this capability essential for the future of intelligent systems?

On-device learning dismantles the assumption governing machine learning architecture for decades: the separation between where models are trained and where they operate. This redefines what systems can become by enabling continuous adaptation in the real world rather than static deployment of pre-trained models. The shift from centralized training to distributed, adaptive learning transforms systems from passive inference engines into intelligent agents capable of personalization, privacy preservation, and autonomous improvement in disconnected environments. This architectural revolution becomes essential as AI systems move beyond controlled data centers into unpredictable environments where pre-training cannot anticipate every scenario or deployment condition. Understanding on-device learning principles enables engineers to design systems that break free from static model limitations, creating adaptive intelligence that learns and evolves at the point of human interaction.

Distinguish on-device learning from centralized training approaches by comparing computational distribution, data locality, and coordination mechanisms

Identify key motivational drivers (personalization, latency, privacy, infrastructure efficiency) and evaluate when on-device learning is appropriate versus alternative approaches

Analyze how training amplifies resource constraints compared to inference, quantifying memory (3-5\(\times\)), computational (2-3\(\times\)), and energy overhead impacts on system design

Evaluate adaptation strategies including weight freezing, residual updates, and sparse updates by comparing their resource consumption, expressivity, and suitability for different device classes

Examine data efficiency techniques for learning with limited local datasets, including few-shot learning, experience replay, and data compression methods

Apply federated learning protocols to coordinate privacy-preserving model updates across heterogeneous device populations while managing communication efficiency and convergence challenges

Design on-device learning systems that integrate thermal management, memory hierarchy optimization, and power budgeting to maintain acceptable user experience

Implement practical deployment strategies that address MLOps integration challenges including device-aware pipelines, distributed monitoring, and heterogeneous update coordination

Distributed Learning Paradigm Shift

Operational frameworks (Chapter 13: ML Operations) establish the foundation for managing machine learning systems at scale through centralized orchestration, monitoring, and deployment pipelines. These frameworks assume controlled cloud environments where computational resources are abundant, network connectivity is reliable, and system behavior is predictable. However, as machine learning systems increasingly move beyond data centers to edge devices, these fundamental assumptions begin to break down.

A smartphone learning to predict user text input, a smart home device adapting to household routines, or an autonomous vehicle updating its perception models based on local driving conditions exemplify scenarios where traditional centralized training approaches prove inadequate. The smartphone encounters linguistic patterns unique to individual users that were not present in global training data. The smart home device must adapt to seasonal changes and family dynamics that vary dramatically across households. The autonomous vehicle faces local road conditions, weather patterns, and traffic behaviors that differ from its original training environment.

These scenarios exemplify on-device learning, where models must train and adapt directly on the devices where they operate.¹ This paradigm transforms machine learning from a centralized discipline to a distributed ecosystem where learning occurs across millions of heterogeneous devices, each operating under unique constraints and local conditions.

1 A11 Bionic Breakthrough: Apple’s A11 Bionic (2017) was the first mobile chip with sufficient computational power for on-device training, delivering 0.6 TOPS compared to the previous A10’s 0.2 TOPS. This \(3\times\) improvement, combined with 4.3 billion transistors and a dual-core Neural Engine, allowed gradient computation for the first time on mobile devices. Google’s Pixel Visual Core achieved similar capabilities with 8 custom Image Processing Units optimized for machine learning workloads.

The transition to on-device learning introduces a fundamental tension in machine learning systems design. While cloud-based architectures leverage abundant computational resources and controlled operational environments, edge devices must function within severely constrained resource envelopes characterized by limited memory capacity, restricted computational throughput, constrained energy budgets, and intermittent network connectivity. The very conditions that make on-device learning technically challenging also enable its most significant advantages: personalized adaptation through localized data processing, privacy preservation through data locality, and operational autonomy through independence from centralized infrastructure.

This chapter examines the theoretical foundations and practical methodologies necessary to navigate this architectural tension. Building on computational efficiency principles (Chapter 9: Efficient AI) and operational frameworks (Chapter 13: ML Operations), we investigate the specialized algorithmic techniques, architectural design patterns, and system-level principles that enable effective learning under extreme resource constraints. The challenge extends beyond conventional optimization of training algorithms, requiring reconceptualization of the entire machine learning pipeline for deployment environments where traditional computational assumptions fail.

The implications of this paradigm extend far beyond technical optimization, challenging established assumptions regarding machine learning system development, deployment, and maintenance lifecycles. Models transition from following predictable versioning patterns to exhibiting continuous divergence and adaptation trajectories. Performance evaluation methodologies shift from centralized monitoring dashboards to distributed assessment across heterogeneous user populations. Privacy preservation evolves from a regulatory compliance consideration to a core architectural requirement that shapes system design decisions.

Understanding these systemic implications requires examining both the compelling motivations driving organizational adoption of on-device learning and the substantial technical challenges that must be addressed. This analysis establishes the theoretical foundations and practical methodologies required to architect systems capable of effective learning at the network edge while operating within stringent constraints.

Motivations and Benefits

Machine learning systems have traditionally relied on centralized training pipelines, where models are developed and refined using large, curated datasets and powerful cloud-based infrastructure (Dean et al. 2012). Once trained, these models are deployed to client devices for inference, creating a clear separation between the training and deployment phases. While this architectural separation has served most use cases well, it imposes significant limitations in modern applications where local data is dynamic, private, or highly personalized.

On-device learning challenges this established model by enabling systems to train or adapt directly on the device, without relying on constant connectivity to the cloud. This shift represents more than a technological advancement: it reflects changing application requirements and user expectations that demand responsive, personalized, and privacy-preserving machine learning systems.

Consider a smartphone keyboard adapting to a user’s unique vocabulary and typing patterns. To personalize predictions, the system must perform gradient updates on a compact language model using locally observed text input. A single gradient update for even a minimal language model requires 50-100 MB of memory for activations and optimizer state. Modern smartphones typically allocate 200-300 MB to background applications like keyboards (varies by OS and device generation). This razor-thin margin, where a single training step can consume 25% or more of available memory (50 MB of a 200 MB budget, rising to 50% at 100 MB), exemplifies the central engineering challenge of on-device learning. The system must achieve meaningful personalization while operating within constraints so severe that traditional training approaches become architecturally infeasible. This quantitative reality drives the need for specialized techniques that make adaptation possible within extreme resource limitations.
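The arithmetic behind this margin can be checked directly. The sketch below is illustrative only: it takes the 50-100 MB and 200-300 MB ranges quoted above at face value, and `training_memory_fraction` is a hypothetical helper name, not part of any framework.

```python
def training_memory_fraction(step_mb: float, budget_mb: float) -> float:
    """Fraction of the background-app memory budget used by one training step."""
    if budget_mb <= 0:
        raise ValueError("budget_mb must be positive")
    return step_mb / budget_mb


# Ranges quoted in the text, in megabytes.
STEP_MB = (50, 100)      # activations + optimizer state for one gradient update
BUDGET_MB = (200, 300)   # memory typically granted to a background app

best_case = training_memory_fraction(STEP_MB[0], BUDGET_MB[1])    # 50 / 300
quoted_case = training_memory_fraction(STEP_MB[0], BUDGET_MB[0])  # 50 / 200
worst_case = training_memory_fraction(STEP_MB[1], BUDGET_MB[0])   # 100 / 200

print(f"best {best_case:.0%}, quoted {quoted_case:.0%}, worst {worst_case:.0%}")
```

Across the quoted ranges, a single step takes between roughly one sixth and one half of the budget, before the model weights and the keyboard's own working memory are counted.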

On-Device Learning Benefits

Understanding the driving forces behind on-device learning adoption requires examining the inherent limitations of traditional centralized approaches. Traditional machine learning systems rely on a clear division of labor between model training and inference. Training is performed in centralized environments with access to high-performance compute resources and large-scale datasets. Once trained, models are distributed to client devices, where they operate in a static inference-only mode.

While this centralized paradigm has proven effective in many deployments, it introduces fundamental limitations in scenarios where data is user-specific, behavior is dynamic, or connectivity is intermittent. These limitations become particularly acute as machine learning moves beyond controlled environments into real-world applications with diverse user populations and deployment contexts.

On-device learning addresses these limitations by enabling deployed devices to perform model adaptation using locally available data. It is not merely an efficiency optimization; it is a cornerstone of building trustworthy AI systems and opens Part IV: Trustworthy Systems. By keeping data local, it provides a powerful foundation for privacy. By adapting to individual users, it enhances fairness and utility. By enabling offline operation, it improves robustness against network failures and infrastructure dependencies. This chapter explores the engineering required to build these trustworthy, adaptive systems.

This shift from centralized to decentralized learning is motivated by four key considerations that reflect both technological capabilities and changing application requirements: personalization, latency and availability, privacy, and infrastructure efficiency (Li et al. 2020).

Personalization represents the most compelling motivation, as deployed models often encounter usage patterns and data distributions that differ substantially from their training environments. Local adaptation allows models to refine behavior in response to user-specific data, capturing linguistic preferences, physiological baselines, sensor characteristics, or environmental conditions. This capability proves essential in applications with high inter-user variability, where a single global model cannot serve all users effectively.

Latency and availability constraints provide additional justification for local learning. In edge computing scenarios, connectivity to centralized infrastructure may be unreliable, delayed, or intentionally limited to preserve bandwidth or reduce energy consumption. On-device learning enables autonomous improvement of models even in fully offline or delay-sensitive contexts, where round-trip updates to the cloud are architecturally infeasible.

Privacy considerations provide a third compelling driver. Many modern applications involve sensitive or regulated data, including biometric measurements, typed input, location traces, or health information. Local learning mitigates privacy concerns by keeping raw data on the device and operating within privacy-preserving boundaries, potentially aiding adherence to regulations such as GDPR,² HIPAA (Tomes 1996), or region-specific data sovereignty laws.

2 GDPR’s ML Impact: When GDPR took effect in May 2018 (Rasmussen et al. 2024), it required a lawful basis, such as explicit consent, for processing personal data, which sharply constrained centralized ML training on user data. The “right to be forgotten” also meant models trained on personal data could be legally required to “unlearn” specific users, which is impractical with traditional training short of retraining the model. This drove massive investment in privacy-preserving ML techniques.

Infrastructure efficiency provides economic motivation for distributed learning approaches. Centralized training pipelines require substantial backend infrastructure to collect, store, and process user data from potentially millions of devices. By shifting learning to the edge, systems reduce communication costs and distribute training workloads across the deployment fleet, relieving pressure on centralized resources while improving scalability.

Alternative Approaches and Decision Criteria

On-device learning represents a significant engineering investment with inherent complexity that may not be justified by the benefits. Before committing to this approach, teams should carefully evaluate whether simpler alternatives can achieve comparable results with lower operational overhead. Understanding when not to implement on-device learning is as important as understanding its benefits, as premature adoption can introduce unnecessary complexity without proportional value.

Several alternative approaches often suffice for personalization and adaptation requirements without local training complexity:

Feature-based Personalization: Provides effective customization by storing user preferences, interaction history, and behavioral features locally. Rather than adapting model weights, the system feeds these stored features into a static model to achieve personalization. News recommendation systems exemplify this approach by storing user topic preferences and reading patterns locally, then combining these features with a centralized content model to provide personalized recommendations without model updates.

Cloud-based Fine-tuning with Privacy Controls: Enables personalization through centralized adaptation with appropriate privacy safeguards. User data is processed in batches during off-peak hours using privacy-preserving techniques such as differential privacy³ or federated analytics. This approach often achieves superior accuracy compared to resource-constrained on-device updates while maintaining acceptable privacy properties for many applications.

User-specific Lookup Tables: Combine global models with personalized retrieval mechanisms. The system maintains a lightweight, user-specific lookup table for frequently accessed patterns while using a shared global model for generalization. This hybrid approach provides personalization benefits with minimal computational and storage overhead.
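As a rough illustration of the last alternative, the sketch below pairs a frozen global next-word model with a small per-user lookup table. Everything here is a hypothetical design for illustration: the class name `PersonalizedPredictor`, the dictionary standing in for the global model, and the `min_count` and `max_entries` thresholds are assumptions, not a description of any deployed keyboard.

```python
from collections import Counter, defaultdict


class PersonalizedPredictor:
    """Static global model plus a lightweight per-user lookup table."""

    def __init__(self, global_model, min_count=2, max_entries=1000):
        # The global model is never updated on the device. A dict mapping
        # previous word -> suggestion stands in for a real model here.
        self.global_model = global_model
        self.user_table = defaultdict(Counter)  # prev word -> next-word counts
        self.min_count = min_count              # evidence needed to override
        self.max_entries = max_entries          # cap on local storage

    def observe(self, prev_word, next_word):
        """Record local usage; no model weights change."""
        if prev_word in self.user_table or len(self.user_table) < self.max_entries:
            self.user_table[prev_word][next_word] += 1

    def predict(self, prev_word):
        """Prefer a well-supported personal pattern, else fall back to global."""
        counts = self.user_table.get(prev_word)
        if counts:
            word, seen = counts.most_common(1)[0]
            if seen >= self.min_count:
                return word
        return self.global_model.get(prev_word, "<unk>")


predictor = PersonalizedPredictor({"good": "morning", "thank": "you"})
predictor.observe("good", "grief")
first = predictor.predict("good")    # one observation is not enough to override
predictor.observe("good", "grief")
second = predictor.predict("good")   # now the personal pattern wins
```

Personalization here costs a few counter increments and a bounded table, with no gradient computation on the device.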

3 Differential Privacy: A mathematical framework that provides quantifiable privacy guarantees by adding carefully calibrated noise to computations. In federated learning, DP bounds how much about any individual user’s data can be inferred from model updates, even by aggregators. The key parameter ε controls the privacy-utility tradeoff: smaller ε means stronger privacy but lower model accuracy. Typical deployments use ε=1-8, requiring noise addition that can increase communication overhead by 2-10\(\times\) and reduce model accuracy by 1-5%. Essential for regulatory compliance and user trust in distributed learning systems.

The decision to implement on-device learning should be driven by quantifiable requirements that preclude these simpler alternatives. True data privacy constraints that legally prohibit cloud processing, genuine network limitations that prevent reliable connectivity, quantitative latency budgets that preclude cloud round-trips, or demonstrable performance improvements that justify the operational complexity represent legitimate drivers for on-device learning adoption.

For applications with critical timing requirements (camera processing under 33 ms, voice response under 500 ms, AR/VR motion-to-photon latency under 20 ms, or safety-critical control under 10 ms), network round-trip times (typically 50-200 ms) exceed the tightest budgets outright and consume a large share of the 500 ms voice budget, making cloud-based alternatives infeasible or marginal. In such scenarios, on-device execution, including any model adaptation, becomes necessary regardless of complexity considerations. In all other cases, teams should thoroughly evaluate simpler solutions before committing to the significant engineering investment that on-device learning requires.
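The comparison between latency budgets and network round trips can be made concrete with a small check. This is a minimal sketch using only the figures quoted above. The function `cloud_feasibility`, its three labels, and the `server_ms` allowance for server-side processing are assumptions introduced for illustration.

```python
# Latency budgets and round-trip range quoted in the text (milliseconds).
LATENCY_BUDGETS_MS = {
    "camera_frame_30fps": 33,
    "voice_response": 500,
    "ar_vr_motion_to_photon": 20,
    "safety_critical_control": 10,
}
NETWORK_RTT_MS = (50, 200)


def cloud_feasibility(budget_ms, server_ms=0.0, rtt_ms=NETWORK_RTT_MS):
    """Classify a cloud round trip against a latency budget.

    server_ms is a hypothetical allowance for server-side processing;
    the text gives no figure for it.
    """
    best_rtt, worst_rtt = rtt_ms
    if best_rtt + server_ms > budget_ms:
        return "infeasible"   # misses the budget even on the fastest network
    if worst_rtt + server_ms > budget_ms:
        return "marginal"     # fits only when the network is fast
    return "feasible"


for name, budget in LATENCY_BUDGETS_MS.items():
    print(f"{name}: {cloud_feasibility(budget)}")
```

On round-trip time alone, the camera, AR/VR, and safety-critical budgets are missed even at 50 ms. The 500 ms voice budget comes under pressure only once server-side processing is added, for example `cloud_feasibility(500, server_ms=350)`, where 350 ms is an assumed figure.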

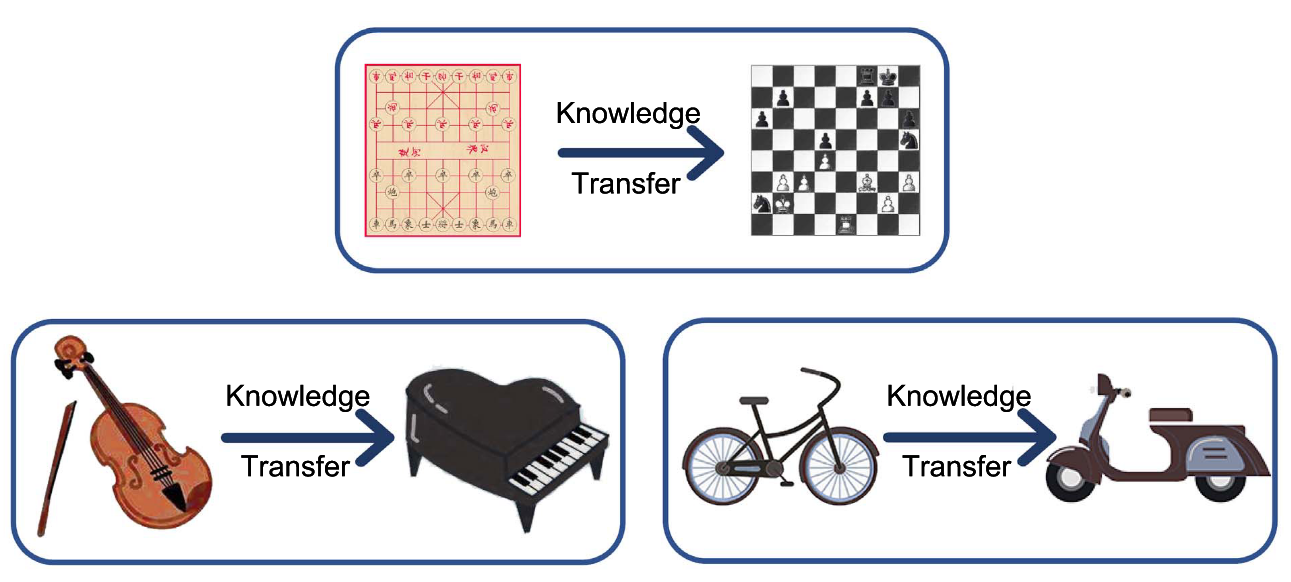

These motivations are grounded in the broader concept of knowledge transfer, where a pretrained model transfers useful representations to a new task or domain. This foundational principle makes on-device learning both feasible and effective, enabling sophisticated adaptation with minimal local resources. As depicted in Figure 1, knowledge transfer can occur between closely related tasks (e.g., playing different board games or musical instruments), or across domains that share structure (e.g., from riding a bicycle to driving a scooter). In the context of on-device learning, this means leveraging a model pretrained in the cloud and adapting it efficiently to a new context using only local data and limited updates. The figure highlights the key idea: pretrained knowledge allows fast adaptation without relearning from scratch, even when the new task diverges in input modality or goal.

This conceptual shift, made possible by transfer learning and adaptation, underpins real-world on-device applications. Whether adapting a language model for personal typing preferences, adjusting gesture recognition to individual movement patterns, or recalibrating a sensor model in changing environments, on-device learning allows systems to remain responsive, efficient, and user-aligned over time.

Real-World Application Domains

Building on these established motivations (personalization, latency, privacy, and infrastructure efficiency), real-world deployments demonstrate the practical impact of on-device learning across diverse application domains. These domains span consumer technologies, healthcare, industrial systems, and embedded applications, each showcasing scenarios where the benefits outlined above become essential for effective machine learning deployment.

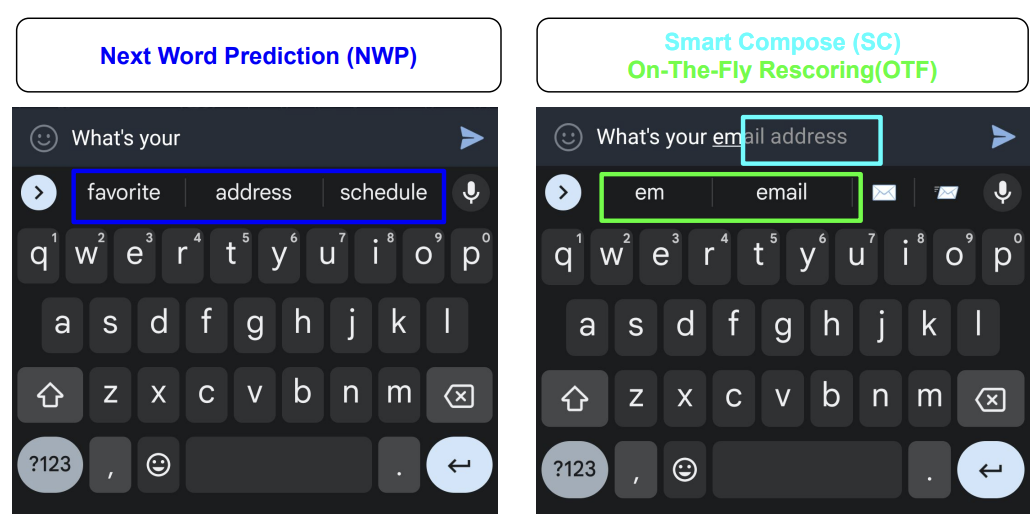

Mobile input prediction represents the most mature and widely deployed example of on-device learning. In systems such as smartphone keyboards, predictive text and autocorrect features benefit substantially from continuous local adaptation. User typing patterns are highly personalized and evolve dynamically, making centralized static models insufficient for optimal user experience. On-device learning allows language models to fine-tune their predictions directly on the device, achieving personalization while maintaining data locality.

For instance, Google’s Gboard employs federated learning to improve shared models across a large population of users while keeping raw data local to each device (Hard et al. 2018).⁴

4 Gboard Federated Pioneer: Gboard became the first major commercial federated learning deployment in 2017, processing updates from over 1 billion devices. The technical challenge was immense: aggregating model updates while ensuring no individual user’s typing patterns could be inferred. Google’s success with Gboard proved federated learning could work at planetary scale, demonstrating 10-20% accuracy improvements over static models while maintaining strict differential privacy guarantees.

As shown in Figure 2, different prediction strategies illustrate how local adaptation operates in real time: next-word prediction (NWP) suggests likely continuations based on prior text, while Smart Compose uses on-the-fly rescoring to offer dynamic completions, demonstrating the sophistication of local inference mechanisms.

Building on the consumer applications, wearable and health monitoring devices present equally compelling use cases with additional regulatory constraints. These systems rely on real-time data from accelerometers, heart rate sensors, and electrodermal activity monitors to track user health and fitness. Physiological baselines vary dramatically between individuals, creating a personalization challenge that static models cannot address effectively. On-device learning allows models to adapt to these individual baselines over time, substantially improving the accuracy of activity recognition, stress detection, and sleep staging while meeting regulatory requirements for data localization.

Voice interaction technologies present another important application domain with unique acoustic challenges. Wake-word detection⁵ and voice interfaces in devices such as smart speakers and earbuds must recognize voice commands quickly and accurately, even in noisy or dynamic acoustic environments.

5 Wake-Word Detection: Always-listening keyword spotting that activates voice assistants (“Hey Siri,” “OK Google,” “Alexa”). These systems run continuously at ~1 mW power consumption, roughly 1000\(\times\) less than full speech recognition. They use tiny neural networks (~100 KB) with specialized architectures optimized for sub-100 ms latency and minimal false positive rates (<0.1 activations per hour). Modern systems achieve 95%+ accuracy while processing 16 kHz audio in real-time, making on-device personalization critical for adapting to individual voice characteristics and reducing false activations.

These systems face strict latency requirements: voice interfaces must maintain end-to-end response times under 500 ms to preserve natural conversation flow, with wake-word detection requiring sub-100 ms response times to avoid user frustration. Local training allows models to adapt to the user’s unique voice profile and changing ambient context, reducing false positives and missed detections while meeting these demanding performance constraints. This adaptation proves particularly valuable in far-field audio settings, where microphone configurations and room acoustics vary dramatically across deployments.

Beyond consumer applications, industrial IoT and remote monitoring systems demonstrate the value of on-device learning in resource-constrained environments. In applications such as agricultural sensing, pipeline monitoring, or environmental surveillance, connectivity to centralized infrastructure may be limited, expensive, or entirely unavailable. On-device learning allows these systems to detect anomalies, adjust thresholds, or adapt to seasonal trends without continuous communication with the cloud. This capability proves necessary for maintaining autonomy and reliability in edge-deployed sensor networks, where system downtime or missed detections can have significant economic or safety consequences.

The most demanding applications emerge in embedded computer vision systems, including those in robotics, AR/VR, and smart cameras, which combine complex visual processing with extreme timing constraints. Camera applications must process frames within 33 ms to maintain 30 FPS real-time performance, while AR/VR systems demand motion-to-photon latencies under 20 ms to prevent nausea and maintain immersion. Safety-critical control systems require even tighter bounds, typically under 10 ms, where delayed decisions can have severe consequences. These systems operate in novel or rapidly changing environments that differ substantially from their original training conditions. On-device adaptation allows models to recalibrate to new lighting conditions, object appearances, or motion patterns while meeting these critical latency budgets that fundamentally drive the architectural decision between on-device versus cloud-based processing.

Each domain reveals a common pattern: deployment environments introduce variation and context-specific requirements that cannot be anticipated during centralized training. These applications demonstrate how the motivational drivers (personalization, latency, privacy, and infrastructure efficiency) manifest as concrete engineering constraints. Mobile keyboards face memory limitations for storing user-specific patterns, wearable devices encounter energy budgets that restrict training frequency, voice interfaces must meet sub-100 ms latency requirements that preclude cloud coordination, and industrial IoT systems operate in network-constrained environments that demand autonomous adaptation. This pattern illuminates the fundamental design requirement shaping all subsequent technical decisions: learning must be performed efficiently, privately, and reliably under significant resource constraints that we examine through constraint analysis (Section 1.3), adaptation techniques (Section 1.4), and federated coordination (Section 1.6).

Architectural Trade-offs: Centralized vs. Decentralized Training

These applications demonstrate the practical value of on-device learning across diverse domains. Building on this foundation, we now examine how on-device learning differs from traditional ML architectures, revealing a complete reimagining of the training lifecycle that extends far beyond simple deployment choices.

Understanding the shift that on-device learning represents requires examining how traditional machine learning systems are structured and where their limitations become apparent. Most machine learning systems today follow a centralized learning paradigm that has served the field well but increasingly shows strain under modern deployment requirements. Models are trained in data centers using large-scale, curated datasets aggregated from many sources. Once trained, these models are deployed to client devices in a static form, where they perform inference without further modification. Updates to model parameters, either to incorporate new data or to improve generalization, are handled periodically through offline retraining, often using newly collected or labeled data sent back from the field.

This established centralized model offers numerous proven advantages: high-performance computing infrastructure, access to diverse data distributions, and robust debugging and validation pipelines. It also depends on several assumptions that may not hold in modern deployment scenarios: reliable data transfer, trust in data custodianship, and infrastructure capable of managing global updates across device fleets. As machine learning is deployed into increasingly diverse and distributed environments, the limitations of this approach become more apparent and often prohibitive.

In contrast to this centralized approach, on-device learning embraces an inherently decentralized paradigm that challenges many traditional assumptions. Each device maintains its own copy of a model and adapts it locally using data that is typically unavailable to centralized infrastructure. Training occurs on-device, often asynchronously and under varying resource conditions that change based on device usage patterns, battery levels, and thermal states. Data never leaves the device, reducing privacy exposure but also complicating coordination between devices. Devices may differ dramatically in their hardware capabilities, runtime environments, and patterns of use, making the learning process heterogeneous and difficult to standardize. These hardware variations create significant system design challenges.

This decentralized architecture introduces a new class of systems challenges that extend well beyond traditional machine learning concerns. Devices may operate with different versions of the model, leading to inconsistencies in behavior across the deployment fleet. Evaluation and validation become significantly more complex, as there is no central point from which to measure performance across all devices (McMahan et al. 2017). Model updates must be carefully managed to prevent degradation, and safety guarantees become substantially harder to enforce in the absence of centralized testing and validation infrastructure.

Managing thousands of heterogeneous edge devices exceeds typical distributed systems complexity. Device heterogeneity extends beyond hardware differences to include varying operating system versions, security patches, network configurations, and power management policies. At any given time, 20-40% of devices are offline (Bonawitz et al. 2019), while others have been disconnected for weeks or months, creating persistent coordination challenges.

When disconnected devices reconnect, they require state reconciliation to avoid version conflicts. Update verification becomes critical as devices can silently fail to apply updates or report success while running outdated models. Robust systems implement multi-stage verification: cryptographic signatures confirm update integrity, functional tests validate model behavior, and telemetry confirms deployment success. Rollback strategies must handle partial deployments where some devices received updates while others remain on previous versions, requiring sophisticated orchestration to maintain system consistency during failure recovery.
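The multi-stage verification described above might be sketched as follows. This is an assumption-laden illustration rather than a production design: an HMAC-SHA256 tag stands in for a cryptographic signature (real deployments would more likely use asymmetric signatures), and `apply_update`, `load_model`, and `golden_cases` are hypothetical names.

```python
import hashlib
import hmac


def verify_integrity(payload: bytes, tag: bytes, key: bytes) -> bool:
    """Stage 1: confirm the update bytes match the tag issued by the server."""
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


def passes_functional_tests(model, golden_cases, min_accuracy=0.9) -> bool:
    """Stage 2: run the candidate model on known input/output pairs."""
    if not golden_cases:
        return False
    correct = sum(1 for x, y in golden_cases if model(x) == y)
    return correct / len(golden_cases) >= min_accuracy


def apply_update(current_model, payload, tag, key, load_model, golden_cases):
    """Return (active_model, telemetry); keep the current model on any failure."""
    if not verify_integrity(payload, tag, key):
        return current_model, {"status": "rejected", "stage": "integrity"}
    candidate = load_model(payload)
    if not passes_functional_tests(candidate, golden_cases):
        return current_model, {"status": "rolled_back", "stage": "functional"}
    # Stage 3: telemetry reports a hash of the payload behind the active
    # model, so the server can detect devices that claim success but run
    # an outdated version.
    model_hash = hashlib.sha256(payload).hexdigest()
    return candidate, {"status": "applied", "model_hash": model_hash}
```

Returning the previous model on every failure path gives each device a local rollback, which keeps partial fleet deployments recoverable without server intervention.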

These challenges require different approaches to system design and operational management compared to centralized ML systems, building on the distributed systems principles from Chapter 13: ML Operations while introducing edge-specific complexities.

Despite these challenges, decentralization introduces opportunities that often justify the additional complexity. It allows for deep personalization without centralized oversight, supports robust learning in disconnected or bandwidth-limited environments, and reduces the operational cost and infrastructure burden for model updates. Realizing these benefits raises questions of how to effectively coordinate learning across devices, whether through periodic synchronization, federated aggregation, or hybrid approaches that balance local and global objectives.

The move from centralized to decentralized learning represents more than a shift in deployment architecture. It reshapes the entire design space for machine learning systems, requiring new approaches to model architecture, training algorithms, data management, and system validation. In centralized training, data is aggregated from many sources and processed in large-scale data centers, where models are trained, validated, and then deployed in a static form to edge devices. In contrast, on-device learning introduces a fundamentally different paradigm: models are updated directly on client devices using local data, often asynchronously and under diverse hardware conditions. This architectural transformation introduces coordination challenges while enabling autonomous local adaptation, requiring careful consideration of validation, system reliability, and update orchestration across heterogeneous device populations.

On-device learning responds to the limitations of centralized machine learning workflows. The transformation from centralized to decentralized learning creates three distinct operational phases, each with different characteristics and challenges.

The traditional centralized paradigm begins with cloud-based training on aggregated data, followed by static model deployment to client devices. This approach works well when data collection is feasible, network connectivity is reliable, and a single global model can serve all users effectively. However, it breaks down when data becomes personalized, privacy-sensitive, or collected in environments with limited connectivity.

Once deployed, local differences begin to emerge as each device encounters its own unique data distribution. Devices collect data that reflects individual user patterns, environmental conditions, and usage contexts. This data is often non-IID (non-independent and identically distributed)6 and noisy, requiring local model adaptation to maintain performance. This transition marks the shift from global generalization to local specialization.

6 Non-IID (Non-Independent and Identically Distributed): In machine learning, data is IID when samples are drawn independently from the same distribution. Non-IID data violates this assumption and is common in federated learning, where each device collects data from different users, environments, or use cases. For example, smartphone keyboard data varies dramatically between users (languages, writing styles, autocorrect needs), which makes personalized training essential but complicates convergence.

The final phase introduces federated coordination, where devices periodically synchronize their local adaptations through aggregated model updates rather than raw data sharing. This enables privacy-preserving global refinement while maintaining the benefits of local personalization.
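One common way to realize this kind of aggregation is federated averaging, in which a coordinator combines per-device weights in proportion to the number of local samples each device trained on. The sketch below is illustrative only: the helper name `federated_average`, the flat-list weight representation, and the sample counts are assumptions introduced here, not details taken from this chapter.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sample_counts: Sequence[int],
) -> List[float]:
    """Sample-weighted average of per-device weight vectors (hypothetical helper)."""
    if not client_weights or len(client_weights) != len(client_sample_counts):
        raise ValueError("need exactly one sample count per client weight vector")
    total = sum(client_sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, count in zip(client_weights, client_sample_counts):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        share = count / total  # devices with more local data get more influence
        for i, w in enumerate(weights):
            averaged[i] += share * w
    return averaged


# Three devices with made-up weights and made-up local sample counts.
global_weights = federated_average(
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [10, 30, 60],
)
print(global_weights)
```

Only the weight vectors and sample counts reach the coordinator in this sketch; the raw local data never leaves the device.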

These three distinct phases (centralized training, local adaptation, and federated coordination) represent an architectural evolution that reshapes every aspect of the machine learning lifecycle. Figure 3 illustrates how data flow, computational distribution, and coordination mechanisms differ across these phases, highlighting the increasing complexity but also the enhanced capabilities that emerge at each stage. Understanding this progression helps frame the challenges that on-device learning systems must address.

Design Constraints

Part III established efficiency principles that shape all machine learning systems. Chapter 9: Efficient AI introduced three efficiency dimensions (algorithmic, compute, and data efficiency) and revealed through scaling laws why brute-force approaches hit fundamental limits. Chapter 10: Model Optimizations developed compression techniques including quantization, pruning, and knowledge distillation that enable deployment on resource-constrained devices. Chapter 11: AI Acceleration characterized edge hardware capabilities from microcontrollers to mobile accelerators. These chapters focused primarily on inference workloads: running pre-trained models efficiently.

On-device learning operates under these same efficiency constraints but with training-specific amplifications that make optimization dramatically more challenging. Where inference requires a single forward pass through the network, training demands forward propagation, gradient computation through backpropagation, and weight updates, increasing memory requirements by 3-5\(\times\) and computational costs by 2-3\(\times\). The model compression techniques that enable efficient inference become baseline requirements rather than optimizations, as training within edge device constraints would be impossible without aggressive compression.
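To make the memory amplification concrete, the following back-of-the-envelope sketch adds up the buffers that training keeps resident and compares them with an inference-only footprint. The function names and every number in the example (parameter count, activation counts, one optimizer slot for momentum) are hypothetical values chosen for illustration, not measurements from a real model.

```python
def inference_memory_bytes(num_params: int, peak_activation_elems: int,
                           bytes_per_value: int = 4) -> int:
    """Weights plus the largest live activation buffer."""
    return (num_params + peak_activation_elems) * bytes_per_value


def training_memory_bytes(num_params: int, cached_activation_elems: int,
                          optimizer_slots: int = 0,
                          bytes_per_value: int = 4) -> int:
    """Weights + gradients + optimizer state + all cached activations."""
    weights = num_params
    gradients = num_params                    # one gradient per weight
    optimizer = optimizer_slots * num_params  # e.g. 1 slot for momentum, 2 for Adam
    return (weights + gradients + optimizer + cached_activation_elems) * bytes_per_value


# Hypothetical small model: 100k parameters, 20k-element peak activation at
# inference, 150k activation elements cached for the backward pass.
infer = inference_memory_bytes(100_000, 20_000)
train = training_memory_bytes(100_000, 150_000, optimizer_slots=1)
print(infer, train, train / infer)  # 480000 1800000 3.75
```

With these assumed sizes the training footprint is 3.75 times the inference footprint, which falls inside the 3-5\(\times\) range quoted above; different architectures and optimizers will land elsewhere.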

Given the established motivations for on-device learning, we now examine the fundamental engineering challenges that shape its implementation. Enabling learning on the device requires completely rethinking conventional assumptions about where and how machine learning systems operate. In centralized environments, models are trained with access to extensive compute infrastructure, large and curated datasets, and generous memory and energy budgets. At the edge, none of these assumptions hold, creating a fundamentally different design space.

On-device learning constraints fall into three critical dimensions that parallel but extend the efficiency framework from Part III: model compression requirements (extending algorithmic efficiency), sparse and non-uniform data characteristics (extending data efficiency), and severely limited computational resources (extending compute efficiency). These three dimensions form an interconnected constraint space that defines the feasible region for on-device learning systems, with each dimension imposing distinct limitations that influence algorithmic choices, system architecture, and deployment strategies.

Quantifying Training Overhead on Edge Devices

The transition from inference-only deployment to on-device training creates multiplicative rather than additive complexity. These constraints interact and amplify each other in ways that reshape system design requirements, building on the resource optimization principles from Chapter 9: Efficient AI while introducing new challenges specific to distributed learning environments.

The efficiency constraints introduced in Part III apply to both inference and training, but training amplifies each constraint dimension, from roughly 2-3\(\times\) for compute to as much as 10-50\(\times\) for energy per sample. Table 1 quantifies how training workloads intensify the challenges established in Chapter 9: Efficient AI, Chapter 10: Model Optimizations, and Chapter 11: AI Acceleration.

These amplifications explain why simply applying Part III optimization techniques to training workloads proves insufficient. Each constraint category shapes on-device learning system design, requiring approaches that build on but extend beyond the inference-focused methods from earlier chapters.

| Constraint Dimension | Inference (Part III) | Training Amplification | Impact on Design |
|---|---|---|---|
| Memory Footprint | Model weights + single activation map | Weights + full activation cache + gradients + optimizer state | 3-5\(\times\) increase; forces aggressive compression |
| Compute Operations | Forward pass only | Forward + backward + weight update | 2-3\(\times\) increase; limits model complexity |
| Memory Bandwidth | Sequential weight reads | Bidirectional data flow for gradients | 5-10\(\times\) increase; creates bottlenecks |
| Energy per Sample | Single inference operation | Multiple gradient steps with convergence | 10-50\(\times\) increase; requires opportunistic scheduling |
| Data Requirements | Pre-collected, curated datasets | Sparse, noisy, streaming local data | Necessitates sample-efficient methods |
| Hardware Utilization | Optimized for forward passes | Different access patterns for backprop | Inference accelerators may not help training |

Figure 4 illustrates a pipeline that combines offline pre-training with online adaptive learning on resource-constrained IoT devices. The system first undergoes meta-training with generic data. During deployment, device-specific constraints such as data availability, compute, and memory shape the adaptation strategy by ranking and selecting layers and channels to update. This allows efficient on-device learning within limited resource envelopes.

Model Constraints

The first dimension of on-device learning constraints centers on the model itself: its structure, size, and computational requirements determine whether on-device training is possible at all, let alone practical. Unlike cloud-deployed models that can span billions of parameters and rely on multi-gigabyte memory budgets, models intended for on-device learning must conform to tight constraints on memory, storage, and computational complexity. These constraints apply at inference time and become even more restrictive during training, where additional resources are needed for gradient computation, parameter updates, and optimizer state management.

The scale of these constraints becomes apparent when examining specific examples across the device spectrum. The MobileNetV2 architecture, commonly used in mobile vision tasks, requires approximately 14 MB of storage in its standard configuration. While this memory requirement is entirely feasible for modern smartphones with gigabytes of available RAM, it far exceeds the memory available on embedded microcontrollers such as the Arduino Nano 33 BLE Sense7, which provides only 256 KB of SRAM and 1 MB of flash storage. This dramatic difference in available resources necessitates aggressive model compression techniques. In such severely constrained platforms, even a single layer of a typical convolutional neural network may exceed available RAM during training due to the need to store intermediate feature maps and gradient information.

7 Arduino Edge Computing Reality: The Arduino Nano 33 BLE Sense represents typical microcontroller constraints: its 256 KB of SRAM is roughly 65,000 times smaller than the 16 GB of RAM in a flagship smartphone. To put this in perspective, storing just one 224×224×3 RGB image (about 150 KB) would consume nearly 60% of available memory. Training requires 3-5\(\times\) more memory for gradients and activations, making even tiny models challenging. The 1 MB flash storage can hold only the smallest quantized models, forcing designers to use 8-bit or even 4-bit representations.

Beyond static storage requirements, the training process itself dramatically expands the effective memory footprint, creating an additional layer of constraint. Standard backpropagation requires caching activations for each layer during the forward pass, which are then reused during gradient computation in the backward pass. As established in the amplification analysis above, this activation caching multiplies memory requirements compared to inference-only deployment. For a seemingly modest 10-layer convolutional model processing \(64 \times 64\) images, the required memory can reach 1 to 2 MB, well beyond the SRAM capacity of most embedded systems. This gap highlights the fundamental tension between model expressiveness and resource availability.
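The activation-caching cost can be estimated with simple arithmetic. The sketch below assumes, purely for illustration, ten convolutional layers that each preserve the \(64 \times 64\) spatial resolution, produce 8 output channels, and store FP32 activations; the layer widths and the helper name `cached_activation_bytes` are assumptions, not a description of any particular network.

```python
from typing import Sequence


def cached_activation_bytes(height: int, width: int,
                            channels_per_layer: Sequence[int],
                            bytes_per_value: int = 4) -> int:
    """Bytes needed to keep every layer's output for the backward pass."""
    return sum(height * width * c * bytes_per_value for c in channels_per_layer)


layers = [8] * 10  # assumed: 10 layers, 8 channels each, no downsampling
total = cached_activation_bytes(64, 64, layers)
print(total, total / 2**20)  # 1310720 bytes, 1.25 MiB

sram_bytes = 256 * 1024      # SRAM of a 256 KB microcontroller
print(total / sram_bytes)    # 5.0, i.e. five times the available SRAM
```

Doubling the assumed channel width to 16 doubles the cache to 2.5 MiB, which is why layer width and spatial resolution dominate the training footprint on small devices.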

Compounding these memory constraints, model complexity directly affects runtime energy consumption and thermal limits, introducing additional practical barriers to deployment. In systems such as smartwatches or battery-powered wearables, sustained model training can rapidly deplete energy reserves or trigger thermal throttling that degrades performance. Training a full model using floating-point operations on these devices is often infeasible from an energy perspective, even when memory constraints are satisfied. These practical limitations have motivated the development of ultra-lightweight model variants, such as MLPerf Tiny benchmark networks (Banbury et al. 2021), which fit within 100–200 KB and can be adapted using only partial gradient updates. These specialized models employ aggressive quantization and pruning strategies to achieve such compact representations while maintaining sufficient expressiveness for meaningful adaptation.

The practical implications of battery and thermal constraints extend beyond just limiting training duration. Mobile devices must carefully balance training opportunities with user experience. Aggressive on-device training can cause noticeable device heating and rapid battery drain, leading to user dissatisfaction and potential app uninstalls. Modern smartphones typically limit sustained processing to 2-3 W for ML workloads to prevent thermal discomfort, though they can burst to 5-10 W for brief periods before thermal throttling kicks in. Training even modest models can easily exceed these sustainable power limits. This reality necessitates intelligent scheduling strategies: training during charging periods when thermal dissipation is improved, utilizing low-power cores for gradient computation when possible, and implementing thermal-aware duty cycling that pauses training when temperature thresholds are exceeded. Some systems even leverage device usage patterns, scheduling intensive adaptation only during overnight charging when the device is idle and connected to power.
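The scheduling strategies described above can be expressed as a simple gating policy. The following is a minimal sketch under stated assumptions: the `DeviceState` fields, the `should_train` helper, and the 40 °C threshold are hypothetical, and a real system would read these signals from platform-specific power and thermal APIs.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    is_charging: bool
    is_idle: bool
    temperature_c: float


def should_train(state: DeviceState, max_temp_c: float = 40.0) -> bool:
    """Allow a training burst only while charging, idle, and cool enough."""
    if state.temperature_c >= max_temp_c:
        return False  # thermal-aware pause: wait for the device to cool down
    return state.is_charging and state.is_idle


overnight = DeviceState(is_charging=True, is_idle=True, temperature_c=31.0)
in_use = DeviceState(is_charging=True, is_idle=False, temperature_c=31.0)
too_hot = DeviceState(is_charging=True, is_idle=True, temperature_c=43.0)

print(should_train(overnight), should_train(in_use), should_train(too_hot))
# True False False
```

Checking the policy before every training burst, rather than once per session, gives the duty-cycling behavior: training stops as soon as the user picks up the device or the temperature crosses the threshold.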

Given these multifaceted constraints, the model architecture itself must be designed with on-device learning capabilities in mind from the outset. Many conventional architectures, such as large transformers or deep convolutional networks, are simply not viable for on-device adaptation due to their inherent size and computational complexity. Instead, specialized lightweight architectures such as MobileNets8, SqueezeNet (Iandola et al. 2016), and EfficientNet (Tan and Le 2019) have been developed specifically for resource-constrained environments. These models rethink how neural networks are structured, employing techniques such as depthwise separable convolutions9, bottleneck layers, and aggressive quantization to dramatically reduce memory and compute requirements while maintaining sufficient performance for practical applications.

8 MobileNet Innovation: Google’s MobileNet family revolutionized mobile AI by achieving 10-20\(\times\) parameter reduction compared to traditional CNNs. MobileNetV1 (2017) used depthwise separable convolutions to reduce floating-point operations (FLOPs) by 8-9\(\times\), while MobileNetV2 (2018) added inverted residuals and linear bottlenecks. The breakthrough allowed real-time inference on smartphones: MobileNetV2 runs ImageNet classification in ~75 ms on a Pixel phone versus 1.8 seconds for ResNet-50 (He et al. 2016).

9 Depthwise Separable Convolutions: This technique decomposes a standard convolution into two operations: a depthwise convolution (a single filter per input channel) and a pointwise convolution (a 1×1 convolution that combines channels). For a 3×3 convolution with 512 input and output channels, the standard form requires about 2.4 M parameters (3×3×512×512), while the depthwise separable form needs only about 267 K (3×3×512 + 512×512), roughly a 9\(\times\) reduction. The computational savings are of similar magnitude, making real-time inference possible on mobile CPUs.
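The parameter savings of a depthwise separable convolution follow directly from counting weights. The sketch below does that count for a 3×3 layer with 512 input and 512 output channels, ignoring biases; the function names are introduced here for illustration.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """One kernel x kernel filter per (input channel, output channel) pair."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Depthwise stage (one filter per input channel) plus 1x1 pointwise stage."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


std = standard_conv_params(3, 512, 512)
sep = depthwise_separable_params(3, 512, 512)
print(std, sep, round(std / sep, 2))  # 2359296 266752 8.84
```

For a 3×3 kernel the reduction factor approaches 9 as the channel count grows, because the pointwise stage dominates the separable layer's parameter count.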

These architectures are often designed to be modular, allowing for easy adaptation and fine-tuning. For example, MobileNets (Howard et al. 2017) can be configured with different width multipliers and resolution settings to balance performance and resource usage. Concretely, MobileNetV2 with α=1.0 requires 3.4 M parameters (13.6 MB in FP32), but with α=0.5 this drops to 0.7 M parameters (2.8 MB), enabling deployment on devices with just 4 MB available RAM. This flexibility is important for on-device learning, where the model must adapt to the specific constraints of the deployment environment.
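The storage figures above are parameter counts multiplied by bytes per weight. The short sketch below reproduces that arithmetic, taking the parameter counts quoted in the text as given and using decimal megabytes; the INT8 case is an added assumption to show how precision interacts with the width multiplier.

```python
def model_size_mb(num_params: float, bits_per_weight: int = 32) -> float:
    """Storage for the weights alone, in decimal megabytes."""
    return num_params * bits_per_weight / 8 / 1e6


full_fp32 = model_size_mb(3.4e6)     # width multiplier 1.0, FP32
half_fp32 = model_size_mb(0.7e6)     # width multiplier 0.5, FP32
half_int8 = model_size_mb(0.7e6, 8)  # width multiplier 0.5, INT8 (assumed)

for name, size in [("a=1.0 FP32", full_fp32),
                   ("a=0.5 FP32", half_fp32),
                   ("a=0.5 INT8", half_int8)]:
    print(f"{name}: {size:.1f} MB, fits in 4 MB: {size <= 4.0}")
```

This counts weights only. Training also needs room for activations, gradients, and optimizer state, so a model whose weights fit in 4 MB does not necessarily train in 4 MB.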

While model architecture determines the memory and computational baseline for on-device learning, the characteristics of available training data introduce equally fundamental limitations that shape every aspect of the learning process.

Data Constraints

The second dimension of on-device learning constraints centers on data availability and quality. The nature of data available to on-device ML systems differs dramatically from the large, curated, and centrally managed datasets used in cloud-based training. At the edge, data is locally collected, temporally sparse, and often unstructured or unlabeled, creating a different learning environment. These characteristics introduce multifaceted challenges in volume, quality, and statistical distribution, all of which directly affect the reliability and generalizability of learning on the device.

Data volume represents the first major constraint, severely limited by both storage constraints and the sporadic nature of user interaction. For example, a smart fitness tracker may collect motion data only during physical activity, generating relatively few labeled samples per day. If a user wears the device for just 30 minutes of exercise, only a few hundred data points might be available for training, compared to the thousands or millions typically required for effective supervised learning in controlled environments. This scarcity changes the learning paradigm from data-rich to data-efficient algorithms.

Beyond volume limitations, on-device data is frequently non-IID (non-independent and identically distributed) (Zhao et al. 2018), creating statistical challenges that cloud-based systems rarely encounter. This heterogeneity manifests across multiple dimensions: user behavior patterns, environmental conditions, linguistic preferences, and usage contexts. A voice assistant deployed across households encounters dramatic variation in accents, languages, speaking styles, and command patterns. Similarly, smartphone keyboards adapt to individual typing patterns, autocorrect preferences, and multilingual usage that varies dramatically between users. This data heterogeneity complicates both model convergence and the design of update mechanisms that must generalize across devices while maintaining personalization.

Compounding these distribution challenges, label scarcity presents an additional critical obstacle that severely limits traditional learning approaches. Most edge-collected data is unlabeled by default, requiring systems to learn from weak or implicit supervision signals. In a smartphone camera, for instance, the device may capture thousands of images throughout the day, but only a few are associated with meaningful user actions (e.g., tagging, favoriting, or sharing), which could serve as implicit labels. In many applications, including detecting anomalies in sensor data and adapting gesture recognition models, explicit labels may be entirely unavailable, making traditional supervised learning infeasible without developing alternative methods for weak supervision or unsupervised adaptation.

Data quality issues add another layer of complexity to the on-device learning challenge. Noise and variability further degrade the already limited data available for training. Embedded systems such as environmental sensors or automotive ECUs may experience fluctuations in sensor calibration, environmental interference, or mechanical wear, leading to corrupted or drifting input signals over time. Without centralized validation systems to detect and filter these errors, they may silently degrade learning performance, creating a reliability challenge that cloud-based systems can more easily address through data preprocessing pipelines.

Finally, data privacy and security concerns impose the most restrictive constraints of all, often making data sharing architecturally impossible rather than merely undesirable. Sensitive information, such as health data, personal communications, or user behavioral patterns, must be protected from unauthorized access under legal and ethical requirements. This constraint often completely precludes the use of traditional data-sharing methods, such as uploading raw data to a central server for training. Instead, on-device learning must rely on sophisticated techniques that enable local adaptation without ever exposing sensitive information, changing how learning systems can be designed and validated.

Compute Constraints

Chapter 11: AI Acceleration characterized the edge hardware landscape that provides the computational substrate for machine learning: microcontrollers like STM32F4 and ESP32 at the most constrained end, mobile-class processors with dedicated AI accelerators (Apple Neural Engine, Qualcomm Hexagon, Google Tensor) in the middle, and high-capability edge devices at the upper end. That chapter focused on inference capabilities: the computational throughput, memory bandwidth, and energy efficiency achievable when executing pre-trained models.

Training workloads exhibit fundamentally different computational characteristics that reshape hardware utilization patterns. On-device learning must operate within severely constrained computational envelopes that fall short of cloud-based training infrastructure by factors of hundreds or thousands in raw computational capacity.

Three differences stand out: backpropagation requires significantly higher memory bandwidth than inference due to gradient computation and activation caching, weight updates create write-heavy access patterns unlike inference’s read-only operations, and optimizer state management demands additional memory allocation that inference never encounters. These training-specific demands mean that hardware perfectly adequate for inference may prove entirely inadequate for adaptation, even when updating only a small parameter subset.

At the most constrained end of the spectrum, devices such as the STM32F410 or ESP3211 microcontrollers offer only a few hundred kilobytes of SRAM and little or no hardware support for floating-point operations (Warden and Situnayake 2020), the extreme end of the edge hardware limitations described in Chapter 11: AI Acceleration. Such severe limitations preclude the use of conventional deep learning libraries and require models to be meticulously designed for integer arithmetic and minimal runtime memory allocation. In these environments, even apparently simple models require highly specialized techniques, including quantization-aware training12 and selective parameter updates, to execute training loops without exceeding memory or power budgets.

10 STM32F4 Microcontroller Reality: The STM32F4 represents the harsh reality of embedded computing: 192 KB SRAM (roughly the size of a small JPEG image) and 1 MB flash storage, running at 168 MHz with only a single-precision floating-point unit. Arithmetic the hardware does not support must be emulated in software, which can run 10-100\(\times\) slower than on the dedicated units found in mobile chips. Power consumption is ~100 mW during active processing, requiring careful duty-cycling to preserve battery life. These constraints make even simple neural networks challenging: a 10-neuron hidden layer fed by 1,000 inputs requires ~40 KB for weights alone in FP32.

11 ESP32 Edge Computing: The ESP32 provides 520 KB SRAM and dual-core processing at 240 MHz, making it more capable than STM32F4 but still severely constrained. Its key advantage is built-in WiFi and Bluetooth for federated learning scenarios. However, its limited floating-point throughput and small memory mean that ML workloads typically rely on integer quantization. Real-world deployments show 8-bit quantized models can achieve 95% of FP32 accuracy while fitting in ~50 KB memory, enabling basic on-device training for simple tasks like sensor anomaly detection.

12 Quantization-Aware Training: Unlike post-training quantization which converts trained FP32 models to INT8, quantization-aware training simulates low-precision arithmetic during training itself. This allows the model to learn robust representations despite reduced precision. Critical for edge devices where INT8 operations consume \(4\times\) less power and enable \(4\times\) faster inference compared to FP32, while maintaining 95-99% of original accuracy.
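Simulating low-precision arithmetic during training is usually done with a quantize-then-dequantize step applied to weights in the forward pass. The sketch below shows one such step using symmetric per-tensor quantization, which is an assumed scheme chosen for simplicity; the helper name `fake_quantize` is hypothetical, and gradient handling (commonly a straight-through estimator) is omitted.

```python
from typing import List, Sequence


def fake_quantize(values: Sequence[float], num_bits: int = 8) -> List[float]:
    """Round values to a signed integer grid, then map back to floats."""
    qmax = 2 ** (num_bits - 1) - 1           # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0.0 for _ in values]
    scale = max_abs / qmax                   # float value of one integer step
    quantized = []
    for v in values:
        q = round(v / scale)                 # nearest integer level
        q = max(-qmax, min(qmax, q))         # clamp to the representable range
        quantized.append(q * scale)          # dequantize for the forward pass
    return quantized


weights = [0.5, -1.0, 0.25, 0.0]
print(fake_quantize(weights))
```

Each returned value differs from the original by at most half a quantization step, so the network sees, during training, the same rounding error it will encounter once deployed in INT8.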

13 Stochastic Gradient Descent (SGD): The fundamental optimization algorithm for neural networks, updating parameters using gradients computed on small batches (or single samples). Unlike full-batch gradient descent, SGD’s randomness helps escape local minima while requiring minimal memory, storing only current parameters and gradients. This simplicity makes SGD ideal for microcontrollers where advanced optimizers like Adam would exceed memory budgets.

The practical implications are stark: while the STM32F4 microcontroller can run a simple linear regression model with a few hundred parameters, training even a small convolutional neural network would immediately exceed its memory capacity. In these severely constrained environments, training is often limited to simple algorithms such as stochastic gradient descent (SGD)13 or \(k\)-means clustering, which can be implemented using integer arithmetic and minimal memory overhead, representing a fundamental departure from modern machine learning practice.
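To show how little state single-sample SGD needs, the sketch below trains a linear model one example at a time, keeping only the weight list and the bias in memory. It uses floating-point arithmetic for readability, whereas a microcontroller implementation would typically use fixed-point integers; the target function, learning rate, and step count are made-up values for illustration.

```python
import random
from typing import List, Sequence


def sgd_step(weights: List[float], bias: float,
             x: Sequence[float], y: float, lr: float) -> float:
    """One squared-error SGD update; mutates weights in place, returns new bias."""
    pred = bias + sum(w * xi for w, xi in zip(weights, x))
    err = pred - y
    for i, xi in enumerate(x):
        weights[i] -= lr * err * xi  # gradient of 0.5 * err**2 w.r.t. weights[i]
    return bias - lr * err


rng = random.Random(0)
weights, bias = [0.0, 0.0], 0.0
for _ in range(2000):
    x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
    y = 2.0 * x[0] - 1.0 * x[1] + 0.5  # synthetic, noise-free target
    bias = sgd_step(weights, bias, x, y, lr=0.1)

print(weights, bias)  # approaches [2.0, -1.0] and 0.5
```

No sample is stored after its update, which is what makes this style of training compatible with streaming sensor data and kilobyte-scale memory.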

Moving up the computational hierarchy, mobile-class hardware represents a significant improvement but still operates under substantial constraints. Platforms including the Qualcomm Snapdragon, Apple Neural Engine14, and Google Tensor SoC15 provide significantly more compute power than microcontrollers, often featuring dedicated AI accelerators and optimized support for 8-bit or mixed-precision16 matrix operations. These accelerators, their capabilities, and their programming models are detailed in Chapter 11: AI Acceleration. While these platforms can support more sophisticated training routines, including full backpropagation over compact models, they still fall dramatically short of the computational throughput and memory bandwidth available in centralized data centers. For instance, training a lightweight transformer17 on a smartphone is technically feasible but must be tightly bounded in both time and energy consumption to avoid degrading the user experience, highlighting the persistent tension between learning capabilities and practical deployment constraints.

14 Apple Neural Engine Evolution: Apple’s Neural Engine has evolved dramatically since the A11 Bionic. The A17 Pro (2023) features a 16-core Neural Engine delivering 35 TOPS, roughly equivalent to an NVIDIA GTX 1080 Ti. This represents a 58\(\times\) improvement over the original A11. The Neural Engine specializes in matrix operations with dedicated 8-bit and 16-bit arithmetic units, enabling efficient on-device training. Real-world performance: fine-tuning a MobileNet classifier takes ~2 seconds versus 45 seconds on CPU alone, while consuming only ~500 mW additional power.

15 Google Tensor SoC Architecture: Google’s Tensor chips (starting with Pixel 6 in 2021) feature a custom TPU v1-derived Edge TPU optimized for ML workloads. Unlike Apple’s Neural Engine, Tensor optimizes for Google’s specific models (speech recognition, computational photography). The TPU provides efficient 8-bit integer operations while consuming only 2 W, making it highly efficient for federated learning scenarios where devices train locally on speech or image data.

16 Mixed-Precision Training: Uses different numerical precisions for different operations, typically FP16 for forward/backward passes and FP32 for parameter updates. This halves memory usage and doubles throughput on modern hardware with Tensor Cores, while maintaining training stability through automatic loss scaling. Mobile implementations often use INT8 for inference and FP16 for gradient computation, balancing accuracy with hardware constraints.

17 Lightweight Transformers: Mobile-optimized transformer architectures like MobileBERT (Sun et al. 2020) and DistilBERT (Sanh et al. 2019) achieve 4-6\(\times\) speedup over full models through techniques like knowledge distillation, layer reduction, and attention head pruning. MobileBERT retains 97% of BERT-base accuracy while running inference in ~40 ms on mobile CPUs versus 160 ms for full BERT. Key optimizations include bottleneck attention mechanisms and specialized mobile-friendly layer configurations.

These computational limitations become especially acute in real-time or battery-operated systems, where specific latency budgets create hard architectural constraints. Camera applications processing at 30 FPS cannot exceed 33 ms per frame, voice interfaces require rapid response times for natural interaction, AR/VR systems demand sub-20 ms motion-to-photon latency to prevent user discomfort, and safety-critical control systems must respond within 10 ms to ensure operational safety. These quantitative constraints determine whether on-device learning is feasible or whether cloud-based alternatives become architecturally necessary. In a smartphone-based speech recognizer, on-device adaptation must coexist with primary inference workloads without interfering with response latency or system responsiveness. Similarly, in wearable medical monitors, training must occur opportunistically during carefully managed windows, typically during periods of low activity or charging, to preserve battery life and avoid thermal management issues.
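The per-frame budget follows from the frame rate, and whatever time inference leaves unused is the most that background adaptation could claim without missing a deadline. The sketch below works through that arithmetic; the 25 ms and 40 ms inference times are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame at a given frame rate."""
    return 1000.0 / fps


def training_slack_ms(fps: float, inference_ms: float) -> float:
    """Per-frame time left for adaptation after inference, never negative."""
    return max(0.0, frame_budget_ms(fps) - inference_ms)


print(round(frame_budget_ms(30), 2))          # 33.33
print(round(training_slack_ms(30, 25.0), 2))  # 8.33
print(training_slack_ms(30, 40.0))            # 0.0, inference already misses the deadline
```

A slack of a few milliseconds per frame is rarely enough for a full backward pass, which is one reason adaptation is usually deferred to idle periods rather than interleaved with real-time inference.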

Beyond raw computational capacity, the architectural implications of these hardware constraints extend into fundamental system design choices. Training operations exhibit fundamentally different memory access patterns than inference workloads: backpropagation requires several times higher memory bandwidth due to gradient computation and activation caching, creating bottlenecks that pure computational metrics do not capture. Modern edge accelerators attempt to address these challenges through increasingly specialized hardware features. Adaptive precision datapaths allow dynamic switching between INT4 for forward passes and FP16 for gradient computation, optimizing both accuracy and efficiency within power budgets. Sparse computation units accelerate selective parameter updates by skipping zero gradients, a capability critical for efficient bias-only and LoRA adaptations. Near-memory compute architectures18 reduce data movement costs by performing gradient updates directly adjacent to weight storage, addressing the memory bandwidth bottleneck. However, most current edge accelerators remain fundamentally optimized for inference workloads, creating significant hardware-software co-design opportunities for future on-device training accelerators designed to handle the unique demands of local adaptation.

18 Near-Memory Computing: Places processing units directly adjacent to or within memory arrays, dramatically reducing data movement costs. Traditional von Neumann architectures spend 100-1000\(\times\) more energy moving data than computing on it. Near-memory designs can perform matrix operations with 10-100\(\times\) better energy efficiency by eliminating costly memory bus transfers. Critical for edge training where gradient computations require intensive memory access patterns that overwhelm traditional cache hierarchies.

Edge Hardware Integration Challenges

Beyond the individual constraints of models, data, and computation, on-device learning systems must navigate the complex interactions between these elements and the underlying physics of mobile computing: power dissipation, thermal limits, and energy budgets. These physical constraints are not mere engineering details; they are fundamental design drivers that determine the entire feasible space of on-device learning algorithms. Understanding these quantitative constraints enables informed design decisions that balance learning capabilities with long-term system sustainability and user acceptance.

Energy and Thermal Constraint Analysis

Energy and thermal management represent perhaps the most challenging aspect of on-device learning system design, as they directly impact user experience and device longevity. Mobile devices operate under strict power budgets that fundamentally determine feasible model complexity and training schedules. The thermal design power (TDP) of mobile processors creates hard constraints that shape every aspect of on-device learning strategies. Modern smartphones typically maintain sustained processing at 2-3 W for ML workloads to prevent thermal discomfort, but can burst to 5-10 W for brief periods before thermal throttling dramatically reduces performance by 50% or more. This thermal cycling behavior forces training algorithms to operate in carefully managed burst modes, utilizing peak performance for only 10-30 seconds before backing off to sustainable power levels, a constraint that fundamentally changes how training algorithms must be designed.

The mobile power budget hierarchy reveals the tight constraints under which on-device learning must operate. Smartphone sustained processing is limited to 2-3 W to prevent user-noticeable heating and maintain acceptable battery life throughout the day. Peak training burst mode can reach 10 W, but this power level is sustainable for only 10-30 seconds before thermal throttling kicks in to protect the hardware. Dedicated neural processing units consume 0.5-2 W for AI workloads, offering optimized power efficiency compared to general-purpose processors. CPU-based AI processing requires 3-5 W and demands aggressive thermal management with duty cycling to prevent overheating, making it the least power-efficient option for sustained on-device learning.

The power characteristics of training workloads add constraints beyond raw computational capacity. Power consumption scales superlinearly with model size and training complexity, and training consumes 10-50\(\times\) more power than equivalent inference workloads. That training power divides roughly into gradient computation (40-70%), weight updates (20-30%), and increased data movement between levels of the memory hierarchy (10-30%). To maintain an acceptable user experience, mobile devices typically budget only 500-1000 mW for sustained ML training, which limits practical training sessions to 10-100 minutes daily under normal usage patterns. This constraint shifts the design priority from maximizing computational throughput to optimizing power efficiency, which requires co-optimizing algorithms and hardware utilization patterns.
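To get a feel for what this budget means in energy terms, the sketch below multiplies the quoted power range by the quoted session lengths. The 15 Wh battery capacity is an assumed round number used only to express the result as a fraction of a charge; it does not describe any particular phone.

```python
def daily_training_energy_wh(power_mw, minutes):
    """Energy in watt-hours for training at power_mw for the given minutes."""
    return (power_mw / 1000.0) * (minutes / 60.0)


low = daily_training_energy_wh(500, 10)      # lightest case quoted in the text
high = daily_training_energy_wh(1000, 100)   # heaviest case quoted in the text

BATTERY_WH = 15.0  # assumed capacity, illustrative only
share_low = low / BATTERY_WH
share_high = high / BATTERY_WH
print(f"{low:.2f}-{high:.2f} Wh per day, "
      f"{share_low:.1%}-{share_high:.1%} of an assumed {BATTERY_WH} Wh battery")
```

The spread between the two ends of the range is roughly a factor of twenty, which is why training schedules are usually tied to device state (charging, idle) rather than fixed in advance.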

The thermal management challenges extend far beyond simple power limits, creating complex dynamic constraints that vary with environmental conditions and usage patterns. Training workloads generate localized heat that can trigger protective throttling in specific processor cores or accelerator units, often in unpredictable ways that depend on ambient temperature and device design. Modern mobile SoCs implement sophisticated thermal management systems, including dynamic voltage and frequency scaling (DVFS)19, core migration between efficiency and performance clusters, and selective shutdown of non-essential processing units. Successfully deployed on-device learning systems must intimately integrate with these thermal management frameworks, intelligently scheduling training bursts during optimal thermal windows and gracefully degrading performance when thermal limits are approached, rather than simply failing or causing user-visible performance problems.

19 Dynamic Voltage and Frequency Scaling (DVFS): Modern mobile processors continuously adjust operating voltage and clock frequency based on workload and thermal conditions. During ML training, DVFS can reduce clock speeds by 30-50% when temperature exceeds 70°C, directly impacting training throughput. Effective on-device learning systems monitor thermal state and proactively reduce batch sizes or training intensity to maintain consistent performance rather than experiencing sudden throttling events.
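One way to act on thermal state before throttling occurs is to scale the mini-batch size with the remaining thermal headroom. The sketch below is a hypothetical policy, not a platform API: the temperature is passed in as a plain number because reading it is platform-specific, the 70°C hard limit follows the figure quoted above, and the 60°C soft limit and linear ramp are assumptions chosen for illustration.

```python
def thermal_batch_size(temp_c, base_batch, soft_limit_c=60.0, hard_limit_c=70.0):
    """Return the batch size to use at temp_c, or 0 to pause training.

    Below the soft limit the full batch is used. Between the soft and hard
    limits the batch shrinks linearly with the remaining headroom. At or above
    the hard limit training is paused.
    """
    if temp_c >= hard_limit_c:
        return 0
    if temp_c <= soft_limit_c:
        return base_batch
    headroom = (hard_limit_c - temp_c) / (hard_limit_c - soft_limit_c)
    return max(1, int(base_batch * headroom))


for temp in (50.0, 65.0, 69.9, 70.0):
    print(temp, thermal_batch_size(temp, base_batch=32))
```

A smaller batch lowers the work done per step, so power draw falls gradually instead of dropping abruptly when the SoC throttles.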

Memory Hierarchy Optimization

Complementing the thermal and power challenges, memory hierarchy constraints create another fundamental bottleneck that shapes on-device learning system design. As established in the constraint amplification analysis above, these limitations affect both static model storage and the dynamic memory requirements during training, often pushing systems beyond their practical limits.

The device memory hierarchy spans several orders of magnitude across different device classes, each presenting distinct constraints for on-device learning. The iPhone 15 Pro provides 8 GB total system memory, but only approximately 2-4 GB remains available for application workloads after accounting for operating system requirements and background processes. Budget Android devices operate with 4 GB total system memory, leaving just 1-2 GB available for ML workloads after OS overhead consumes significant resources. IoT embedded systems provide 64 MB-1 GB total memory that must be shared between system tasks and application data, creating severe constraints for any learning algorithms. Microcontrollers offer only 256 KB-2 MB SRAM, requiring extreme optimization and careful memory management that fundamentally limits the complexity of models that can adapt on such platforms.

Memory expansion during training is often what determines whether a system is feasible at all. Standard backpropagation caches the intermediate activations of each layer during the forward pass and reuses them for gradient computation in the backward pass, which creates substantial memory overhead. A MobileNetV2 model that needs just 14 MB for inference grows to 50-70 MB during training, which can exceed the memory a constrained device is able to devote to training and makes training impossible there without aggressive optimization. Closing this gap calls for model compression techniques whose savings compound multiplicatively: INT8 quantization provides \(4\times\) memory reduction, structured pruning achieves \(10\times\) parameter reduction, and knowledge distillation enables \(5\times\) model size reduction while keeping accuracy within 2-5% of the original model. These techniques must be combined carefully to reach the compression ratios that practical deployment requires.
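The jump from 14 MB to 50-70 MB can be reproduced with a rough accounting of what training keeps in memory. This is a minimal sketch under stated assumptions: FP32 values, roughly 3.5 million parameters for MobileNetV2 (which matches the 14 MB inference figure), and a cached-activation size of 30 MB that is purely illustrative, since the real value depends on batch size and input resolution.

```python
BYTES_FP32 = 4


def training_memory_mb(num_params, activation_mb, optimizer_slots=0,
                       bytes_per_value=BYTES_FP32):
    """Weights + gradients + optimizer state + cached activations, in MB (10^6 bytes).

    optimizer_slots is the number of extra per-parameter buffers the optimizer
    keeps: 0 for plain SGD, 1 for SGD with momentum, 2 for Adam-style optimizers.
    """
    weights_mb = num_params * bytes_per_value / 1e6
    grads_mb = weights_mb                      # one gradient per weight
    optimizer_mb = optimizer_slots * weights_mb
    return weights_mb + grads_mb + optimizer_mb + activation_mb


params = 3_500_000                             # approximate MobileNetV2 size
inference_mb = params * BYTES_FP32 / 1e6       # weights only
sgd_mb = training_memory_mb(params, activation_mb=30.0)
momentum_mb = training_memory_mb(params, activation_mb=30.0, optimizer_slots=1)
print(inference_mb, sgd_mb, momentum_mb)
```

Even with plain SGD, gradients double the parameter memory before any activations are counted, and each optimizer buffer adds another full copy.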

Given these memory constraints, cache optimization becomes critical for acceptable performance. Modern mobile SoCs have multi-level memory hierarchies, with L1 cache (32-64 KB), L2 cache (1-8 MB), and system memory (4-16 GB), and latency differs by 10-100\(\times\) between levels. Training workloads whose working sets exceed cache capacity hit a performance cliff: memory bandwidth becomes the bottleneck and training can slow by orders of magnitude. Successful on-device learning systems therefore design data access patterns to maximize cache hit rates. Typical measures include memory layouts that group related parameters for spatial locality, mini-batches sized to fit within cache, and gradient accumulation strategies that minimize expensive memory bus traffic.
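Sizing a mini-batch against a cache level is simple integer arithmetic once a per-sample working set is known. The sketch below is illustrative only: the 4 MB L2 size sits inside the range quoted above, while the 96 KB per-sample footprint and the choice to reserve half the cache for weights and other data are hypothetical values that would need to be measured on a real model and device.

```python
def max_batch_in_cache(cache_bytes, bytes_per_sample, reserved_fraction=0.5):
    """Largest mini-batch whose per-sample working set fits in the usable cache.

    reserved_fraction is the share of the cache assumed to be occupied by
    weights, code, and other data that compete with the batch.
    """
    usable = int(cache_bytes * (1.0 - reserved_fraction))
    return usable // bytes_per_sample


L2_BYTES = 4 * 1024 * 1024   # 4 MB, within the 1-8 MB range in the text
PER_SAMPLE = 96 * 1024       # hypothetical input + activation footprint

batch = max_batch_in_cache(L2_BYTES, PER_SAMPLE)
print(batch)
```

When the resulting batch is smaller than the optimizer needs for stable updates, gradient accumulation over several cache-sized micro-batches recovers the larger effective batch without enlarging the working set.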

The memory bandwidth limitations become particularly acute during training. While inference workloads primarily read model weights sequentially, training requires bidirectional data flow for gradient computation and weight updates. This increased memory traffic can saturate the memory subsystem, creating bottlenecks that limit training throughput regardless of computational capacity. Advanced implementations employ techniques such as gradient checkpointing20 to trade computation for memory, and mixed-precision training to reduce bandwidth requirements while maintaining numerical stability.

20 Gradient Checkpointing: A memory optimization technique that trades computation for memory by recomputing intermediate activations during the backward pass instead of storing them. This can reduce memory requirements by 50-80% at the cost of 20-30% additional computation. Particularly valuable for on-device training where memory is more constrained than compute capacity, enabling training of larger models within fixed memory budgets.
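The memory and compute figures for gradient checkpointing follow from a simple counting argument. The sketch below models it under explicit assumptions: every layer produces activations of equal size, the network is split into equal segments with one checkpoint kept per segment, the backward pass costs twice the forward pass, and the final segment does not need to be recomputed because its activations are still live when the backward pass begins. It is a cost model, not a training implementation.

```python
def checkpoint_costs(num_layers, segment_len, backward_cost=2.0):
    """Return (memory_saving, compute_overhead) as fractions of the baseline.

    Memory is counted in units of one layer's activations; compute is counted
    in units of one layer's forward pass.
    """
    if num_layers % segment_len != 0:
        raise ValueError("segment_len must divide num_layers in this sketch")
    num_segments = num_layers // segment_len

    baseline_memory = num_layers                 # every activation cached
    # One checkpoint per segment, plus the activations of the one segment
    # currently being recomputed and back-propagated.
    checkpointed_memory = num_segments + segment_len
    memory_saving = 1.0 - checkpointed_memory / baseline_memory

    baseline_compute = num_layers * (1.0 + backward_cost)
    recomputed_layers = num_layers - segment_len  # all segments but the last
    compute_overhead = recomputed_layers / baseline_compute
    return memory_saving, compute_overhead


print(checkpoint_costs(16, 4))
print(checkpoint_costs(64, 8))
```

With segments of length close to the square root of the layer count, both examples land inside the ranges quoted above, and deeper networks save proportionally more memory.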

Mobile AI Accelerator Optimization

Different mobile platforms provide distinct acceleration capabilities that determine not only achievable model complexity but also feasible learning paradigms. The architectural differences between these accelerators fundamentally shape the design space for on-device training algorithms, influencing everything from numerical precision choices to gradient computation strategies.

Current generation mobile accelerators demonstrate remarkable diversity in their capabilities and optimization focus. Apple’s Neural Engine in the A17 Pro delivers 35 TOPS peak performance specialized for 8-bit and 16-bit operations, optimized primarily for CoreML inference patterns with limited training support, making it ideal for inference-heavy adaptation techniques. Qualcomm’s Hexagon DSP in the Snapdragon 8 Gen 3 achieves 45 TOPS with flexible precision support and programmable vector units, enabling mixed-precision training workflows that can adapt precision dynamically based on training phase and memory constraints. Google’s Tensor TPU in the Pixel 8 is optimized specifically for TensorFlow Lite operations with strong INT8 performance and tight integration with federated learning frameworks, reflecting Google’s strategic focus on distributed learning scenarios. The energy efficiency comparison reveals why dedicated neural processing units are essential: NPUs achieve 1-5 TOPS per watt versus general-purpose CPUs at just 0.1-0.2 TOPS per watt, representing a 5-50\(\times\) efficiency advantage that makes the difference between feasible and infeasible on-device training.
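The 5-50\(\times\) range comes from pairing the least favorable and most favorable ends of the two quoted efficiency ranges:

\[
\frac{1\ \text{TOPS/W}}{0.2\ \text{TOPS/W}} = 5\times,
\qquad
\frac{5\ \text{TOPS/W}}{0.1\ \text{TOPS/W}} = 50\times
\]

For a fixed amount of computation, energy is inversely proportional to efficiency, so the same ratio applies to the energy a training step draws from the battery.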

These accelerators determine not just raw performance but feasible learning paradigms and algorithmic approaches. Apple’s Neural Engine excels at fixed-precision inference workloads but provides limited support for the dynamic precision requirements of gradient computation, making it more suitable for inference-heavy adaptation techniques like few-shot learning. Qualcomm’s Hexagon DSP offers greater training flexibility through its programmable vector units and support for mixed-precision arithmetic, enabling more sophisticated on-device training including full backpropagation on compact models. Google’s Tensor TPU integrates tightly with federated learning frameworks and provides optimized communication primitives for distributed training scenarios.

The architectural implications extend beyond computational throughput to memory access patterns and data flow optimization. Training workloads exhibit fundamentally different characteristics than inference: gradient computation requires significantly higher memory bandwidth due to the amplification effects discussed above, weight updates create write-heavy access patterns, and optimizer state management demands additional memory allocation. Modern edge accelerators are beginning to address these challenges through specialized hardware features including adaptive precision datapaths that dynamically switch between INT4 for forward passes and FP16 for gradient computation, sparse computation units that accelerate selective parameter updates by skipping zero gradients, and near-memory compute architectures that reduce data movement costs by performing gradient updates directly adjacent to weight storage.

However, most current edge accelerators remain primarily optimized for inference workloads, creating a significant hardware-software co-design opportunity. Future on-device training accelerators will need to efficiently handle the unique demands of local adaptation, including support for dynamic precision scaling, efficient gradient accumulation, and specialized memory hierarchies optimized for the bidirectional data flow patterns characteristic of training workloads. Architecture selection influences everything from model quantization strategies and gradient computation approaches to federated communication protocols and thermal management policies.

Holistic Resource Management Strategies

The constraint analysis above reveals three fundamental challenge categories that define the on-device learning design space. Each constraint category directly drives a corresponding solution pillar, creating a systematic engineering approach to this complex systems problem. The constraint-to-solution mapping follows naturally from understanding how specific limitations necessitate particular technical responses.

The resource amplification effects—where training increases memory requirements by 3-10\(\times\), computational costs by 2-3\(\times\), and energy consumption proportionally—directly necessitate Model Adaptation approaches. When traditional training becomes impossible due to resource constraints, systems must fundamentally reduce the scope of parameter updates while preserving learning capability.

The information scarcity constraints—limited local datasets, non-IID distributions, privacy restrictions on data sharing, and minimal supervision—directly drive Data Efficiency solutions. When conventional data-hungry approaches fail due to insufficient local information, systems must extract maximum learning signal from minimal examples.

The coordination challenges—device heterogeneity, intermittent connectivity, distributed validation complexity, and scalability requirements—directly motivate Federated Coordination mechanisms. When isolated on-device learning limits collective intelligence, systems must enable privacy-preserving collaboration across device populations.

This constraint-to-solution mapping, illustrated in Table 2, creates a systematic engineering framework where each pillar addresses specific aspects of the deployment challenge while integrating with the others. Rather than viewing these as independent techniques, successful on-device learning systems orchestrate all three approaches to create coherent adaptive systems that operate effectively within edge constraints.

| Constraint Category | Key Challenges | Solution Approach |
|---|---|---|
| Resource Amplification | Training workloads (3-10× memory); memory limitations; power constraints | Model Adaptation: parameter-efficient updates; selective layer fine-tuning; low-rank adaptations |
| Information Scarcity | Limited local datasets; non-IID distributions; privacy restrictions | Data Efficiency: few-shot learning; meta-learning; transfer learning |
| Coordination Challenges | Device heterogeneity; intermittent connectivity; distributed validation | Federated Coordination: privacy-preserving aggregation; robust communication protocols; asynchronous participation |

The subsequent sections examine each solution pillar systematically, building on the optimization principles from Chapter 10: Model Optimizations and the distributed systems frameworks from Chapter 13: ML Operations. Each pillar provides essential capabilities that the others cannot deliver alone, but their integration creates systems capable of meaningful adaptation within the severe constraints of edge deployment environments.

Model Adaptation

The computational and memory constraints outlined above create a challenging environment for model training, but they also reveal clear solution pathways when approached systematically. Model adaptation represents the first pillar of on-device learning systems engineering: reducing the scope of parameter updates to make training feasible within edge constraints while maintaining sufficient model expressivity for meaningful personalization.
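How much reducing the scope of updates can save is easy to see for a single dense layer. The sketch below compares a full weight update with a low-rank update of the form \(W + AB\), one of the parameter-efficient approaches named in the solution mapping. The layer dimensions and rank are arbitrary illustrative values, not figures from any specific model.

```python
def full_update_params(d_in, d_out):
    """Trainable values when every weight of a d_in x d_out layer is updated."""
    return d_in * d_out


def low_rank_update_params(d_in, d_out, rank):
    """Trainable values for an update A @ B with A: d_in x rank, B: rank x d_out."""
    return rank * (d_in + d_out)


full = full_update_params(1024, 1024)
low = low_rank_update_params(1024, 1024, rank=8)
print(full, low, full / low)
```

Because gradients and optimizer state are only kept for trainable values, shrinking the trainable set reduces training memory as well as the compute spent on weight updates.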

The engineering challenge centers on navigating a fundamental trade-off space: adaptation expressivity versus resource consumption. At one extreme, updating all parameters provides maximum flexibility but exceeds edge device capabilities. At the other extreme, no adaptation preserves resources but fails to capture user-specific patterns. Effective on-device learning systems must operate in the middle ground, selecting adaptation strategies based on three key engineering criteria.