AI Frameworks

Purpose

Why do machine learning frameworks represent the critical abstraction layer that determines system scalability, development velocity, and architectural flexibility in production AI systems?

Machine learning frameworks bridge theory and practice: they turn abstract mathematical formulations into efficient, executable code while providing standardized interfaces for hardware acceleration, distributed computing, and model deployment. Without frameworks, every ML project would require reimplementing core operations like automatic differentiation and parallel computation, making large-scale development economically infeasible. This abstraction layer enables two crucial capabilities: development acceleration through pre-optimized implementations and hardware portability across CPUs, GPUs, and specialized accelerators. Framework selection therefore becomes one of the most consequential engineering decisions, determining system architecture constraints, performance characteristics, and deployment flexibility throughout the development lifecycle.

Trace the evolutionary progression of ML frameworks from numerical computing libraries through deep learning platforms to specialized deployment variants

Explain the architecture and implementation of computational graphs, automatic differentiation, and tensor operations in modern frameworks

Compare static and dynamic execution models by analyzing their trade-offs in development flexibility, debugging capabilities, and production optimization

Analyze the design philosophies underlying major frameworks (research-first, production-first, functional programming) and their impact on system architecture

Evaluate framework selection criteria by systematically assessing model requirements, hardware constraints, and deployment contexts

Design framework selection strategies for specific deployment scenarios including cloud, edge, mobile, and microcontroller environments

Critique common framework selection fallacies and assess their impact on system performance and maintainability

Framework Abstraction and Necessity

The transformation of raw computational primitives into machine learning systems represents one of the most significant engineering challenges in modern computer science. Building upon the data pipelines established in the previous chapter, this chapter examines the software infrastructure that enables the efficient implementation of machine learning algorithms across diverse computational architectures. While the mathematical foundations of machine learning (linear algebra operations, optimization algorithms, and gradient computations) are well-established, their efficient realization in production systems demands software abstractions that bridge theoretical formulations with practical implementation constraints.

The computational complexity of modern machine learning algorithms illustrates the necessity of these abstractions. Training a contemporary language model involves orchestrating billions of floating-point operations across distributed hardware configurations, requiring precise coordination of memory hierarchies, communication protocols, and numerical precision management. Each algorithmic component, from forward propagation through backpropagation, must be decomposed into elementary operations that can be mapped to heterogeneous processing units while maintaining numerical stability and computational reproducibility. The engineering complexity of implementing these systems from basic computational primitives would render large-scale machine learning development economically prohibitive for most organizations.

This complexity becomes immediately apparent when considering specific implementation challenges. Implementing backpropagation for a simple 3-layer multilayer perceptron manually requires hundreds of lines of careful calculus and matrix manipulation code. A modern framework accomplishes this in a single line: loss.backward(). Frameworks don’t just make machine learning easier; they make modern deep learning possible by managing the complexity of gradient computation, hardware optimization, and distributed execution across millions of parameters.
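To make the contrast concrete, the following is a minimal sketch in plain Python (no framework) of hand-written backpropagation for a tiny network with one hidden layer of tanh units and a squared-error loss. The network size, the function names `forward`, `manual_backward`, and `numeric_grad_w1`, and the example values are all illustrative assumptions. The finite-difference check is included only to confirm the hand-derived calculus, which is exactly the kind of bookkeeping a framework's automatic differentiation takes over.

```python
import math

def forward(w1, w2, x, y):
    """h_j = tanh(w1[j] * x), pred = sum_j w2[j] * h_j, loss = (pred - y)^2."""
    h = [math.tanh(w * x) for w in w1]
    pred = sum(v * hj for v, hj in zip(w2, h))
    loss = (pred - y) ** 2
    return h, pred, loss

def manual_backward(w1, w2, x, y):
    """Hand-derived gradients via the chain rule."""
    h, pred, _ = forward(w1, w2, x, y)
    dpred = 2.0 * (pred - y)                  # dL/dpred
    grad_w2 = [dpred * hj for hj in h]        # dL/dw2[j] = dL/dpred * h_j
    dh = [dpred * v for v in w2]              # dL/dh_j
    grad_w1 = [dhj * (1.0 - hj ** 2) * x      # tanh'(z) = 1 - tanh(z)^2
               for dhj, hj in zip(dh, h)]
    return grad_w1, grad_w2

def numeric_grad_w1(w1, w2, x, y, j, eps=1e-6):
    """Central finite difference for dL/dw1[j]; used only as a check."""
    up = list(w1)
    up[j] += eps
    down = list(w1)
    down[j] -= eps
    return (forward(up, w2, x, y)[2] - forward(down, w2, x, y)[2]) / (2 * eps)

# Illustrative values, not taken from the text.
w1, w2, x, y = [0.5, -0.3], [0.8, 0.1], 1.5, 0.2
grad_w1, grad_w2 = manual_backward(w1, w2, x, y)
max_err = max(abs(grad_w1[j] - numeric_grad_w1(w1, w2, x, y, j))
              for j in range(len(w1)))
print("grad_w1:", grad_w1)
print("grad_w2:", grad_w2)
print("max deviation from finite differences:", max_err)
```

Every new layer type or loss function would require deriving and testing another set of such expressions by hand, which is why the effort grows quickly with model size.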

Machine learning frameworks constitute the essential software infrastructure that mediates between high-level algorithmic specifications and low-level computational implementations. These platforms address the core abstraction problem in computational machine learning: enabling algorithmic expressiveness while maintaining computational efficiency across diverse hardware architectures. By providing standardized computational graphs, automatic differentiation engines, and optimized operator libraries, frameworks enable researchers and practitioners to focus on algorithmic innovation rather than implementation details. This abstraction layer has proven instrumental in accelerating both research discovery and industrial deployment of machine learning systems.

The evolutionary trajectory of machine learning frameworks reflects the broader maturation of the field from experimental research to industrial-scale deployment. Early computational frameworks addressed primarily the efficient expression of mathematical operations, focusing on optimizing linear algebra primitives and gradient computations. Contemporary platforms have expanded their scope to encompass the complete machine learning development lifecycle, integrating data preprocessing pipelines, distributed training orchestration, model versioning systems, and production deployment infrastructure. This architectural evolution demonstrates the field’s recognition that sustainable machine learning systems require engineering solutions that address not merely algorithmic performance, but operational concerns including scalability, reliability, maintainability, and reproducibility.

The architectural design decisions embedded within these frameworks exert profound influence on the characteristics and capabilities of machine learning systems built upon them. Design choices regarding computational graph representation, memory management strategies, parallelization schemes, and hardware abstraction layers directly determine system performance, scalability limits, and deployment flexibility. These architectural constraints propagate through every development phase, from initial research prototyping through production optimization, establishing the boundaries within which algorithmic innovations can be practically realized.

This chapter examines machine learning frameworks as both software engineering artifacts and enablers of contemporary artificial intelligence systems. We analyze the architectural principles governing these platforms, investigate the trade-offs that shape their design, and examine their role within the broader ecosystem of machine learning infrastructure. Through systematic study of framework evolution, architectural patterns, and implementation strategies, students will develop the technical understanding necessary to make informed framework selection decisions and effectively leverage these abstractions in the design and implementation of production machine learning systems.

Historical Development Trajectory

To appreciate how modern frameworks achieved these capabilities, we can trace how they evolved from simple mathematical libraries into today’s platforms. The evolution of machine learning frameworks mirrors the broader development of artificial intelligence and computational capabilities, driven by three key factors: growing model complexity, increasing dataset sizes, and diversifying hardware architectures.

These driving forces shaped distinct evolutionary phases that reflect both technological advances and changing requirements of the AI community. This section explores how frameworks progressed from early numerical computing libraries to modern deep learning frameworks. This evolution builds upon the historical context of AI development introduced in Chapter 1: Introduction and demonstrates how software infrastructure has enabled the practical realization of the theoretical advances in machine learning.

Chronological Framework Development

The development of machine learning frameworks has been built upon decades of foundational work in computational libraries. From the early building blocks of BLAS and LAPACK to modern frameworks like TensorFlow, PyTorch, and JAX, this journey represents a steady progression toward higher-level abstractions that make machine learning more accessible and powerful.

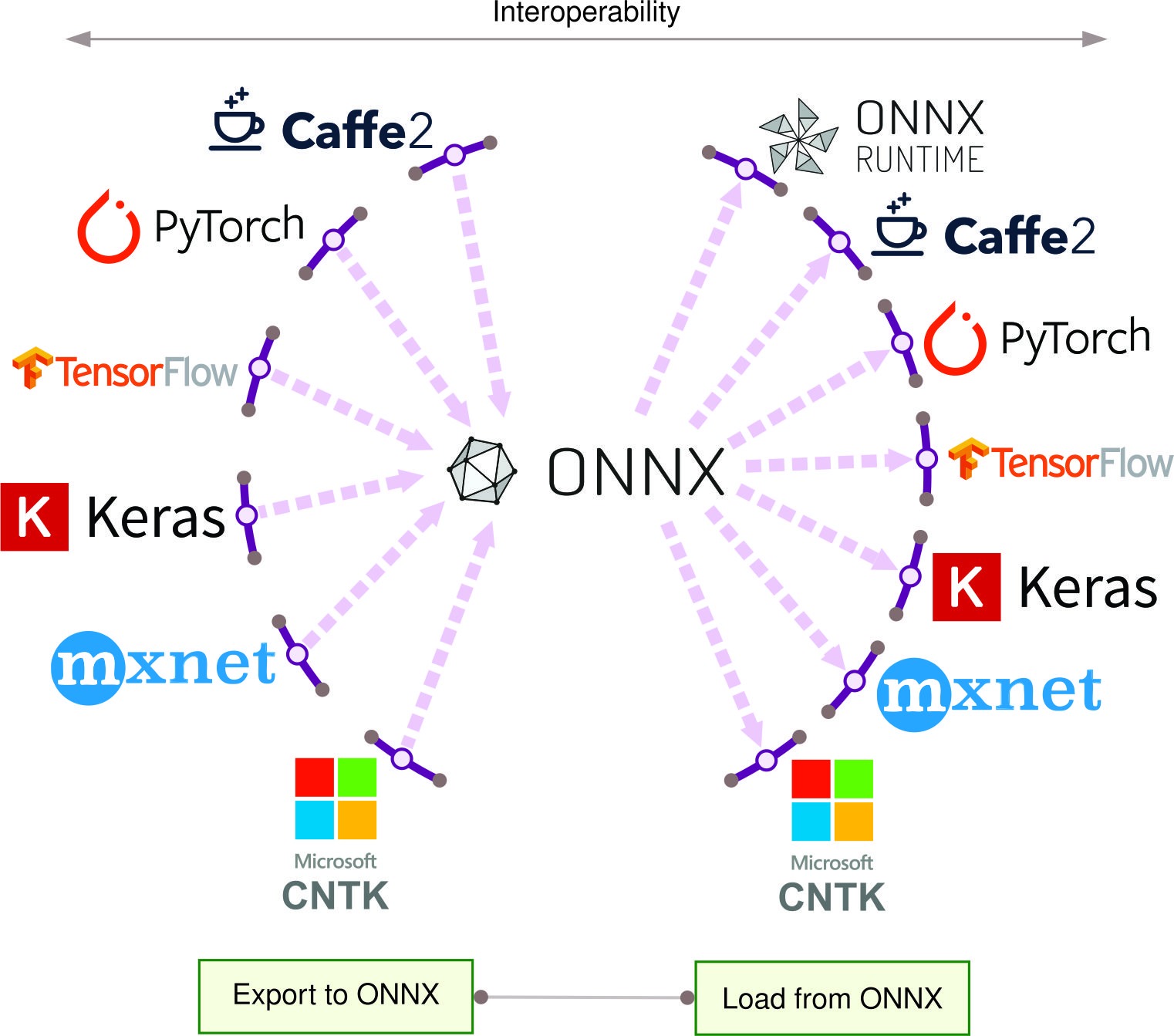

The development trajectory becomes clear when examining the relationships between these foundational technologies. Looking at Figure 1, we can trace how these numerical computing libraries laid the groundwork for modern ML development. The mathematical foundations established by BLAS and LAPACK enabled the creation of more user-friendly tools like NumPy and SciPy, which in turn set the stage for today’s deep learning frameworks.

This progression demonstrates how frameworks achieve their capabilities through incremental innovation, building computational accessibility upon foundations established by their predecessors.

Foundational Mathematical Computing Infrastructure

The foundation for modern ML frameworks begins at the core level of computation: matrix operations. Machine learning computations are primarily matrix-matrix and matrix-vector multiplications because neural networks process data through linear transformations1 applied to multidimensional arrays. The Basic Linear Algebra Subprograms (BLAS)2, developed in 1979, provided these essential matrix operations that would become the computational backbone of machine learning (Kung and Leiserson 1979). These low-level operations, when combined and executed, enable the complex calculations required for training neural networks and other ML models.

1 Linear Transformations: Mathematical operations that preserve vector addition and scalar multiplication, typically implemented as matrix multiplication in neural networks. Each layer applies a learned linear transformation (weights matrix) followed by a non-linear activation function (like ReLU or sigmoid), enabling networks to learn complex patterns from simple mathematical building blocks.

2 BLAS (Basic Linear Algebra Subprograms): Originally developed at Argonne National Laboratory, BLAS became the de facto standard for linear algebra operations, with Level 1 (vector-vector), Level 2 (matrix-vector), and Level 3 (matrix-matrix) operations that still underpin every modern ML framework.

3 LAPACK (Linear Algebra Package): Succeeded LINPACK and EISPACK, introducing block algorithms that dramatically improved cache efficiency and parallel execution, innovations that became essential as datasets grew from megabytes to terabytes.

Building upon BLAS, the Linear Algebra Package (LAPACK)3 emerged in 1992, extending these capabilities with advanced linear algebra operations such as matrix decompositions, eigenvalue problems, and linear system solutions. This layered approach of building increasingly complex operations from basic matrix computations became a defining characteristic of ML frameworks.

This foundation of optimized linear algebra operations set the stage for higher-level abstractions that would make numerical computing more accessible. The development of NumPy in 2006 marked an important milestone in this evolution, building upon its predecessors Numeric and Numarray to become the primary package for numerical computation in Python. NumPy introduced n-dimensional array objects and essential mathematical functions, providing an efficient interface to these underlying BLAS and LAPACK operations. This abstraction allowed developers to work with high-level array operations while maintaining the performance of optimized low-level matrix computations.

The trend continued with SciPy, which built upon NumPy’s foundations to provide specialized functions for optimization, linear algebra, and signal processing, with its first stable release in 2008. This layered architecture, progressing from basic matrix operations to numerical computations, established the blueprint for future ML frameworks.

Early Machine Learning Platform Development

The next evolutionary phase was a conceptual leap from general numerical computing to dedicated machine learning tools. While the underlying computations remained rooted in matrix operations, frameworks began to encapsulate these operations into higher-level machine learning primitives. The University of Waikato introduced Weka in 1993 (Witten and Frank 2002), one of the earliest ML frameworks, which abstracted matrix operations into data mining tasks, though it was limited by its Java implementation and focus on smaller-scale computations.

This paradigm shift became evident with Scikit-learn, emerging in 2007 as a significant advancement in machine learning abstraction. Building upon the NumPy and SciPy foundation, it transformed basic matrix operations into intuitive ML algorithms. For example, what amounts to a series of matrix multiplications and gradient computations became a simple fit() method call in a logistic regression model. This abstraction pattern, hiding complex matrix operations behind clean APIs, would become a defining characteristic of modern ML frameworks.
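As an illustration of what such a `fit()` call can hide, here is a minimal sketch of logistic regression trained by batch gradient descent, written in plain Python. The class `TinyLogisticRegression`, its hyperparameters, and the toy data are hypothetical and chosen only for this example; this is not Scikit-learn's implementation, which relies on more sophisticated solvers.

```python
import math

class TinyLogisticRegression:
    """Hypothetical stand-in that shows the work a fit() call can encapsulate."""

    def __init__(self, lr=0.5, epochs=500):
        self.lr = lr
        self.epochs = epochs
        self.weights = []
        self.bias = 0.0

    def _prob(self, row):
        z = sum(w * v for w, v in zip(self.weights, row)) + self.bias
        return 1.0 / (1.0 + math.exp(-z))

    def fit(self, X, y):
        self.weights = [0.0] * len(X[0])
        n = len(X)
        for _ in range(self.epochs):
            grad_w = [0.0] * len(self.weights)
            grad_b = 0.0
            for row, target in zip(X, y):
                err = self._prob(row) - target   # gradient of log loss w.r.t. the logit
                for j, v in enumerate(row):
                    grad_w[j] += err * v / n
                grad_b += err / n
            self.weights = [w - self.lr * g for w, g in zip(self.weights, grad_w)]
            self.bias -= self.lr * grad_b
        return self

    def predict(self, X):
        return [1 if self._prob(row) >= 0.5 else 0 for row in X]

# Toy, linearly separable data (illustrative only).
X = [[0.0, 0.2], [0.3, 0.1], [0.9, 0.8], [1.0, 0.7]]
y = [0, 0, 1, 1]
model = TinyLogisticRegression().fit(X, y)
predictions = model.predict(X)
print(predictions)
```

From the caller's point of view, the loops, the gradient expressions, and the update rule all collapse into `model.fit(X, y)`, which is the abstraction pattern the paragraph above describes.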

Theano4, developed at the Montreal Institute for Learning Algorithms (MILA) and appearing in 2007, was a major advancement that introduced two revolutionary concepts: computational graphs5 and GPU acceleration (Team et al. 2016). Computational graphs represented mathematical operations as directed graphs, with matrix operations as nodes and data flowing between them. This graph-based approach allowed for automatic differentiation and optimization of the underlying matrix operations. More importantly, it enabled the framework to automatically route these operations to GPU hardware, dramatically accelerating matrix computations.
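The idea of a computational graph that supports automatic differentiation can be sketched in a few lines of plain Python. The `Node` class and `backward` function below are hypothetical names for this illustration. As a simplification, the sketch records the graph while computing values, whereas Theano built symbolic graphs before execution; the reverse traversal that applies the chain rule is the shared idea.

```python
class Node:
    """One vertex of a scalar computational graph."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents   # tuple of (parent_node, local_gradient) pairs
        self.grad = 0.0

    def __add__(self, other):
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Node(self.value * other.value,
                    ((self, other.value), (other, self.value)))

def backward(output):
    """Reverse-mode differentiation over the recorded graph."""
    order, seen = [], set()

    def visit(node):
        # Post-order depth-first search yields a topological ordering.
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        for parent, local_grad in node.parents:
            parent.grad += node.grad * local_grad   # chain rule along each edge

x, w, b = Node(3.0), Node(2.0), Node(1.0)
y = x * w + b            # graph: a multiply node feeding an add node
backward(y)
print(y.value, x.grad, w.grad, b.grad)
```

Because the graph is explicit data, the same structure that drives differentiation could also be inspected, rewritten, or dispatched to different hardware, which is what made the graph-based approach so influential.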

4 Theano: Named after the ancient Greek mathematician Theano of Croton, this framework pioneered the concept of symbolic mathematical expressions in Python, laying the groundwork for every modern deep learning framework.

5 Computational Graphs: First formalized in automatic differentiation literature by Wengert (1964), this representation became the backbone of modern ML frameworks, enabling both forward and reverse-mode differentiation at unprecedented scale.

6 Eager Execution: An execution model where operations are evaluated immediately as they are called, similar to standard Python execution. Pioneered by Torch in 2002, this approach prioritizes developer productivity and debugging ease over performance optimization, becoming the default mode in modern frameworks like PyTorch and TensorFlow 2.x.

A parallel development track emerged with Torch7 (the Lua-based predecessor to PyTorch), created at NYU in 2002, which took a different approach to handling matrix operations. It emphasized immediate execution of operations (eager execution6) and provided an adaptable interface for neural network implementations.

Torch’s design philosophy of prioritizing developer experience while maintaining high performance established design patterns that would later influence frameworks like PyTorch. Its architecture demonstrated how to balance high-level abstractions with efficient low-level matrix operations, introducing concepts that would prove crucial as deep learning complexity increased.

Deep Learning Computational Platform Innovation

The emergence of deep learning created computational demands that exposed the limitations of existing frameworks and required a major shift in how frameworks handled matrix operations. Three factors drove this shift: the massive scale of computations, the complexity of gradient calculations through deep networks, and the need for distributed processing. Traditional frameworks, designed for classical machine learning algorithms, could not handle the billions of matrix operations required for training deep neural networks.

This computational challenge sparked innovation in academic research environments, where the foundations for modern deep learning frameworks emerged. The University of Montreal’s Theano, released in 2007, established the concepts that would shape future frameworks (Bergstra et al. 2010): computational graphs for automatic differentiation and GPU acceleration, which together demonstrated how to organize and optimize complex neural network computations.

Caffe, released by UC Berkeley in 2013, advanced this evolution by introducing specialized implementations of convolutional operations (Jia et al. 2014). While convolutions are mathematically equivalent to specific patterns of matrix multiplication, Caffe optimized these patterns specifically for computer vision tasks, demonstrating how specialized matrix operation implementations could dramatically improve performance for specific network architectures.

The next breakthrough came from industry, where computational scale demands required new architectural approaches. Google’s TensorFlow7, introduced in 2015, revolutionized the field by treating matrix operations as part of a distributed computing problem (Dean et al. 2012). It represented all computations, from individual matrix multiplications to entire neural networks, as a static computational graph8 that could be split across multiple devices. This approach enabled training of unprecedented model sizes by distributing matrix operations across clusters of computers and specialized hardware. TensorFlow’s static graph approach, while initially constraining, allowed for aggressive optimization of matrix operations through techniques like kernel fusion9 (combining multiple operations into a single kernel for efficiency) and memory planning10 (pre-allocating memory for operations).
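The benefit of knowing the whole graph in advance can be sketched with a toy example in plain Python. The graph format, the `OPS` table, and the functions `run_unfused`, `fuse`, and `run_fused` are assumptions made for this illustration. Real frameworks fuse operations by generating device kernels rather than Python closures, so this is only an analogy for why fusion removes intermediate buffers.

```python
# A static "graph": the full operation sequence is declared before any data is seen.
GRAPH = [
    ("scale", 2.0),      # x * 2
    ("add_bias", 0.5),   # + 0.5
    ("relu", None),      # max(0, .)
]

OPS = {
    "scale": lambda v, c: v * c,
    "add_bias": lambda v, c: v + c,
    "relu": lambda v, c: max(0.0, v),
}

def run_unfused(graph, data):
    """One pass over memory per op; each op materializes a new buffer."""
    buffers_written = 0
    for name, const in graph:
        data = [OPS[name](v, const) for v in data]
        buffers_written += 1
    return data, buffers_written

def fuse(graph):
    """Combine the elementwise chain into a single per-element function."""
    def fused_kernel(v):
        for name, const in graph:
            v = OPS[name](v, const)
        return v
    return fused_kernel

def run_fused(graph, data):
    """One pass over memory in total; intermediates stay in a local variable."""
    kernel = fuse(graph)
    return [kernel(v) for v in data], 1

inputs = [-1.0, 0.0, 1.5]
unfused_out, unfused_buffers = run_unfused(GRAPH, inputs)
fused_out, fused_buffers = run_fused(GRAPH, inputs)
print(unfused_out, unfused_buffers)
print(fused_out, fused_buffers)
```

Both paths compute the same result, but the fused version writes one output buffer instead of three. This rewrite is only possible because the sequence of operations is known before execution, which is the trade-off the static graph approach makes.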

7 TensorFlow: Named after tensor operations flowing through computational graphs, this framework democratized distributed machine learning by open-sourcing Google’s internal DistBelief system, instantly giving researchers access to infrastructure that previously required massive corporate resources.

8 Static Computational Graph: A pre-defined computation structure where the entire model architecture is specified before execution, enabling global optimizations and efficient memory planning. Pioneered by TensorFlow 1.x, this approach sacrifices runtime flexibility for maximum performance optimization, making it ideal for production deployments.

9 Kernel Fusion: An optimization technique that combines multiple separate operations (like matrix multiplication followed by bias addition and activation) into a single GPU kernel, reducing memory bandwidth requirements by up to 10x and eliminating intermediate memory allocations. This optimization is particularly crucial for complex deep learning models with thousands of operations.

10 Memory Planning: A framework optimization that pre-analyzes computational graphs to determine optimal memory allocation strategies, enabling techniques like in-place operations and memory reuse patterns that can reduce peak memory usage by 40-60% during training.

The deep learning framework ecosystem continued to diversify as distinct organizations addressed specific computational challenges. Microsoft’s CNTK entered the field in 2016, bringing implementations for speech recognition and natural language processing tasks (Seide and Agarwal 2016). Its architecture emphasized scalability across distributed systems while maintaining efficient computation for sequence-based models.

Simultaneously, Facebook’s PyTorch11, also launched in 2016, took a radically different approach to handling matrix computations. Instead of static graphs, PyTorch introduced dynamic computational graphs that could be modified on the fly (Paszke et al. 2019). This dynamic approach, while potentially sacrificing optimization opportunities, made it easier for researchers to debug and analyze the flow of matrix operations in their models. PyTorch’s success demonstrated that, for research applications, the ability to introspect and modify computations dynamically was as important as raw performance.
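A dynamic graph can be pictured as a record of whatever operations actually ran for a given input. The sketch below, in plain Python, uses the hypothetical functions `run_and_record` and `grad_from_tape` to show a computation whose structure depends on the data. It is a conceptual illustration under these assumptions and does not reflect PyTorch's internals.

```python
def run_and_record(x, threshold=100.0):
    """Execute eagerly and record each operation as it happens (define-by-run)."""
    if x == 0:
        raise ValueError("x must be nonzero, otherwise the loop never terminates")
    tape = []                        # the recorded graph for this particular run
    value = x
    while abs(value) < threshold:    # ordinary Python control flow
        value = value * 3.0
        tape.append(("mul", 3.0))
    value = value + 1.0
    tape.append(("add", 1.0))
    return value, tape

def grad_from_tape(tape):
    """d(output)/d(input) for one run: the product of the recorded multipliers."""
    grad = 1.0
    for op, const in reversed(tape):
        if op == "mul":
            grad *= const
    return grad

out_small, tape_small = run_and_record(2.0)
out_large, tape_large = run_and_record(50.0)
print(out_small, len(tape_small), grad_from_tape(tape_small))
print(out_large, len(tape_large), grad_from_tape(tape_large))
```

The two calls produce tapes of different lengths because the loop runs a different number of times. Since the structure is only known at run time, a standard debugger can step through it, but a compiler has less information to optimize ahead of time.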

11 PyTorch: Inspired by the original Torch framework from NYU, PyTorch brought “define-by-run” semantics to Python, enabling researchers to modify models during execution, a breakthrough that accelerated research by making debugging as simple as using a standard Python debugger.

Framework development continued to expand with Amazon’s MXNet, which approached the challenge of large-scale matrix operations by focusing on memory efficiency and scalability across different hardware configurations. It introduced a hybrid approach that combined aspects of both static and dynamic graphs, enabling adaptable model development while maintaining aggressive optimization of the underlying matrix operations.

These diverse approaches revealed that no single solution could address all deep learning requirements, and as applications grew more diverse, the need for specialized, higher-level abstractions became apparent. Keras emerged in 2015 to address this need, providing a unified interface that could run on top of multiple lower-level frameworks (Chollet et al. 2015). This higher-level abstraction approach demonstrated how frameworks could focus on user experience while leveraging the computational power of existing systems.

12 JAX: Stands for “Just After eXecution” and combines NumPy’s API with functional programming transforms (jit, grad, vmap, pmap), enabling researchers to write concise code that automatically scales to TPUs and GPU clusters while maintaining NumPy compatibility.

Meanwhile, Google’s JAX12, introduced in 2018, brought functional programming principles to deep learning computations, enabling new patterns of model development (Bradbury et al. 2018). FastAI built upon PyTorch to package common deep learning patterns into reusable components, making advanced techniques more accessible to practitioners (Howard and Gugger 2020). These higher-level frameworks demonstrated how abstraction could simplify development while maintaining the performance benefits of their underlying implementations.

Hardware-Driven Framework Architecture Evolution

The evolution of frameworks has been inextricably linked to advances in computational hardware, which have reshaped how frameworks implement and optimize matrix operations. The introduction of NVIDIA’s CUDA platform13 in 2007 marked a critical moment in framework design by enabling general-purpose computing on GPUs (Nickolls et al. 2008). This was transformative because GPUs excel at parallel matrix operations, offering orders-of-magnitude speedups for the computations in deep learning. While a CPU might process matrix elements sequentially, a GPU can process thousands of elements simultaneously, significantly changing how frameworks approach computation scheduling.

13 CUDA (Compute Unified Device Architecture): NVIDIA’s parallel computing platform launched in 2007 that transformed ML by enabling general-purpose GPU computing. GPUs can execute thousands of threads simultaneously, providing 10-100x speedup for matrix operations compared to CPUs, fundamentally changing how ML frameworks approach computation scheduling.

Modern GPU architectures demonstrate quantifiable efficiency advantages for ML workloads. NVIDIA A100 GPUs provide 312 TFLOPS of tensor operations at FP16 precision with 1.6 TB/s memory bandwidth, compared to typical CPU configurations delivering 1-2 TFLOPS with 50-100 GB/s memory bandwidth. These hardware characteristics significantly change framework optimization strategies. Frameworks must design computational graphs that maximize GPU utilization by ensuring sufficient computational intensity (measured in FLOPs per byte transferred) to keep the compute units busy rather than leaving them stalled on memory bandwidth.

Memory bandwidth optimization becomes critical when frameworks target GPU acceleration. An operation’s ratio of memory traffic to computation (bytes per FLOP, the inverse of computational intensity), compared with what the hardware can sustain, determines whether the operation is compute-bound or memory-bound. Matrix multiplication operations with large dimensions (typically N×N where N > 1024) achieve high computational intensity and become compute-bound, enabling near-peak GPU utilization. However, element-wise operations like activation functions frequently become memory-bound, achieving only 10-20% of peak performance. Frameworks address this through operator fusion techniques, combining memory-bound operations into single kernels that reduce memory transfers.
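The compute-bound versus memory-bound distinction can be checked with back-of-the-envelope arithmetic. The sketch below uses the A100 figures quoted above and an idealized traffic model in which each matrix is read or written exactly once. That model, the function names, and the FP16 element size are assumptions for illustration; real kernels differ because of caching, tiling, and launch overheads.

```python
PEAK_FLOPS = 312e12        # A100 FP16 tensor throughput quoted in the text
PEAK_BANDWIDTH = 1.6e12    # bytes per second, as quoted in the text
BYTES_PER_ELEMENT = 2      # FP16

def ridge_point():
    """Computational intensity (FLOPs/byte) above which an op is compute-bound."""
    return PEAK_FLOPS / PEAK_BANDWIDTH

def matmul_intensity(n):
    """N x N matmul: 2*N^3 FLOPs; read A and B, write C once (idealized)."""
    flops = 2 * n ** 3
    bytes_moved = 3 * n * n * BYTES_PER_ELEMENT
    return flops / bytes_moved

def relu_intensity():
    """Elementwise ReLU: about one operation per element, one read and one write."""
    return 1 / (2 * BYTES_PER_ELEMENT)

def attainable_flops(intensity):
    """Roofline bound: the lower of peak compute and bandwidth * intensity."""
    return min(PEAK_FLOPS, PEAK_BANDWIDTH * intensity)

def classify(intensity):
    return "compute-bound" if intensity >= ridge_point() else "memory-bound"

for label, intensity in [("matmul N=256", matmul_intensity(256)),
                         ("matmul N=1024", matmul_intensity(1024)),
                         ("relu", relu_intensity())]:
    print(label, round(intensity, 2), classify(intensity))
```

Under these assumptions the matrix multiplication intensity grows as N/3, so it crosses the hardware's ridge point of roughly 195 FLOPs per byte only for large N, while an elementwise operation stays far below it regardless of tensor size.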

Beyond general GPU acceleration, the development of hardware-specific accelerators further revolutionized framework design. Google’s Tensor Processing Units (TPUs)14, first deployed in 2016, were purpose-built for tensor operations, the essential building blocks of deep learning computations. TPUs introduced systolic array15 architectures, which are particularly efficient for matrix multiplication and convolution operations. This hardware architecture prompted frameworks like TensorFlow to develop specialized compilation strategies that could map high-level operations directly to TPU instructions, bypassing traditional CPU-oriented optimizations.

14 TPU (Tensor Processing Unit): Google’s first-generation TPU (v1) achieved 15-30x better performance-per-watt than contemporary GPUs and CPUs for neural networks, proving that domain-specific architectures could outperform general-purpose processors for ML workloads.

15 Systolic Array: A specialized parallel computing architecture invented by H.T. Kung (CMU) and Charles Leiserson (MIT) in 1978, where data flows through a grid of processing elements in a rhythmic, pipeline fashion. Each element performs simple operations on data flowing from neighbors, making it exceptionally efficient for matrix operations, which form the heart of neural network computations.

TPU architecture demonstrates specialized efficiency gains through quantitative metrics. TPU v4 chips achieve 275 TFLOPS of BF16 compute with 1.2 TB/s memory bandwidth while consuming 200W power, delivering 1.375 TFLOPS/W power efficiency. This represents a 3-5x energy efficiency improvement over contemporary GPUs for large matrix operations. However, TPUs optimize specifically for dense matrix operations and show reduced efficiency for sparse computations or operations requiring complex control flow. Frameworks targeting TPUs must design computational graphs that maximize dense matrix operation usage while minimizing data movement between on-chip high-bandwidth memory (32 GB at 1.2 TB/s) and off-chip memory.

Mobile hardware accelerators, such as Apple’s Neural Engine (2017) and Qualcomm’s Neural Processing Units, brought new constraints and opportunities to framework design. These devices emphasized power efficiency over raw computational speed, requiring frameworks to develop new strategies for quantization and operator fusion. Mobile frameworks like TensorFlow Lite (more recently rebranded to LiteRT) and PyTorch Mobile needed to balance model accuracy with energy consumption, leading to innovations in how matrix operations are scheduled and executed.

Mobile accelerators demonstrate the critical importance of mixed-precision computation for energy efficiency. Apple’s Neural Engine in the A17 Pro chip provides 35 TOPS (trillion operations per second) of INT8 performance while consuming approximately 5W, achieving roughly 7 TOPS/W efficiency. This represents a 10-15x energy efficiency improvement over FP32 computation on the same chip. Frameworks targeting mobile hardware must provide automatic mixed-precision policies that determine optimal precision for each operation, balancing energy consumption against accuracy degradation.
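The accuracy side of this trade-off can be illustrated with a minimal sketch of symmetric INT8 quantization in plain Python. The functions `quantize_int8` and `dequantize` and the sample weights are hypothetical. Production toolchains typically add calibration data, per-channel scales, and zero points, none of which are modeled here.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: real value is approximately scale * code."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric INT8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]

weights = [0.82, -1.27, 0.003, 0.45, -0.61]   # illustrative values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("codes:", codes)
print("scale:", scale)
print("max round-trip error:", max_error)
```

The round-trip error is bounded by half the scale, so tensors with a wide value range lose more precision. This is one reason a per-operation precision policy, rather than a single global setting, is needed.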

Sparse computation frameworks address the memory bandwidth limitations of mobile hardware. Sparse neural networks can reduce memory traffic by 50-90% for networks with structured sparsity patterns, directly improving energy efficiency since memory access consumes 10-100x more energy than arithmetic operations on mobile processors. Frameworks like Neural Magic’s SparseML automatically generate sparse models that maintain accuracy while conforming to hardware sparsity support. Qualcomm’s Neural Processing SDK provides specialized kernels for 2:4 structured sparse operations, where 2 out of every 4 consecutive weights are zero, enabling 1.5-2x speedup with minimal accuracy loss.
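The 2:4 pattern described above can be sketched as a magnitude-based pruning pass in plain Python. The function `prune_2_of_4` and the sample weights are illustrative assumptions. The sketch is not the algorithm used by SparseML or the Qualcomm SDK, which would also typically fine-tune the model after pruning to recover accuracy.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude weights in each group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4 for 2:4 sparsity")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group are kept.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned

weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]   # illustrative values
pruned = prune_2_of_4(weights)
zeros_per_group = [pruned[i:i + 4].count(0.0) for i in range(0, len(pruned), 4)]
print(pruned)
print(zeros_per_group)
```

Because exactly two positions per group survive, hardware can store only the kept values plus a small index per value and skip the zero multiplications, which is where the speedup comes from.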

The emergence of custom ASIC16 (Application-Specific Integrated Circuit) solutions has further diversified the hardware landscape. Companies like Graphcore, Cerebras, and SambaNova have developed unique architectures for matrix computation, each with different strengths and optimization opportunities. This growth in specialized hardware has driven frameworks to adopt more adaptable intermediate representations17 of matrix operations, enabling target-specific optimization while maintaining a common high-level interface.

16 ASIC (Application-Specific Integrated Circuit): Custom silicon chips designed for specific tasks, contrasting with general-purpose CPUs. In ML contexts, ASICs like Google’s TPUs and Tesla’s FSD chips sacrifice flexibility for 10-100x efficiency gains in matrix operations, though they require 2-4 years development time and millions in upfront costs.

17 Intermediate Representation (IR): A framework-internal format that sits between high-level user code and hardware-specific machine code, enabling optimizations and cross-platform deployment. Modern ML frameworks use IRs such as the HLO representation of the XLA compiler (used by TensorFlow and JAX) or PyTorch’s TorchScript to compile the same model for CPUs, GPUs, TPUs, and mobile devices.

Reconfigurable hardware added another dimension to framework optimization. Unlike fixed-function ASICs, Field Programmable Gate Arrays (FPGAs) provide reconfigurable circuits that can be optimized for specific matrix operation patterns. Frameworks responding to this capability developed just-in-time compilation strategies that could generate optimized hardware configurations based on the specific needs of a model.

This hardware-driven evolution demonstrates how framework design must constantly adapt to leverage new computational capabilities. Having traced how frameworks evolved from simple numerical libraries to platforms driven by hardware innovations, we now turn to understanding the core concepts that enable modern frameworks to manage this computational complexity. These key concepts (computational graphs, execution models, and system architectures) form the foundation upon which all framework capabilities are built.

Fundamental Concepts

Modern machine learning frameworks operate through the integration of four key layers: Fundamentals, Data Handling, Developer Interface, and Execution and Abstraction. These layers function together to provide a structured and efficient foundation for model development and deployment, as illustrated in Figure 2.

The Fundamentals layer establishes the structural basis of these frameworks through computational graphs. These graphs use the directed acyclic graph (DAG) representation, enabling automatic differentiation and optimization. By organizing operations and data dependencies, computational graphs provide the framework with the ability to distribute workloads and execute computations across a variety of hardware platforms.

Building upon this structural foundation, the Data Handling layer manages numerical data and parameters essential for machine learning workflows. Central to this layer are specialized data structures, such as tensors, which handle high-dimensional arrays while optimizing memory usage and device placement. Memory management and data movement strategies ensure that computational workloads are executed effectively, particularly in environments with diverse or limited hardware resources.

The Developer Interface layer provides the tools and abstractions through which users interact with the framework. Programming models allow developers to define machine learning algorithms in a manner suited to their specific needs. These are categorized as either imperative or symbolic. Imperative models offer flexibility and ease of debugging, while symbolic models prioritize performance and deployment efficiency. Execution models further shape this interaction by defining whether computations are carried out eagerly (immediately) or as pre-optimized static graphs.

At the bottom of this architectural stack, the Execution and Abstraction layer transforms these high-level representations into efficient hardware-executable operations. Core operations, encompassing everything from basic linear algebra to complex neural network layers, are optimized for diverse hardware platforms. This layer also includes mechanisms for allocating resources and managing memory dynamically, ensuring scalable performance in both training and inference settings.

These four layers work together through carefully designed interfaces and dependencies, creating a cohesive system that balances usability with performance. Understanding these interconnected layers is essential for leveraging machine learning frameworks effectively. Each layer plays a distinct yet interdependent role in facilitating experimentation, optimization, and deployment. By mastering these concepts, practitioners can make informed decisions about resource utilization, scaling strategies, and the suitability of specific frameworks for various tasks.

Our exploration begins with computational graphs because they form the structural foundation that enables all other framework capabilities. This core abstraction provides the mathematical representation underlying automatic differentiation, optimization, and hardware acceleration capabilities that distinguish modern frameworks from simple numerical libraries.

Computational Graphs

The computational graph is the central abstraction that enables frameworks to transform intuitive model descriptions into efficient hardware execution. This representation organizes mathematical operations and their dependencies to enable automatic optimization, parallelization, and hardware specialization.

Computational Graph Fundamentals

Computational graphs emerged as a key abstraction in machine learning frameworks to address the growing complexity of deep learning models. As models grew larger and more complex, efficient execution across diverse hardware platforms became necessary. The computational graph transforms high-level model descriptions into efficient low-level hardware execution (Baydin et al. 2017), representing a machine learning model as a directed acyclic graph18 (DAG) where nodes represent operations and edges represent data flow. This DAG abstraction enables automatic differentiation and efficient optimization across diverse hardware platforms.

18 Directed Acyclic Graph (DAG): In machine learning frameworks, DAGs represent computation where nodes are operations (like matrix multiplication or activation functions) and edges are data dependencies. Unlike general DAGs in computer science, ML computational graphs specifically optimize for automatic differentiation, enabling frameworks to compute gradients by traversing the graph in reverse order.

For example, a node might represent a matrix multiplication operation, taking two input matrices (or tensors) and producing an output matrix (or tensor). To visualize this, consider the simple example in Figure 3. The directed acyclic graph computes \(z = x \times y\), where each variable holds a numerical value.

This simple example illustrates the fundamental principle, but real machine learning models require much more complex graph structures. As shown in Figure 4, the structure of the computation graph involves defining interconnected layers, such as convolution, activation, pooling, and normalization, which are optimized before execution. The figure also demonstrates key system-level interactions, including memory management and device placement, showing how the static graph approach enables complete pre-execution analysis and resource allocation.
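To make the node-and-edge picture concrete, the following minimal sketch stores a graph like the one computing \(z = x \times y\) as a plain Python dictionary and evaluates it in dependency order. The Node class, the evaluate helper, and the dictionary layout are illustrative assumptions, not the internal representation of any particular framework; real frameworks also attach shapes, data types, and device information to each node.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # standard library, Python 3.9+
import operator


@dataclass
class Node:
    """One graph node; `inputs` names the nodes whose outputs it consumes."""
    op: object = None      # callable for operations, None for fed inputs
    inputs: tuple = ()


def evaluate(graph, feeds):
    """Compute every node after its dependencies (topological order)."""
    deps = {name: set(node.inputs) for name, node in graph.items()}
    values = dict(feeds)
    for name in TopologicalSorter(deps).static_order():
        if name in values:          # fed input, nothing to compute
            continue
        node = graph[name]
        values[name] = node.op(*(values[i] for i in node.inputs))
    return values


# Hypothetical graph for z = x * y: two input nodes and one operation node.
graph = {
    "x": Node(),
    "y": Node(),
    "z": Node(op=operator.mul, inputs=("x", "y")),
}
result = evaluate(graph, {"x": 3.0, "y": 4.0})
```

Because the edges are explicit, the same structure could be walked in other ways, for example in reverse to propagate gradients, without changing how the model was described.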

Layers and Tensors

Modern machine learning frameworks implement neural network computations through two key abstractions: layers and tensors. Layers represent computational units that perform operations like convolution, pooling, or dense transformations. Each layer maintains internal states, including weights and biases, that evolve during model training. When data flows through these layers, it takes the form of tensors, multidimensional arrays (immutable in some frameworks, such as TensorFlow) that hold and transmit numerical values.

The relationship between layers and tensors mirrors the distinction between operations and data in traditional programming. A layer defines how to transform input tensors into output tensors, much like a function defines how to transform its inputs into outputs. However, layers add an extra dimension: they maintain and update internal parameters during training. For example, a convolutional layer not only specifies how to perform convolution operations but also learns and stores the optimal convolution filters for a given task.

This abstraction becomes particularly powerful when frameworks automate the graph construction process. When a developer writes tf.keras.layers.Conv2D, the framework constructs the necessary graph nodes for convolution operations, parameter management, and data flow, shielding developers from implementation complexities.

Neural Network Construction

The power of computational graphs extends beyond basic layer operations. Activation functions, essential for introducing non-linearity in neural networks, become nodes in the graph. Functions like ReLU, sigmoid, and tanh transform the output tensors of layers, enabling networks to approximate complex mathematical functions. Frameworks provide optimized implementations of these activation functions, allowing developers to experiment with different non-linearities without worrying about implementation details.

Modern frameworks extend this modular approach by providing complete model architectures as pre-configured computational graphs. Models like ResNet and MobileNet come ready to use, allowing developers to customize specific layers and leverage transfer learning from pre-trained weights.

System-Level Consequences

Using the computational graph abstraction established earlier, frameworks can analyze and optimize entire computations before execution begins. The explicit representation of data dependencies enables automatic differentiation for gradient-based optimization.

Beyond optimization capabilities, this graph structure also provides flexibility in execution. The same model definition can run efficiently across different hardware platforms, from CPUs to GPUs to specialized accelerators. The framework handles the complexity of mapping operations to specific hardware capabilities, optimizing memory usage, and coordinating parallel execution. The graph structure also enables model serialization, allowing trained models to be saved, shared, and deployed across different environments.

These system benefits distinguish computational graphs from simpler visualization tools. While neural network diagrams help visualize model architecture, computational graphs serve a deeper purpose. They provide the precise mathematical representation needed to transform intuitive model design into efficient execution. Understanding this representation reveals how frameworks transform high-level model descriptions into optimized, hardware-specific implementations, making modern deep learning practical at scale.

It is important to differentiate computational graphs from neural network diagrams, such as those for multilayer perceptrons (MLPs), which depict nodes and layers. Neural network diagrams visualize the architecture and flow of data through nodes and layers, providing an intuitive understanding of the model’s structure. In contrast, computational graphs provide a low-level representation of the underlying mathematical operations and data dependencies required to implement and train these networks.

These representational capabilities have far-reaching implications for framework design and performance. From a systems perspective, computational graphs provide several key capabilities that influence the entire machine learning pipeline. They enable automatic differentiation, which we will examine next, provide clear structure for analyzing data dependencies and potential parallelism, and serve as an intermediate representation that can be optimized and transformed for different hardware targets. However, the power of computational graphs depends critically on how and when they are executed, which brings us to the fundamental distinction between static and dynamic graph execution models.

Pre-Defined Computational Structure

Static computation graphs, pioneered by early versions of TensorFlow, implement a “define-then-run” execution model. In this approach, developers must specify the entire computation graph before execution begins. This architectural choice has significant implications for both system performance and development workflow, as we will examine later.

A static computation graph implements a clear separation between the definition of operations and their execution. During the definition phase, each mathematical operation, variable, and data flow connection is explicitly declared and added to the graph structure. This graph is a complete specification of the computation but does not perform any actual calculations. Instead, the framework constructs an internal representation of all operations and their dependencies, which will be executed in a subsequent phase.

This upfront definition enables powerful system-level optimizations. The framework can analyze the complete structure to identify opportunities for operation fusion, eliminating unnecessary intermediate results and reducing memory traffic by 3-10x through kernel fusion. Memory requirements can be precisely calculated and optimized in advance, leading to efficient allocation strategies. Static graphs enable compilation frameworks like XLA19 (Accelerated Linear Algebra) to perform aggressive optimizations. Graph rewriting can eliminate substantial numbers of redundant operations while hardware-specific kernel generation can provide significant speedups over generic implementations. This abstraction, while elegant, imposes fundamental constraints on expressible computations: static graphs achieve these performance gains by sacrificing flexibility in control flow and dynamic computation patterns. Once validated, the same computation can be run repeatedly with high confidence in its behavior and performance characteristics.
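The structural effect of operation fusion can be sketched in a few lines. The example below is an illustration under simplifying assumptions: plain Python lists stand in for tensors, and the helper names unfused and fuse are hypothetical. It shows only the change in structure (three passes with two intermediate buffers versus one pass with none) and will not reproduce the speedups of a real compiler.

```python
def unfused(xs):
    """Three separate passes; each one materializes a full intermediate list."""
    scaled = [2.0 * x for x in xs]           # intermediate buffer 1
    shifted = [s + 1.0 for s in scaled]      # intermediate buffer 2
    return [max(0.0, t) for t in shifted]    # output (ReLU)


def fuse(*stages):
    """Combine elementwise stages into one function that makes a single pass."""
    def fused(xs):
        out = []
        for x in xs:
            for stage in stages:   # apply every stage while x is "in registers"
                x = stage(x)
            out.append(x)
        return out
    return fused


# Same computation as `unfused`, expressed as one fused elementwise kernel.
fused = fuse(lambda x: 2.0 * x, lambda x: x + 1.0, lambda x: max(0.0, x))
data = [-2.0, -0.5, 0.0, 1.5]
```

A framework can only apply this kind of rewrite when it sees all three operations before running any of them, which is exactly what the define-then-run model guarantees.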

19 XLA (Accelerated Linear Algebra): TensorFlow’s domain-specific compiler that optimizes tensor operations for CPUs, GPUs, and TPUs. Achieves 3-10x speedups through operation fusion, memory layout optimization, and hardware-specific code generation, demonstrating how ML workloads benefit from specialized compilation strategies.

Figure 5 illustrates this fundamental two-phase approach: first, the complete computational graph is constructed and optimized; then, during the execution phase, actual data flows through the graph to produce results. This separation enables the framework to perform thorough analysis and optimization of the entire computation before any execution begins.
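The two phases can be imitated with a small symbolic sketch. Everything here (the Sym class, placeholder, and run) is a hypothetical toy written for illustration; it is not the TensorFlow API, and it omits the optimization step that a real framework would perform between the two phases.

```python
class Sym:
    """Symbolic node: records an operation without computing anything."""

    def __init__(self, op, inputs=(), name=None):
        self.op, self.inputs, self.name = op, inputs, name

    def __add__(self, other):
        return Sym("add", (self, other))

    def __mul__(self, other):
        return Sym("mul", (self, other))


def placeholder(name):
    """Declare an input whose value is supplied only at execution time."""
    return Sym("input", name=name)


def run(node, feed):
    """Execution phase: push real data through the already-built graph."""
    if node.op == "input":
        return feed[node.name]
    left, right = (run(i, feed) for i in node.inputs)
    return left + right if node.op == "add" else left * right


# Definition phase: this builds a graph; no arithmetic happens here.
x, y = placeholder("x"), placeholder("y")
out = x * y + x

# Execution phase: the same graph is reused with different data.
first = run(out, {"x": 2.0, "y": 3.0})
second = run(out, {"x": 5.0, "y": 1.0})
```

Note that out is a graph object rather than a number; values exist only once run is called with concrete inputs.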

Runtime-Adaptive Computational Structure

Dynamic computation graphs, popularized by PyTorch, implement a “define-by-run” execution model. This approach constructs the graph during execution, offering greater flexibility in model definition and debugging. Unlike static graphs, which rely on predefined memory allocation, dynamic graphs allocate memory as operations execute, making them susceptible to memory fragmentation in long-running tasks. Dynamic graphs trade efficiency for flexibility in expressing control flow: because the framework cannot analyze the complete computation before execution, it cannot apply the aggressive kernel fusion and graph rewriting optimizations that static graphs enable.

As shown in Figure 6, each operation is defined, executed, and completed before moving on to define the next operation. This contrasts sharply with static graphs, where all operations must be defined upfront. When an operation is defined, it is immediately executed, and its results become available for subsequent operations or for inspection during debugging. This cycle continues until all operations are complete.

Dynamic graphs excel in scenarios that require conditional execution or dynamic control flow, such as when processing variable-length sequences or implementing complex branching logic. They provide immediate feedback during development, making it easier to identify and fix issues in the computational pipeline. This flexibility aligns naturally with imperative programming patterns familiar to most developers, allowing them to inspect and modify computations at runtime. These characteristics make dynamic graphs particularly valuable during the research and development phase of ML projects.
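A minimal sketch of define-by-run behavior follows. The tape list and the traced helper are hypothetical stand-ins for the bookkeeping a framework performs internally; the point is only that the recorded graph depends on the data that flows through ordinary Python control flow.

```python
tape = []  # operations are appended in the order they actually execute


def traced(name, fn, *args):
    """Execute immediately and record what ran (the graph built so far)."""
    value = fn(*args)
    tape.append((name, args, value))
    return value


def halve_until_small(x, threshold=1.0):
    # The number of recorded operations depends on the runtime value of x.
    while x > threshold:
        x = traced("halve", lambda v: v / 2, x)
    return x


tape.clear()
halve_until_small(8.0)      # 8 -> 4 -> 2 -> 1
ops_for_8 = len(tape)

tape.clear()
halve_until_small(3.0)      # 3 -> 1.5 -> 0.75
ops_for_3 = len(tape)
```

Each intermediate value is an ordinary Python number as soon as it is produced, so it can be printed or inspected in a debugger at any point in the loop.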

Framework Architecture Trade-offs

The architectural differences between static and dynamic computational graphs have multiple implications for how machine learning systems are designed and executed. These implications touch on various aspects of memory usage, device utilization, execution optimization, and debugging, all of which play important roles in determining the efficiency and scalability of a system. We focus on memory management and device placement as foundational concepts, with optimization techniques covered in detail in Chapter 8: AI Training. This allows us to build a clear understanding before exploring more complex topics like optimization and fault tolerance.

Memory Management

Memory management is a central concern when executing computational graphs. Static graphs benefit from their predefined structure, allowing for precise memory planning before execution. Frameworks can calculate memory requirements in advance, optimize allocation, and minimize overhead through techniques like memory reuse. This structured approach helps ensure consistent performance, particularly in resource-constrained environments, such as Mobile and Tiny ML systems. For large models, frameworks must efficiently handle memory bandwidth requirements that can range from 100 GB/s for smaller models to over 1 TB/s for large language models with billions of parameters, making memory planning critical for achieving optimal throughput.
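The memory reuse that a predefined structure makes possible can be sketched as a simple liveness-based planner. This is an illustration only: plan_buffers is a hypothetical helper, all tensors are assumed to be the same size, and production planners also handle differing sizes, alignment, and in-place operations.

```python
def plan_buffers(schedule, last_use):
    """Assign each tensor a buffer slot, reusing slots whose tensors are dead.

    schedule: tensor names in the order they are produced (one per step).
    last_use: for each tensor, the index of the last step that reads it.
    """
    assignment = {}   # tensor name -> buffer slot
    live = {}         # buffer slot -> tensor currently stored there
    free = []         # slots whose tensor is no longer needed
    n_slots = 0
    for step, tensor in enumerate(schedule):
        # Release every slot whose tensor was last read before this step.
        for slot, owner in list(live.items()):
            if last_use[owner] < step:
                del live[slot]
                free.append(slot)
        if free:
            slot = free.pop()
        else:
            slot = n_slots
            n_slots += 1
        assignment[tensor] = slot
        live[slot] = tensor
    return assignment, n_slots


# A four-layer chain: each activation is read only by the step after it.
schedule = ["h1", "h2", "h3", "h4"]
last_use = {"h1": 1, "h2": 2, "h3": 3, "h4": 4}
assignment, n_slots = plan_buffers(schedule, last_use)
```

For this chain the planner needs two buffers instead of four, because each activation can overwrite the buffer of the one that is two steps older. The plan is computed entirely before execution, which requires knowing the full schedule in advance.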

Dynamic graphs, by contrast, allocate memory dynamically as operations are executed. While this flexibility is invaluable for handling dynamic control flows or variable input sizes, it can result in higher memory overhead and fragmentation. These trade-offs are often most apparent during development, where dynamic graphs enable rapid iteration and debugging but may require additional optimization for production deployment. The dynamic allocation overhead becomes particularly significant when memory bandwidth utilization drops below 50% of available capacity due to fragmentation and suboptimal access patterns.

Device Placement

Device placement, the process of assigning operations to hardware resources such as CPUs, GPUs, or specialized ASICs like TPUs, is another system-level consideration. Static graphs allow for detailed pre-execution analysis, enabling the framework to map computationally intensive operations to devices while minimizing communication overhead. This capability makes static graphs well-suited for optimizing execution on specialized hardware, where performance gains can be significant.

Dynamic graphs, in contrast, handle device placement at runtime. This allows them to adapt to changing conditions, such as hardware availability or workload demands. However, the lack of a complete graph structure before execution can make it challenging to optimize device utilization fully, potentially leading to inefficiencies in large-scale or distributed setups.

Broader Perspective

The trade-offs between static and dynamic graphs extend well beyond memory and device considerations. As shown in Table 1, these architectures influence optimization potential, debugging capabilities, scalability, and deployment complexity. These broader implications are explored in detail in Chapter 8: AI Training for training workflows and Chapter 11: AI Acceleration for system-level optimizations.

Hybrid approaches, which let developers write dynamic code and then convert it into a static graph, aim to provide the flexibility of dynamic graphs during development while enabling the performance optimizations of static graphs in production environments. The choice between static and dynamic graphs often depends on specific project requirements, balancing factors like development speed, production performance, and system complexity.

| Aspect | Static Graphs | Dynamic Graphs |
|---|---|---|
| Memory Management | Precise allocation planning, optimized memory usage | Flexible but likely less efficient allocation |
| Optimization Potential | Comprehensive graph-level optimizations possible | Limited to local optimizations due to runtime |
| Hardware Utilization | Can generate highly optimized hardware-specific code | May sacrifice hardware-specific optimizations |
| Development Experience | Requires more upfront planning, harder to debug | Better debugging, faster iteration cycles |
| Debugging Workflow | Framework-specific tools, disconnected stack traces | Standard Python debugging (pdb, print, inspect) |
| Error Reporting | Execution-time errors disconnected from definition | Intuitive stack traces pointing to exact lines |
| Research Velocity | Slower iteration due to define-then-run requirement | Faster prototyping and model experimentation |
| Runtime Flexibility | Fixed computation structure | Can adapt to runtime conditions |
| Production Performance | Generally better performance at scale | May have overhead from graph construction |
| Integration with Legacy Code | More separation between definition and execution | Natural integration with imperative code |
| Memory Overhead | Lower memory overhead due to planned allocations | Higher overhead due to dynamic allocations |
| Deployment Complexity | Simpler deployment due to fixed structure | May require additional runtime support |

Graph-Based Gradient Computation Implementation

The computational graph serves as more than just an execution plan; it is the core data structure that makes reverse-mode automatic differentiation feasible and efficient. Understanding this connection reveals how frameworks compute gradients through arbitrarily complex neural networks.

During the forward pass, the framework constructs a computational graph where each node represents an operation and stores both the result and the information needed to compute gradients. This graph is not just a visualization tool but an actual data structure maintained in memory. When loss.backward() is called, the framework performs a reverse traversal of this graph in reverse topological order, systematically applying the chain rule at each node.

The key insight is that the graph structure encodes all the dependency relationships needed for the chain rule. Each edge in the graph represents a partial derivative, and reverse traversal automatically composes these partial derivatives according to the chain rule. The forward pass builds the computation history, and the backward pass is simply a graph traversal algorithm that accumulates gradients by following the recorded dependencies.

This design enables automatic differentiation to scale to networks with millions of parameters because the complexity is linear in the number of operations, not exponential in the number of variables. The graph structure ensures that each gradient computation is performed exactly once and that shared subcomputations are properly handled through the dependency tracking built into the graph representation.
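The mechanism described above can be sketched in under forty lines. This is a teaching sketch under stated assumptions, not how PyTorch or TensorFlow implement autograd: the Var class, the sin wrapper, and the backward function are hypothetical names, only multiplication and sine are supported, and values are scalars rather than tensors.

```python
import math


class Var:
    """Graph node storing a value, its parents, and local partial derivatives."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents   # tuple of (parent_node, d(self)/d(parent))
        self.grad = 0.0

    def __mul__(self, other):
        # d(u*v)/du = v and d(u*v)/dv = u, recorded on the edges.
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def sin(v):
    return Var(math.sin(v.value), ((v, math.cos(v.value)),))


def backward(output):
    """Visit nodes in reverse topological order, applying the chain rule."""
    order, seen = [], set()

    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)   # appended only after all of its inputs

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += local * node.grad   # accumulate over all paths


x = Var(2.0)
y = (x * x) * sin(x)   # x reaches y through two paths
backward(y)
```

After the call, x.grad holds 2x·sin(x) + x²·cos(x) evaluated at x = 2. Each node is visited once, so the backward pass costs time proportional to the number of recorded operations, and the += on parent.grad is what merges contributions from shared subcomputations.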

Automatic Differentiation

Machine learning frameworks must solve a core computational challenge: calculating derivatives through complex chains of mathematical operations accurately and efficiently. This capability enables the training of neural networks by computing how millions of parameters require adjustment to improve the model’s performance (Baydin et al. 2017).

Listing 1 shows a simple computation that illustrates this challenge.

    from math import sin

    def f(x):
        a = x * x  # Square
        b = sin(x)  # Sine
        return a * b  # Product

Even in this basic example, computing derivatives manually would require careful application of calculus rules: the product rule, the chain rule, and derivatives of trigonometric functions. Now imagine scaling this to a neural network with millions of operations. This is where automatic differentiation (AD)20 becomes essential.

20 Automatic Differentiation: Invented by Robert Edwin Wengert in 1964, this technique achieves machine precision derivatives by applying the chain rule at the elementary operation level, making neural network training computationally feasible for networks with millions of parameters.

Automatic differentiation calculates derivatives of functions implemented as computer programs by decomposing them into elementary operations. In our example, AD breaks down f(x) into three basic steps:

- Computing a = x * x (squaring)
- Computing b = sin(x) (sine function)
- Computing the final product a * b

For each step, AD knows the basic derivative rules:

- For squaring: d(x²)/dx = 2x
- For sine: d(sin(x))/dx = cos(x)
- For products: d(uv)/dx = u(dv/dx) + v(du/dx)

By tracking how these operations combine and systematically applying the chain rule, AD computes exact derivatives through the entire computation. When implemented in frameworks like PyTorch or TensorFlow, this enables automatic computation of gradients through arbitrary neural network architectures, which becomes essential for the training algorithms and optimization techniques detailed in Chapter 8: AI Training. This fundamental understanding of how AD decomposes and tracks computations sets the foundation for examining its implementation in machine learning frameworks. We will explore its mathematical principles, system architecture implications, and performance considerations that make modern machine learning possible.

Forward and Reverse Mode Differentiation

Automatic differentiation can be implemented using two primary computational approaches, each with distinct characteristics in terms of efficiency, memory usage, and applicability to different problem types. This section examines forward mode and reverse mode automatic differentiation, analyzing their mathematical foundations, implementation structures, performance characteristics, and integration patterns within machine learning frameworks.

Forward Mode

Forward mode automatic differentiation computes derivatives alongside the original computation, tracking how changes propagate from input to output. Building on the basic AD concepts introduced in Section 1.3.2, forward mode mirrors manual derivative computation, making it intuitive to understand and implement.

Consider our previous example with a slight modification to show how forward mode works (see Listing 2).

    from math import sin, cos

    def f(x):  # Computing both value and derivative
        # Step 1: x -> x²
        a = x * x  # Value: x²
        da = 2 * x  # Derivative: 2x
        # Step 2: x -> sin(x)
        b = sin(x)  # Value: sin(x)
        db = cos(x)  # Derivative: cos(x)
        # Step 3: Combine using product rule
        result = a * b  # Value: x² * sin(x)
        dresult = a * db + b * da  # Derivative: x²*cos(x) + sin(x)*2x
        return result, dresult

Forward mode achieves this systematic derivative computation by augmenting each number with its derivative value, creating what mathematicians call a “dual number.” The example in Listing 3 shows how this works numerically: when x = 2.0, the computation tracks both values and derivatives.

    x = 2.0  # Initial value
    dx = 1.0  # We're tracking derivative with respect to x
    # Step 1: x²
    a = 4.0  # (2.0)²
    da = 4.0  # 2 * 2.0
    # Step 2: sin(x)
    b = 0.909  # sin(2.0)
    db = -0.416  # cos(2.0)
    # Final result
    result = 3.637  # 4.0 * sin(2.0)
    dresult = 1.973  # 4.0 * cos(2.0) + sin(2.0) * 4.0

Implementation Structure

Forward mode AD structures computations to track both values and derivatives simultaneously through programs. The structure of such computations can be seen again in Listing 4, where each intermediate operation is made explicit.

    def f(x):
        a = x * x
        b = sin(x)
        return a * b

When a framework executes this function in forward mode, it augments each computation to carry two pieces of information: the value itself and how that value changes with respect to the input. This paired movement of value and derivative mirrors how we think about rates of change, as shown in Listing 5.

    # Conceptually, each computation tracks (value, derivative)
    x = (2.0, 1.0)  # Input value and its derivative
    a = (4.0, 4.0)  # x² and its derivative 2x
    b = (0.909, -0.416)  # sin(x) and its derivative cos(x)
    result = (3.637, 1.973)  # Final value and derivative

This forward propagation of derivative information happens automatically within the framework’s computational machinery. The framework:

1. Enriches each value with derivative information
2. Transforms each basic operation to handle both value and derivative
3. Propagates this information forward through the computation

The beauty of this approach is that it follows the natural flow of computation - as values move forward through the program, their derivatives move with them. This makes forward mode particularly well-suited for functions with single inputs and multiple outputs, as the derivative information follows the same path as the regular computation.
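The paired (value, derivative) bookkeeping can be written as a small dual-number class. This is a minimal sketch, assuming scalar inputs and supporting only multiplication and sine; the names Dual and dsin are hypothetical and do not correspond to any framework's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Dual:
    """A value paired with its derivative with respect to the chosen input."""
    val: float
    dot: float

    def __mul__(self, other):
        # Product rule: (uv)' = u v' + v u'
        return Dual(self.val * other.val,
                    self.val * other.dot + other.val * self.dot)


def dsin(u):
    # Chain rule: (sin u)' = cos(u) * u'
    return Dual(math.sin(u.val), math.cos(u.val) * u.dot)


def f(x):
    return (x * x) * dsin(x)


out = f(Dual(2.0, 1.0))   # seed derivative dx/dx = 1
```

The function f is written exactly as it would be for ordinary numbers; only the type of its input changes. out.val holds x²·sin(x) and out.dot holds its derivative at x = 2, both produced in a single forward sweep with no stored history.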

Performance Characteristics

Forward mode AD exhibits distinct performance patterns that influence when and how frameworks employ it. Understanding these characteristics helps explain why frameworks choose different AD approaches for different scenarios.

Forward mode performs one derivative computation alongside each original operation. For a function with one input variable, this means roughly doubling the computational work - once for the value, once for the derivative. The cost scales linearly with the number of operations in the program, making it predictable and manageable for simple computations.

However, consider a neural network layer computing derivatives for matrix multiplication between weights and inputs. To compute derivatives with respect to all weights, forward mode would require performing the computation once for each weight parameter, potentially thousands of times. This reveals an important characteristic: forward mode’s efficiency depends on the number of input variables we need derivatives for.

Forward mode’s memory requirements are relatively modest. It needs to store the original value, a single derivative value, and temporary results during computation. The memory usage stays constant regardless of how complex the computation becomes. This predictable memory pattern makes forward mode particularly suitable for embedded systems with limited memory, real-time applications requiring consistent memory use, and systems where memory bandwidth is a bottleneck.

This combination of computational scaling with input variables but constant memory usage creates specific trade-offs that influence framework design decisions. Forward mode shines in scenarios with few inputs but many outputs, where its straightforward implementation and predictable resource usage outweigh the computational cost of multiple passes.
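The scaling with the number of inputs can be seen by counting passes. The sketch below is illustrative only: it assumes a single linear neuron with scalar weights, and Dual, neuron, and forward_mode_gradient are hypothetical names. To obtain the derivative with respect to every weight, forward mode must rerun the whole computation once per weight, seeding a different weight each time.

```python
from dataclasses import dataclass


@dataclass
class Dual:
    val: float
    dot: float   # derivative with respect to the currently seeded weight

    def __add__(self, o):
        return Dual(self.val + o.val, self.dot + o.dot)

    def __mul__(self, o):
        return Dual(self.val * o.val, self.val * o.dot + o.val * self.dot)


def neuron(w, x):
    """Weighted sum w[0]*x[0] + w[1]*x[1] + ... computed on dual numbers."""
    total = Dual(0.0, 0.0)
    for wi, xi in zip(w, x):
        total = total + wi * Dual(xi, 0.0)   # inputs are constants here
    return total


def forward_mode_gradient(weights, x):
    """One full forward pass per weight: seed exactly one weight with dot=1."""
    grad, passes = [], 0
    for i in range(len(weights)):
        seeded = [Dual(w, 1.0 if j == i else 0.0)
                  for j, w in enumerate(weights)]
        grad.append(neuron(seeded, x).dot)
        passes += 1
    return grad, passes


grad, passes = forward_mode_gradient([0.5, -1.0, 2.0], [1.0, 2.0, 3.0])
```

Three weights require three passes; a layer with a million weights would require a million. Memory per pass stays small, since nothing from earlier operations needs to be kept.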

Use Cases

While forward mode automatic differentiation isn’t the primary choice for training full neural networks, it plays several important roles in modern machine learning frameworks. Its strength lies in scenarios where we need to understand how small changes in inputs affect a network’s behavior. Consider a data scientist seeking to understand why their model makes certain predictions. They may require analysis of how changing a single pixel in an image or a specific feature in their data affects the model’s output, as illustrated in Listing 6.

    def analyze_image_sensitivity(model, image):
        # Forward mode tracks how changing one pixel
        # affects the final classification
        layer1 = relu(W1 @ image + b1)
        layer2 = relu(W2 @ layer1 + b2)
        predictions = softmax(W3 @ layer2 + b3)
        return predictions

As the computation moves through each layer, forward mode carries both values and derivatives, making it straightforward to see how input perturbations ripple through to the final prediction. For each operation, we can track exactly how small changes propagate forward.

Neural network interpretation presents another compelling application. When researchers generate saliency maps or attribution scores, they typically compute how each input element influences the output as shown in Listing 7.

    def compute_feature_importance(model, input_features):
        # Track influence of each input feature
        # through the network's computation
        hidden = tanh(W1 @ input_features + b1)
        logits = W2 @ hidden + b2
        # Forward mode efficiently computes d(logits)/d(input)
        return logits

In specialized training scenarios, particularly those involving online learning where models update on individual examples, forward mode offers advantages. The framework can track derivatives for a single example through the network, though this approach becomes less practical when dealing with batch training or updating multiple model parameters simultaneously.

Understanding these use cases helps explain why machine learning frameworks maintain forward mode capabilities alongside other differentiation strategies. While reverse mode handles the heavy lifting of full model training, forward mode provides an elegant solution for specific analytical tasks where its computational pattern matches the problem structure.

Reverse Mode

Reverse mode automatic differentiation forms the computational backbone of modern neural network training. This isn’t by accident - reverse mode’s structure perfectly matches what we need for training neural networks. During training, we have one scalar output (the loss function) and need derivatives with respect to millions of parameters (the network weights). Reverse mode is exceptionally efficient at computing exactly this pattern of derivatives.

A closer look at Listing 8 reveals how reverse mode differentiation is structured.

    def f(x):
        a = x * x  # First operation: square x
        b = sin(x)  # Second operation: sine of x
        c = a * b  # Third operation: multiply results
        return c

In this function shown in Listing 8, we have three operations that create a computational chain. Notice how ‘x’ influences the final result ‘c’ through two different paths: once through squaring (a = x²) and once through sine (b = sin(x)). Both paths must be accounted for when computing derivatives.

First, the forward pass computes and stores values, as illustrated in Listing 9.

x = 2.0  # Our input value
a = 4.0  # x * x = 2.0 * 2.0 = 4.0
b = 0.909  # sin(2.0) ≈ 0.909
c = 3.637  # a * b = 4.0 * 0.909 ≈ 3.637

Then comes the backward pass. This is where reverse mode shows its elegance. This process is demonstrated in Listing 10, where we compute the gradient starting from the output.

dc/dc = 1.0  # Derivative of output with respect to itself is 1
# Moving backward through multiplication c = a * b
dc/da = b  # ∂(a*b)/∂a = b = 0.909
dc/db = a  # ∂(a*b)/∂b = a = 4.0
# Finally, combining derivatives for x through both paths
# Path 1: x -> x² -> c contribution: 2x * dc/da
# Path 2: x -> sin(x) -> c contribution: cos(x) * dc/db
dc/dx = (2 * x * dc/da) + (cos(x) * dc/db)
      = (2 * 2.0 * 0.909) + (cos(2.0) * 4.0)
      = 3.636 + (-0.416 * 4.0)
      = 3.636 - 1.664
      = 1.972

The power of reverse mode becomes clear when we consider what would happen if we added more operations, or more inputs, that influence the output. Forward mode needs a separate pass for each input whose derivative we want, but reverse mode handles all paths and all inputs in a single backward pass. This is exactly the scenario in neural networks, where each weight can affect the final loss through multiple paths in the network.
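The forward and backward passes for this function can be checked numerically. The following is a minimal sketch, not a framework implementation: the helper names `f_forward`, `f_backward`, and `finite_difference` are hypothetical, and the finite-difference estimate serves only as an independent sanity check on the backward-pass result.

```python
import math


def f_forward(x):
    # Forward pass: compute the output and keep what the backward pass needs
    a = x * x
    b = math.sin(x)
    c = a * b
    return c, (x, a, b)


def f_backward(saved):
    x, a, b = saved
    dc_dc = 1.0          # seed: derivative of the output w.r.t. itself
    dc_da = dc_dc * b    # through c = a * b
    dc_db = dc_dc * a
    # Accumulate both paths from x: via a = x*x and via b = sin(x)
    return dc_da * (2.0 * x) + dc_db * math.cos(x)


def finite_difference(x, eps=1e-6):
    # Central difference, used only to cross-check the analytic gradient
    return (f_forward(x + eps)[0] - f_forward(x - eps)[0]) / (2 * eps)


c, saved = f_forward(2.0)
grad = f_backward(saved)
print(f"c = {c:.4f}, dc/dx = {grad:.4f}, "
      f"finite difference = {finite_difference(2.0):.4f}")
```

The two gradient estimates should agree to several decimal places; a mismatch would indicate an arithmetic slip in one of the backward steps.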

Implementation Structure

The implementation of reverse mode in machine learning frameworks requires careful orchestration of computation and memory. While forward mode simply augments each computation, reverse mode needs to maintain a record of the forward computation to enable the backward pass. Modern frameworks accomplish this through computational graphs and automatic gradient accumulation21.

21 Gradient Accumulation: A training technique where gradients from multiple mini-batches are computed and summed before updating model parameters, effectively simulating larger batch sizes without requiring additional memory. Essential for training large models where memory constraints limit batch size to as small as 1 sample per device.

We extend our previous example to a small neural network computation. See Listing 11 for the code structure.

def simple_network(x, w1, w2):
    # Forward pass
    hidden = x * w1  # First layer multiplication
    activated = max(0, hidden)  # ReLU activation
    output = activated * w2  # Second layer multiplication
    return output  # Final output (before loss)

During the forward pass, the framework doesn’t just compute values. It builds a graph of operations while tracking intermediate results, as illustrated in Listing 12.

x = 1.0
w1 = 2.0
w2 = 3.0
hidden = 2.0  # x * w1 = 1.0 * 2.0
activated = 2.0  # max(0, 2.0) = 2.0
output = 6.0  # activated * w2 = 2.0 * 3.0

Refer to Listing 13 for a step-by-step breakdown of gradient computation during the backward pass.

d_output = 1.0  # Start with derivative of output
d_w2 = activated  # d_output * d(output)/d_w2
                  # = 1.0 * 2.0 = 2.0
d_activated = w2  # d_output * d(output)/d_activated
                  # = 1.0 * 3.0 = 3.0
# ReLU gradient: 1 if input was > 0, 0 otherwise
d_hidden = d_activated * (1 if hidden > 0 else 0)
# 3.0 * 1 = 3.0
d_w1 = x * d_hidden  # 1.0 * 3.0 = 3.0
d_x = w1 * d_hidden  # 2.0 * 3.0 = 6.0

This example illustrates several key implementation considerations:

1. The framework must track dependencies between operations

2. Intermediate values must be stored for the backward pass

3. Gradient computations follow the reverse topological order of the forward computation

4. Each operation needs both forward and backward implementations
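The considerations above can be sketched as a very small graph-based autodiff engine. This is an illustrative toy under stated assumptions, not how any particular framework is implemented: the `Node` class and the `mul`, `relu`, and `backward` helpers are hypothetical names, and only scalar values are supported.

```python
class Node:
    """A scalar in the graph, with links to its parents and local derivatives."""

    def __init__(self, value, parents=()):
        self.value = value          # stored during the forward pass
        self.grad = 0.0             # accumulated during the backward pass
        self.parents = parents      # tuple of (parent_node, local_derivative)


def mul(a, b):
    # d(a*b)/da = b and d(a*b)/db = a
    return Node(a.value * b.value, ((a, b.value), (b, a.value)))


def relu(a):
    # Local derivative is 1 where the input was positive, else 0
    return Node(max(0.0, a.value), ((a, 1.0 if a.value > 0 else 0.0),))


def backward(output):
    # Build a topological order with a depth-first traversal
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Walk the graph in reverse topological order, accumulating gradients
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local


x, w1, w2 = Node(1.0), Node(2.0), Node(3.0)
hidden = mul(x, w1)
activated = relu(hidden)
output = mul(activated, w2)
backward(output)
print(x.grad, w1.grad, w2.grad)  # 6.0 3.0 2.0
```

Each operation records its local derivatives at forward time, which corresponds to pairing every forward implementation with a backward rule; the `+=` in `backward` is what lets a node reached through several paths collect all of its contributions.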

Memory Management Strategies

Memory management represents one of the key challenges in implementing reverse mode differentiation in machine learning frameworks. Unlike forward mode where we can discard intermediate values as we go, reverse mode requires storing results from the forward pass to compute gradients during the backward pass.

This requirement is illustrated in Listing 14, which extends our neural network example to highlight how intermediate activations must be preserved for use during gradient computation.

def deep_network(x, w1, w2, w3):
    # Forward pass - must store intermediates
    hidden1 = x * w1
    activated1 = max(0, hidden1)  # Store for backward
    hidden2 = activated1 * w2
    activated2 = max(0, hidden2)  # Store for backward
    output = activated2 * w3
    return output

Each intermediate value needed for gradient computation must be kept in memory until its backward pass completes. As networks grow deeper, this memory requirement grows linearly with network depth. For a typical deep neural network processing a batch of images, this can mean gigabytes of stored activations.

Frameworks employ several strategies to manage this memory burden. One such approach is illustrated in Listing 15.

def training_step(model, input_batch):
    # Strategy 1: Checkpointing
    with checkpoint_scope():
        hidden1 = activation(layer1(input_batch))
        # Framework might free some memory here
        hidden2 = activation(layer2(hidden1))
        # More selective memory management
        output = layer3(hidden2)
    # Strategy 2: Gradient accumulation
    loss = compute_loss(output)
    # Backward pass with managed memory
    loss.backward()

Modern frameworks automatically balance memory usage and computation speed. They might recompute some intermediate values during the backward pass rather than storing everything, particularly for memory-intensive operations. This trade-off between memory and computation becomes especially important in large-scale training scenarios.
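To make the store-versus-recompute trade-off concrete, the sketch below compares two ways of differentiating a chain of scalar `tanh` layers: one keeps every layer input, the other keeps only periodic checkpoints and recomputes each segment during the backward pass. All function names and the `every` parameter are hypothetical, and the scalar chain is a deliberately simplified stand-in for real layers.

```python
import math


def layer(h, w):
    return math.tanh(w * h)


def layer_backward(h_in, w, grad_out):
    out = math.tanh(w * h_in)
    grad_pre = grad_out * (1.0 - out * out)   # through tanh
    return grad_pre * w, grad_pre * h_in      # (grad w.r.t. h_in, grad w.r.t. w)


def grads_store_all(x, weights):
    inputs, h = [], x
    for w in weights:
        inputs.append(h)                      # keep every layer input
        h = layer(h, w)
    grad_h, grad_w = 1.0, [0.0] * len(weights)
    for i in reversed(range(len(weights))):
        grad_h, grad_w[i] = layer_backward(inputs[i], weights[i], grad_h)
    return grad_w, len(inputs)


def grads_checkpointed(x, weights, every=2):
    checkpoints, h = {}, x
    for i, w in enumerate(weights):
        if i % every == 0:
            checkpoints[i] = h                # keep only segment starts
        h = layer(h, w)
    grad_h, grad_w = 1.0, [0.0] * len(weights)
    for start in sorted(checkpoints, reverse=True):
        end = min(start + every, len(weights))
        # Recompute this segment's layer inputs from its checkpoint
        seg_inputs, h = [], checkpoints[start]
        for i in range(start, end):
            seg_inputs.append(h)
            h = layer(h, weights[i])
        for i in reversed(range(start, end)):
            grad_h, grad_w[i] = layer_backward(
                seg_inputs[i - start], weights[i], grad_h
            )
    return grad_w, len(checkpoints)


weights = [0.5, -1.2, 0.8, 1.5, -0.7, 0.9]
full_grads, stored_full = grads_store_all(0.3, weights)
ckpt_grads, stored_ckpt = grads_checkpointed(0.3, weights, every=3)
print(stored_full, stored_ckpt)  # 6 2
```

Both functions return the same gradients; the checkpointed version holds fewer values between the forward and backward passes at the cost of one extra forward computation per segment. Its peak memory also includes the segment currently being recomputed, so the savings depend on how segment length is chosen.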

Optimization Techniques

Reverse mode automatic differentiation in machine learning frameworks employs several key optimization techniques to enhance training efficiency. These optimizations become crucial when training large neural networks where computational and memory resources are pushed to their limits.

Modern frameworks implement gradient checkpointing22, a technique that strategically balances computation and memory. A simplified forward pass of such a network is shown in Listing 16.

22 Gradient Checkpointing: A memory optimization technique that trades computation time for memory by selectively storing only certain intermediate activations during the forward pass, then recomputing discarded values during gradient computation. Can reduce memory usage by 50-90% for deep networks while increasing training time by only 20-33%.

def deep_network(input_tensor):
    # A typical deep network computation
    layer1 = large_dense_layer(input_tensor)
    activation1 = relu(layer1)
    layer2 = large_dense_layer(activation1)
    activation2 = relu(layer2)
    # ... many more layers
    output = final_layer(activation_n)
    return output

Instead of storing all intermediate activations, frameworks can strategically recompute certain values during the backward pass. Listing 17 demonstrates how frameworks achieve this memory saving. The framework might save activations only every few layers.

# Conceptual representation of checkpointing
checkpoint1 = save_for_backward(activation1)
# Intermediate activations can be recomputed
checkpoint2 = save_for_backward(activation4)
# Framework balances storage vs recomputation

Another crucial optimization involves operation fusion23. Rather than treating each mathematical operation separately, frameworks combine operations that commonly occur together. Matrix multiplication followed by bias addition, for instance, can be fused into a single operation, reducing memory transfers and improving hardware utilization.

23 Operation Fusion: Compiler optimization that combines multiple sequential operations into a single kernel to reduce memory bandwidth and latency. For example, fusing matrix multiplication, bias addition, and ReLU activation can eliminate intermediate memory allocations and achieve 2-3x speedup on modern GPUs.
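The structural idea behind fusion can be shown without any accelerator. In the sketch below, the unfused version makes three passes and materializes two intermediate lists, while the fused version produces each output element in a single pass. Both function names are hypothetical, and plain Python will not reproduce the hardware speedups; the example only illustrates which intermediate buffers fusion eliminates.

```python
def unfused_dense_relu(x, W, b):
    # Three separate passes, each writing a full intermediate result
    matmul = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]
    biased = [m + b_i for m, b_i in zip(matmul, b)]
    return [max(0.0, v) for v in biased]


def fused_dense_relu(x, W, b):
    # One pass: matmul, bias, and ReLU applied per output element,
    # with no intermediate lists written out
    out = []
    for row, b_i in zip(W, b):
        acc = b_i
        for w_ij, x_j in zip(row, x):
            acc += w_ij * x_j
        out.append(max(0.0, acc))
    return out


x = [1.0, 2.0]
W = [[0.5, -1.0], [2.0, 0.25]]
b = [0.5, -1.0]
print(unfused_dense_relu(x, W, b), fused_dense_relu(x, W, b))
```

On real hardware the benefit comes from keeping the accumulator in registers or on-chip memory instead of writing `matmul` and `biased` out to main memory and reading them back.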

The backward pass itself can be optimized by reordering computations to maximize hardware efficiency. Consider the gradient computation for a convolution layer: rather than directly translating the mathematical definition into code, frameworks implement specialized backward operations that take advantage of modern hardware capabilities.

These optimizations work together to make the training of large neural networks practical. Without them, many modern architectures would be prohibitively expensive to train, both in terms of memory usage and computation time.

Framework Implementation of Automatic Differentiation

The integration of automatic differentiation into machine learning frameworks requires careful system design to balance flexibility, performance, and usability. Modern frameworks like PyTorch and TensorFlow expose AD capabilities through high-level APIs while maintaining the sophisticated underlying machinery.

Frameworks present AD to users through various interfaces. A typical example from PyTorch is shown in Listing 18.

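# PyTorch-style automatic differentiation
import torch
import torch.nn as nn

# Layers are created once so their parameters persist across calls
layer1 = nn.Linear(784, 256)
layer2 = nn.Linear(256, 10)

def neural_network(x):
    # Framework transparently tracks operations;
    # each operation is automatically tracked
    hidden = torch.relu(layer1(x))
    output = layer2(hidden)
    return output

# Illustrative setup (assumed): loss, optimizer, and a one-batch data source
loss_function = nn.CrossEntropyLoss()
params = list(layer1.parameters()) + list(layer2.parameters())
optimizer = torch.optim.SGD(params, lr=0.01)
data_loader = [(torch.randn(32, 784), torch.randint(0, 10, (32,)))]

# Training loop showing AD integration
for batch_x, batch_y in data_loader:
    optimizer.zero_grad()  # Clear previous gradients
    output = neural_network(batch_x)
    loss = loss_function(output, batch_y)
    # Framework handles all AD machinery
    loss.backward()  # Automatic backward pass
    optimizer.step()  # Parameter updates

While this code appears straightforward, it masks considerable complexity. The framework must: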

- Track all operations during the forward pass

- Build and maintain the computational graph

- Manage memory for intermediate values

- Schedule gradient computations efficiently

- Interface with hardware accelerators

This integration extends beyond basic training. Frameworks must handle complex scenarios like higher-order gradients, where we compute derivatives of derivatives, and mixed-precision training. The ability to compute second-order derivatives is demonstrated in Listing 19.

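# Computing higher-order gradients
# (assumes `model` and `input` are defined and the model is nonlinear)
with torch.set_grad_enabled(True):
    params = list(model.parameters())
    # First-order gradient computation: reduce to a scalar, and pass
    # create_graph=True so the gradients themselves stay differentiable
    output = model(input).sum()
    grad_output = torch.autograd.grad(output, params, create_graph=True)
    # Second-order gradient computation: differentiate a scalar
    # function of the first-order gradients
    grad_norm = sum(g.pow(2).sum() for g in grad_output)
    grad2_output = torch.autograd.grad(
        grad_norm, params, allow_unused=True
    )

The Systems Engineering Breakthrough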

While the mathematical foundations of automatic differentiation were established decades ago, the practical implementation in machine learning frameworks represents a significant systems engineering achievement. Understanding this perspective illuminates why automatic differentiation systems enabled the deep learning revolution.

Before automated systems, implementing gradient computation required manually deriving and coding gradients for every operation in a neural network. For a simple fully connected layer, this meant writing separate forward and backward functions, carefully tracking intermediate values, and ensuring mathematical correctness across dozens of operations. As architectures became more complex with convolutional layers, attention mechanisms, or custom operations, this manual process became error-prone and prohibitively time-consuming.
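For a sense of what that manual process looked like, here is a minimal hand-written forward and backward pair for one fully connected layer. The function names and the `cache` convention are assumptions made for illustration; the point is that every operation needed a matching, hand-derived backward rule like this one.

```python
def linear_forward(x, W, b):
    # y_i = sum_j W[i][j] * x[j] + b[i]
    y = [sum(w * x_j for w, x_j in zip(row, x)) + b_i
         for row, b_i in zip(W, b)]
    cache = (x, W)  # values the backward pass will need
    return y, cache


def linear_backward(grad_y, cache):
    x, W = cache
    # dL/dW[i][j] = dL/dy_i * x_j
    grad_W = [[g * x_j for x_j in x] for g in grad_y]
    # dL/db_i = dL/dy_i
    grad_b = list(grad_y)
    # dL/dx_j = sum_i W[i][j] * dL/dy_i
    grad_x = [sum(W[i][j] * grad_y[i] for i in range(len(W)))
              for j in range(len(x))]
    return grad_x, grad_W, grad_b


x = [1.0, 2.0]
W = [[0.5, -1.0], [2.0, 0.25]]
b = [0.0, 0.0]
y, cache = linear_forward(x, W, b)
# Treat the loss as sum(y), so dL/dy is all ones
grad_x, grad_W, grad_b = linear_backward([1.0, 1.0], cache)
print(grad_x, grad_W, grad_b)
```

Even in this small case, three separate gradient formulas must be derived and kept consistent with the forward code, which hints at how quickly the burden grows for convolutions or attention.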

The breakthrough that addressed these challenges lay not in mathematical innovation but in software engineering. Modern frameworks must handle memory management, operation scheduling, numerical stability, and optimization across diverse hardware while maintaining mathematical correctness. Consider the complexity: a single matrix multiplication requires different gradient computations depending on which inputs require gradients, tensor shapes, hardware capabilities, and memory constraints. Automatic differentiation systems handle these variations transparently, enabling researchers to focus on model architecture rather than gradient implementation details.

Beyond simplifying existing workflows, autograd systems enabled architectural innovations that would be impossible with manual gradient implementation. Modern architectures like Transformers involve hundreds of operations with complex dependencies. Computing gradients manually for complex architectural components, layer normalization, and residual connections would require months of careful derivation and debugging. Automatic differentiation systems compute these gradients correctly and efficiently, enabling rapid experimentation with novel architectures.

This systems perspective explains why deep learning accelerated dramatically after frameworks matured: not because the mathematics changed, but because software engineering finally made the mathematics practical to apply at scale. The computational graphs discussed earlier provide the infrastructure, but the automatic differentiation systems provide the intelligence to traverse these graphs correctly and efficiently.

Memory Management in Gradient Computation

The memory demands of automatic differentiation stem from a fundamental requirement: to compute gradients during the backward pass, we must remember what happened during the forward pass. This seemingly simple requirement creates interesting challenges for machine learning frameworks. Unlike traditional programs that can discard intermediate results as soon as they’re used, AD systems must carefully preserve computational history.

This necessity is illustrated in Listing 20, which shows what happens during a neural network’s forward pass.

def neural_network(x):

# Each operation creates values that must be remembered

a = layer1(x) # Must store for backward pass

b = relu(a) # Must store input to relu

c = layer2(b) # Must store for backward pass