AI Training


Purpose

Why do modern machine learning problems require new approaches to distributed computing and system architecture?

Machine learning training creates computational demands that exceed single machine capabilities, requiring distributed systems that coordinate computation across multiple devices and data centers. Training workloads have unique characteristics: massive datasets that cannot fit in memory, models with billions of parameters requiring coordinated updates, and iterative algorithms requiring continuous synchronization across distributed resources. These scale requirements create systems challenges in memory management, communication efficiency, fault tolerance, and resource scheduling that traditional systems were not designed to handle. As model complexity grows exponentially, understanding distributed training systems becomes necessary for any machine learning application of practical significance. The systems engineering principles developed for training at scale directly influence deployment architectures, cost structures, and feasibility of solutions across industries.

Learning Objectives

- Explain how mathematical operations in neural networks (matrix multiplications, activation functions, backpropagation) translate to computational and memory system requirements

- Analyze performance bottlenecks in training pipelines including data loading, memory bandwidth limitations, and compute utilization patterns

- Design training pipeline architectures integrating data preprocessing, computation passes, and parameter updates efficiently

- Apply single-machine optimization techniques including mixed-precision training, gradient accumulation, and activation checkpointing to maximize resource utilization

- Compare distributed training strategies (data parallelism, model parallelism, pipeline parallelism) and select appropriate approaches based on model characteristics and hardware constraints

- Evaluate specialized hardware platforms (GPUs, TPUs, FPGAs, ASICs) for training workloads and optimize code for specific architectural features

- Implement optimization algorithms (SGD, Adam, AdamW) within training frameworks while understanding their memory and computational implications

- Critique common training system design decisions to avoid performance pitfalls and scaling bottlenecks

Training Systems Evolution and Architecture

Training represents the most demanding phase in machine learning systems, where theoretical constructs become practical reality through computational optimization. Building upon the system design methodologies established in Chapter 2: ML Systems, data pipeline architectures explored in Chapter 6: Data Engineering, and computational frameworks examined in Chapter 7: AI Frameworks, this chapter examines how algorithmic theory, data processing, and hardware architecture converge in the iterative refinement of intelligent systems.

Training requires careful orchestration of mathematical optimization processes with distributed systems engineering principles. Contemporary training workloads impose computational requirements that exceed conventional computing paradigms: models with billions of parameters demand terabytes of memory capacity, training corpora span petabyte-scale storage systems, and gradient-based optimization algorithms require synchronized computation across thousands of processing units. These computational scales create systems engineering challenges in memory hierarchy management, inter-node communication efficiency, and resource allocation strategies that distinguish training infrastructure from general-purpose computing architectures.

The design methodologies established in preceding chapters serve as architectural foundations during the training phase. The modular system architectures from Chapter 2: ML Systems enable distributed training orchestration, the engineered data pipelines from Chapter 6: Data Engineering provide continuous training sample streams, and the computational frameworks from Chapter 7: AI Frameworks supply necessary algorithmic abstractions. Training systems integration represents where theoretical design principles meet performance engineering constraints, establishing the computational foundation for the optimization techniques investigated in Part III.

This chapter develops systems engineering foundations for scalable training infrastructure. We examine the translation of mathematical operations in parametric models into concrete computational requirements, analyze performance bottlenecks within training pipelines including memory bandwidth limitations and computational throughput constraints, and architect systems that achieve high efficiency while maintaining fault tolerance guarantees. Through exploration of single-node optimization strategies, distributed training methodologies, and specialized hardware utilization patterns, this chapter develops the systems engineering perspective needed for constructing training infrastructure capable of scaling from experimental prototypes to production-grade deployments.

This chapter uses training GPT-2 (1.5 billion parameters) as a consistent reference point to ground abstract concepts in concrete reality. GPT-2 represents an ideal teaching example because it:

- Spans the scale spectrum: Large enough to require serious optimization, small enough to train without massive infrastructure

- Has well-documented architecture: 48 transformer layers, 1600 hidden dimensions, 25 attention heads

- Exhibits all key training challenges: Memory pressure, computational intensity, data pipeline complexity

- Represents modern ML systems: Transformer-based models dominate contemporary machine learning

Transformer Architecture Primer:

GPT-2 uses a transformer architecture (detailed in Chapter 4: DNN Architectures) that processes text through self-attention mechanisms. Understanding these key computational patterns provides essential context for the training examples throughout this chapter:

- Self-attention: Computes relationships between all words in a sequence through matrix operations (Query × Key^T), producing attention scores that weight how much each word should influence others

- Multi-head attention: Parallelizes attention across multiple “heads” (GPT-2 uses 25), each learning different relationship patterns

- Transformer layers: Stack attention with feed-forward networks (GPT-2 has 48 layers), enabling hierarchical feature learning

- Key computational pattern: Dominated by large matrix multiplications (attention score calculation, feed-forward networks) that benefit from GPU parallelization

This architecture’s heavy reliance on matrix multiplication and sequential dependencies creates the specific training system challenges we explore: massive activation memory requirements, communication bottlenecks in distributed training, and opportunities for mixed-precision optimization.
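The self-attention pattern described above can be sketched in a few lines of plain Python. This is a minimal illustration for a single head, not GPT-2's implementation: it omits the causal mask, the learned projection matrices, and multi-head splitting, and it assumes the standard scaling of scores by the square root of the key dimension. The toy inputs `q`, `k`, and `v` are invented for the example.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(q, k, v):
    """Scaled dot-product attention for one head (no masking)."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]   # Key^T
    scores = matmul(q, k_t)                # (seq x seq) matrix: Query x Key^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights     # weighted sum of values

# Toy sequence of 3 tokens with 2-dimensional queries, keys, and values.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
output, weights = self_attention(q, k, v)
```

Note that `scores` has one entry per pair of tokens, so its size grows quadratically with sequence length. That intermediate matrix is one source of the activation memory pressure discussed in this chapter.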

Key GPT-2 Specifications:

- Parameters: ~1.56B (1,558,214,656 exact count; reported as 1,542M in the GPT-2 paper)

- Training Data: OpenWebText (~40GB text, ~9B tokens)

- Batch Configuration: Typically 512 effective batch size across 8-32 GPUs

- Memory Footprint: ~3GB parameters (FP16: 16-bit floating point, using 2 bytes per value vs 4 bytes for FP32), ~18GB activations (batch_size=32)

- Training Time: ~2 weeks on 32 V100 GPUs

Note on precision formats: Throughout this chapter, we reference FP32 (32-bit) and FP16 (16-bit) floating-point formats. FP16 halves memory requirements and enables faster computation on modern GPUs with Tensor Cores. Mixed-precision training (detailed in Section 1.5.4) strategically combines FP16 for most operations with FP32 for numerical stability, achieving 2× memory savings and 2-3× speedups while maintaining accuracy.
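The parameter memory figures above follow from simple arithmetic: parameter count times bytes per value. The short sketch below reproduces the ~3GB FP16 figure from the parameter count quoted in the specifications. It counts parameters only; gradients, optimizer state, and activations add to the total. The helper name `param_memory_gb` is illustrative, and gigabytes are taken as 10^9 bytes.

```python
GPT2_PARAMS = 1_558_214_656  # exact count quoted in the specifications above
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2}

def param_memory_gb(num_params, precision):
    """Memory for parameters alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_VALUE[precision] / 1e9

fp32_gb = param_memory_gb(GPT2_PARAMS, "fp32")
fp16_gb = param_memory_gb(GPT2_PARAMS, "fp16")
print(f"FP32 parameters: {fp32_gb:.2f} GB")
print(f"FP16 parameters: {fp16_gb:.2f} GB")
```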

🔄 GPT-2 Example Markers appear at strategic points where this specific model illuminates the concept under discussion. Each example provides quantitative specifications, performance tradeoffs, and concrete implementation decisions encountered in training this model.

Training Systems

The development of modern machine learning models relies on specialized computational frameworks that manage the complex process of iterative optimization. These systems differ from traditional computing infrastructures, requiring careful orchestration of data processing, gradient computation, parameter updates, and distributed coordination across potentially thousands of devices. Understanding what constitutes a training system and how it differs from general-purpose computing provides the foundation for the architectural decisions and optimization strategies that follow.

Designing effective training architectures requires recognizing that machine learning training systems represent a distinct class of computational workload with unique demands on hardware and software infrastructure. When you execute training commands in frameworks like PyTorch or TensorFlow, these systems must efficiently orchestrate repeated computations over large datasets while managing memory requirements and data movement patterns that exceed the capabilities of general-purpose computing architectures.

Training workloads exhibit three characteristics that distinguish them from traditional computing: extreme computational intensity from iterative gradient computations across massive models, substantial memory pressure from storing parameters, activations, and optimizer states simultaneously, and complex data dependencies requiring synchronized parameter updates across distributed resources. A single training run for large language models requires approximately \(10^{23}\) floating-point operations (Brown et al. 2020), memory footprints reaching terabytes when including activation storage, and coordination across thousands of devices—demands that general-purpose systems were never designed to handle.
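The order of magnitude quoted above can be checked with a common rule of thumb that is not part of the original text: training a dense transformer costs roughly 6 floating-point operations per parameter per training token. The sketch below applies that approximation, assuming GPT-3's 175 billion parameters and the roughly 300 billion training tokens reported by Brown et al. (2020). The GPT-2 line uses the token count from the specifications above for a single pass over the data. Treat the results as estimates, not measurements.

```python
def training_flops(num_params, num_tokens):
    """Approximate training compute: ~6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens

# GPT-3: 175B parameters, ~300B training tokens (assumed from Brown et al. 2020).
gpt3_flops = training_flops(175e9, 300e9)

# GPT-2: ~1.5B parameters, one pass over ~9B tokens.
gpt2_flops_per_epoch = training_flops(1.5e9, 9e9)

print(f"GPT-3 estimate: {gpt3_flops:.2e} FLOPs")
print(f"GPT-2 estimate per epoch: {gpt2_flops_per_epoch:.2e} FLOPs")
```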

Understanding why contemporary training systems evolved their current architectures requires examining how computing systems progressively adapted to increasingly demanding workloads. While training focuses on iterative optimization for learning, inference systems (detailed throughout this book) optimize for low-latency prediction serving. These represent two complementary but distinct computational paradigms. The architectural progression from general-purpose computing to specialized training systems reveals systems principles that inform modern training infrastructure design. Unlike traditional high-performance computing workloads, training systems exhibit specific characteristics that influence their design and implementation.

Computing Architecture Evolution for ML Training

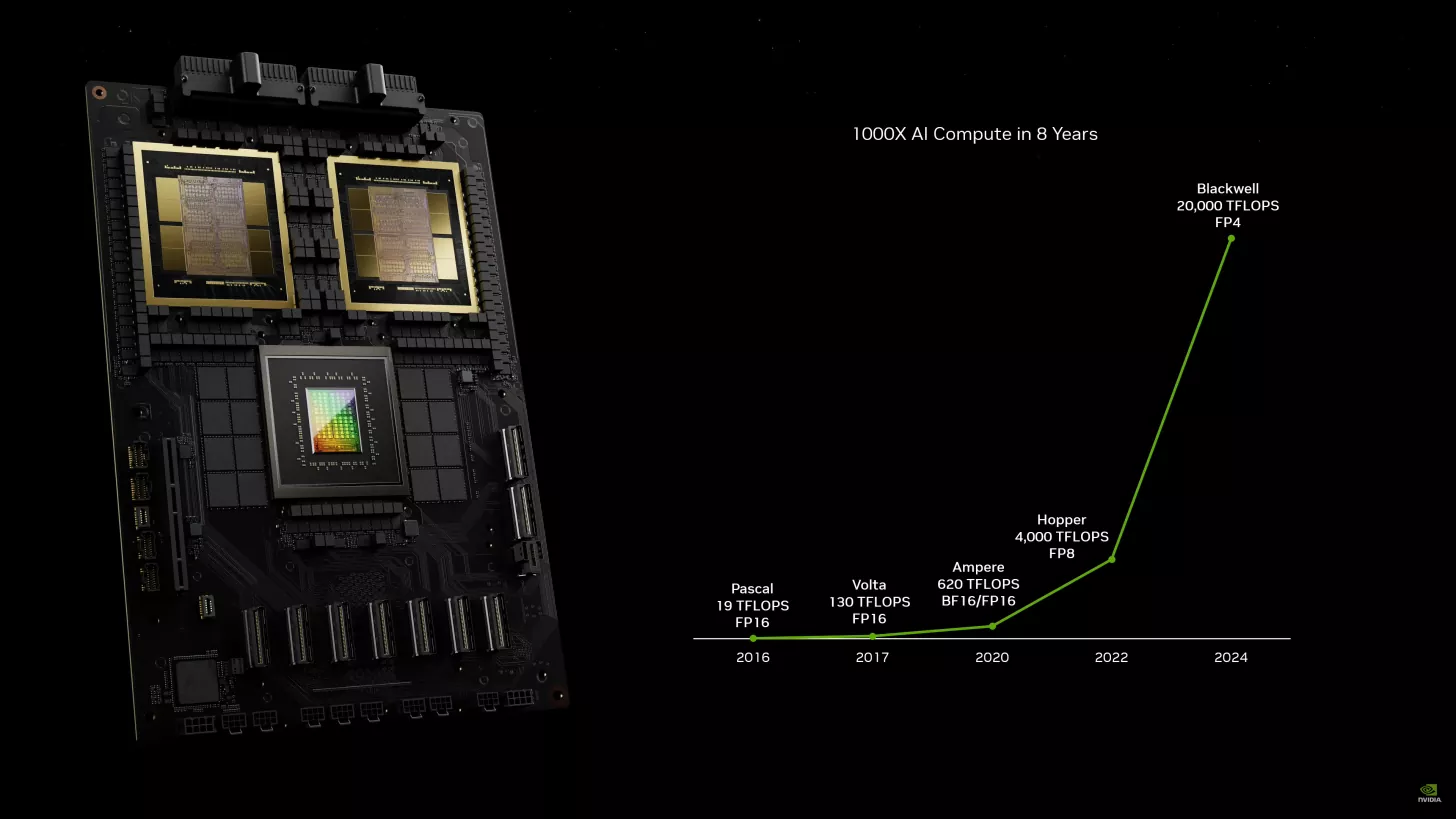

Computing system architectures have evolved through distinct generations, with each new era building upon previous advances while introducing specialized optimizations for emerging application requirements (Figure 1). This evolution parallels the development of ML frameworks and software stacks detailed in Chapter 7: AI Frameworks, which have co-evolved with hardware to enable efficient utilization of these computational resources. This progression demonstrates how hardware adaptation to application needs shapes modern machine learning systems.

Electronic computation began with the mainframe era. ENIAC¹ (1945) established the viability of electronic computation at scale, while the IBM System/360² (1964) introduced architectural principles of standardized instruction sets and memory hierarchies. These basic concepts provided the foundation for all subsequent computing systems.

1 ENIAC (Electronic Numerical Integrator and Computer): Completed in 1945 at the University of Pennsylvania and publicly unveiled in 1946, ENIAC weighed 30 tons, consumed 150kW of power, and performed 5,000 operations per second. Its 17,468 vacuum tubes required constant maintenance, but it demonstrated electronic computation could be 1,000x faster than mechanical calculators.

2 IBM System/360: Launched in 1964 as a $5 billion gamble (equivalent to $40 billion today), System/360 introduced the revolutionary concept of backward compatibility across different computer models. Its standardized instruction set architecture became the foundation for modern computing, enabling software portability that drives today’s cloud computing.

3 CDC 6600: Designed by Seymour Cray and released in 1964, the CDC 6600 achieved 3 MFLOPS (million floating-point operations per second) using innovative parallel processing with 10 peripheral processors. Costing $8 million ($65 million today), it was the world’s fastest computer until 1969 and established supercomputing as a field.

4 Connection Machine CM-5: Released by Thinking Machines in 1991, the CM-5 featured up to 16,384 processors connected by a fat-tree network, delivering over 100 GFLOPS. Its $10-50 million price tag and specialized parallel architecture made it a favorite for scientific computing but ultimately commercially unsuccessful as commodity clusters emerged.

Building upon these foundational computing principles, high-performance computing (HPC) systems (Thornton 1965) specialized for scientific computation. The CDC 6600³ and later systems like the CM-5⁴ (Thinking Machines Corporation 1992) optimized for dense matrix operations and floating-point calculations.

HPC systems implemented specific architectural features for scientific workloads: high-bandwidth memory systems for array operations, vector processing units for mathematical computations, and specialized interconnects for collective communication patterns. Scientific computing demanded emphasis on numerical precision and stability, with processors and memory systems designed for regular, predictable access patterns. The interconnects supported tightly synchronized parallel execution, enabling efficient collective operations across computing nodes.

As the demand for internet-scale processing grew, warehouse-scale computing marked the next evolutionary step. Google’s data center implementations⁵ (Barroso and Hölzle 2007) introduced new optimizations for internet-scale data processing. Unlike HPC systems focused on tightly coupled scientific calculations, warehouse computing handled loosely coupled tasks with irregular data access patterns.

5 Google Data Centers: Starting in 1998 with commodity PCs, Google pioneered warehouse-scale computing by 2003, managing over 100,000 servers across multiple facilities. By 2020, Google operated over 20 data centers consuming 12 TWh annually, equivalent to entire countries, while achieving industry-leading PUE (Power Usage Effectiveness) of 1.10 through innovative cooling.

WSC systems introduced architectural changes to support high throughput for independent tasks, with robust fault tolerance and recovery mechanisms. The storage and memory systems adapted to handle sparse data structures efficiently, moving away from the dense array optimizations of HPC. Resource management systems evolved to support multiple applications sharing the computing infrastructure, contrasting with HPC’s dedicated application execution model.

Neither HPC nor warehouse-scale systems fully addressed the unique demands of machine learning training. Each computing era optimized for distinct workload characteristics that only partially matched AI training requirements:

High-Performance Computing: Optimized for dense, floating-point heavy, tightly-coupled simulations. HPC established the foundation for high-bandwidth interconnects and parallel numerical computation essential for AI training, but focused on regular, predictable access patterns unsuited to the dynamic memory requirements of neural network training.

Warehouse-Scale Computing: Optimized for sparse, integer-heavy, loosely-coupled data processing. WSC demonstrated fault tolerance and massive scale essential for production AI systems, but emphasized independent parallel tasks that contrasted with the synchronized gradient updates required in distributed training.

AI Training: Presents the unique challenge of requiring both dense FP16/FP32 computation (like HPC) and massive data scale (like WSC), while adding the complexity of iterative, synchronized gradient updates. This unique combination of requirements—intensive parameter updates, complex memory access patterns, and coordinated distributed computation—drove the development of today’s specialized AI hypercomputing systems.

The success of AlexNet⁶ (Krizhevsky, Sutskever, and Hinton 2017) in 2012 demonstrated that existing systems could not efficiently handle this convergence of requirements. Neural network training demanded new approaches to memory management and inter-device communication that neither HPC’s tightly-coupled scientific focus nor warehouse computing’s loosely-coupled data processing had addressed.

6 AlexNet: Developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, AlexNet won ImageNet 2012 with 15.3% error rate (vs. 26.2% for second place), using two GTX 580 GPUs for 5-6 days of training. This breakthrough launched the deep learning revolution and demonstrated that GPUs could accelerate neural network training by 10-50x over CPUs.

7 NVIDIA AI GPUs: From the GTX 580 (1.58 TFLOPS FP32) used for AlexNet in 2012 to the H100 introduced in 2022 (roughly 989 TFLOPS of dense FP16/BF16 Tensor Core throughput, about twice that with structured sparsity), NVIDIA GPUs increased peak AI throughput by over 300x in a decade. The H100 costs $25,000-40,000 but enables training models that would be impossible on older hardware, demonstrating specialized silicon’s critical role in AI advancement.

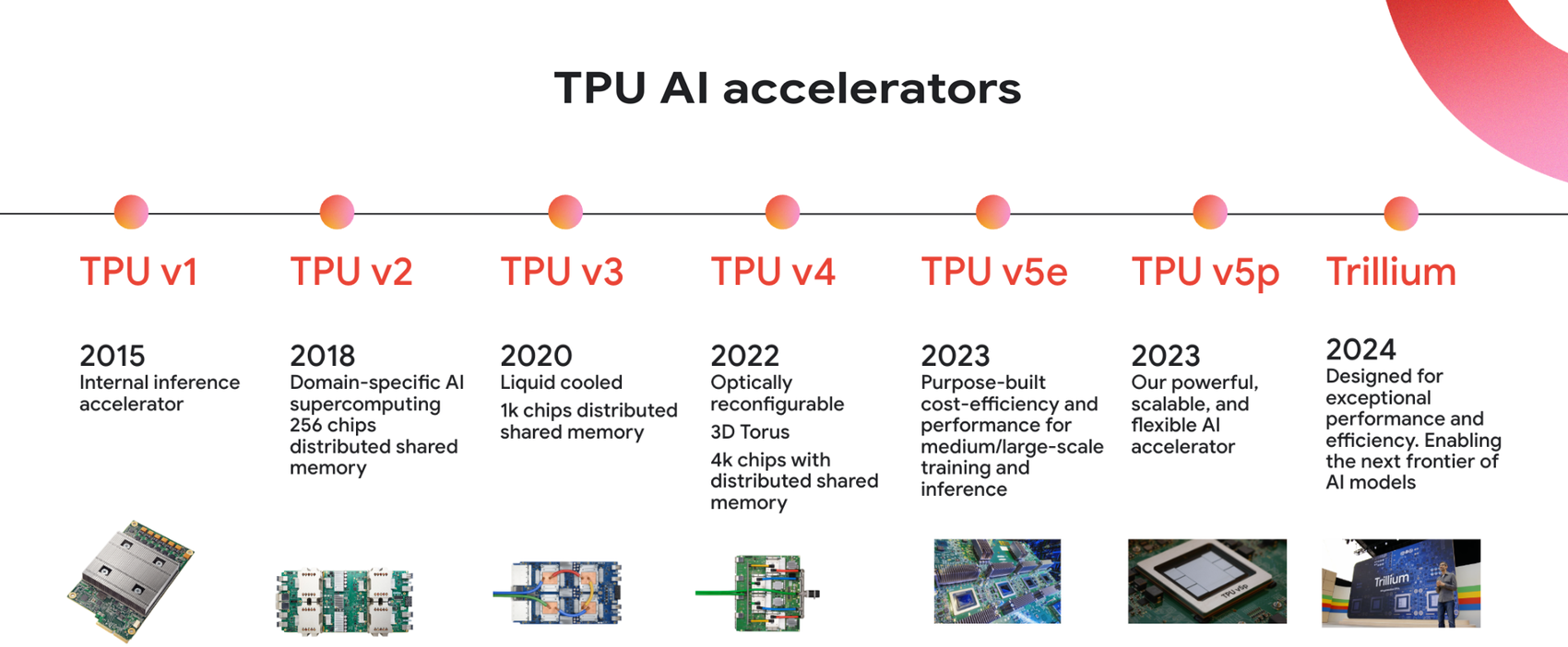

8 Google TPUs: First deployed internally in 2015, TPUs deliver 15-30x better price-performance than GPUs for specific AI workloads. The TPU v4 (2021) achieves 275 TFLOPS (bfloat16) with 32GB memory per chip, while TPU pods can scale to 1 exaFLOP. Google’s multibillion-dollar investment in custom silicon has enabled training models like PaLM (540B parameters) cost-effectively.

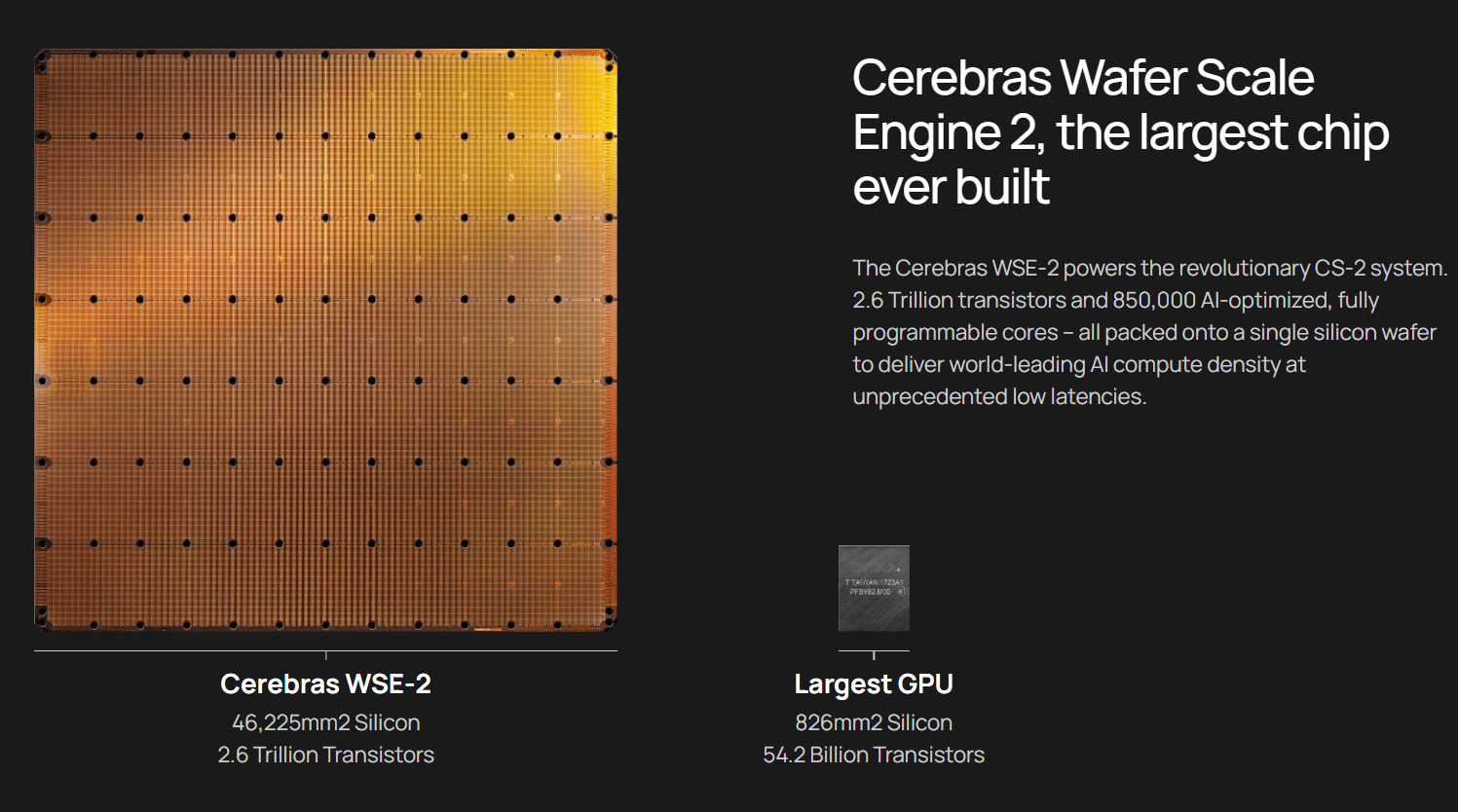

This need for specialization ushered in the AI hypercomputing era, beginning in 2015, which represents the latest step in this evolutionary chain. NVIDIA GPUs⁷ and Google TPUs⁸ introduced hardware designs specifically optimized for neural network computations, moving beyond adaptations of existing architectures. These systems implemented new approaches to parallel processing, memory access, and device communication to handle the distinct patterns of model training. The resulting architectures balanced the numerical precision needs of scientific computing with the scale requirements of warehouse systems, while adding specialized support for the iterative nature of neural network optimization. The comprehensive design principles, architectural details, and optimization strategies for these specialized training accelerators are explored in detail in Chapter 11: AI Acceleration, while this chapter focuses on training system orchestration and pipeline optimization.

This architectural progression illuminates why traditional computing systems proved insufficient for neural network training. As shown in Table 1, while HPC systems provided the foundation for parallel numerical computation and warehouse-scale systems demonstrated distributed processing at scale, neither fully addressed the computational patterns of model training. Modern neural networks combine intensive parameter updates, complex memory access patterns, and coordinated distributed computation in ways that demanded new architectural approaches.

Understanding these distinct characteristics and their evolution from previous computing eras explains why modern AI training systems require dedicated hardware features and optimized system designs. This historical context provides the foundation for examining machine learning training system architectures in detail.

| Era | Primary Workload | Memory Patterns | Processing Model | System Focus |
|---|---|---|---|---|
| Mainframe | Sequential batch processing | Simple memory hierarchy | Single instruction stream | General-purpose computation |
| HPC | Scientific simulation | Regular array access | Synchronized parallel | Numerical precision, collective operations |
| Warehouse-scale | Internet services | Sparse, irregular access | Independent parallel tasks | Throughput, fault tolerance |
| AI Hypercomputing | Neural network training | Parameter-heavy, mixed access | Hybrid parallel, distributed | Training optimization, model scale |

Training Systems in the ML Development Lifecycle

Modern machine learning models are developed on specialized systems for training and optimization. These systems combine hardware and software components that must efficiently handle massive datasets while maintaining numerical precision and computational stability. Despite their rapid evolution and diverse implementations, training systems share common characteristics and requirements that distinguish them from traditional computing infrastructures.

These training systems provide the core infrastructure required for developing predictive models. They execute the mathematical optimization of model parameters, converting input data into computational representations for tasks such as pattern recognition, language understanding, and decision automation. The training process involves systematic iteration over datasets to minimize error functions and achieve optimal model performance.

Training systems function as integral components within the broader machine learning pipeline, building upon the foundational concepts introduced in Chapter 1: Introduction. They interface with preprocessing frameworks that standardize and transform raw data, while connecting to deployment architectures that enable model serving. The computational efficiency and reliability of training systems directly influence the development cycle, from initial experimentation through model validation to production deployment. This end-to-end perspective connects training optimization with the broader AI system lifecycle considerations explored in Chapter 13: ML Operations.

This operational scope has expanded with recent architectural advances. The emergence of transformer architectures⁹ and large-scale models has introduced new requirements for training systems. Current implementations must efficiently process petabyte-scale datasets, orchestrate distributed training across multiple accelerators, and optimize memory utilization for models containing billions of parameters. The management of data parallelism¹⁰, model parallelism¹¹, and inter-device communication presents technical challenges in modern training architectures. These distributed system complexities motivate the specialized AI workflow management tools (Chapter 5: AI Workflow) that automate many aspects of large-scale training orchestration.

9 Transformer Architectures: Detailed in Chapter 4: DNN Architectures. Transformer models use attention mechanisms to process sequences without recurrence, enabling parallel computation and capturing long-range dependencies more effectively than RNNs.

10 Data Parallelism Scaling: Linear scaling works until communication becomes the bottleneck, typically around 64-128 GPUs for most models. BERT-Large typically achieves 60-80x speedup on 128 GPUs (45-65% efficiency), while GPT-3 required 1,024 GPUs with only 45% efficiency. The key constraint is that AllReduce communication cost grows with the number of devices: in a ring AllReduce, the number of communication steps scales as O(n) even though the data each device sends stays roughly constant, so large jobs require high-bandwidth, low-latency interconnects like InfiniBand.

11 Model Parallelism Memory Scaling: Enables training models too large for single GPUs. GPT-3 (175B parameters) needs 350GB for weights in FP16 (700GB in FP32), far exceeding any single GPU’s 80GB maximum. Model parallelism often achieves only 20-60% compute efficiency due to sequential dependencies between model partitions and communication overhead between devices.
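The figures in the two notes above can be checked with simple arithmetic. The sketch below computes scaling efficiency as speedup divided by device count, and a lower bound on the number of 80GB devices needed just to hold GPT-3's weights. The function names are illustrative, and the device count ignores gradients, optimizer state, and activations, so real deployments need considerably more.

```python
import math

def scaling_efficiency(speedup, num_devices):
    """Fraction of ideal linear speedup actually achieved."""
    return speedup / num_devices

def min_devices_for_weights(num_params, bytes_per_param, device_memory_gb):
    """Lower bound on devices needed to hold the weights alone."""
    weights_gb = num_params * bytes_per_param / 1e9
    return math.ceil(weights_gb / device_memory_gb)

# BERT-Large on 128 GPUs: 60-80x speedup.
bert_low = scaling_efficiency(60, 128)
bert_high = scaling_efficiency(80, 128)

# GPT-3 (175B parameters) on devices with 80GB of memory each.
gpt3_fp16_devices = min_devices_for_weights(175e9, 2, 80)
gpt3_fp32_devices = min_devices_for_weights(175e9, 4, 80)

print(f"BERT-Large efficiency: {bert_low:.0%} to {bert_high:.0%}")
print(f"GPT-3 weights need at least {gpt3_fp16_devices} devices in FP16, "
      f"{gpt3_fp32_devices} in FP32")
```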

Training systems also impact the operational considerations of machine learning development. System design must address multiple technical constraints: computational throughput, energy consumption, hardware compatibility, and scalability with increasing model complexity. While this chapter focuses on the computational and architectural aspects of training systems, energy efficiency and sustainability considerations are explored in Chapter 18: Sustainable AI. These factors determine the technical feasibility and operational viability of machine learning implementations across different scales and applications.

System Design Principles for Training Infrastructure

Training implementation requires a systems perspective. The practical execution of training models is deeply tied to system design. Training is not merely a mathematical optimization problem; it is a system-driven process that requires careful orchestration of computing hardware, memory, and data movement.

Training workflows consist of interdependent stages: data preprocessing, forward and backward passes, and parameter updates, extending the basic neural network concepts from Chapter 3: Deep Learning Primer. Each stage imposes specific demands on system resources. The data preprocessing stage, for instance, relies on storage and I/O subsystems to provide computing hardware with continuous input. The quality and reliability of this input data are critical—data validation, corruption detection, feature engineering, schema enforcement, and pipeline reliability strategies are covered in Chapter 6: Data Engineering. While Chapter 6: Data Engineering focuses on ensuring data quality and consistency, this chapter examines the systems-level efficiency of data movement, transformation throughput, and delivery to computational resources during training.

While traditional processors like CPUs handle many training tasks effectively, increasingly complex models have driven the adoption of hardware accelerators. Graphics Processing Units (GPUs) and specialized machine learning processors can process mathematical operations in parallel, offering substantial speedups for matrix-heavy computations. These accelerators, alongside CPUs, handle operations like gradient computation and parameter updates, enabling the training of hierarchical representations whose theoretical foundations are explored in Chapter 4: DNN Architectures. The performance of these stages depends on how well the system manages bottlenecks such as memory bandwidth and communication latency.

These interconnected workflow stages reveal how system architecture directly impacts training efficiency. System constraints often dictate the performance limits of training workloads. Modern accelerators are frequently bottlenecked by memory bandwidth, as data movement between memory hierarchies can be slower and more energy-intensive than the computations themselves (Patterson and Hennessy 2021a). In distributed setups, synchronization across devices introduces additional latency, with the performance of interconnects (e.g., NVLink, InfiniBand) playing an important role.
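One way to reason about whether an operation is limited by memory bandwidth is to compare its arithmetic intensity (operations per byte moved) with the ratio of peak compute to peak bandwidth on the target hardware. The sketch below does this for a matrix multiplication. The accelerator figures are hypothetical round numbers chosen for illustration, and the byte count assumes each matrix crosses the memory interface exactly once, which real kernels only approximate.

```python
def matmul_arithmetic_intensity(m, n, k, bytes_per_value=2):
    """FLOPs per byte moved for C = A @ B with A (m x k) and B (k x n)."""
    flops = 2 * m * n * k  # one multiply and one add per term
    # Read A and B once, write C once (idealized data movement).
    bytes_moved = bytes_per_value * (m * k + k * n + m * n)
    return flops / bytes_moved

def is_memory_bound(intensity, peak_flops, peak_bandwidth):
    """Memory-bound when intensity is below the machine's FLOPs-per-byte ratio."""
    return intensity < peak_flops / peak_bandwidth

# Hypothetical accelerator: 100 TFLOP/s peak compute, 1 TB/s memory bandwidth.
PEAK_FLOPS = 100e12
PEAK_BANDWIDTH = 1e12

large = matmul_arithmetic_intensity(4096, 4096, 4096)   # large square matmul
skinny = matmul_arithmetic_intensity(1, 4096, 4096)     # single-row input

print(f"large:  {large:.1f} FLOPs/byte, memory-bound: "
      f"{is_memory_bound(large, PEAK_FLOPS, PEAK_BANDWIDTH)}")
print(f"skinny: {skinny:.2f} FLOPs/byte, memory-bound: "
      f"{is_memory_bound(skinny, PEAK_FLOPS, PEAK_BANDWIDTH)}")
```

Under these assumptions the large square multiplication has enough reuse to keep the compute units busy, while the single-row case moves almost as many bytes as it performs operations and is limited by bandwidth.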

Optimizing training workflows overcomes these limitations through systematic approaches detailed in Section 1.5.1. Techniques like overlapping computation with data loading, mixed-precision training (Micikevicius et al. 2017), and efficient memory allocation address the primary bottlenecks that constrain training performance: data movement, compute throughput, and memory capacity. These low-level optimizations complement the higher-level model compression strategies covered in Chapter 10: Model Optimizations, creating an integrated approach to training efficiency.
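Overlapping computation with data loading can be illustrated with a bounded queue filled by a background thread. This is a minimal sketch using only the Python standard library. `load_batch` and `train_step` are hypothetical stand-ins for real I/O and real training work, and production frameworks typically use worker processes rather than a single thread.

```python
import queue
import threading

def load_batch(index):
    """Stand-in for disk reads and preprocessing (hypothetical loader)."""
    return [index * 10 + i for i in range(4)]

def prefetching_loader(num_batches, prefetch_depth=2):
    """Yield batches while a background thread prepares the next ones."""
    buffer = queue.Queue(maxsize=prefetch_depth)
    sentinel = object()  # marks the end of the stream

    def producer():
        for index in range(num_batches):
            buffer.put(load_batch(index))  # blocks when the buffer is full
        buffer.put(sentinel)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        batch = buffer.get()
        if batch is sentinel:
            return
        yield batch

def train_step(batch):
    """Stand-in for the forward pass, backward pass, and parameter update."""
    return sum(batch)

losses = [train_step(batch) for batch in prefetching_loader(num_batches=3)]
```

The bounded queue is the key design choice: it lets the loader run ahead of the training loop by up to `prefetch_depth` batches without letting buffered data grow without limit.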

Systems thinking extends beyond infrastructure optimization to design decisions. System-level constraints often guide the development of new model architectures and training approaches. The hardware-software co-design principles discussed in Chapter 11: AI Acceleration demonstrate how understanding system capabilities can inspire entirely new architectural innovations. For example, memory limitations have motivated research into more efficient neural network architectures (M. X. Chen et al. 2018), while communication overhead in distributed systems has influenced the design of optimization algorithms. These adaptations demonstrate how practical system considerations shape the evolution of machine learning approaches within given computational bounds.

12 Transformer Training: Large transformer models like GPT and BERT require specialized training techniques covered in Chapter 4: DNN Architectures, including attention computation optimization and sequence parallelism strategies.

For example, training large Transformer models¹² requires partitioning data and model parameters across multiple devices. This introduces synchronization challenges, particularly during gradient updates. Communication libraries such as NVIDIA’s Collective Communications Library (NCCL) enable efficient gradient sharing, providing the foundation for distributed training optimization techniques. The benchmarking methodologies in Chapter 12: Benchmarking AI provide systematic approaches for evaluating these distributed training performance characteristics. These examples illustrate how system-level considerations influence the feasibility and efficiency of modern training workflows.
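To make gradient sharing concrete, the sketch below simulates a ring all-reduce in a single Python process. It illustrates the communication pattern only and does not use or mirror NCCL's API. Workers are modeled as lists, each "send" is a list copy, and the gradient length is assumed to divide evenly by the number of workers.

```python
def ring_allreduce(grads):
    """Sum equal-length gradient lists across workers over a simulated ring."""
    n = len(grads)
    size = len(grads[0])
    assert size % n == 0, "sketch assumes the gradient length divides evenly"
    chunk = size // n
    data = [list(g) for g in grads]  # per-worker working copy

    def get(worker, c):
        return data[worker][c * chunk:(c + 1) * chunk]

    # Phase 1 (reduce-scatter): after n-1 steps, worker w holds the full
    # sum for chunk (w + 1) % n.
    for step in range(n - 1):
        sends = [((w - step) % n, get(w, (w - step) % n)) for w in range(n)]
        for w, (c, payload) in enumerate(sends):
            dst = (w + 1) % n
            for j, value in enumerate(payload):
                data[dst][c * chunk + j] += value

    # Phase 2 (all-gather): circulate the completed chunks around the ring.
    for step in range(n - 1):
        sends = [((w + 1 - step) % n, get(w, (w + 1 - step) % n)) for w in range(n)]
        for w, (c, payload) in enumerate(sends):
            dst = (w + 1) % n
            data[dst][c * chunk:(c + 1) * chunk] = payload
    return data

worker_grads = [
    [1, 2, 3, 4, 5, 6],
    [10, 20, 30, 40, 50, 60],
    [100, 200, 300, 400, 500, 600],
]
summed = ring_allreduce(worker_grads)
averaged = [[value / len(worker_grads) for value in row] for row in summed]
```

Each worker sends one chunk per step for 2(n-1) steps, so the data it transmits stays close to twice the gradient size regardless of how many workers participate, while the number of steps grows with the ring length.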

Mathematical Foundations

The systems perspective established above reveals why understanding the mathematical operations at the heart of training is essential. These operations are not abstract concepts but concrete computations that dictate every aspect of training system design. The computational characteristics of neural network mathematics directly determine hardware requirements, memory architectures, and parallelization constraints. When system architects choose GPUs over CPUs, design memory hierarchies, or select distributed training strategies, they are responding to the specific demands of these mathematical operations.

The specialized training systems discussed above are designed specifically to execute these operations efficiently. Understanding these mathematical foundations is essential because they directly determine system requirements: the type of operations dictates hardware specialization needs (why matrix multiplication units dominate modern accelerators), the memory access patterns influence cache design (why activation storage becomes a bottleneck), and the computational dependencies shape parallelization strategies (why some operations cannot be trivially distributed). The distinction drawn earlier between AI hypercomputing and HPC systems emerges from differences in the mathematical operations each must perform.

Training systems must execute three categories of operations repeatedly. First, forward propagation computes predictions through matrix multiplications and activation functions. Second, gradient computation via backpropagation calculates parameter updates using stored activations and the chain rule. Third, parameter updates apply gradients using optimization algorithms that maintain momentum and adaptive learning rate state. Each category exhibits distinct computational patterns and system requirements that training architectures must accommodate.
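The three categories can be seen in miniature in the following sketch, which trains a one-feature linear model with mean squared error and a momentum update. It is a toy illustration in plain Python, not how a framework implements training. The data, learning rate, and momentum coefficient are arbitrary choices made for the example.

```python
def forward(w, b, xs):
    """Forward propagation: predictions for a one-feature linear model."""
    return [w * x + b for x in xs]

def backward(xs, ys, preds):
    """Gradients of mean squared error with respect to w and b (chain rule)."""
    n = len(xs)
    grad_w = sum(2 * (p - y) * x for x, y, p in zip(xs, ys, preds)) / n
    grad_b = sum(2 * (p - y) for y, p in zip(ys, preds)) / n
    return grad_w, grad_b

def momentum_update(param, grad, velocity, lr=0.05, beta=0.9):
    """Parameter update with momentum; the velocity is the optimizer state."""
    velocity = beta * velocity + grad
    return param - lr * velocity, velocity

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b, vw, vb = 0.0, 0.0, 0.0, 0.0

for _ in range(500):
    preds = forward(w, b, xs)            # 1. forward propagation
    gw, gb = backward(xs, ys, preds)     # 2. gradient computation
    w, vw = momentum_update(w, gw, vw)   # 3. parameter update
    b, vb = momentum_update(b, gb, vb)

print(f"w = {w:.4f}, b = {b:.4f}")
```

Even at this scale the system-level pattern is visible: the backward pass needs values saved from the forward pass, and the optimizer carries one extra state value per parameter between iterations.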

The computational characteristics of these operations directly inform the system design decisions discussed previously. Matrix multiplications dominate forward and backward passes, accounting for 60-90% of training time (He et al. 2016), which explains why specialized matrix units (GPU tensor cores, TPU systolic arrays) became central to training hardware. This computational dominance shapes modern training architectures, from hardware design choices to software optimization strategies. Activation storage for gradient computation creates memory pressure proportional to batch size and network depth, motivating the memory hierarchies and optimization techniques like gradient checkpointing we will explore. The iterative dependencies between forward passes, gradient computations, and parameter updates prevent arbitrary parallelization, constraining the distributed training strategies available for scaling. Understanding these mathematical operations and their system-level implications provides the foundation for understanding how modern training systems achieve efficiency.

Neural Network Computation

Neural network training consists of repeated matrix operations and nonlinear transformations. These operations, while conceptually simple, create the system-level challenges that dominate modern training infrastructure. Foundational work, including the introduction of backpropagation by Rumelhart, Hinton, and Williams (1986) and the development of efficient matrix computation libraries such as BLAS (Dongarra et al. 1988), laid the groundwork for modern training architectures.

Mathematical Operations in Neural Networks

At the heart of a neural network is the process of forward propagation, which in its simplest case involves two primary operations: matrix multiplication and the application of an activation function. Matrix multiplication forms the basis of the linear transformation in each layer of the network, and the activation function then applies a nonlinearity to the result. Together, these two operations define how information flows through each layer of a neural network.

At layer \(l\), the computation can be described as: \[ A^{(l)} = f\left(W^{(l)} A^{(l-1)} + b^{(l)}\right) \] Where:

- \(A^{(l-1)}\) represents the activations from the previous layer (or the input layer for the first layer),

- \(W^{(l)}\) is the weight matrix at layer \(l\), which contains the parameters learned by the network,

- \(b^{(l)}\) is the bias vector for layer \(l\),

- \(f(\cdot)\) is the activation function applied element-wise (e.g., ReLU, sigmoid) to introduce non-linearity.
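
As a minimal sketch of the layer equation above, the following pure-Python code computes \(A^{(l)} = f(W^{(l)} A^{(l-1)} + b^{(l)})\) for one small layer, with ReLU standing in for \(f\). The helper names (`matmul`, `dense_forward`) and the example values are illustrative assumptions; real training systems delegate this work to optimized libraries.

```python
def matmul(W, A):
    """Multiply W (m x k) by A (k x n); both are lists of rows."""
    k, n = len(A), len(A[0])
    return [[sum(row[p] * A[p][j] for p in range(k)) for j in range(n)]
            for row in W]


def relu(x):
    """Element-wise activation f(x) = max(0, x)."""
    return max(0.0, x)


def dense_forward(W, A_prev, b, f=relu):
    """Compute f(W A_prev + b), adding bias b[i] to every entry of row i."""
    Z = matmul(W, A_prev)
    return [[f(z + b[i]) for z in row] for i, row in enumerate(Z)]


# Two units, two input features, two examples (each column is one example).
W = [[1.0, -2.0], [0.5, 1.0]]
A_prev = [[1.0, 3.0], [2.0, -1.0]]
b = [0.5, -1.0]
A = dense_forward(W, A_prev, b)
print(A)
```

Storing examples as columns mirrors the \(W A\) ordering of the equation; many frameworks use the transposed layout, which changes the code but not the arithmetic.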

Matrix Operations

Translating these mathematical operations into system requirements requires examining the computational patterns in neural networks, which revolve around several types of matrix operations. Tracing these operations and their evolution reveals why specific system designs and optimizations emerged in machine learning training systems.

Dense Matrix-Matrix Multiplication

Building on the matrix multiplication dominance established above, the evolution of these computational patterns has driven both algorithmic and hardware innovations. Early neural network implementations relied on standard CPU-based linear algebra libraries, but the scale of modern training demanded specialized optimizations. From Strassen’s algorithm13, which reduced the naive \(O(n^3)\) complexity to approximately \(O(n^{2.81})\) (Strassen 1969), to contemporary hardware-accelerated libraries like cuBLAS, these innovations have continually pushed the limits of computational efficiency.

13 Strassen’s Algorithm: Developed by Volker Strassen in 1969, this breakthrough reduced matrix multiplication from O(n³) to O(n^2.807) by using clever algebraic tricks with 7 multiplications instead of 8. While theoretically faster, it’s only practical for matrices larger than 500×500 due to overhead. Modern implementations in libraries like Intel MKL switch between algorithms based on matrix size, demonstrating how theoretical advances require careful engineering for practical impact.

This computational dominance has driven system-level optimizations. Modern systems implement blocked matrix computations for parallel processing across multiple units. As neural architectures grew in scale, these multiplications began to demand significant memory resources, since weight matrices and activation matrices must both remain accessible for the backward pass during training. Hardware designs adapted to optimize for these dense multiplication patterns while managing growing memory requirements.
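
The blocked computation mentioned above can be sketched in a few lines. This is an illustrative pure-Python version, not how cuBLAS or any particular library implements it: the function names and the tile size of 2 are assumptions chosen for readability. The idea is that each tile of the output is built from small tiles of the inputs, so the working set at any moment can fit in fast memory.

```python
def naive_matmul(A, B):
    """Reference triple-loop product of A (n x k) and B (k x m)."""
    n, k, m = len(A), len(B), len(B[0])
    return [[sum(A[i][p] * B[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def blocked_matmul(A, B, tile=2):
    """Same product, visiting the operands one tile at a time."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):          # tile of output rows
        for j0 in range(0, m, tile):      # tile of output columns
            for p0 in range(0, k, tile):  # tile along the shared dimension
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = C[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += A[i][p] * B[p][j]
                        C[i][j] = acc
    return C


A = [[float(i + j) for j in range(5)] for i in range(4)]
B = [[float(i * j + 1) for j in range(3)] for i in range(5)]
print(blocked_matmul(A, B) == naive_matmul(A, B))
```

The outer two tile loops are independent of one another, which is what lets hardware assign different output tiles to different processing units.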

Each GPT-2 layer performs attention computations that exemplify dense matrix multiplication demands. For one attention layer (20 heads of dimension 64) with batch_size=32, sequence_length=1024, hidden_dim=1280:

Query, Key, Value Projections (3 separate matrix multiplications): \[ \text{FLOPS} = 3 \times (\text{batch} \times \text{seq} \times \text{hidden} \times \text{hidden}) \] \[ = 3 \times (32 \times 1024 \times 1280 \times 1280) = 161 \text{ billion FLOPS} \]

Attention Score Computation (Q × K^T): \[ \text{FLOPS} = \text{batch} \times \text{heads} \times \text{seq} \times \text{seq} \times \text{hidden/heads} \] \[ = 32 \times 20 \times 1024 \times 1024 \times 64 = 42.9 \text{ billion FLOPS} \]

Computation Scale

- Total for one attention layer: ~204B FLOPS forward pass

- With 48 layers in GPT-2: ~9.8 trillion FLOPS per training step

- At 50K training steps: ~490 petaFLOPS total training computation

System Implication: A V100 GPU (125 TFLOPS peak FP16 with Tensor Cores, 28 TFLOPS without) would require roughly 78 ms just for the attention computations per step at 100% utilization (9.8 trillion operations ÷ 125 TFLOPS). Actual training steps take 180 to 220ms, requiring 8 to 32 GPUs to achieve this throughput.
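
The arithmetic in this example can be reproduced with a short script. It is a sketch that follows the text's own counting convention (one operation per multiply-accumulate) and its stated configuration; the variable names are illustrative.

```python
# Configuration taken from the worked example above.
batch, seq, hidden, heads, layers = 32, 1024, 1280, 20, 48
training_steps = 50_000
v100_peak_ops_per_s = 125e12  # peak FP16 Tensor Core rate quoted in the text

# Query, key, and value projections: three (batch*seq x hidden) @ (hidden x hidden).
qkv_ops = 3 * batch * seq * hidden * hidden

# Attention scores Q @ K^T, summed over all heads.
score_ops = batch * heads * seq * seq * (hidden // heads)

per_layer = qkv_ops + score_ops
per_step = per_layer * layers
total = per_step * training_steps

# Lower bound on time per step if the GPU ran at 100% of peak.
ideal_seconds = per_step / v100_peak_ops_per_s

print(f"QKV projections : {qkv_ops / 1e9:.1f} billion")
print(f"Attention scores: {score_ops / 1e9:.1f} billion")
print(f"Per layer       : {per_layer / 1e9:.1f} billion")
print(f"Per step        : {per_step / 1e12:.2f} trillion")
print(f"Total training  : {total / 1e15:.0f} peta-operations")
print(f"Ideal step time : {ideal_seconds * 1e3:.1f} ms")
```

Changing `seq` shows how the score term grows quadratically with sequence length while the projection term grows only linearly.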

Matrix-Vector Operations

Beyond matrix-matrix operations, matrix-vector multiplication became essential with the introduction of normalization techniques in neural architectures. Although computationally simpler than matrix-matrix multiplication, these operations present system challenges. They exhibit lower hardware utilization due to their limited parallelization potential. This characteristic influences hardware design and model architecture decisions, particularly in networks processing sequential inputs or computing layer statistics.

Batched Operations

Recognizing the limitations of matrix-vector operations, the introduction of batching14 transformed matrix computation in neural networks. By processing multiple inputs simultaneously, training systems convert matrix-vector operations into more efficient matrix-matrix operations. This approach improves hardware utilization but increases memory demands for storing intermediate results. Modern implementations must balance batch sizes against available memory, leading to specific optimizations in memory management and computation scheduling.

14 Batching in Neural Networks: Unlike traditional programming where data is processed one item at a time, ML systems process multiple examples simultaneously to maximize GPU utilization. A single example might achieve only 5-10% GPU utilization, while batches of 32-256 can reach 80-95%. This shift from scalar to tensor operations explains why ML systems require different programming patterns and hardware optimizations than traditional applications.
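
One way to see why batching improves utilization is to estimate arithmetic intensity, the number of floating-point operations performed per byte moved. The sketch below uses a deliberately idealized model in which every operand is read or written exactly once; the function name, the matrix size of 4096, and the 4-byte values are assumptions for illustration, not measurements.

```python
def arithmetic_intensity(n, batch, bytes_per_value=4):
    """FLOPs per byte for an (n x n) weight matrix applied to `batch` vectors.

    Idealized model: weights, inputs, and outputs each cross memory once.
    """
    flops = 2 * n * n * batch                 # one multiply and one add per weight per example
    values_moved = n * n + 2 * n * batch      # weights + inputs + outputs
    return flops / (values_moved * bytes_per_value)


single = arithmetic_intensity(4096, batch=1)     # matrix-vector product
batched = arithmetic_intensity(4096, batch=256)  # matrix-matrix product

print(f"batch=1  : {single:.2f} FLOPs/byte")
print(f"batch=256: {batched:.2f} FLOPs/byte")
```

In this model the weight matrix is loaded once and reused for every example in the batch, so intensity rises roughly in proportion to batch size until the input and output traffic starts to dominate.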

Hardware accelerators like Google’s TPU (Jouppi et al. 2017) reflect this evolution, incorporating specialized matrix units and memory hierarchies for these diverse multiplication patterns. These hardware adaptations enable training of large-scale models like GPT-3 (Brown et al. 2020) through efficient handling of varied matrix operations.

The matrix operations described above directly explain modern training hardware architecture. GPUs dominate training because:

- Massive parallelism: Matrix multiplication’s independent element calculations map perfectly to GPU’s thousands of cores (NVIDIA A100: 6,912 CUDA cores)

- Specialized hardware units: Tensor Cores accelerate matrix operations by 10-20× through dedicated hardware for the dominant workload

- Memory bandwidth optimization: Blocked matrix computation patterns enable efficient use of GPU memory hierarchy (L1/L2 cache → shared memory → global memory)

When GPT-2 examples later show why V100 GPUs achieve 2.4× speedup with mixed precision, this acceleration comes from Tensor Cores executing the matrix multiplications we just analyzed. Understanding matrix operation characteristics is a prerequisite for appreciating why pipeline optimizations like mixed-precision training provide such substantial benefits.

Activation Functions

In Chapter 3: Deep Learning Primer, we established that activation functions (sigmoid, tanh, ReLU, and softmax) provide the nonlinearity essential for neural networks to learn complex patterns. We examined their mathematical properties: sigmoid’s \((0,1)\) bounded output, tanh’s zero-centered \((-1,1)\) range, ReLU’s gradient flow advantages, and softmax’s probability distributions. Recall from the activation function figure in that chapter how each function transforms inputs differently, with distinct implications for gradient behavior and learning dynamics.

While activation functions are applied element-wise and contribute only 5-10% of total computation time compared to matrix operations, their implementation characteristics significantly impact training system performance. The question facing ML systems engineers is not what activation functions do mathematically—that foundation is established—but rather how to implement them efficiently at scale. Why does ReLU train 3× faster than sigmoid on CPUs but show different relative performance on GPUs? How do hardware accelerators optimize these operations? What memory access patterns do different activation functions create during backpropagation?

This section examines activation functions from a systems perspective, analyzing computational costs, hardware implementation strategies, and performance trade-offs that determine real-world training efficiency. Understanding these practical constraints enables informed architectural decisions when designing training systems for specific hardware environments.

Benchmarking Activation Functions

Activation functions in neural networks significantly impact both mathematical properties and system-level performance. The selection of an activation function directly influences training time, model scalability, and hardware efficiency through three primary factors: computational cost, gradient behavior, and memory usage.

Benchmarking common activation functions on an Apple M2 single-threaded CPU reveals meaningful performance differences, as illustrated in Figure 2. The data demonstrates that Tanh and ReLU execute more efficiently than Sigmoid on CPU architectures, making them particularly suitable for real-time applications and large-scale systems.

While these benchmark results provide valuable insights, they represent CPU-only performance without hardware acceleration. In production environments, modern hardware accelerators like GPUs can substantially alter the relative performance characteristics of activation functions. System architects must therefore consider their specific hardware environment and deployment context when evaluating computational efficiency.

Recall from Chapter 3: Deep Learning Primer that each activation function exhibits different gradient behavior, sparsity characteristics, and computational complexity. The question now is: how do these mathematical properties translate into hardware constraints and system performance? The following subsections examine each function’s implementation characteristics, focusing on software versus hardware trade-offs that determine real-world training efficiency:

Sigmoid

Sigmoid’s smooth \((0,1)\) bounded output makes it useful for probabilistic interpretation, but its vanishing gradient problem and non-zero-centered outputs present optimization challenges. From a systems perspective, the exponential function computation becomes the critical bottleneck. In software, this computation is expensive and inefficient15, particularly for deep networks or large datasets where millions of sigmoid evaluations occur per forward pass.

15 Sigmoid Computational Cost: Computing sigmoid requires expensive exponential operations. On CPU, exp() takes 10-20 clock cycles vs. 1 cycle for basic arithmetic. GPU implementations use 32-entry lookup tables with linear interpolation, reducing cost to 3-4 cycles but still 3x slower than ReLU. This overhead compounds in deep networks with millions of activations per forward pass.

These computational challenges are addressed differently in hardware. Modern accelerators like GPUs and TPUs typically avoid direct computation of the exponential function, instead using lookup tables (LUTs) or piece-wise linear approximations to balance accuracy with speed. While these hardware optimizations help, the multiple memory lookups and interpolation calculations still make sigmoid more resource-intensive than simpler functions like ReLU, even on highly parallel architectures.

Tanh

While tanh improves upon sigmoid with its \((-1,1)\) zero-centered outputs, it shares sigmoid’s computational burden. The exponential computations required for tanh create similar performance bottlenecks in both software and hardware implementations. In software, this computational overhead can slow training, particularly when working with large datasets or deep models.

In hardware, tanh uses its mathematical relationship with sigmoid (a scaled and shifted version) to optimize implementation. Modern hardware often implements tanh using a hybrid approach: lookup tables for common input ranges combined with piece-wise approximations for edge cases. This approach helps balance accuracy with computational efficiency, though tanh remains more resource-intensive than simpler functions. Despite these challenges, tanh remains common in RNNs and LSTMs16 where balanced gradients are necessary.

16 RNNs and LSTMs: Long Short-Term Memory networks are specialized RNN variants designed to handle long-range dependencies. Both architectures are detailed in Chapter 4: DNN Architectures.

ReLU

ReLU represents a shift in activation function design. Its mathematical simplicity—\(\max(0,x)\)—avoids vanishing gradients and introduces beneficial sparsity, though it can suffer from dying neurons. This straightforward form has profound implications for system performance. In software, ReLU’s simple thresholding operation results in dramatically faster computation compared to sigmoid or tanh, requiring only a single comparison rather than exponential calculations.

The hardware implementation of ReLU showcases why it has become the dominant activation function in modern neural networks. Its simple \(\max(0,x)\) operation requires just a single comparison and conditional set, translating to minimal circuit complexity17. Modern GPUs and TPUs can implement ReLU using a simple multiplexer that checks the input’s sign bit, allowing for extremely efficient parallel processing. This hardware efficiency, combined with the sparsity it introduces, results in both reduced computation time and lower memory bandwidth requirements.

17 ReLU Hardware Efficiency: ReLU requires just 1 instruction (max(0,x)) vs. sigmoid’s 10+ operations including exponentials. On NVIDIA GPUs, ReLU runs at 95% of peak FLOPS while sigmoid achieves only 30-40%. ReLU’s sparsity (typically 50% zeros) enables additional optimizations: sparse matrix operations, reduced memory bandwidth, and compressed gradients during backpropagation.

Softmax

Softmax differs from the element-wise functions above. Rather than processing inputs independently, softmax converts logits into probability distributions through global normalization, creating unique computational challenges. Its computation involves exponentiating each input value and normalizing by their sum, a process that becomes increasingly complex with larger output spaces. In software, this creates significant computational overhead for tasks like natural language processing, where vocabulary sizes can reach hundreds of thousands of terms. The function also requires keeping all values in memory during computation, as each output probability depends on the entire input vector.

At the hardware level, softmax faces unique challenges because it can’t process each value independently like other activation functions. Unlike ReLU’s simple threshold or even sigmoid’s per-value computation, softmax needs access to all values to perform normalization. This becomes particularly demanding in modern transformer architectures18, where softmax computations in attention mechanisms process thousands of values simultaneously. To manage these demands, hardware implementations often use approximation techniques or simplified versions of softmax, especially when dealing with large vocabularies or attention mechanisms.

18 Transformer Attention: The attention mechanism in transformers computes weighted relationships between all positions in a sequence simultaneously. This architecture is covered in Chapter 4: DNN Architectures.
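
The global dependency described above is visible in even the smallest implementation. The sketch below is illustrative: it subtracts the maximum logit before exponentiating, a standard numerical-stability technique that the text does not discuss but that is widely used in software implementations. Both the maximum and the sum require a pass over the whole input vector before any single output can be produced.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities that sum to 1."""
    m = max(logits)                             # first full pass: find the maximum
    exps = [math.exp(x - m) for x in logits]    # shifted exponentials cannot overflow
    total = sum(exps)                           # second full pass: normalizer
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
large = softmax([1000.0, 1001.0, 1002.0])  # exp(1000) alone would overflow a float
print(probs)
print(large)
```

Because shifting every logit by the same constant leaves the result unchanged, the subtraction affects only numerical range, not the probabilities.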

Table 2 summarizes the trade-offs of these commonly used activation functions and highlights how these choices affect system performance.

| Function | Key Advantages | Key Disadvantages | System Implications |

|---|---|---|---|

| Sigmoid | Smooth gradients; bounded output in \((0, 1)\). | Vanishing gradients; non-zero-centered output. | Exponential computation adds overhead; limited scalability for deep networks on modern accelerators. |

| Tanh | Zero-centered output in \((-1, 1)\); stabilizes gradients. | Vanishing gradients for large inputs. | More expensive than ReLU; still commonly used in RNNs/LSTMs but less common in CNNs and Transformers. |

| ReLU | Computationally efficient; avoids vanishing gradients; introduces sparsity. | Dying neurons; unbounded output. | Simple operations optimize well on GPUs/TPUs; sparse activations reduce memory and computation needs. |

| Softmax | Converts logits into probabilities; sums to \(1\). | Computationally expensive for large outputs. | High cost for large vocabularies; hierarchical or sampled softmax needed for scalability in NLP tasks. |

The choice of activation function should balance computational considerations with their mathematical properties, such as handling vanishing gradients or introducing sparsity in neural activations. This data emphasizes the importance of evaluating both theoretical and practical performance when designing neural networks. For large-scale networks or real-time applications, ReLU is often the best choice due to its efficiency and scalability. However, for tasks requiring probabilistic outputs, such as classification, softmax remains indispensable despite its computational cost. Ultimately, the ideal activation function depends on the specific task, network architecture, and hardware environment.

While the table above covers classical activation functions, GPT-2 uses the Gaussian Error Linear Unit (GELU), defined as: \[ \text{GELU}(x) = x \cdot \Phi(x) = x \cdot \frac{1}{2}\left[1 + \text{erf}\left(\frac{x}{\sqrt{2}}\right)\right] \]

where \(\Phi(x)\) is the cumulative distribution function of the standard normal distribution.

Why GELU for GPT-2?

- Smoother gradients than ReLU, reducing the dying neuron problem

- Stochastic regularization effect: acts like dropout by probabilistically dropping inputs

- Better empirical performance on language modeling tasks

System Performance Tradeoff

- Computational cost: ~3 to 4x more expensive than ReLU (requires erf function evaluation)

- Memory: Same as ReLU (element-wise operation)

- Training time impact: For GPT-2’s 48 layers, GELU adds ~5 to 8% to total forward pass time

- Worth it: The improved model quality (lower perplexity) offsets the computational overhead

Fast Approximation: Modern frameworks (PyTorch, TensorFlow) implement GELU with optimized approximations:

# Fast GELU approximation (used in practice)

GELU(x) ≈ 0.5 * x * (1 + tanh(sqrt(2/π) * (x + 0.044715 * x³)))

This approximation reduces computational cost to ~1.5x ReLU while maintaining GELU’s benefits, demonstrating how production systems balance mathematical properties with implementation efficiency.
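
To make the comparison concrete, the following sketch implements both the erf-based definition and the tanh approximation given above using only Python's `math` module, then measures how far apart they are on a grid of inputs. The function names and the evaluation grid are illustrative assumptions; frameworks ship their own fused kernels rather than code like this.

```python
import math


def gelu_exact(x):
    """GELU(x) = x * Phi(x), with Phi expressed through erf."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x):
    """Tanh-based approximation from the formula above."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Compare the two on a grid from -6 to 6 in steps of 0.01.
xs = [i / 100.0 for i in range(-600, 601)]
max_abs_error = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in xs)
print(f"max |exact - approx| on [-6, 6]: {max_abs_error:.2e}")
```

Running the script reports the largest gap between the two forms on this grid, which is one way to check whether the approximation is accurate enough for a given use.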

Activation functions reveal a critical systems principle: not all operations are compute-bound. While matrix multiplications saturate GPU compute units, activation functions often become memory-bandwidth-bound:

- Low arithmetic intensity: Element-wise operations perform few calculations per memory access (ReLU: 1 operation per load)

- Limited parallelism benefit: Simple operations complete faster than memory transfer time

- Bandwidth constraints: Modern GPUs have 10-100× more compute throughput than memory bandwidth

This explains why activation function choice matters less than expected—ReLU vs sigmoid shows only 2-3× difference despite vastly different computational complexity, because both are bottlenecked by memory access. The forward pass must carefully manage activation storage to prevent memory bandwidth from limiting overall training throughput.

Optimization Algorithms

Optimization algorithms play an important role in neural network training by guiding the adjustment of model parameters to minimize a loss function. This process enables neural networks to learn from data, and it involves finding the optimal set of parameters that yield the best model performance on a given task. Broadly, these algorithms can be divided into two categories: classical methods, which provide the theoretical foundation, and advanced methods, which introduce enhancements for improved performance and efficiency.

These algorithms explore the complex, high-dimensional loss function surface, identifying regions where the function achieves its lowest values. This task is challenging because the loss function surface is rarely smooth or simple, often characterized by local minima, saddle points, and sharp gradients. Effective optimization algorithms are designed to overcome these challenges, ensuring convergence to a solution that generalizes well to unseen data. While this section covers optimization algorithms used during training, advanced optimization techniques including quantization, pruning, and knowledge distillation are detailed in Chapter 10: Model Optimizations.

The selection and design of optimization algorithms have significant system-level implications, such as computation efficiency, memory requirements, and scalability to large datasets or models. Systematic approaches to hyperparameter optimization, including grid search, Bayesian optimization, and automated machine learning workflows, are covered in Chapter 5: AI Workflow. A deeper understanding of these algorithms is essential for addressing the trade-offs between accuracy, speed, and resource usage.

Gradient-Based Optimization Methods

Modern neural network training relies on variations of gradient descent for parameter optimization. These approaches differ in how they process training data, leading to distinct system-level implications.

Gradient Descent

Gradient descent is the mathematical foundation of neural network training, iteratively adjusting parameters to minimize a loss function. The basic gradient descent algorithm computes the gradient of the loss with respect to each parameter, then updates parameters in the opposite direction of the gradient: \[ \theta_{t+1} = \theta_t - \alpha \nabla L(\theta_t) \]

The effectiveness of gradient descent in training systems reveals deep questions in optimization theory. Unlike convex optimization where gradient descent guarantees finding the global minimum, neural network loss surfaces contain exponentially many local minima. Yet gradient descent consistently finds solutions that generalize well, suggesting the optimization process has implicit biases toward solutions with desirable properties. Modern overparameterized networks, with more parameters than training examples, paradoxically achieve better generalization than smaller models, challenging traditional optimization intuitions.

In training systems, this mathematical operation translates into specific computational patterns. For each iteration, the system must:

- Compute forward pass activations

- Calculate loss value

- Compute gradients through backpropagation

- Update parameters using the gradient values
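
The four steps above can be sketched as a full-batch training loop. This example fits a one-variable linear model with mean squared error so that the gradients can be written by hand; the model, data, learning rate, and step count are illustrative assumptions rather than anything specific to neural network frameworks.

```python
def train_full_batch(xs, ys, lr=0.05, steps=500):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss = float("inf")
    for _ in range(steps):
        # 1. Forward pass over the entire dataset.
        preds = [w * x + b for x in xs]
        # 2. Loss value.
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        # 3. Gradients of the loss with respect to each parameter.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # 4. Parameter update in the direction opposite the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b, loss = train_full_batch(xs, ys)
print(f"w={w:.4f}, b={b:.4f}, loss={loss:.2e}")
```

Note that `preds` holds one value per training example until the gradients are computed, a small-scale version of the activation storage that dominates memory in real networks.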

The computational demands of gradient descent scale with both model size and dataset size. Consider a neural network with \(M\) parameters training on \(N\) examples. Computing gradients requires storing intermediate activations during the forward pass for use in backpropagation. These activations consume memory proportional to the depth of the network and the number of examples being processed.

Traditional gradient descent processes the entire dataset in each iteration. For a training set with 1 million examples, computing gradients requires evaluating and storing results for each example before performing a parameter update. This approach poses significant system challenges: \[ \text{Memory Required} = N \times \text{(Activation Memory + Gradient Memory)} \]

These memory requirements often exceed what modern hardware provides. A ResNet-50 model processing ImageNet-scale datasets would require hundreds of gigabytes of memory using this approach. Processing the full dataset before each update also creates long iteration times, reducing the rate at which the model can learn from the data.

Stochastic Gradient Descent

These system constraints led to the development of variants that better align with hardware capabilities. The key insight was that exact gradient computation, while mathematically appealing, is not necessary for effective learning. This realization opened the door to methods that trade gradient accuracy for improved system efficiency.

SGD19 marks a major shift in optimization strategy. Rather than computing gradients over the entire dataset, SGD estimates gradients using individual training examples: \[ \theta_{t+1} = \theta_t - \alpha \nabla L(\theta_t; x_i, y_i) \] where \((x_i, y_i)\) represents a single training example. This approach drastically reduces memory requirements since only one example’s activations and gradients need storage at any time.

19 Stochastic Gradient Descent: Originally developed by Robbins and Monro in 1951 for statistical optimization, SGD was first applied to neural networks by Rosenblatt for the perceptron in 1958. The method remained largely theoretical until the 1980s when computational constraints made full-batch gradient descent impractical for larger networks. Today’s “mini-batch SGD” (processing 32-512 examples) represents a compromise between the original single-example approach and full-batch methods, enabling parallel processing on modern GPUs. The stochastic nature of these updates introduces noise into the optimization process, but this noise often helps escape local minima and reach better solutions.

However, processing single examples creates new system challenges. Modern accelerators achieve peak performance through parallel computation, processing multiple data elements simultaneously. Single-example updates leave most computing resources idle, resulting in poor hardware utilization. The frequent parameter updates also increase memory bandwidth requirements, as weights must be read and written for each example rather than amortizing these operations across multiple examples.

Mini-batch Processing

Mini-batch gradient descent emerges as a practical compromise between full-batch and stochastic methods. It computes gradients over small batches of examples, enabling parallel computations that align well with modern GPU architectures (Dean and Ghemawat 2008). \[ \theta_{t+1} = \theta_t - \alpha \frac{1}{B} \sum_{i=1}^B \nabla L(\theta_t; x_i, y_i) \]

Mini-batch processing aligns well with modern hardware capabilities. Consider a training system using GPU hardware. These devices contain thousands of cores designed for parallel computation. Mini-batch processing allows these cores to simultaneously compute gradients for multiple examples, improving hardware utilization. The batch size B becomes a key system parameter, influencing both computational efficiency and memory requirements.
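
A minimal sketch of the mini-batch update follows, using a one-variable linear model so the per-example gradients can be written by hand. The model, the data, and the hyperparameter values are illustrative assumptions. Setting `batch_size=1` recovers single-example SGD, and setting it to the dataset size recovers full-batch gradient descent.

```python
import random


def minibatch_sgd(xs, ys, batch_size=2, lr=0.05, epochs=500, seed=0):
    """Fit y = w*x + b with mini-batch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit examples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Average the per-example gradients over the B examples in the batch.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b = minibatch_sgd(xs, ys)
print(f"w={w:.4f}, b={b:.4f}")
```

On an accelerator the two sums over the batch would run in parallel across examples, which is where the hardware utilization benefit comes from.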

The relationship between batch size and system performance follows clear patterns that reveal hardware-software trade-offs. Activation and gradient memory scale linearly with batch size while parameter memory stays fixed, and the specific costs vary dramatically by model architecture: \[ \begin{aligned} \text{Memory Required} = B \times (&\text{Activation Memory} \\ &+ \text{Gradient Memory}) \\ + \,&\text{Parameter Memory} \end{aligned} \]

For concrete understanding, consider ResNet-50 training with different batch sizes. At batch size 32, the model requires approximately 8GB of activation memory, 4GB for gradients, and 200MB for parameters per GPU. Doubling to batch size 64 doubles these memory requirements to 16GB activations and 8GB gradients. This linear scaling quickly exhausts GPU memory, with high-end training GPUs typically providing 40-80GB of HBM.

Larger batches enable more efficient computation through improved parallelism and better memory access patterns. GPU utilization efficiency demonstrates this trade-off: batch sizes of 256 or higher typically achieve over 90% hardware utilization on modern training accelerators, while smaller batches of 16-32 may only achieve 60-70% utilization due to insufficient parallelism to saturate the hardware.

This establishes a central theme in training systems: the hardware-software trade-off between memory constraints and computational efficiency. Training systems must select batch sizes that maximize hardware utilization while fitting within available memory. When memory constraints prevent using efficiently large batches, gradient accumulation offers a workaround: it reaches the same effective batch size by spending several sequential forward and backward passes on each parameter update.
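
Gradient accumulation can be illustrated with a small check: averaging the gradients of several equally sized micro-batches gives the same result as computing the gradient over the combined batch in one pass. The sketch uses a one-variable linear model with mean squared error; the function names, data, and micro-batch size are illustrative assumptions.

```python
def mean_gradient(w, b, xs, ys):
    """Mean-squared-error gradient of y = w*x + b over the given examples."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb


def accumulated_gradient(w, b, xs, ys, micro_batch):
    """Process one micro-batch at a time, keeping only a running sum of gradients."""
    acc_w, acc_b, passes = 0.0, 0.0, 0
    for start in range(0, len(xs), micro_batch):
        gw, gb = mean_gradient(w, b,
                               xs[start:start + micro_batch],
                               ys[start:start + micro_batch])
        acc_w += gw
        acc_b += gb
        passes += 1
    # Dividing by the number of passes matches the large-batch mean
    # only when every micro-batch has the same size.
    return acc_w / passes, acc_b / passes


xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
ys = [1.0, 2.5, 5.5, 6.0, 9.5, 11.0, 12.5, 15.5]
full = mean_gradient(0.3, -0.2, xs, ys)
accumulated = accumulated_gradient(0.3, -0.2, xs, ys, micro_batch=2)
print(full, accumulated)
```

Only one micro-batch of intermediate values is live at a time, so peak activation memory follows the micro-batch size while the parameter update reflects the full effective batch.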

Adaptive and Momentum-Based Optimizers

Advanced optimization algorithms introduce mechanisms like momentum and adaptive learning rates to improve convergence. These methods have been instrumental in addressing the inefficiencies of classical approaches (Kingma and Ba 2014).

Momentum-Based Methods

Momentum methods enhance gradient descent by accumulating a velocity vector across iterations. The momentum update equations introduce an additional term to track the history of parameter updates: \[\begin{gather*} v_{t+1} = \beta v_t + \nabla L(\theta_t) \\ \theta_{t+1} = \theta_t - \alpha v_{t+1} \end{gather*}\] where \(\beta\) is the momentum coefficient, typically set between 0.9 and 0.99. From a systems perspective, momentum introduces additional memory requirements. The training system must maintain a velocity vector with the same dimensionality as the parameter vector, effectively doubling the memory needed for optimization state.
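
The momentum equations above translate directly into code. In this sketch the function name, the learning rate, and the toy loss \(L(\theta) = \sum_i \theta_i^2\) are illustrative assumptions; the point to notice is that `velocity` is a second list exactly as long as `theta` that must persist between steps.

```python
def momentum_step(theta, velocity, grad, lr=0.01, beta=0.9):
    """One update: v <- beta*v + grad, then theta <- theta - lr*v."""
    new_velocity = [beta * v + g for v, g in zip(velocity, grad)]
    new_theta = [t - lr * v for t, v in zip(theta, new_velocity)]
    return new_theta, new_velocity


theta = [1.0, -2.0]
velocity = [0.0] * len(theta)  # optimizer state: one value per parameter

for step in range(3):
    grad = [2.0 * t for t in theta]  # gradient of sum(theta_i^2)
    theta, velocity = momentum_step(theta, velocity, grad)
    print(step, theta, velocity)
```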

Adaptive Learning Rate Methods

RMSprop modifies the basic gradient descent update by maintaining a moving average of squared gradients for each parameter: \[\begin{gather*} s_t = \gamma s_{t-1} + (1-\gamma)\big(\nabla L(\theta_t)\big)^2 \\ \theta_{t+1} = \theta_t - \alpha \frac{\nabla L(\theta_t)}{\sqrt{s_t + \epsilon}} \end{gather*}\]

This per-parameter adaptation requires storing the moving average \(s_t\), creating memory overhead similar to momentum methods. The element-wise operations in RMSprop also introduce additional computational steps compared to basic gradient descent.
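
A sketch of the RMSprop update, written to match the equations above (including \(\epsilon\) inside the square root), is shown below. The function name and default hyperparameters are illustrative assumptions. The example feeds in two gradients that differ by a factor of 100 to show how the per-parameter scaling equalizes the resulting step sizes.

```python
import math


def rmsprop_step(theta, s, grad, lr=0.01, gamma=0.9, eps=1e-8):
    """One update: s <- gamma*s + (1-gamma)*g^2, theta <- theta - lr*g/sqrt(s+eps)."""
    new_s = [gamma * si + (1 - gamma) * g * g for si, g in zip(s, grad)]
    new_theta = [t - lr * g / math.sqrt(si + eps)
                 for t, g, si in zip(theta, grad, new_s)]
    return new_theta, new_s


theta = [1.0, 1.0]
s = [0.0, 0.0]           # optimizer state: one moving average per parameter
grad = [2.0, 200.0]      # second gradient is 100x larger than the first

new_theta, new_s = rmsprop_step(theta, s, grad)
step_sizes = [t_old - t_new for t_old, t_new in zip(theta, new_theta)]
print(step_sizes)
```

Each parameter's step is normalized by its own gradient history, which is why the two step sizes come out nearly identical despite the very different gradient magnitudes.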

Adam Optimization

Adam combines concepts from both momentum and RMSprop, maintaining two moving averages for each parameter: \[\begin{gather*} m_t = \beta_1 m_{t-1} + (1-\beta_1)\nabla L(\theta_t) \\ v_t = \beta_2 v_{t-1} + (1-\beta_2)\big(\nabla L(\theta_t)\big)^2 \\ \theta_{t+1} = \theta_t - \alpha \frac{m_t}{\sqrt{v_t + \epsilon}} \end{gather*}\]

The system implications of Adam are more substantial than previous methods. The optimizer must store two additional vectors (\(m_t\) and \(v_t\)) for each parameter, tripling the memory required for optimization state. For a model with 100 million parameters using 32-bit floating-point numbers, the additional memory requirement is approximately 800 MB.

Optimization Algorithm System Implications

The practical implementation of both classical and advanced optimization methods requires careful consideration of system resources and hardware capabilities. Understanding these implications helps inform algorithm selection and system design choices.

Optimization Trade-offs

The choice of optimization algorithm creates specific patterns of computation and memory access that influence training efficiency. Memory requirements increase progressively from basic gradient descent to more sophisticated methods: \[\begin{gather*} \text{Memory}_{\text{SGD}} = \text{Size}_{\text{params}} \\ \text{Memory}_{\text{Momentum}} = 2 \times \text{Size}_{\text{params}} \\ \text{Memory}_{\text{Adam}} = 3 \times \text{Size}_{\text{params}} \end{gather*}\]

These memory costs must be balanced against convergence benefits. While Adam often requires fewer iterations to reach convergence, its per-iteration memory and computation overhead may impact training speed on memory-constrained systems.

GPT-2 training uses the Adam optimizer with these hyperparameters:

- β₁ = 0.9 (momentum decay)

- β₂ = 0.999 (second moment decay)

- Learning rate: Warmed up from 0 to 2.5e-4 over first 500 steps, then cosine decay

- Weight decay: 0.01

- Gradient clipping: Global norm clipping at 1.0
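The learning rate schedule and gradient clipping listed above can be sketched in a few lines. This is a hedged illustration rather than the actual GPT-2 training code: the peak rate, warmup length, and clipping threshold come from the list, while the total step count, the minimum learning rate, and the function names are assumptions introduced for the example.

```python
import math

PEAK_LR = 2.5e-4       # from the hyperparameter list above
WARMUP_STEPS = 500     # from the hyperparameter list above
MAX_GRAD_NORM = 1.0    # from the hyperparameter list above
TOTAL_STEPS = 50_000   # assumption: the schedule horizon is not given in the list
MIN_LR = 0.0           # assumption: cosine decay ends at zero


def lr_at_step(step):
    """Linear warmup from 0 to PEAK_LR, then cosine decay to MIN_LR."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / (TOTAL_STEPS - WARMUP_STEPS)
    progress = min(progress, 1.0)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return MIN_LR + (PEAK_LR - MIN_LR) * cosine


def clip_by_global_norm(grads, max_norm=MAX_GRAD_NORM):
    """Rescale all gradients together if their combined L2 norm exceeds max_norm."""
    global_norm = math.sqrt(sum(g * g for g in grads))
    if global_norm <= max_norm:
        return list(grads), global_norm
    scale = max_norm / global_norm
    return [g * scale for g in grads], global_norm


clipped, norm_before = clip_by_global_norm([3.0, 4.0])
print(lr_at_step(250), lr_at_step(500), clipped, norm_before)
```

Global norm clipping rescales every gradient by the same factor, so the update direction is preserved and only its magnitude is bounded.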

Memory Overhead Calculation

For GPT-2’s 1.5B parameters in FP32 (4 bytes each):

- Parameters: 1.5B × 4 bytes = 6.0 GB

- Gradients: 1.5B × 4 bytes = 6.0 GB

- Adam first moment (m): 1.5B × 4 bytes = 6.0 GB

- Adam second moment (v): 1.5B × 4 bytes = 6.0 GB

- Total training state (parameters, gradients, and both Adam moments): 24 GB

This explains why GPT-2 training requires 32GB+ V100 GPUs even before considering activation memory.
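The arithmetic above generalizes to any parameter count and optimizer. The helper below is a small sketch under stated assumptions: the function name and its arguments are hypothetical, gigabytes are decimal (10^9 bytes) to match the figures in the text, and activation memory is deliberately excluded.

```python
BYTES_FP32 = 4
GB = 1e9  # decimal gigabytes, matching the arithmetic in the text


def training_state_memory_gb(
    num_params, bytes_per_value=BYTES_FP32, optimizer_buffers=2
):
    """Memory for parameters, gradients, and optimizer state (no activations).

    optimizer_buffers: parameter-sized state tensors kept by the optimizer
    (0 for plain SGD, 1 for momentum or RMSprop, 2 for Adam).
    """
    per_tensor = num_params * bytes_per_value / GB
    breakdown = {
        "parameters": per_tensor,
        "gradients": per_tensor,
        "optimizer_state": optimizer_buffers * per_tensor,
    }
    breakdown["total"] = sum(breakdown.values())
    return breakdown


gpt2 = training_state_memory_gb(1.5e9)    # GPT-2 with Adam in FP32
small = training_state_memory_gb(100e6)   # the 100M-parameter example

print(gpt2)
print(small)
```

For the 100-million-parameter case, the `optimizer_state` entry is 0.8 GB, which matches the approximately 800 MB figure quoted for Adam earlier.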

System Decisions Driven by Optimizer

- Mixed precision training (FP16 params, FP32 optimizer state) cuts this to ~15GB

- Gradient accumulation (splitting effective batches into smaller micro-batches, accumulating gradients across multiple forward/backward passes before updating, detailed in Section 1.5.5) allows effective batch_size=512 despite memory limits

- Optimizer state sharding (ZeRO-2) distributes Adam state across GPUs in distributed training
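Gradient accumulation, mentioned in the list above, rests on a simple identity: averaging the gradients of equally sized micro-batches reproduces the gradient of the full batch. The sketch below checks this for a hypothetical one-parameter linear model with mean squared error; the dataset, weight value, and function name are illustrative assumptions.

```python
def mse_grad(w, batch):
    """Gradient of mean((w * x - y)^2) over the batch with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


# Hypothetical dataset of 8 samples and an arbitrary current weight
data = [(float(x), 3.0 * x + 1.0) for x in range(8)]
w = 0.5

# Gradient computed in one pass over the full batch
full_batch_grad = mse_grad(w, data)

# Same batch split into 4 micro-batches of 2 samples each
micro_batch_size = 2
micro_batches = [
    data[i : i + micro_batch_size]
    for i in range(0, len(data), micro_batch_size)
]

accumulated_grad = 0.0
for micro_batch in micro_batches:
    # Scale each contribution so the running sum equals the full-batch mean
    accumulated_grad += mse_grad(w, micro_batch) / len(micro_batches)

# A single optimizer step would be applied here using accumulated_grad
print(full_batch_grad, accumulated_grad)
```

Only one micro-batch of activations needs to be resident at a time, which is where the memory saving comes from; the price is that the micro-batches run sequentially.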

Convergence Tradeoff: Adam’s memory overhead is worth it. GPT-2 converges in ~50K steps vs. ~150K+ steps with SGD+Momentum, saving weeks of training time despite higher per-step cost.

Implementation Considerations

The efficient implementation of optimization algorithms in training frameworks hinges on strategic system-level considerations that directly influence performance. Key factors include memory bandwidth management, operation fusion techniques, and numerical precision optimization. These elements collectively determine the computational efficiency, memory utilization, and scalability of optimizers across diverse hardware architectures.

Memory bandwidth presents the primary bottleneck in optimizer implementation. Modern frameworks address this through operation fusion, which reduces memory access overhead by combining multiple operations into a single kernel. For example, the Adam optimizer’s memory access requirements can grow linearly with parameter size when operations are performed separately: \[ \text{Bandwidth}_{\text{separate}} = 5 \times \text{Size}_{\text{params}} \]

However, fusing these operations into a single computational kernel significantly reduces the bandwidth requirement: \[ \text{Bandwidth}_{\text{fused}} = 2 \times \text{Size}_{\text{params}} \]

These techniques have been effectively demonstrated in systems like cuDNN and other GPU-accelerated frameworks that optimize memory bandwidth usage and operation fusion (Chetlur et al. 2014; Jouppi et al. 2017).
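The structural difference between separate and fused execution can be sketched in plain Python. This is an analogy only: each list comprehension in the unfused version stands in for one kernel launch that streams parameter-sized data through memory, while the fused version touches each element once. The code follows the simplified Adam update given earlier in this section (without bias correction); the function names are hypothetical, and the pass count is illustrative rather than a reproduction of the bandwidth multipliers above.

```python
import math


def adam_unfused(theta, m, v, grad, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
    """Each statement is a separate full pass over parameter-sized lists."""
    m = [b1 * mi + (1 - b1) * g for mi, g in zip(m, grad)]          # pass 1
    g_sq = [g * g for g in grad]                                     # pass 2 (temporary)
    v = [b2 * vi + (1 - b2) * gs for vi, gs in zip(v, g_sq)]         # pass 3
    denom = [math.sqrt(vi + eps) for vi in v]                        # pass 4 (temporary)
    theta = [t - lr * mi / d for t, mi, d in zip(theta, m, denom)]   # pass 5
    return theta, m, v


def adam_fused(theta, m, v, grad, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
    """One pass: read each element's state once, update it, write it once."""
    new_theta, new_m, new_v = [], [], []
    for t, mi, vi, g in zip(theta, m, v, grad):
        mi = b1 * mi + (1 - b1) * g
        vi = b2 * vi + (1 - b2) * g * g
        new_theta.append(t - lr * mi / math.sqrt(vi + eps))
        new_m.append(mi)
        new_v.append(vi)
    return new_theta, new_m, new_v


theta = [0.5, -1.0, 2.0]
m = [0.0, 0.0, 0.0]
v = [0.0, 0.0, 0.0]
grad = [0.1, -0.2, 0.3]

unfused_result = adam_unfused(theta, m, v, grad)
fused_result = adam_fused(theta, m, v, grad)
print(unfused_result[0], fused_result[0])
```

Both versions compute the same values; fusion changes only how often intermediate results travel to and from memory, which is why it helps a bandwidth-bound operation.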

Memory access patterns also play an important role in determining the efficiency of cache utilization. Sequential access to parameter and optimizer state vectors maximizes cache hit rates and effective memory bandwidth. This principle is evident in hardware such as GPUs and tensor processing units (TPUs), where optimized memory layouts significantly improve performance (Jouppi et al. 2017).

Numerical precision represents another important tradeoff in implementation. Empirical studies have shown that optimizer states remain stable even when reduced precision formats, such as 16-bit floating-point (FP16), are used. Transitioning from 32-bit to 16-bit formats reduces memory requirements, as illustrated for the Adam optimizer: \[ \text{Memory}_{\text{Adam-FP16}} = \frac{3}{2} \times \text{Size}_{\text{params}} \]

Mixed-precision training[20] has been shown to achieve comparable accuracy while significantly reducing memory consumption and computational overhead (Micikevicius et al. 2017; Krishnamoorthi 2018).

[20] Mixed-Precision Training: Introduced by researchers at NVIDIA and Baidu (Micikevicius et al. 2017), this technique runs the forward and backward passes in FP16 while keeping an FP32 master copy of the weights and applying loss scaling so that small gradients do not underflow. It enables roughly 2x memory savings and 1.6x speedups on Tensor Core GPUs while maintaining model accuracy.
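Loss scaling, mentioned in the footnote above, exists because FP16 cannot represent very small gradient values. The sketch below uses the half-precision format code of Python's standard `struct` module to round values to FP16; the gradient value and the scale factor are hypothetical, chosen only to make the underflow visible.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


BYTES_FP16 = struct.calcsize("<e")  # storage per value in half precision
BYTES_FP32 = struct.calcsize("<f")  # storage per value in single precision

small_grad = 1e-8      # hypothetical gradient below FP16's smallest subnormal
loss_scale = 1024.0    # hypothetical static loss scale

# Without scaling, the gradient is flushed to zero when stored in FP16
unscaled_fp16 = to_fp16(small_grad)

# With scaling, the value survives in FP16 and is unscaled at higher precision
scaled_fp16 = to_fp16(small_grad * loss_scale)
recovered = scaled_fp16 / loss_scale

print(BYTES_FP16, BYTES_FP32, unscaled_fp16, recovered)
```

Multiplying the loss by a constant before the backward pass shifts gradients into FP16's representable range; dividing by the same constant before the weight update restores their true magnitude.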

The above implementation factors determine the practical performance of optimization algorithms in deep learning systems, emphasizing the importance of tailoring memory, computational, and numerical strategies to the underlying hardware architecture (T. Chen et al. 2015).

Optimizer Trade-offs

The evolution of optimization algorithms in neural network training reveals an intersection between algorithmic efficiency and system performance. While optimizers were primarily developed to improve model convergence, their implementation significantly impacts memory usage, computational requirements, and hardware utilization.

A deeper examination of popular optimization algorithms reveals their varying impacts on system resources. As shown in Table 3, each optimizer presents distinct trade-offs between memory usage, computational patterns, and convergence behavior. SGD maintains minimal memory overhead, requiring storage only for model parameters and current gradients. This lightweight memory footprint comes at the cost of slower convergence and potentially poor hardware utilization due to its sequential update nature.

| Property | SGD | Momentum | RMSprop | Adam |
|---|---|---|---|---|
| Memory Overhead | None | Velocity terms | Squared gradients | Both velocity and squared gradients |
| Memory Cost | \(1\times\) | \(2\times\) | \(2\times\) | \(3\times\) |
| Access Pattern | Sequential | Sequential | Random | Random |
| Operations/Parameter | 2 | 3 | 4 | 5 |
| Hardware Efficiency | Low | Medium | High | Highest |
| Convergence Speed | Slowest | Medium | Fast | Fastest |

Momentum methods introduce additional memory requirements by storing velocity terms for each parameter, doubling the memory footprint compared to SGD. This increased memory cost brings improved convergence through better gradient estimation, while maintaining relatively efficient memory access patterns. The sequential nature of momentum updates allows for effective hardware prefetching and cache utilization.

RMSprop adapts learning rates per parameter by tracking squared gradient statistics. Its memory overhead matches momentum methods, but its computation patterns become more irregular. The algorithm requires additional arithmetic operations for maintaining running averages and computing adaptive learning rates, increasing computational intensity from 3 to 4 operations per parameter.

Adam combines the benefits of momentum and adaptive learning rates, but at the highest system resource cost. Table 3 reveals that it maintains both velocity terms and squared gradient statistics, tripling the memory requirements compared to SGD. The algorithm’s computational patterns involve 5 operations per parameter update, though these operations often utilize hardware more effectively due to their regular structure and potential for parallelization.

Training system designers must balance these trade-offs when selecting optimization strategies. Modern hardware architectures influence these decisions. GPUs excel at the parallel computations required by adaptive methods, while memory-constrained systems might favor simpler optimizers. The choice of optimizer affects not only training dynamics but also maximum feasible model size, achievable batch size, hardware utilization efficiency, and overall training time to convergence. Beyond optimizer selection, learning rate scheduling strategies, including cosine annealing, linear warmup, and cyclical schedules, further influence convergence behavior and final model performance, with large-batch training requiring careful scaling adjustments as detailed in distributed training discussions.

Modern training frameworks continue to evolve, developing techniques like optimizer state sharding, mixed-precision storage, and fused operations to better balance these competing demands. Understanding these system implications helps practitioners make informed decisions about optimization strategies based on their specific hardware constraints and training requirements.

Framework Optimizer Interface

While the mathematical formulations of SGD, momentum, and Adam establish the theoretical foundations for parameter optimization, frameworks provide standardized interfaces that abstract these algorithms into practical training loops. Understanding how frameworks like PyTorch implement optimizer APIs demonstrates how complex mathematical operations become accessible through clean abstractions.

The framework optimizer interface follows a consistent pattern that separates gradient computation from parameter updates. This separation enables the mathematical algorithms to be applied systematically across diverse model architectures and training scenarios.

Framework optimizers implement a four-step training cycle that encapsulates the mathematical operations within a clean API. The following example demonstrates how Adam optimization integrates into a standard training loop:

import torch
import torch.nn as nn
import torch.optim as optim

# Initialize Adam optimizer with model parameters
# and learning rate
optimizer = optim.Adam(
    model.parameters(), lr=0.001, betas=(0.9, 0.999)
)
loss_function = nn.CrossEntropyLoss()

# Standard training loop implementing the four-step optimization cycle
for epoch in range(num_epochs):
    for batch_idx, (data, targets) in enumerate(dataloader):
        # Step 1: Clear accumulated gradients from previous iteration
        optimizer.zero_grad()

        # Step 2: Forward pass - compute model predictions
        predictions = model(data)
        loss = loss_function(predictions, targets)

        # Step 3: Backward pass - compute gradients via
        # automatic differentiation
        loss.backward()

        # Step 4: Parameter update - apply Adam optimization equations
        optimizer.step()

The optimizer.zero_grad() call addresses a critical framework implementation detail: gradients accumulate across calls to backward(), requiring explicit clearing between batches. This behavior enables gradient accumulation patterns for large effective batch sizes but requires careful management in standard training loops.

The optimizer.step() method encapsulates the mathematical update equations. For Adam optimization, this single call implements the momentum estimation, squared gradient tracking, bias correction, and parameter update computation automatically. The following code illustrates the mathematical operations that occur within the optimizer:

# Mathematical operations implemented by optimizer.step() for Adam
# These computations happen automatically within the framework
import torch

# Adam hyperparameters (typically β₁=0.9, β₂=0.999, ε=1e-8)
beta_1, beta_2, epsilon = 0.9, 0.999, 1e-8
learning_rate = 0.001

# Optimizer state persists across steps: one pair of moment buffers
# per parameter tensor, plus a 1-indexed step counter that is
# incremented after every update
state = {}
step_count = 1

# For each parameter tensor in the model:
with torch.no_grad():
    for param in model.parameters():
        if param.grad is None:
            continue
        grad = param.grad  # Current gradient

        # Allocate zero-initialized buffers the first time a parameter is seen
        if param not in state:
            state[param] = {
                "momentum_buffer": torch.zeros_like(param),
                "variance_buffer": torch.zeros_like(param),
            }
        buffers = state[param]

        # Step 1: Update biased first moment estimate (momentum)
        # m_t = β₁ * m_{t-1} + (1-β₁) * ∇L(θₜ)
        buffers["momentum_buffer"] = (
            beta_1 * buffers["momentum_buffer"] + (1 - beta_1) * grad
        )

        # Step 2: Update biased second moment estimate (squared gradients)
        # v_t = β₂ * v_{t-1} + (1-β₂) * (∇L(θₜ))²
        buffers["variance_buffer"] = (
            beta_2 * buffers["variance_buffer"] + (1 - beta_2) * grad.pow(2)
        )

        # Step 3: Compute bias-corrected estimates
        momentum_corrected = buffers["momentum_buffer"] / (
            1 - beta_1**step_count
        )
        variance_corrected = buffers["variance_buffer"] / (
            1 - beta_2**step_count
        )

        # Step 4: Apply parameter update using the corrected estimates
        # θ_{t+1} = θₜ - α * m̂_t / (√v̂_t + ε)
        param.sub_(
            learning_rate
            * momentum_corrected
            / (variance_corrected.sqrt() + epsilon)
        )

Framework implementations also handle the memory management challenges discussed under optimizer trade-offs. The optimizer automatically allocates storage for momentum terms and squared gradient statistics, managing the 2-3x memory overhead transparently while providing efficient memory access patterns optimized for the underlying hardware.
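The role of the bias-correction step can be seen by examining the very first update, when both moment buffers start at zero. The sketch below is a scalar, pure-Python illustration with a hypothetical gradient value and function name; it compares the first step size with and without correction.

```python
import math


def first_adam_step(
    grad, lr=0.001, beta_1=0.9, beta_2=0.999, eps=1e-8, bias_correction=True
):
    """Magnitude of the first Adam update (t = 1) from zero-initialized buffers."""
    m = (1 - beta_1) * grad           # first moment after one step
    v = (1 - beta_2) * grad * grad    # second moment after one step
    if bias_correction:
        m = m / (1 - beta_1**1)
        v = v / (1 - beta_2**1)
    return lr * m / (math.sqrt(v) + eps)


corrected = first_adam_step(0.5)
uncorrected = first_adam_step(0.5, bias_correction=False)
print(corrected, uncorrected, uncorrected / corrected)
```

With correction, the first step is almost exactly the learning rate. Without it, the zero-initialized buffers shrink the numerator and denominator by different factors, so with these default decay rates the first step comes out about 3.16 times larger than intended.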

Learning Rate Scheduling Integration

Frameworks integrate learning rate scheduling directly into the optimizer interface, enabling dynamic adjustment of the learning rate α during training. This integration demonstrates how frameworks compose multiple optimization techniques through modular design patterns.

Learning rate schedulers modify the optimizer’s learning rate according to predefined schedules, such as cosine annealing, exponential decay, or step-wise reductions. The following example demonstrates how to integrate cosine annealing with Adam optimization:

import torch
import torch.optim as optim
import torch.optim.lr_scheduler as lr_scheduler
import math

# Initialize optimizer with initial learning rate
optimizer = optim.Adam(
    model.parameters(), lr=0.001, weight_decay=1e-4
)

# Configure cosine annealing scheduler
# T_max: number of epochs for one complete cosine cycle
# eta_min: minimum learning rate (default: 0)
scheduler = lr_scheduler.CosineAnnealingLR(
    optimizer,
    T_max=100,  # Complete cycle over 100 epochs
    eta_min=1e-6,  # Minimum learning rate
)

# Training loop with integrated learning rate scheduling