Welcome

Everyone wants to be an astronaut. Very few want to be the rocket scientist.

In machine learning, we see the same pattern. Everyone wants to train models, run inference, deploy AI. Very few want to understand how the frameworks actually work. Even fewer want to build one.

The world is full of users. We do not have enough builders—people who can debug, optimize, and adapt systems when the black box breaks down.

This is the gap TinyTorch exists to fill.

The Problem

Most people can use PyTorch or TensorFlow. They can import libraries, call functions, train models. But very few understand how these frameworks work: how memory is managed for tensors, how autograd builds computation graphs, how optimizers update parameters. And almost no one has a guided, structured way to learn that from the ground up.

Why does this matter? Because users hit walls that builders do not:

When your model runs out of memory, you need to understand tensor allocation

When gradients explode, you need to understand the computation graph

When training is slow, you need to understand where the bottlenecks are

When deploying on a microcontroller, you need to know what can be stripped away

The framework becomes a black box you cannot debug, optimize, or adapt. You are stuck waiting for someone else to solve your problem.
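As a rough illustration of the first wall above, the memory a dense tensor needs follows directly from its shape and element size. The sketch below is not TinyTorch code: the helper names `tensor_nbytes` and `training_nbytes`, the example layer shapes, and the choice of float32 with an Adam-style optimizer (two extra values per parameter) are all assumptions made for the example. Activation memory is deliberately left out.

```python
from math import prod


def tensor_nbytes(shape, itemsize=4):
    """Bytes needed to store a dense tensor; itemsize=4 assumes float32."""
    return prod(shape) * itemsize


def training_nbytes(param_shapes, itemsize=4, optimizer_slots=2):
    """Rough bytes for weights + gradients + optimizer state.

    optimizer_slots=2 assumes an Adam-style optimizer that stores two
    extra values per parameter. Activations are NOT counted here.
    """
    weights = sum(tensor_nbytes(s, itemsize) for s in param_shapes)
    return weights * (1 + 1 + optimizer_slots)


# Hypothetical two-layer MLP: 784 -> 512 -> 10, with biases.
shapes = [(784, 512), (512,), (512, 10), (10,)]
weights_bytes = sum(tensor_nbytes(s) for s in shapes)
total_bytes = training_nbytes(shapes)

print(f"weights only: {weights_bytes / 1e6:.2f} MB")
print(f"weights + grads + optimizer state: {total_bytes / 1e6:.2f} MB")
```

Even in this tiny case, training needs about four times the memory of the weights alone, before counting activations. That multiplier is the kind of detail a builder knows to look for when a model runs out of memory.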

Students cannot learn this from production code. PyTorch is too large, too complex, too optimized. Fifty thousand lines of C++ across hundreds of files. No one learns to build rockets by studying the Saturn V.

They also cannot learn it from toy scripts. A hundred-line neural network does not reveal the architecture of a framework. It hides it.

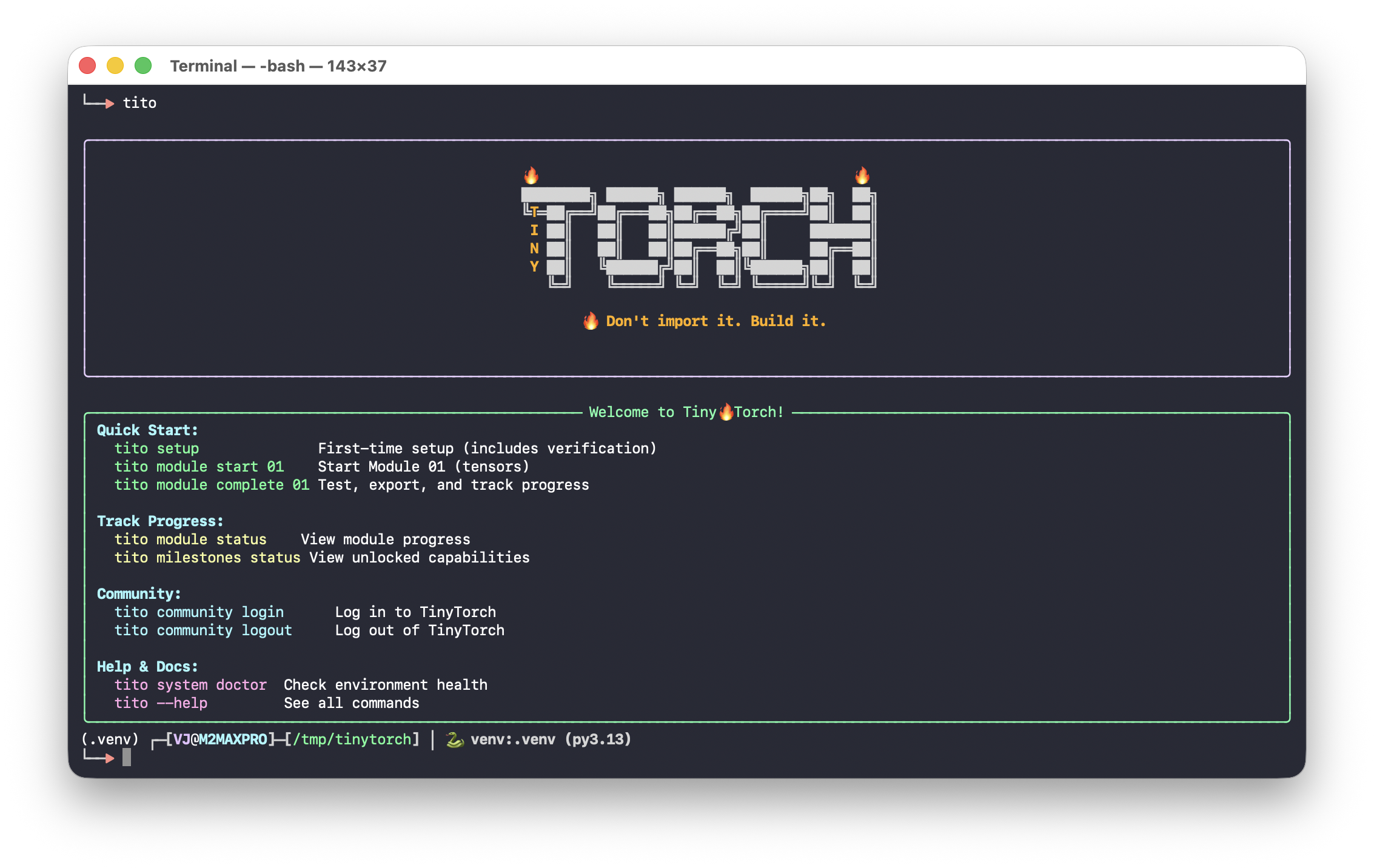

The Solution: AI Bricks

TinyTorch teaches you the AI bricks—the stable engineering foundations you can use to build any AI system. Small enough to learn from: bite-sized code that runs even on a Raspberry Pi. Big enough to matter: showing the real architecture of how frameworks are built.

The Machine Learning Systems textbook teaches you the concepts of the rocket ship: propulsion, guidance, life support.

TinyTorch is where you actually build a small rocket with your own hands. Not a toy—a real framework.

This is how people move from using machine learning to engineering machine learning systems. This is how someone becomes an AI systems engineer rather than someone who only knows how to run code in a notebook.

Who This Is For

You want to understand ML systems deeply, not just use them superficially. If you've wondered "how does that actually work?", this is for you.

You need to debug, optimize, and deploy models in production. Understanding the systems underneath makes you more effective.

You understand memory hierarchies, computational complexity, and performance optimization, and you want to apply that knowledge to ML.

You can use frameworks but want to know how they work. You are preparing for ML infrastructure roles and need systems-level understanding.

What you need is not another API tutorial. You need to build.[1]

What You Will Build

By the end of TinyTorch, you will have implemented:

A tensor library with broadcasting, reshaping, and matrix operations

Activation functions with numerical stability considerations

Neural network layers: linear, convolutional, normalization

An autograd engine that builds computation graphs and computes gradients

Optimizers that update parameters using those gradients

Data loaders that handle batching, shuffling, and preprocessing

A complete training loop that ties everything together

Tokenizers, embeddings, attention, and transformer architectures

Profiling, quantization, and optimization techniques

This is not a simulation. This is the actual architecture of modern ML frameworks, implemented at a scale you can fully understand.
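To give a feel for two of the pieces listed above, here is a minimal sketch of a scalar autograd engine driving a gradient-descent update. It is an illustration under stated assumptions, not TinyTorch's actual API: the class name `Value`, its attributes, and the toy problem (fitting `w` so that `w * 3` equals `6`) are all invented for this example, and only addition and multiplication are supported.

```python
class Value:
    """A scalar that records how it was computed (hypothetical name)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # replaced by the op that creates this node

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph, then apply the chain rule in reverse.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


# Toy problem (an assumption for illustration): find w with w * 3 == 6.
w = Value(0.0)
x, target = Value(3.0), 6.0
learning_rate = 0.05

for step in range(50):
    diff = w * x + Value(-target)   # prediction error
    loss = diff * diff              # squared error
    w.grad = 0.0                    # clear the gradient from the previous step
    loss.backward()                 # fill in w.grad via the computation graph
    w.data -= learning_rate * w.grad  # plain gradient-descent update

print(f"learned w = {w.data:.4f}")
```

Each arithmetic operation creates a node that remembers its inputs and how to pass gradients back to them. `backward()` walks those nodes in reverse order, and the last line of the loop is the whole "optimizer". A real framework does the same thing over tensors rather than scalars, with many more operations.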

How to Learn

Each module follows a Build-Use-Reflect cycle: implement from scratch, apply to real problems, then connect what you built to production systems and understand the tradeoffs. Work through Foundation first, then choose your path based on your interests.

Do not copy-paste. The learning happens in the struggle of implementation.

Use built-in profiling tools. Measure first, optimize second.

Every module includes comprehensive tests. When they pass, you have built something real.

Once your implementation works, compare with PyTorch's equivalent.

Take your time. The goal is not to finish fast. The goal is to understand deeply.
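The "measure first, optimize second" advice above can be tried with nothing more than Python's standard `timeit` module. The sketch below is a stand-in for whatever profiling tools a module provides, not TinyTorch's own profiler. The two dot-product functions and the input size are assumptions chosen only to have something to compare.

```python
import timeit


def dot_loop(a, b):
    """Dot product with explicit indexing."""
    total = 0.0
    for i in range(len(a)):
        total += a[i] * b[i]
    return total


def dot_zip(a, b):
    """Dot product with zip and a generator expression."""
    return sum(x * y for x, y in zip(a, b))


a = [float(i) for i in range(10_000)]
b = [1.0] * 10_000

# Check that both versions agree before comparing their speed.
assert dot_loop(a, b) == dot_zip(a, b)

calls = 20
timings = {}
for fn in (dot_loop, dot_zip):
    # Take the best of several repeats to reduce noise from other processes.
    best = min(timeit.repeat(lambda: fn(a, b), number=calls, repeat=3))
    timings[fn.__name__] = best / calls
    print(f"{fn.__name__}: {timings[fn.__name__] * 1e6:.1f} microseconds per call")
```

Which version wins depends on the interpreter and the machine, so run the measurement and read the result before deciding what to optimize.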

The Bigger Picture

TinyTorch is part of a larger effort to educate a million learners at the edge of AI. The Machine Learning Systems textbook provides the conceptual foundation. TinyTorch provides the hands-on implementation experience. Together, they form a complete path into ML systems engineering.

The next generation of engineers cannot rely on magic. They need to see how everything fits together, from tensors all the way to systems. They need to feel that the world of ML systems is not an unreachable tower but something they can open, shape, and build.

That is what TinyTorch offers: the confidence that comes from building something real.

Prof. Vijay Janapa Reddi (Harvard University) 2025

What’s Next?

See the Big Picture: how all 20 modules connect, what you’ll build, and which path to take.