7 AI Frameworks


In this chapter, we explore the landscape of AI frameworks that serve as the foundation for developing machine learning systems. AI frameworks provide the essential tools, libraries, and environments necessary to design, train, and deploy machine learning models. We delve into the evolutionary trajectory of these frameworks, dissect the workings of TensorFlow, and provide insights into the core components and advanced features that define these frameworks.

Furthermore, we investigate the specialization of frameworks tailored to specific needs, the emergence of frameworks specifically designed for embedded AI, and the criteria for selecting the most suitable framework for your project. This exploration will be rounded off by a glimpse into the future trends that are expected to shape the landscape of ML frameworks in the coming years.

Understand the evolution and capabilities of major machine learning frameworks. This includes graph execution models, programming paradigms, hardware acceleration support, and how they have expanded over time.

Learn the core components and functionality of frameworks like computational graphs, data pipelines, optimization algorithms, training loops, etc. that enable efficient model building.

Compare frameworks across different environments like cloud, edge, and TinyML. Learn how frameworks specialize based on computational constraints and hardware.

Dive deeper into embedded and TinyML focused frameworks like TensorFlow Lite Micro, CMSIS-NN, TinyEngine etc. and how they optimize for microcontrollers.

Explore model conversion and deployment considerations when choosing a framework, including aspects like latency, memory usage, and hardware support.

Evaluate key factors in selecting the right framework like performance, hardware compatibility, community support, ease of use, etc. based on the specific project needs and constraints.

Understand the limitations of current frameworks and potential future trends like using ML to improve frameworks, decomposed ML systems, and high performance compilers.

7.1 Introduction

Machine learning frameworks provide the tools and infrastructure to efficiently build, train, and deploy machine learning models. In this chapter, we will explore the evolution and key capabilities of major frameworks like TensorFlow (TF), PyTorch, and specialized frameworks for embedded devices. We will dive into the components like computational graphs, optimization algorithms, hardware acceleration, and more that enable developers to quickly construct performant models. Understanding these frameworks is essential to leverage the power of deep learning across the spectrum from cloud to edge devices.

ML frameworks handle much of the complexity of model development through high-level APIs and domain-specific languages that allow practitioners to quickly construct models by combining pre-made components and abstractions. For example, frameworks like TensorFlow and PyTorch provide Python APIs to define neural network architectures using layers, optimizers, datasets, and more. This enables rapid iteration compared to coding every model detail from scratch.

A key capability offered by frameworks is distributed training engines that can scale model training across clusters of GPUs and TPUs. This makes it feasible to train state-of-the-art models with billions or trillions of parameters on vast datasets. Frameworks also integrate with specialized hardware like NVIDIA GPUs to further accelerate training via optimizations like parallelization and efficient matrix operations.

In addition, frameworks simplify deploying finished models into production through tools like TensorFlow Serving for scalable model serving and TensorFlow Lite for optimization on mobile and edge devices. Other valuable capabilities include visualization, model optimization techniques like quantization and pruning, and monitoring metrics during training.

Leading open source frameworks like TensorFlow, PyTorch, and MXNet power much of AI research and development today. Commercial offerings like Amazon SageMaker and Microsoft Azure Machine Learning integrate these open source frameworks with proprietary capabilities and enterprise tools.

Machine learning engineers and practitioners leverage these robust frameworks to focus on high-value tasks like model architecture, feature engineering, and hyperparameter tuning instead of infrastructure. The goal is to efficiently build and deploy performant models that solve real-world problems.

In this chapter, we will explore today's leading cloud frameworks and how they have adapted models and tools specifically for embedded and edge deployment. We will compare programming models, supported hardware, optimization capabilities, and more to fully understand how frameworks enable scalable machine learning from the cloud to the edge.

7.2 Framework Evolution

Machine learning frameworks have evolved considerably over the past decade to meet the expanding needs of practitioners and rapid advances in deep learning techniques. Early neural network research was constrained by insufficient data and compute power, and building and training models required extensive low-level coding and infrastructure. The release of large datasets like ImageNet (Deng et al. 2009) and advancements in parallel GPU computing unlocked the potential for far deeper neural networks.

The first ML frameworks, Theano by Team et al. (2016) and Caffe by Jia et al. (2014), were developed by academic institutions (the Montreal Institute for Learning Algorithms and the Berkeley Vision and Learning Center, respectively). Amid growing interest in deep learning following the state-of-the-art performance of AlexNet (Krizhevsky, Sutskever, and Hinton 2012) on the ImageNet dataset, private companies and individuals began developing ML frameworks, resulting in frameworks such as Keras by Chollet (2018), Chainer by Tokui et al. (2019), TensorFlow from Google (Yu et al. 2018), CNTK by Microsoft (Seide and Agarwal 2016), and PyTorch by Facebook (Paszke et al. 2019).

Many of these ML frameworks can be divided into categories, namely high-level vs. low-level frameworks and static vs. dynamic computational graph frameworks. High-level frameworks provide a higher level of abstraction than low-level frameworks: they have pre-built functions and modules for common ML tasks, such as creating, training, and evaluating common ML models, preprocessing data, engineering features, and visualizing data, which low-level frameworks do not have. Thus, high-level frameworks may be easier to use but are not as customizable as low-level frameworks, whose users can define custom layers, loss functions, optimization algorithms, and so on. Examples of high-level frameworks include TensorFlow/Keras and PyTorch. Examples of low-level ML frameworks include TensorFlow with low-level APIs, Theano, Caffe, Chainer, and CNTK.

Frameworks like Theano and Caffe used static computational graphs, which require the full model architecture to be declared upfront and therefore limit flexibility. Around 2016, frameworks such as PyTorch, and later TensorFlow 2.0, began adopting dynamic graphs, which are constructed on-the-fly as the program runs and allow more iterative model development. We will discuss these concepts and details later on in the AI Training section.

The development of these frameworks facilitated an explosion in model size and complexity over time, from early multilayer perceptrons and convolutional networks to modern transformers with billions or trillions of parameters. In 2016, ResNet models by He et al. (2016) achieved record ImageNet accuracy with networks of up to 152 layers and tens of millions of parameters. Then in 2020, the GPT-3 language model from OpenAI (Brown et al. 2020) pushed parameters to an astonishing 175 billion, using model parallelism in frameworks to train across a large GPU cluster.

Each generation of frameworks unlocked new capabilities that powered advancement:

Theano and TensorFlow (2015) introduced computational graphs and automatic differentiation to simplify model building.

CNTK (2016) pioneered efficient distributed training by combining model and data parallelism.

PyTorch (2016) provided imperative programming and dynamic graphs for flexible experimentation.

TensorFlow 2.0 (2019) made eager execution default for intuitiveness and debugging.

TensorFlow Graphics (2020) added 3D data structures to handle point clouds and meshes.

In recent years, the field has converged on a small number of frameworks. Figure 7.1 shows that TensorFlow and PyTorch have become the overwhelmingly dominant ML frameworks, representing more than 95% of ML frameworks used in research and production. Keras was integrated into TensorFlow in 2019; Preferred Networks transitioned Chainer to PyTorch in 2019; and Microsoft stopped actively developing CNTK in 2022 in favor of supporting PyTorch on Windows.

However, a one-size-fits-all approach does not work well across the spectrum from cloud to tiny edge devices. Different frameworks represent various philosophies around graph execution, declarative versus imperative APIs, and more. A declarative API defines what the program should compute, while an imperative API spells out how to compute it step by step. For instance, TensorFlow was originally built around graph execution and declarative-style modeling, while PyTorch adopts eager execution and imperative modeling for more Pythonic flexibility. Each approach carries tradeoffs that we will discuss later in the Basic Components section.

Today's advanced frameworks enable practitioners to develop and deploy increasingly complex models - a key driver of innovation in the AI field. But they continue to evolve and expand their capabilities for the next generation of machine learning. To understand how these systems continue to evolve, we will dive deeper into TensorFlow as an example of how the framework grew in complexity over time.

7.3 Deep Dive into TensorFlow

TensorFlow was developed by the Google Brain team and was released as an open-source software library on November 9, 2015. It was designed for numerical computation using data flow graphs and has since become popular for a wide range of machine learning and deep learning applications.

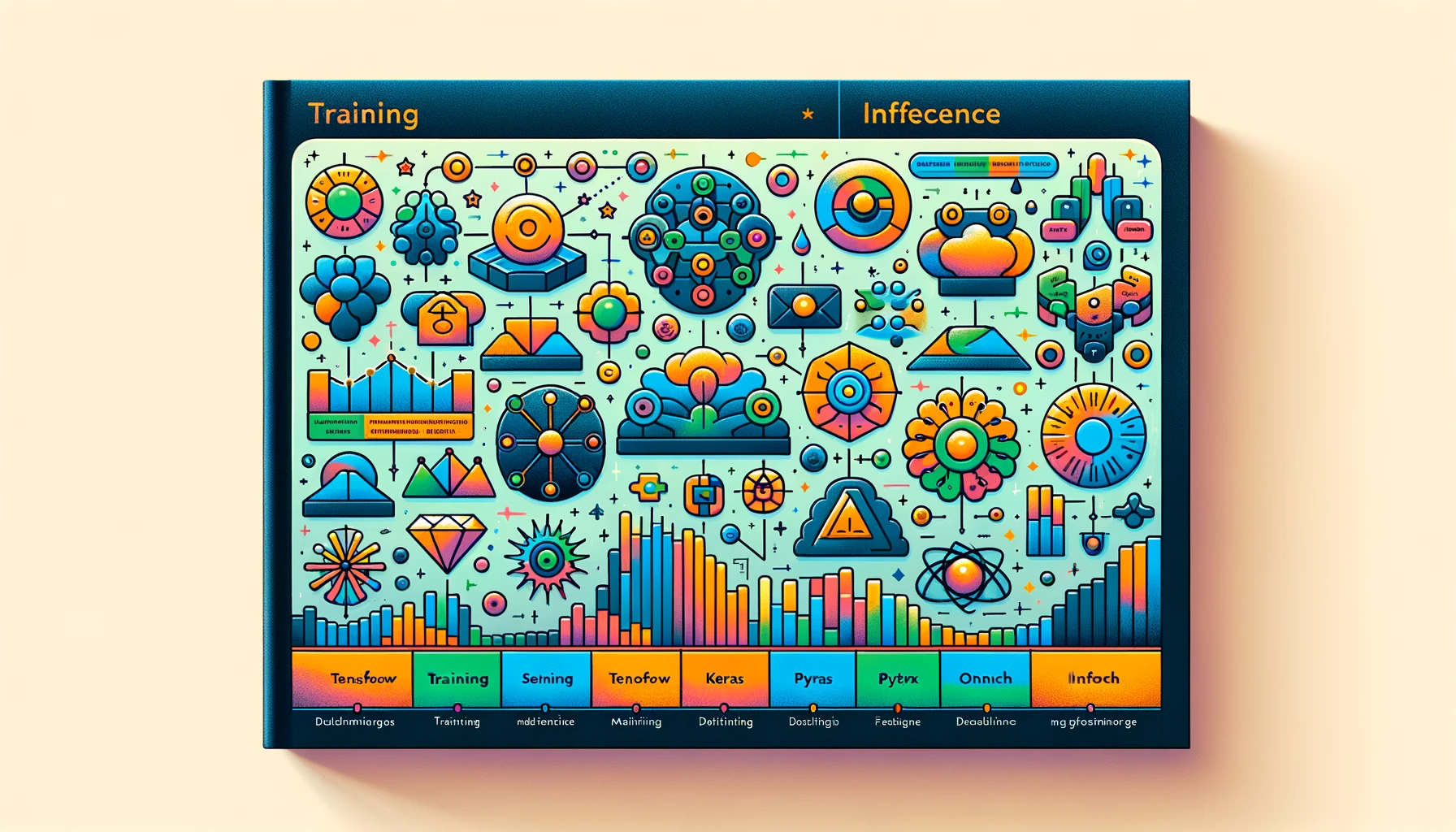

TensorFlow is both a training and inference framework and provides built-in functionality to handle everything from model creation and training, to deployment (Figure 7.2). Since its initial development, the TensorFlow ecosystem has grown to include many different “varieties” of TensorFlow that are each intended to allow users to support ML on different platforms. In this section, we will mainly discuss only the core package.

7.3.1 TF Ecosystem

TensorFlow Core: primary package that most developers engage with. It provides a comprehensive, flexible platform for defining, training, and deploying machine learning models. It includes tf.keras as its high-level API.

TensorFlow Lite: designed for deploying lightweight models on mobile, embedded, and edge devices. It offers tools to convert TensorFlow models to a more compact format suitable for limited-resource devices and provides optimized pre-trained models for mobile.

TensorFlow.js: JavaScript library that allows training and deployment of machine learning models directly in the browser or on Node.js. It also provides tools for porting pre-trained TensorFlow models to the browser-friendly format.

TensorFlow on Edge Devices (Coral): platform of hardware components and software tools from Google that allows the execution of TensorFlow models on edge devices, leveraging Edge TPUs for acceleration.

TensorFlow Federated (TFF): framework for machine learning and other computations on decentralized data. TFF facilitates federated learning, allowing model training across many devices without centralizing the data.

TensorFlow Graphics: library for using TensorFlow to carry out graphics-related tasks, including 3D shapes and point clouds processing, using deep learning.

TensorFlow Hub: repository of reusable, pre-trained machine learning model components that developers can build on, facilitating transfer learning and model composition.

TensorFlow Serving: framework designed for serving and deploying machine learning models for inference in production environments. It provides tools for versioning and dynamically updating deployed models without service interruption.

TensorFlow Extended (TFX): end-to-end platform designed to deploy and manage machine learning pipelines in production settings. TFX encompasses components for data validation, preprocessing, model training, validation, and serving.

TensorFlow was developed to address the limitations of DistBelief (Yu et al. 2018)—the framework in use at Google from 2011 to 2015—by providing flexibility along three axes: 1) defining new layers, 2) refining training algorithms, and 3) defining new training algorithms. To understand what limitations in DistBelief led to the development of TensorFlow, we will first give a brief overview of the Parameter Server Architecture that DistBelief employed (Dean et al. 2012).

The Parameter Server (PS) architecture is a popular design for distributing the training of machine learning models, especially deep neural networks, across multiple machines. The fundamental idea is to separate the storage and management of model parameters from the computation used to update these parameters:

Storage: The storage and management of model parameters were handled by the stateful parameter server processes. Given the large scale of models and the distributed nature of the system, these parameters were sharded across multiple parameter servers. Each server maintained a portion of the model parameters, making it "stateful" as it had to maintain and manage this state across the training process.

Computation: The worker processes, which could be run in parallel, were stateless and purely computational, processing data and computing gradients without maintaining any state or long-term memory (M. Li et al. 2014).
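The division of labor described above can be sketched in a few lines of plain Python. This is only an illustrative toy under stated assumptions, not DistBelief's implementation: the names `ParameterShard`, `worker_step`, and `placement`, the one-parameter-per-shard layout, the linear model, and the learning rate are all hypothetical choices made for the example.

```python
class ParameterShard:
    """Stateful server process: owns one slice of the model parameters."""

    def __init__(self, params, lr=0.1):
        self.params = dict(params)   # parameter name -> value (persistent state)
        self.lr = lr                 # learning rate (assumed value)

    def pull(self, names):
        # Workers fetch the current values they need.
        return {n: self.params[n] for n in names}

    def push(self, grads):
        # Workers send gradients; the shard applies a plain SGD update.
        for name, g in grads.items():
            self.params[name] -= self.lr * g


def worker_step(shards, placement, x, target):
    """Stateless worker: pull parameters, compute gradients, push them back."""
    w = shards[placement["w"]].pull(["w"])["w"]
    b = shards[placement["b"]].pull(["b"])["b"]
    error = (w * x + b) - target             # d(loss)/d(prediction) for loss = 0.5 * error**2
    shards[placement["w"]].push({"w": error * x})
    shards[placement["b"]].push({"b": error})
    return 0.5 * error ** 2                  # the worker keeps nothing between calls


# The model y = w * x + b is sharded: one server holds w, another holds b.
shards = [ParameterShard({"w": 0.0}), ParameterShard({"b": 0.0})]
placement = {"w": 0, "b": 1}                 # which shard owns which parameter
data = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # samples drawn from y = 2x + 1

first_loss = worker_step(shards, placement, *data[0])
for _ in range(500):
    for x, t in data:                        # a real system would run these calls in parallel
        last_loss = worker_step(shards, placement, x, t)

print(shards[0].params, shards[1].params)    # w approaches 2, b approaches 1
```

All persistent state lives in the shards, so a worker can be added, removed, or restarted without losing any of the model, which is the property the architecture is built around.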

DistBelief and its architecture defined above were crucial in enabling distributed deep learning at Google but also introduced limitations that motivated the development of TensorFlow:

7.3.2 Static Computation Graph

In the parameter server architecture, model parameters are distributed across various parameter servers. Since DistBelief was primarily designed for the neural network paradigm, parameters corresponded to a fixed structure of the neural network. If the computation graph were dynamic, the distribution and coordination of parameters would become significantly more complicated. For example, a change in the graph might require the initialization of new parameters or the removal of existing ones, complicating the management and synchronization tasks of the parameter servers. This made it harder to implement models outside the neural framework or models that required dynamic computation graphs.

TensorFlow was designed to be a more general computation framework where the computation is expressed as a data flow graph. This allows for a wider variety of machine learning models and algorithms outside of just neural networks, and provides flexibility in refining models.

7.3.3 Usability & Deployment

The parameter server model involves a clear delineation of roles (worker nodes and parameter servers), and is optimized for data center deployments which might not be optimal for all use cases. For instance, on edge devices or in other non-data center environments, this division introduces overheads or complexities.

TensorFlow was built to run on multiple platforms, from mobile devices and edge devices, to cloud infrastructure. It also aimed to provide ease of use between local and distributed training, and to be more lightweight, and developer friendly.

7.3.4 Architecture Design

Rather than using the parameter server architecture, TensorFlow instead deploys tasks across a cluster. These tasks are named processes that can communicate over a network, and each can execute TensorFlow's core construct: the dataflow graph, and interface with various computing devices (like CPUs or GPUs). This graph is a directed representation where nodes symbolize computational operations, and edges depict the tensors (data) flowing between these operations.

Despite the absence of traditional parameter servers, some tasks, called "PS tasks", still perform the role of storing and managing parameters, reminiscent of parameter servers in other systems. The remaining tasks, which usually handle computation, data processing, and gradient calculations, are referred to as "worker tasks." TensorFlow's PS tasks can execute any computation representable by the dataflow graph, meaning they aren't limited to parameter storage, and the computation can be distributed. This capability makes them significantly more versatile and gives users the power to program the PS tasks using the standard TensorFlow interface, the same one they'd use to define their models. As mentioned above, the structure of dataflow graphs also makes them inherently well suited to parallelism, which allows large datasets to be processed efficiently.

7.3.5 Built-in Functionality & Keras

TensorFlow includes libraries to help users develop and deploy more use-case specific models, and since this framework is open-source, this list continues to grow. These libraries address the entire ML development life-cycle: data preparation, model building, deployment, as well as responsible AI.

Additionally, one of TensorFlow’s biggest advantages is its integration with Keras, though as we will cover in the next section, PyTorch recently also added a Keras integration. Keras is another ML framework that was built to be extremely user-friendly and, as a result, has a high level of abstraction. We will cover Keras in more depth later in this chapter, but when discussing its integration with TensorFlow, the most important thing to note is that it was originally built to be backend agnostic. This means users could define and train models through one cleaner, more intuitive interface, with the complexities of the underlying backend abstracted away and without worrying about compatibility issues between backends. TensorFlow users had some complaints about the usability and readability of TensorFlow’s API, so as TF gained prominence it integrated Keras as its high-level API. This integration offered major benefits to TensorFlow users: more readable and more portable model code that still takes advantage of powerful backend features, Google support, and infrastructure to deploy models on various platforms.

7.3.6 Limitations and Challenges

TensorFlow is one of the most popular deep learning frameworks, but it has drawn criticism, mostly focused on usability and resource usage. The rapid pace of updates enabled by Google's support, while advantageous, has sometimes led to issues of backward compatibility, deprecated functions, and shifting documentation. Additionally, even with the Keras integration, the syntax and learning curve of TensorFlow can be difficult for new users. One major critique of TensorFlow is its high overhead and memory consumption due to the range of built-in libraries and support. Some of these concerns can be addressed by using pared-down versions, but these can still be limiting in resource-constrained environments.

7.3.7 PyTorch vs. TensorFlow

PyTorch and TensorFlow have established themselves as frontrunners in the industry. Both frameworks offer robust functionalities, but they differ in terms of their design philosophies, ease of use, ecosystem, and deployment capabilities.

Design Philosophy and Programming Paradigm: PyTorch uses a dynamic computational graph, an approach known as eager execution. This makes it intuitive and facilitates debugging, since operations are executed immediately and can be inspected on-the-fly. In comparison, earlier versions of TensorFlow were centered around a static computational graph, which required the graph's complete definition before execution. However, TensorFlow 2.0 introduced eager execution by default, making it more aligned with PyTorch in this regard. PyTorch's dynamic nature and Python-based approach have given it simplicity and flexibility, particularly for rapid prototyping. TensorFlow's static graph approach in its earlier versions had a steeper learning curve; the introduction of TensorFlow 2.0, with its Keras integration as the high-level API, has significantly simplified the development process.

Deployment: Although PyTorch is heavily favored in research environments, deploying PyTorch models in production settings was traditionally challenging. However, with the introduction of TorchScript and the TorchServe tool, deployment has become more feasible. One of TensorFlow's strengths lies in its scalability and deployment capabilities, especially on embedded and mobile platforms with TensorFlow Lite. TensorFlow Serving and TensorFlow.js further facilitate deployment in various environments, thus giving it a broader reach in the ecosystem.

Performance: Both frameworks offer efficient hardware acceleration for their operations. However, TensorFlow has a slightly more robust optimization workflow, such as the XLA (Accelerated Linear Algebra) compiler, which can further boost performance. Its static computational graph, in the early versions, was also advantageous for certain optimizations.

Ecosystem: PyTorch has a growing ecosystem with tools like TorchServe for serving models and libraries like TorchVision, TorchText, and TorchAudio for specific domains. As we mentioned earlier, TensorFlow has a broad and mature ecosystem. TensorFlow Extended (TFX) provides an end-to-end platform for deploying production machine learning pipelines. Other tools and libraries include TensorFlow Lite, TensorFlow.js, TensorFlow Hub, and TensorFlow Serving.

Here’s a summarizing comparative analysis:

| Feature/Aspect | PyTorch | TensorFlow |
|---|---|---|
| Design Philosophy | Dynamic computational graph (eager execution) | Static computational graph (early versions); Eager execution in TensorFlow 2.0 |
| Deployment | Traditionally challenging; Improved with TorchScript & TorchServe | Scalable, especially on embedded platforms with TensorFlow Lite |
| Performance & Optimization | Efficient GPU acceleration | Robust optimization with XLA compiler |
| Ecosystem | TorchServe, TorchVision, TorchText, TorchAudio | TensorFlow Extended (TFX), TensorFlow Lite, TensorFlow.js, TensorFlow Hub, TensorFlow Serving |
| Ease of Use | Preferred for its Pythonic approach and rapid prototyping | Initially steep learning curve; Simplified with Keras in TensorFlow 2.0 |

7.4 Basic Framework Components

7.4.1 Tensor data structures

To understand tensors, let us start from the familiar concepts in linear algebra. As demonstrated in Figure 7.3, vectors can be represented as a stack of numbers in a 1-dimensional array. Matrices follow the same idea, and one can think of them as many vectors stacked on each other, making them 2-dimensional. Higher dimensional tensors work the same way. A 3-dimensional tensor is simply a set of matrices stacked on top of each other in another direction. Therefore, vectors and matrices can be considered special cases of tensors, with one and two dimensions, respectively.

Formally, in machine learning a tensor is a multi-dimensional array of numbers. The number of dimensions defines the rank of the tensor. As a generalization of linear algebra, the study of tensors is called multilinear algebra. There are noticeable similarities between matrices and higher ranked tensors. First, it is possible to extend the definitions given in linear algebra to tensors, such as eigenvalues, eigenvectors, and rank (in the linear algebra sense). Furthermore, with the way that we have defined tensors, it is possible to turn higher dimensional tensors into matrices. This turns out to be critical in practice, as multiplication of higher dimensional tensors is often carried out by first converting them into matrices.

Tensors offer a flexible data structure because they can represent data in higher dimensions. For example, to represent color image data, each pixel location (in 2 dimensions) needs color values for red, green, and blue. With tensors, it is easy to contain image data in a single 3-dimensional tensor, with each number representing a certain color value at a certain location in the image. Extending even further, if we wanted to store a series of images, we can add one more dimension (creating a 4-dimensional tensor) that indexes the different images. Image datasets are commonly loaded this way: the famous MNIST dataset, for example, is typically loaded as a single tensor holding all of its images (because MNIST is grayscale, the color dimension has size one or is dropped), allowing a compact representation of all the data in one place.
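To make rank and shape concrete, the sketch below represents tensors as nested Python lists rather than a framework's tensor type. The helpers `shape`, `zeros`, and `flatten`, and the 28 x 28 image size, are assumptions made for illustration only; real frameworks store tensors in contiguous memory and expose equivalent operations natively.

```python
def shape(tensor):
    """Return the size along each dimension of a regular nested list."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


def zeros(*dims):
    """Build a nested-list tensor of the given shape, filled with 0.0."""
    if len(dims) == 1:
        return [0.0] * dims[0]
    return [zeros(*dims[1:]) for _ in range(dims[0])]


def flatten(tensor):
    """List every scalar of a tensor in row-major order."""
    if not isinstance(tensor, list):
        return [tensor]
    return [value for sub in tensor for value in flatten(sub)]


vector = [1.0, 2.0, 3.0]                        # rank 1
matrix = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # rank 2
image = zeros(28, 28, 3)                        # height x width x RGB channels
batch = [zeros(28, 28, 3) for _ in range(8)]    # 8 images along a new first axis

image[0][0] = [255.0, 0.0, 0.0]                 # top-left pixel set to pure red

# Turning a higher-rank tensor into a matrix: one row per image.
batch_as_matrix = [flatten(img) for img in batch]

print(shape(vector), shape(matrix))             # (3,) (3, 2)
print(shape(image), shape(batch))               # (28, 28, 3) (8, 28, 28, 3)
print(shape(batch_as_matrix))                   # (8, 2352)
```

The last step is the matrix conversion mentioned earlier: once each image is a row, a batch can be pushed through an ordinary matrix multiplication.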

7.4.2 Computational graphs

Graph Definition

Computational graphs are a key component of deep learning frameworks like TensorFlow and PyTorch. They allow us to express complex neural network architectures in a way that can be efficiently executed and differentiated. A computational graph consists of a directed acyclic graph (DAG) where each node represents an operation or variable, and edges represent data dependencies between them.

For example, a node might represent a matrix multiplication operation, taking two input matrices (or tensors) and producing an output matrix (or tensor). To visualize this, consider the simple example in Figure 7.4. The directed acyclic graph shown there computes \(z = x \times y\), where each of the variables is just a number.
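A graph this small can be written out by hand. The following is a minimal sketch in plain Python, not how TensorFlow or PyTorch represent graphs internally: the `Node` class and the `forward` and `backward` functions are hypothetical names, and only multiplication and addition are supported.

```python
class Node:
    """One vertex of the graph: either an input variable or an operation."""

    def __init__(self, op=None, inputs=(), value=None):
        self.op = op            # None for input variables, "mul" or "add" for operations
        self.inputs = inputs    # edges: the nodes this node depends on
        self.value = value      # filled in by the forward pass for operations
        self.grad = 0.0         # filled in by the backward pass


def forward(node):
    """Evaluate a node after recursively evaluating its dependencies."""
    if node.op is not None:
        a, b = (forward(n) for n in node.inputs)
        node.value = a * b if node.op == "mul" else a + b
    return node.value


def backward(node, upstream=1.0):
    """Accumulate d(output)/d(node) on every node using the chain rule."""
    node.grad += upstream
    if node.op == "mul":
        a, b = node.inputs
        backward(a, upstream * b.value)   # d(a*b)/da = b
        backward(b, upstream * a.value)   # d(a*b)/db = a
    elif node.op == "add":
        for n in node.inputs:
            backward(n, upstream)         # d(a+b)/da = d(a+b)/db = 1


x = Node(value=3.0)
y = Node(value=4.0)
z = Node(op="mul", inputs=(x, y))   # z = x * y

print(forward(z))        # 12.0
backward(z)
print(x.grad, y.grad)    # 4.0 3.0
```

Because the dependencies are explicit, the same structure that drives the forward evaluation also tells the backward pass where gradients must flow.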

Under the hood, computational graphs represent abstractions for common layers like convolutional, pooling, recurrent, and dense layers, with data such as activations, weights, and biases represented as tensors. Convolutional layers form the backbone of CNN models for computer vision. They detect spatial patterns in input data through learned filters. Recurrent layers like LSTMs and GRUs enable processing sequential data for tasks like language translation. Attention layers are used in transformers to draw global context from the entire input.

Broadly speaking, layers are higher level abstractions that define computations on top of those tensors. For example, a Dense layer performs a matrix multiplication and addition between input/weight/bias tensors. Note that a layer operates on tensors as inputs and outputs and the layer itself is not a tensor. Some key differences:

Layers contain states like weights and biases. Tensors are stateless, just holding data.

Layers can modify internal state during training. Tensors are immutable/read-only.

Layers are higher level abstractions. Tensors are lower level, directly representing data and math operations.

Layers define fixed computation patterns. Tensors flow between layers during execution.

Layers are the units developers assemble when building models. Tensors are the values passed between those units at run time.

So while tensors are a core data structure that layers consume and produce, layers have additional functionality for defining parameterized operations and training. While a layer configures tensor operations under the hood, the layer itself remains distinct from the tensor objects. The layer abstraction makes building and training neural networks much more intuitive. This sort of abstraction enables developers to build models by stacking these layers together, without having to implement the layer logic themselves. For example, calling tf.keras.layers.Conv2D in TensorFlow creates a convolutional layer. The framework handles computing the convolutions, managing parameters, etc. This simplifies model development, allowing developers to focus on architecture rather than low-level implementations. Layer abstractions utilize highly optimized implementations for performance. They also enable portability, as the same architecture can run on different hardware backends like GPUs and TPUs.
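The distinction between a stateful layer and the tensors that pass through it can be illustrated without any framework. The `Dense` class below is a hypothetical stand-in written for this example (it is not `tf.keras.layers.Dense`), and the initialization range and seed are arbitrary assumptions.

```python
import random


class Dense:
    """A layer: owns weights and a bias, and maps an input vector to an output vector."""

    def __init__(self, n_in, n_out, seed=0):
        rng = random.Random(seed)
        # State that persists across calls and would be updated during training.
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                        for _ in range(n_out)]
        self.bias = [0.0] * n_out

    def __call__(self, x):
        # output[j] = sum_i weights[j][i] * x[i] + bias[j]
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weights, self.bias)]


# Stacking layers: the output tensor of one layer is the input of the next.
hidden = Dense(4, 3)
output = Dense(3, 2)

x = [1.0, 0.5, -0.5, 2.0]   # input tensor (rank 1, length 4)
y = output(hidden(x))       # tensors flow through; the layers keep their weights

print(len(y))               # 2
```

Calling the layers again with a different `x` reuses the same weights, which is exactly the state that a bare tensor does not carry.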

In addition, computational graphs include activation functions like ReLU, sigmoid, and tanh that are essential to neural networks and many frameworks provide these as standard abstractions. These functions introduce non-linearities that enable models to approximate complex functions. Frameworks provide these as simple, pre-defined operations that can be used when constructing models. For example, tf.nn.relu in TensorFlow. This abstraction enables flexibility, as developers can easily swap activation functions for tuning performance. Pre-defined activations are also optimized by the framework for faster execution.
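As a rough illustration of how interchangeable these functions are, the sketch below defines the three activations in plain Python and selects one by name. The `ACTIVATIONS` table and `apply_activation` helper are hypothetical; frameworks ship their own optimized versions such as the `tf.nn.relu` mentioned above.

```python
import math


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


# Swapping the non-linearity is a one-word change for the caller.
ACTIVATIONS = {"relu": relu, "sigmoid": sigmoid, "tanh": math.tanh}


def apply_activation(name, values):
    """Apply the chosen non-linearity element-wise to a list of pre-activations."""
    fn = ACTIVATIONS[name]
    return [fn(v) for v in values]


pre_activations = [-2.0, 0.0, 3.0]
print(apply_activation("relu", pre_activations))   # [0.0, 0.0, 3.0]
print(apply_activation("sigmoid", [0.0]))          # [0.5]
```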

In recent years, models like ResNets and MobileNets have emerged as popular architectures, with current frameworks pre-packaging these as computational graphs. Rather than worrying about the fine details, developers can utilize them as a starting point, customizing as needed by substituting layers. This simplifies and speeds up model development, avoiding reinventing architectures from scratch. Pre-defined models include well-tested, optimized implementations that ensure good performance. Their modular design also enables transferring learned features to new tasks via transfer learning. In essence, these pre-defined architectures provide high-performance building blocks to quickly create robust models.

These layer abstractions, activation functions, and predefined architectures provided by the frameworks are what constitute a computational graph. When a user defines a layer in a framework (e.g. tf.keras.layers.Dense()), the framework is configuring computational graph nodes and edges to represent that layer. The layer parameters like weights and biases become variables in the graph. The layer computations become operation nodes (such as the multiplication in Figure 7.4). When you call an activation function like tf.nn.relu(), the framework adds a ReLU operation node to the graph. Predefined architectures are just pre-configured subgraphs that can be inserted into your model's graph. Thus, model definition via high-level abstractions creates a computational graph. The layers, activations, and architectures we use become graph nodes and edges.

When we define a neural network architecture in a framework, we are implicitly constructing a computational graph. The framework uses this graph to determine operations to run during training and inference. Computational graphs bring several advantages over raw code and that’s one of the core functionalities that is offered by a good ML framework:

Explicit representation of data flow and operations

Ability to optimize graph before execution

Automatic differentiation for training

Language and hardware agnosticism - graph can be translated to run on different backends such as GPUs, TPUs, etc.

Portability - graph can be serialized, saved, and restored later
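Two of these advantages, optimizing before execution and serializing the graph, follow from the graph being plain data. The sketch below shows one possible optimization pass (constant folding) and a JSON round trip. The dictionary layout and the names `fold_constants` and `run` are assumptions for this example and do not correspond to any framework's graph format.

```python
import json

# A graph as plain data: node name -> operation and the names of its inputs.
graph = {
    "a": {"op": "const", "value": 2.0},
    "b": {"op": "const", "value": 3.0},
    "c": {"op": "mul", "inputs": ["a", "b"]},   # depends only on constants
    "x": {"op": "input"},
    "out": {"op": "add", "inputs": ["c", "x"]},
}

OPS = {"mul": lambda p, q: p * q, "add": lambda p, q: p + q}


def fold_constants(graph):
    """Replace operations whose inputs are all constants with a constant node."""
    folded = {name: dict(node) for name, node in graph.items()}
    changed = True
    while changed:
        changed = False
        for node in folded.values():
            if node["op"] in OPS and all(
                folded[i]["op"] == "const" for i in node["inputs"]
            ):
                args = [folded[i]["value"] for i in node["inputs"]]
                value = OPS[node["op"]](*args)
                node.clear()
                node.update({"op": "const", "value": value})
                changed = True
    return folded


def run(graph, name, feeds):
    """Evaluate node `name`, reading input nodes from `feeds`."""
    node = graph[name]
    if node["op"] == "const":
        return node["value"]
    if node["op"] == "input":
        return feeds[name]
    return OPS[node["op"]](*(run(graph, i, feeds) for i in node["inputs"]))


optimized = fold_constants(graph)
restored = json.loads(json.dumps(optimized))   # serialize, then restore

print(restored["c"])                           # {'op': 'const', 'value': 6.0}
print(run(restored, "out", {"x": 1.0}))        # 7.0
```

The folded graph gives the same answer as the original while doing less work at run time. A fuller optimizer would also prune the now-unused nodes `a` and `b`.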

Computational graphs are the fundamental building blocks of ML frameworks. The framework compilers and optimizers operate on the graph to generate executable code. Essentially, the high-level abstractions provide a developer-friendly API for building computational graphs; under the hood, it is still graphs all the way down. So while you may not directly manipulate graphs as a framework user, they enable your high-level model specifications to be efficiently executed. The abstractions simplify model-building while computational graphs make it possible.

Static vs. Dynamic Graphs

Deep learning frameworks have traditionally followed one of two approaches for expressing computational graphs.

Static graphs (declare-then-execute): With this model, the entire computational graph must be defined upfront before it can be run. All operations and data dependencies must be specified during the declaration phase. TensorFlow originally followed this static approach - models were defined in a separate context, then a session was created to run them. The benefit of static graphs is they allow more aggressive optimization, since the framework can see the full graph. But it also tends to be less flexible for research and interactivity. Changes to the graph require re-declaring the full model.

For example:

x = tf.placeholder(tf.float32)

y = tf.matmul(x, weights) + biases

Here weights and biases are assumed to be variables declared earlier. The model is defined separately from execution, like building a blueprint. In TensorFlow 1.x, this is done using tf.Graph(). All ops and variables must be declared upfront. Subsequently, the graph is compiled and optimized before running. Execution happens later, in a session, by feeding in tensor values.

Dynamic graphs (define-by-run): In contrast to declare (all) first and then execute, the graph is built dynamically as execution happens. There is no separate declaration phase - operations execute immediately as they are defined. This style is more imperative and flexible, facilitating experimentation.

PyTorch uses dynamic graphs, building the graph on-the-fly as execution happens. For example, consider the following code snippet, where the graph is built as the execution is taking place:

x = torch.randn(4, 784)

y = torch.matmul(x, weights) + biases

In the above example, there are no separate compile/build/run phases. Operations are defined and executed immediately. With dynamic graphs, definition is intertwined with execution. This provides a more intuitive, interactive workflow. But the downside is less potential for optimizations, since the framework only sees the graph as it is built.

Recently, however, the distinction has blurred as frameworks adopt both modes. TensorFlow 2.0 defaults to dynamic graph mode, while still letting users work with static graphs when needed. Dynamic declaration makes frameworks easier to use, while static models provide optimization benefits. The ideal framework offers both options.

Static graph declaration provides optimization opportunities but less interactivity. While dynamic execution offers flexibility and ease of use, it may have performance overhead. Here is a table comparing the pros and cons of static vs dynamic execution graphs:

| Execution Graph | Pros | Cons |
|---|---|---|
| Static (Declare-then-execute) | Enables graph optimizations by seeing the full model ahead of time; can export and deploy frozen graphs; graph is packaged independently of code | Less flexible for research and iteration; changes require rebuilding the graph; execution has separate compile and run phases |
| Dynamic (Define-by-run) | Intuitive imperative style like Python code; interleaves graph building with execution; easy to modify graphs; debugging fits seamlessly into the workflow | Harder to optimize without the full graph; possible slowdowns from graph building during execution; can require more memory |
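The difference between the two styles can be illustrated without any framework. The following toy sketch uses hypothetical names (`run_static`, `Traced`, `run_dynamic`) and is not how TensorFlow or PyTorch are implemented. It shows a static recipe that is fixed before execution, next to a define-by-run version in which ordinary Python control flow decides which operations are recorded.

```python
# Toy contrast between declare-then-execute and define-by-run.
# All names are hypothetical; no real framework API is used.

# Static style: describe the computation first, run it later.
static_graph = [("mul", 3.0), ("add", 1.0)]       # fixed recipe: y = x * 3 + 1

def run_static(graph, x):
    """Execute a pre-declared list of operations on an input value."""
    for op, const in graph:
        x = x * const if op == "mul" else x + const
    return x

# Dynamic style: operations run immediately and are recorded as they happen.
class Traced:
    """A value that logs every operation applied to it onto a shared tape."""
    def __init__(self, value, tape):
        self.value, self.tape = value, tape

    def __mul__(self, c):
        self.tape.append(("mul", c))
        return Traced(self.value * c, self.tape)

    def __add__(self, c):
        self.tape.append(("add", c))
        return Traced(self.value + c, self.tape)

def run_dynamic(x):
    """Python control flow decides which operations end up on the tape."""
    tape = []
    y = Traced(x, tape) * 3.0
    if y.value < 0:              # data-dependent branch: the graph differs per input
        y = y * -1.0
    y = y + 1.0
    return y.value, tape

static_out = run_static(static_graph, 2.0)        # same graph for every input
pos_out, pos_tape = run_dynamic(2.0)              # tape has two operations
neg_out, neg_tape = run_dynamic(-2.0)             # tape has three operations
```

The static recipe can be analyzed as a whole before it runs, whereas the dynamic tape is only known after execution and may change from one input to the next, which mirrors the optimization versus flexibility tradeoff in the table.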

7.4.3 Data Pipeline Tools

Computational graphs can only be as good as the data they learn from and work on. Therefore, feeding training data efficiently is crucial for optimizing deep neural network performance, though it is often overlooked as one of the core functionalities. Many modern AI frameworks provide specialized pipelines to ingest, process, and augment datasets for model training.

Data Loaders

At the core of these pipelines are data loaders, which read training examples from sources such as files, databases, and object storage. Deep learning models require diverse data formats depending on the application. Popular formats include:

CSV - A versatile, simple format often used for tabular data.

TFRecord - TensorFlow's native binary format, optimized for performance.

Parquet - Columnar storage, offering efficient data compression and retrieval.

JPEG/PNG - Commonly used for image data.

WAV/MP3 - Prevalent formats for audio data.

For instance, tf.data is TensorFlow's data loading pipeline: https://www.tensorflow.org/guide/data.

Data loaders batch examples to leverage vectorization support in hardware. Batching refers to grouping multiple data points for simultaneous processing, which exploits the vectorized computation capabilities of hardware like GPUs. While typical batch sizes range from 32 to 512 examples, the optimal size often depends on the memory footprint of the data and the specific hardware constraints. Advanced loaders can also stream large datasets from disk, networks, or cloud storage instead of loading them fully into memory, which enables virtually unlimited dataset sizes.

Data loaders can also shuffle data across epochs for randomization, and preprocess features in parallel with model training to expedite the training process. Randomly shuffling the order of examples between training epochs reduces bias and improves generalization.

Data loaders also support caching and prefetching strategies to optimize data delivery for fast, smooth model training. Caching keeps preprocessed batches in memory so they can be reused across multiple training steps, which eliminates redundant processing. Prefetching, on the other hand, involves preloading subsequent batches so that the model rarely idles waiting for data.
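The shuffling, batching, and prefetching behavior described above can be sketched with the Python standard library alone. This is an illustrative toy, not the implementation used by tf.data or any other loader; the `batches` and `prefetch` helpers are hypothetical names, and the list of integers stands in for examples read from storage.

```python
import queue
import random
import threading

def batches(examples, batch_size, seed):
    """Shuffle a copy of the examples, then yield fixed-size batches."""
    order = list(examples)
    random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]

def prefetch(generator, buffer_size=2):
    """Run `generator` in a background thread, keeping up to `buffer_size` items ready."""
    buffer = queue.Queue(maxsize=buffer_size)
    done = object()                      # sentinel marking the end of the stream

    def producer():
        for item in generator:
            buffer.put(item)             # blocks while the buffer is full
        buffer.put(done)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buffer.get()              # waits only if the producer is behind
        if item is done:
            return
        yield item

dataset = list(range(10))                # stand-in for examples read from storage
# A different seed per epoch gives a different shuffle order each time.
epoch_batches = [list(prefetch(batches(dataset, batch_size=4, seed=epoch)))
                 for epoch in range(2)]
```

In a real pipeline the producer thread would be doing file reads and preprocessing, so the training loop consuming the batches overlaps its compute with that I/O.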

7.4.4 Data Augmentation

Besides loading, data augmentation expands datasets synthetically. Augmentations apply random transformations like flipping, cropping, rotating, altering color, adding noise etc. for images. For audio, common augmentations involve mixing clips with background noise, or modulating speed/pitch/volume.

Frameworks like TensorFlow and PyTorch simplify applying random augmentations each epoch by integrating them into the data pipeline. By programmatically increasing variation in the training data distribution, augmentations reduce overfitting and improve model generalization.

Many frameworks make it easy to integrate augmentations into the data pipeline so they are applied on-the-fly each epoch. Together, performant data loaders and extensive augmentations enable practitioners to feed massive, varied datasets to neural networks efficiently. Hands-off data pipelines represent a significant improvement in usability and productivity. They allow developers to focus more on model architecture and less on data wrangling when training deep learning models.
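As a rough illustration of on-the-fly augmentation, the sketch below applies a random horizontal flip and additive noise to a tiny grayscale image stored as nested lists. The `augment` function and its parameters (`flip_prob`, `noise_std`) are hypothetical choices made for this example and do not correspond to a specific framework API.

```python
import random

def augment(image, rng, flip_prob=0.5, noise_std=0.05):
    """Return a randomly flipped, noise-perturbed copy of a 2D grayscale image."""
    rows = [list(row) for row in image]            # copy; the original stays intact
    if rng.random() < flip_prob:
        rows = [row[::-1] for row in rows]         # horizontal flip
    # Add Gaussian noise and clamp each pixel to the valid [0, 1] range.
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, noise_std))) for p in row]
            for row in rows]

image = [[0.0, 0.5, 1.0],
         [0.2, 0.4, 0.6]]
rng = random.Random(0)
# A fresh random variant is drawn each epoch; the stored dataset never changes.
variants = [augment(image, rng) for _epoch in range(3)]
```

Because the transformation is applied when a batch is read, the dataset on disk stays the same size while the model sees a slightly different version of each example every epoch.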

7.4.5 Optimization Algorithms

Training a neural network is fundamentally an iterative process that seeks to minimize a loss function. At its core, the goal is to fine-tune the model weights and parameters to produce predictions as close as possible to the true target labels. Machine learning frameworks have greatly streamlined this process by offering extensive support in three critical areas: loss functions, optimization algorithms, and regularization techniques.

Loss functions quantify the difference between the model's predictions and the true values. Different tasks call for different loss functions, since the loss function defines the objective that training aims for. Commonly used loss functions are Mean Squared Error (MSE) for regression tasks and Cross-Entropy Loss for classification tasks.

To demonstrate some of the loss functions, imagine that you have a set of inputs and the corresponding outputs, where \(Y_n\) denotes the \(n\)th output. The inputs are fed into the model, and the model outputs a prediction, which we call \(\hat{Y_n}\). With the predicted and true values, we can, for example, use the MSE as the loss function:

\[MSE = \frac{1}{N}\sum_{n=1}^{N}(Y_n - \hat{Y_n})^2\]

If the problem is a classification problem, we do not want to use the MSE, since the distance between the predicted value and the real value does not have significant meaning. For example, when recognizing handwritten digits, 9 is numerically further from 2 than 3 is, but predicting 9 for a true label of 2 is no more wrong than predicting 3. Therefore, we use the cross-entropy loss function, which is defined as:

\[\text{Cross-Entropy} = -\sum_{n=1}^{N}Y_n\log(\hat{Y_n})\]
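A short worked example may help to connect the two formulas to numbers. The sketch below implements both losses directly from their definitions. For the cross-entropy case it assumes a single example with a one-hot label, so the sum runs over the classes of that example; this reading, like the sample values, is an assumption made for illustration.

```python
import math

def mse(y_true, y_pred):
    """Mean squared error over N targets and predictions."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)

def cross_entropy(y_true, y_pred):
    """Cross-entropy for a one-hot label and predicted class probabilities."""
    return -sum(y * math.log(p) for y, p in zip(y_true, y_pred))

# Regression: errors of 1 and -2 give (1 + 4) / 2 = 2.5.
mse_value = mse([3.0, 5.0], [2.0, 7.0])

# Classification: the true class is the third one.
label = [0.0, 0.0, 1.0]
confident = cross_entropy(label, [0.05, 0.05, 0.90])   # -log(0.9), about 0.105
unsure = cross_entropy(label, [0.40, 0.30, 0.30])      # -log(0.3), about 1.204
```

Only the probability assigned to the correct class enters the cross-entropy, so the loss does not depend on any numeric distance between class labels.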

Once a loss like the ones above is computed, we need methods to adjust the model's parameters to reduce this loss during training. To do so, current frameworks use a gradient-based approach, which computes how much a small change in each weight changes the value of the loss function. Knowing this gradient, the optimizer moves the weights in the direction that reduces the loss. There are many challenges associated with this, however, primarily stemming from the fact that the optimization problem is not convex, which makes it hard to solve. Modern frameworks come equipped with efficient implementations of several optimization algorithms, many of which are variants of gradient descent with stochastic methods and adaptive learning rates. More information with clear examples can be found in the AI Training section.
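The basic update rule can be sketched on a deliberately simple problem. The example below fits a one-parameter model \(y = w x\) with plain gradient descent on the MSE loss. The data, the learning rate, and the function names are assumptions chosen for illustration, and this convex toy problem avoids the non-convexity issues mentioned above.

```python
def loss(w, xs, ys):
    """MSE loss of the model y = w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grad(w, xs, ys):
    """Analytic derivative of the MSE loss with respect to w."""
    return sum(-2 * x * (y - w * x) for x, y in zip(xs, ys)) / len(xs)

xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]     # generated by y = 2x
w, learning_rate = 0.0, 0.05
history = []
for _step in range(100):
    history.append(loss(w, xs, ys))
    w -= learning_rate * grad(w, xs, ys)       # step against the gradient
```

After the loop, `w` is very close to 2 and the recorded loss values shrink from step to step. Optimizers such as Adam follow the same pattern but adapt the step size per parameter.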

Last but not least, overly complex models tend to overfit, meaning they perform well on the training data but fail to generalize to new, unseen data (see Overfitting). To counteract this, regularization methods are employed to penalize model complexity and encourage it to learn simpler patterns. Dropout for instance randomly sets a fraction of input units to 0 at each update during training, which helps prevent overfitting.

However, there are cases where the problem is more complex than what the model can represent, and this may result in underfitting. Therefore, choosing the right model architecture is also a critical step in the training process. Further heuristics and techniques are discussed in the AI Training section.

Frameworks also provide efficient implementations of gradient descent, Adagrad, Adadelta, and Adam. Adding regularization like dropout and L1/L2 penalties helps prevent overfitting during training. Batch normalization accelerates training by normalizing the inputs to layers.
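The two regularizers mentioned above are simple enough to sketch directly. The code below is a minimal illustration, not a framework implementation: `dropout` uses the common "inverted" variant, which rescales the surviving activations during training so that nothing needs to change at inference time, and `l2_penalty` computes the term that would be added to the loss. Both function names and the sample values are assumptions.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a random fraction of units and rescale the rest."""
    if not training or rate == 0.0:
        return list(activations)               # inference: pass through unchanged
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, strength):
    """L2 regularization term: strength times the sum of squared weights."""
    return strength * sum(w * w for w in weights)

rng = random.Random(0)
train_out = dropout([1.0] * 8, rate=0.5, rng=rng)                  # entries are 0.0 or 2.0
eval_out = dropout([1.0] * 8, rate=0.5, rng=rng, training=False)   # unchanged
penalty = l2_penalty([1.0, -2.0], strength=0.01)                   # 0.01 * (1 + 4)
```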

7.4.6 Model Training Support

Before training a defined neural network model, a compilation step is required. During this step, the high-level architecture of the neural network is transformed into an optimized, executable format. This process comprises several steps. The construction of the computational graph is the first step. It represents all the mathematical operations and data flow within the model. We discussed this earlier.

During training, the focus is on executing the computational graph. Every parameter within the graph, such as weights and biases, is assigned an initial value. This value might be random or based on a predefined logic, depending on the chosen initialization method.

The next critical step is memory allocation. Essential memory is reserved for the model's operations on both CPUs and GPUs, ensuring efficient data processing. The model's operations are then mapped to the available hardware resources, particularly GPUs or TPUs, to expedite computation. Once compilation is finalized, the model is prepared for training.

The training process employs various tools to enhance efficiency. Batch processing is commonly used to maximize computational throughput. Techniques like vectorization enable operations on entire data arrays, rather than proceeding element-wise, which bolsters speed. Optimizations such as kernel fusion (refer to the Optimizations chapter) amalgamate multiple operations into a single action, minimizing computational overhead. Operations can also be segmented into phases, facilitating the concurrent processing of different mini-batches at various stages.

Frameworks can checkpoint the state regularly, preserving intermediate model versions during training. This ensures that if an interruption occurs, the progress isn't wholly lost, and training can recommence from the last checkpoint. Additionally, the system can monitor the model's performance against a validation data set. Should the model begin to overfit (that is, if its performance on the validation set declines), training can be halted automatically, a technique known as early stopping, conserving computational resources and time.
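Checkpointing and early stopping can be combined in a few lines. The following sketch simulates training with a pre-recorded list of validation losses instead of a real model; the function name, the `patience` parameter, and the dictionary used as a stand-in for model weights are all assumptions made for illustration.

```python
def train_with_early_stopping(val_losses, patience=2):
    """Simulate training where epoch i produces validation loss val_losses[i]."""
    best_loss, best_epoch, checkpoint = float("inf"), -1, None
    for epoch, val_loss in enumerate(val_losses):
        weights = {"epoch": epoch}              # stand-in for real model weights
        if val_loss < best_loss:
            best_loss, best_epoch = val_loss, epoch
            checkpoint = dict(weights)          # save the best state seen so far
        elif epoch - best_epoch >= patience:
            break                               # no improvement for `patience` epochs
    return checkpoint, epoch

# Validation loss improves for three epochs, then starts to rise (overfitting).
checkpoint, stopped_at = train_with_early_stopping(
    [0.90, 0.70, 0.60, 0.65, 0.70, 0.80, 0.95])
```

With these numbers, training stops at epoch 4 and the saved checkpoint is the one from epoch 2, the epoch with the lowest validation loss.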

ML frameworks incorporate a blend of model compilation, enhanced batch processing methods, and utilities such as checkpointing and early stopping. These resources manage the complex aspects of performance, enabling practitioners to zero in on model development and training. As a result, developers experience both speed and ease when utilizing the capabilities of neural networks.

7.4.7 Validation and Analysis

After training deep learning models, frameworks provide utilities to evaluate performance and gain insights into the models' workings. These tools enable disciplined experimentation and debugging.

Evaluation Metrics

Frameworks include implementations of common evaluation metrics for validation:

Accuracy - Fraction of correct predictions overall. Widely used for classification.

Precision - Of positive predictions, how many were actually positive. Useful for imbalanced datasets.

Recall - Of actual positives, how many did we predict correctly. Measures completeness.

F1-score - Harmonic mean of precision and recall. Combines both metrics.

AUC-ROC - Area under ROC curve. Used for classification threshold analysis.

MAP - Mean Average Precision. Evaluates ranked predictions in retrieval/detection.

Confusion Matrix - Matrix that shows the true positives, true negatives, false positives, and false negatives. Provides a more detailed view of classification performance.

These metrics quantify model performance on validation data for comparison.
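Several of these metrics follow directly from the four entries of a binary confusion matrix. The sketch below computes them from scratch for a small set of made-up labels and predictions; the function name and the sample data are hypothetical, and frameworks ship their own tested implementations.

```python
def classification_metrics(y_true, y_pred):
    """Compute confusion-matrix counts and derived metrics for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "confusion": {"tp": tp, "tn": tn, "fp": fp, "fn": fn},
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
```

Here accuracy is 0.75 while precision and recall are both 2/3, which illustrates why accuracy alone can look better than the model's performance on the positive class.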

Visualization

Visualization tools provide insight into models:

Loss curves - Plot training and validation loss over time to spot overfitting.

Activation grids - Illustrate features learned by convolutional filters.

Projection - Reduce dimensionality for intuitive visualization.

Precision-recall curves - Assess classification tradeoffs.

Tools like TensorBoard for TensorFlow and TensorWatch for PyTorch enable real-time metrics and visualization during training.

7.4.8 Differentiable Programming

Training methods such as backpropagation rely on how the loss function changes with respect to changes in the weights (which is essentially the definition of a derivative). The ability to train large machine learning models quickly and efficiently therefore relies on the computer’s ability to take derivatives. This makes differentiable programming one of the most important elements of a machine learning framework.

There are primarily four methods that we can use to make computers take derivatives. First, we can work out the derivatives by hand and input them to the computer. This would quickly become a nightmare for networks with many layers, since every derivative in the backpropagation steps would have to be computed manually. Another method is symbolic differentiation using computer algebra systems such as Mathematica, but this can introduce a layer of inefficiency, as there needs to be a level of abstraction to take derivatives. Numerical differentiation, the practice of approximating gradients using finite difference methods, suffers from many problems, including high computational cost, and a larger step size can lead to significant errors. This leads to automatic differentiation, which exploits the primitive functions that computers use to represent operations to obtain an exact derivative. With automatic differentiation, the computational complexity of computing the gradient is proportional to that of computing the function itself. The intricacies of automatic differentiation are no longer dealt with by end users, but resources to learn more are widely available. Automatic differentiation and differentiable programming are ubiquitous today and are done efficiently and automatically by modern machine learning frameworks.
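The contrast between numerical and automatic differentiation can be seen in a small sketch. The code below implements forward-mode automatic differentiation with dual numbers, chosen here only because it fits in a few lines; frameworks typically use reverse mode (backpropagation) for training. The `Dual` class, the test function `f`, and the step sizes are assumptions made for illustration.

```python
class Dual:
    """A number that carries its value and its derivative together."""
    def __init__(self, value, deriv=0.0):
        self.value, self.deriv = value, deriv

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule applied to the primitive multiplication.
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__

def f(x):
    return x * x * x + 2 * x               # f'(x) = 3x^2 + 2

def autodiff(func, x):
    """Seed the input derivative with 1 and read the derivative off the output."""
    return func(Dual(x, 1.0)).deriv

def finite_difference(func, x, h):
    """Forward finite-difference approximation with step size h."""
    return (func(x + h) - func(x)) / h

exact = autodiff(f, 3.0)                    # 29.0, exact
coarse = finite_difference(f, 3.0, 0.1)     # about 29.91, large step gives large error
fine = finite_difference(f, 3.0, 1e-6)      # close to 29, but still approximate
```

The automatic result is exact because each primitive operation propagates its own derivative rule, whereas the finite-difference estimate depends on the choice of step size.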

7.4.9 Hardware Acceleration

The trend to continuously train and deploy larger machine learning models has essentially made hardware acceleration support a necessity for machine learning platforms (Figure 7.5). Deep layers of neural networks require many matrix multiplications, which attracts hardware that can compute matrix operations fast and in parallel. In this landscape, two types of hardware architectures, the GPU and TPU, have emerged as leading choices for training machine learning models.

The use of hardware accelerators for deep learning was popularized by AlexNet, which paved the way for future works to utilize GPUs as hardware accelerators for training computer vision models. GPUs, or Graphics Processing Units, excel in handling a large number of computations at once, making them ideal for the matrix operations that are central to neural network training. Their architecture, designed for rendering graphics, turns out to be well suited to the kind of mathematical operations required in machine learning. While they are very useful for machine learning tasks and have been implemented in many hardware platforms, GPUs are still general purpose in that they can be used for other applications.

On the other hand, Tensor Processing Units (TPUs) are hardware units designed specifically for neural networks. They focus on the multiply and accumulate (MAC) operation, and their hardware essentially consists of a large hardware matrix that contains elements efficiently computing the MAC operation. This concept, called the systolic array architecture, was pioneered by Kung and Leiserson (1979) and has proven to be a useful structure for efficiently computing matrix products and other operations within neural networks (such as convolutions).

While TPUs can drastically reduce training times, they also have disadvantages. For example, many operations within the machine learning frameworks (primarily TensorFlow here, since the TPU directly integrates with it) are not supported by TPUs. They also cannot support custom operations from the machine learning frameworks, and the network design must closely align with the hardware capabilities.

Today, NVIDIA GPUs dominate training, aided by software libraries like CUDA, cuDNN, and TensorRT. Frameworks also tend to include optimizations to maximize performance on these hardware types, like pruning unimportant connections and fusing layers. Combining these techniques with hardware acceleration provides greater efficiency. For inference, hardware is increasingly moving towards optimized ASICs and SoCs. Google's TPUs accelerate models in data centers. Apple, Qualcomm, and others now produce AI-focused mobile chips. The NVIDIA Jetson family targets autonomous robots.

7.5 Advanced Features

7.5.1 Distributed Training

As machine learning models have become larger over the years, it has become essential for large models to utilize multiple computing nodes in the training process. This process, called distributed learning, has allowed for higher training capabilities, but has also imposed challenges in implementation.

We can consider three different ways to spread the work of training machine learning models across multiple computing nodes. The first is input data partitioning (data parallelism), in which multiple processors run the same model on different partitions of the input. This is the easiest to implement and is available in many machine learning frameworks. More challenging is model parallelism, in which multiple computing nodes work on different parts of the model, and pipelined model parallelism, in which multiple computing nodes work on different layers of the model for the same input. The latter two are active research areas.

ML frameworks that support distributed learning include TensorFlow (through its tf.distribute module), PyTorch (through its torch.nn.DataParallel and torch.nn.parallel.DistributedDataParallel modules), and MXNet (through its gluon API).
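Input data partitioning works because gradients can be computed independently on each partition and then combined. The sketch below simulates two workers in a single process, using a hypothetical one-parameter model and made-up data; real frameworks perform the averaging step with collective communication across devices.

```python
def gradient(w, shard):
    """MSE gradient for the one-parameter model y = w * x on one data shard."""
    return sum(-2 * x * (y - w * x) for x, y in shard) / len(shard)

data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
shards = [data[:2], data[2:]]          # each simulated worker gets an equal slice
w = 0.5                                # every worker holds the same model copy

# Each worker computes a gradient on its own shard only.
worker_grads = [gradient(w, shard) for shard in shards]
# Averaging the per-worker gradients plays the role of an all-reduce.
averaged = sum(worker_grads) / len(worker_grads)
# With equal shard sizes this matches the gradient on the full batch.
full_batch = gradient(w, data)
```

Because the averaged gradient equals the full-batch gradient when shards are the same size, every worker can apply the same update and the model copies stay in sync.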

7.5.2 Model Conversion

Machine learning models can be represented in various ways so that they can be used within different frameworks and on different device types. For example, a model can be converted to be compatible with inference frameworks on a mobile device. The default format for TensorFlow models is checkpoint files containing weights and architectures, which are needed in case we have to retrain the models. But for mobile deployment, models are typically converted to TensorFlow Lite format. TensorFlow Lite uses a compact flatbuffer representation and optimizations for fast inference on mobile hardware, discarding the unnecessary baggage associated with training metadata, such as checkpoint file structures.

Model optimizations like quantization (see Optimizations chapter) can further optimize models for target architectures like mobile. This reduces precision of weights and activations to uint8 or int8 for a smaller footprint and faster execution with supported hardware accelerators. For post-training quantization, TensorFlow's converter handles analysis and conversion automatically.
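To illustrate what reducing weights to int8 involves, here is a minimal sketch of affine quantization, in which a real value is approximated as (q - zero_point) * scale. It is a simplified, assumed scheme for a single list of weights; real converters choose ranges per tensor or per channel and may calibrate on sample data. All function names and the example weights are hypothetical.

```python
def quantize_params(values, qmin=-128, qmax=127):
    """Pick a scale and zero point that map the value range onto int8."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)   # range must include 0
    scale = (hi - lo) / (qmax - qmin)
    zero_point = round(qmin - lo / scale)
    return scale, zero_point

def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers in [qmin, qmax]."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]

def dequantize(q_values, scale, zero_point):
    """Recover approximate floats from the stored integers."""
    return [(q - zero_point) * scale for q in q_values]

weights = [-1.0, -0.25, 0.0, 0.4, 1.55]
scale, zero_point = quantize_params(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four, at the cost of a rounding error that is bounded by roughly one quantization step.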

Frameworks like TensorFlow simplify deploying trained models to mobile and embedded IoT devices through easy conversion APIs for TFLite format and quantization. Ready-to-use conversion enables high performance inference on mobile without manual optimization burden. Besides TFLite, other common targets include TensorFlow.js for web deployment, TensorFlow Serving for cloud services, and TensorFlow Hub for transfer learning. TensorFlow's conversion utilities handle these scenarios to streamline end-to-end workflows.

More information about model conversion in TensorFlow is linked here.

7.5.3 AutoML, No-Code/Low-Code ML

In many cases, machine learning can have a relatively high barrier of entry compared to other fields. To successfully train and deploy models, one needs to have a critical understanding of a variety of disciplines, from data science (data processing, data cleaning), model structures (hyperparameter tuning, neural network architecture), hardware (acceleration, parallel processing), and more depending on the problem at hand. The complexity of these problems has led to the introduction of frameworks such as AutoML, which aims to make “Machine learning available for non-Machine Learning experts” and to “automate research in machine learning”. They have constructed AutoWEKA, which aids in the complex process of hyperparameter selection, as well as Auto-sklearn and Auto-PyTorch, extensions of AutoWEKA to the popular sklearn and PyTorch libraries.

While this work on automating parts of machine learning tasks is underway, others have focused on making it easier to construct machine learning models through no-code/low-code machine learning, which uses a drag-and-drop, easy-to-navigate user interface. Companies such as Apple, Google, and Amazon have already created these easy-to-use platforms to allow users to construct machine learning models that can integrate with their ecosystems.

These steps to lower the barrier to entry continue to democratize machine learning, making it easier for beginners to access and simplifying the workflow for experts.

7.5.4 Advanced Learning Methods

Transfer Learning

Transfer learning is the practice of using knowledge gained from a pretrained model to train and improve the performance of a model for a different task. For example, models that have been trained on the ImageNet dataset, such as MobileNet and ResNet, can help classify other image datasets. To do so, one may freeze the pretrained model and use it as a feature extractor to train a much smaller model built on top of the extracted features. One can also fine-tune the entire model to fit the new task.

Transfer learning has a series of challenges, in that the modified model may not be able to conduct its original tasks after transfer learning. Papers such as “Learning without Forgetting” by Z. Li and Hoiem (2018) aim to address these challenges, and their methods have been implemented in modern machine learning platforms.

Federated Learning

Consider the problem of labeling items that are present in a photo from personal devices. One may consider moving the image data from the devices to a central server, where a single model is trained using the image data provided by the devices. However, this presents many potential challenges. First, with many devices, one needs a massive network infrastructure to move and store data from these devices to a central location. With the number of devices that are present today, this is often not feasible and very costly. Furthermore, there are privacy challenges associated with moving personal data, such as photos, to central servers.

Federated learning by McMahan et al. (2017) is a form of distributed computing that resolves these issues by distributing the models into personal devices for them to be trained on device (Figure 7.6). At the beginning, a base global model is trained on a central server to be distributed to all devices. Using this base model, the devices individually compute the gradients and send them back to the central hub. Intuitively this is the transfer of model parameters instead of the data itself. This innovative approach allows the model to be trained with many different datasets (which, in our example, would be the set of images that are on personal devices), without the need to transfer a large amount of potentially sensitive data. However, federated learning also comes with a series of challenges.
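A single-process simulation can make the communication pattern concrete. In the sketch below, each simulated client computes a gradient on its own data and sends only that gradient and its sample count to the server, which combines them weighted by sample count. This is a simplified illustration of the idea described above, not the algorithm exactly as published; the model, the data, and all names are hypothetical.

```python
def local_gradient(w, data):
    """Gradient of the MSE loss for y = w * x, computed on one device's data."""
    return sum(-2 * x * (y - w * x) for x, y in data) / len(data)

def federated_round(w, client_datasets, learning_rate):
    """One round: clients send (sample count, gradient); the server aggregates."""
    total = sum(len(d) for d in client_datasets)
    # Raw examples never leave the client; only these summaries are shared.
    updates = [(len(d), local_gradient(w, d)) for d in client_datasets]
    # Clients with more data get proportionally more weight.
    aggregate = sum(n * g for n, g in updates) / total
    return w - learning_rate * aggregate

# Three devices with unbalanced amounts of local data, all following y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
w = 0.0                                   # base global model from the server
for _round in range(200):
    w = federated_round(w, clients, learning_rate=0.05)
```

The global parameter converges to 3 even though the server never sees an individual example. In practice, the shared updates themselves can still leak information, which is one of the challenges discussed next.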

In many real-world situations, data collected from devices may not come with suitable labels. This issue is compounded by the fact that users, who are often the primary source of data, can be unreliable. This unreliability means that even when data is labeled, there’s no guarantee of its accuracy or relevance. Furthermore, each user’s data is unique, resulting in a significant variance in the data generated by different users. This non-IID nature of data, coupled with the unbalanced data production where some users generate more data than others, can adversely impact the performance of the global model. Researchers have worked to compensate for this, such as by adding a proximal term to achieve a balance between the local and global model, and adding a frozen global hypersphere classifier.

There are additional challenges associated with federated learning. The number of mobile device owners can far exceed the average number of training samples on each device, leading to substantial communication overhead. This issue is particularly pronounced in the context of mobile networks, which are often used for such communication and can be unstable. This instability can result in delayed or failed transmission of model updates, thereby affecting the overall training process.

The heterogeneity of device resources is another hurdle. Devices participating in federated learning can have varying computational power and memory capacity. This diversity makes it challenging to design algorithms that are efficient across all devices. Privacy and security are also not guaranteed by federated learning. Techniques such as gradient inversion attacks can be used to extract information about the training data from the model parameters. Despite these challenges, the large number of potential benefits continues to make it a popular research area. Open source programs such as Flower have been developed to make it simpler to implement federated learning with a variety of machine learning frameworks.

7.6 Framework Specialization

Thus far, we have talked about ML frameworks generally. However, typically frameworks are optimized based on the target environment's computational capabilities and application requirements, ranging from the cloud to the edge to tiny devices. Choosing the right framework is crucial based on the target environment for deployment. This section provides an overview of the major types of AI frameworks tailored for cloud, edge, and TinyML environments to help understand the similarities and differences between these different ecosystems.

7.6.1 Cloud

Cloud-based AI frameworks assume access to ample computational power, memory, and storage resources in the cloud. They generally support both training and inference. Cloud-based AI frameworks are suited for applications where data can be sent to the cloud for processing, such as cloud-based AI services, large-scale data analytics, and web applications. Popular cloud AI frameworks include the ones we mentioned earlier such as TensorFlow, PyTorch, MXNet, Keras, and others. These frameworks utilize technologies like GPUs, TPUs, distributed training, and AutoML to deliver scalable AI. Concepts like model serving, MLOps, and AIOps relate to the operationalization of AI in the cloud. Cloud AI powers services like Google Cloud AI and enables transfer learning using pre-trained models.

7.6.2 Edge

Edge AI frameworks are tailored for deploying AI models on edge devices, such as IoT devices, smartphones, and edge servers. Edge AI frameworks are optimized for devices with moderate computational resources, offering a balance between power and performance. Edge AI frameworks are ideal for applications requiring real-time or near-real-time processing, including robotics, autonomous vehicles, and smart devices. Key edge AI frameworks include TensorFlow Lite, PyTorch Mobile, Core ML, and others. They employ optimizations like model compression, quantization, and efficient neural network architectures. Hardware support includes CPUs, GPUs, NPUs, and accelerators like the Edge TPU. Edge AI enables use cases like mobile vision, speech recognition, and real-time anomaly detection.

7.6.3 Embedded

TinyML frameworks are specialized for deploying AI models on extremely resource-constrained devices, specifically microcontrollers and sensors within the IoT ecosystem. TinyML frameworks are designed for devices with severely limited resources, emphasizing minimal memory and power consumption. TinyML frameworks are specialized for use cases on resource-constrained IoT devices for applications such as predictive maintenance, gesture recognition, and environmental monitoring. Major TinyML frameworks include TensorFlow Lite Micro, uTensor, and ARM NN. They optimize complex models to fit within kilobytes of memory through techniques like quantization-aware training and reduced precision. TinyML allows intelligent sensing across battery-powered devices, enabling collaborative learning via federated learning. The choice of framework involves balancing model performance and computational constraints of the target platform, whether cloud, edge or TinyML. Here is a summary table comparing the major AI frameworks across cloud, edge, and TinyML environments:

| Framework Type | Examples | Key Technologies | Use Cases |
|---|---|---|---|
| Cloud AI | TensorFlow, PyTorch, MXNet, Keras | GPUs, TPUs, distributed training, AutoML, MLOps | Cloud services, web apps, big data analytics |
| Edge AI | TensorFlow Lite, PyTorch Mobile, Core ML | Model optimization, compression, quantization, efficient NN architectures | Mobile apps, robots, autonomous systems, real-time processing |
| TinyML | TensorFlow Lite Micro, uTensor, ARM NN | Quantization-aware training, reduced precision, neural architecture search | IoT sensors, wearables, predictive maintenance, gesture recognition |

Key differences:

Cloud AI leverages massive computational power for complex models using GPUs/TPUs and distributed training.

Edge AI optimizes models to run locally on resource-constrained edge devices.

TinyML fits models into extremely low memory and compute environments like microcontrollers.

7.7 Embedded AI Frameworks

7.7.1 Resource Constraints

Embedded systems face severe resource constraints that pose unique challenges for deploying machine learning models compared to traditional computing platforms. For example, microcontroller units (MCUs) commonly used in IoT devices often have:

RAM in the range of tens of kilobytes to a few megabytes. The popular ESP8266 MCU has around 80KB RAM available to developers. This contrasts with 8GB or more on typical laptops and desktops today.

Flash storage ranging from hundreds of kilobytes to a few megabytes. The Arduino Uno microcontroller provides just 32KB of storage for code. Standard computers today have disk storage on the order of terabytes.

Processing power from just a few MHz to approximately 200MHz. The ESP8266 operates at 80MHz. This is far slower than the multi-GHz, multi-core CPUs in servers and high-end laptops.
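To make these budgets concrete, the short Python sketch below estimates how much storage a model's weights need at different numeric precisions and checks the result against the two device figures quoted above. The parameter count is a hypothetical value chosen for illustration, and the check deliberately ignores application code, activations, and runtime overhead, so it is an optimistic lower bound rather than a sizing tool.

```python
# Rough weight-storage estimate at different precisions.
# Device budgets are the figures quoted in the text; the parameter
# count below is a hypothetical example, not a real model.

BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}

DEVICE_BUDGET_BYTES = {
    "Arduino Uno flash": 32 * 1024,
    "ESP8266 RAM": 80 * 1024,
}


def weight_bytes(num_params, dtype):
    """Bytes needed to store num_params weights at the given precision."""
    return num_params * BYTES_PER_WEIGHT[dtype]


def fits(num_params, dtype, budget_bytes):
    """True if the weights alone fit (ignores code, activations, runtime)."""
    return weight_bytes(num_params, dtype) <= budget_bytes


params = 50_000  # hypothetical small model
for device, budget in DEVICE_BUDGET_BYTES.items():
    for dtype in BYTES_PER_WEIGHT:
        verdict = "fits" if fits(params, dtype, budget) else "too large"
        print(f"{device:18s} {dtype:8s} {weight_bytes(params, dtype):7d} B  {verdict}")
```

Under these assumptions, even a 50,000-parameter model only fits the 80KB budget once its weights are reduced to 8 bits, and it does not fit the 32KB budget at any of the listed precisions.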

These tight constraints make training machine learning models directly on microcontrollers infeasible in most cases. The limited RAM precludes handling large datasets for training. Energy usage for training would also quickly deplete battery-powered devices. Instead, models are trained on resource-rich systems and deployed on microcontrollers for optimized inference. But even inference poses challenges:

Model Size: Many AI models are too large to fit on embedded and IoT devices. This necessitates model compression techniques such as quantization, pruning, and knowledge distillation. Additionally, as we will see, many of the frameworks developers use for AI development carry substantial overhead and built-in libraries that embedded systems can’t support.

Complexity of Tasks: With only tens of KBs to a few MBs of RAM, IoT devices and embedded systems are constrained in the complexity of tasks they can handle. Tasks that require large datasets or sophisticated algorithms (for example, LLMs) and that would run smoothly on traditional computing platforms might be infeasible on embedded systems without compression or other optimization techniques due to memory limitations.

Data Storage and Processing: Embedded systems often process data in real-time and might not store large amounts of data locally. Conversely, traditional computing systems can hold and process large datasets in memory, enabling faster data operations and analysis as well as real-time updates.

Security and Privacy: Limited memory also restricts the complexity of the security algorithms and protocols, data encryption, reverse-engineering protections, and other safeguards that can be implemented on the device. This can potentially make some IoT devices more vulnerable to attacks.

Consequently, specialized software optimizations and ML frameworks tailored for microcontrollers are necessary to work within these tight resource bounds. Clever optimization techniques like quantization, pruning and knowledge distillation compress models to fit within limited memory (see Optimizations section). Learnings from neural architecture search help guide model designs.
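As a rough illustration of how quantization shrinks a model, the sketch below maps floating-point weights to 8-bit integers using an affine (scale and zero-point) scheme, which is one common formulation. The weight values and function names are illustrative assumptions; production toolchains typically calibrate ranges per tensor or per channel and handle many details omitted here.

```python
# Minimal affine int8 quantization sketch (illustrative, pure Python).

def quantize_int8(values):
    """Map floats to int8 codes; returns (codes, scale, zero_point)."""
    # The representable range must include 0 so that 0.0 maps exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Recover approximate floats from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-0.8, -0.1, 0.0, 0.3, 1.2]  # hypothetical weights
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)

for w, c, r in zip(weights, codes, restored):
    print(f"{w:+.3f} -> {c:4d} -> {r:+.4f}")
```

Each weight now occupies one byte instead of four, at the cost of a rounding error bounded by roughly one quantization step (`scale`).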

Hardware improvements like dedicated ML accelerators for edge and embedded devices also help alleviate constraints. For instance, Qualcomm’s Hexagon DSP provides acceleration for TensorFlow Lite models on Snapdragon mobile chips. Google’s Edge TPU packs ML performance into a tiny ASIC for edge devices. ARM Ethos-U55 offers efficient inference on Cortex-M class microcontrollers. These customized ML chips unlock advanced capabilities for resource-constrained applications.

Generally, due to the limited processing power, it’s almost always infeasible to train AI models on IoT or embedded systems. Instead, models are trained on powerful traditional computers (often with GPUs) and then deployed on the embedded device for inference. TinyML specifically deals with this, ensuring models are lightweight enough for real-time inference on these constrained devices.

7.7.2 Frameworks & Libraries

Embedded AI frameworks are software tools and libraries designed to enable AI and ML capabilities on embedded systems. These frameworks are essential for bringing AI to IoT devices, robotics, and other edge computing platforms and they are designed to work where computational resources, memory, and power consumption are limited.

7.7.3 Challenges

While embedded systems present an enormous opportunity for deploying machine learning to enable intelligent capabilities at the edge, these resource-constrained environments also pose significant challenges. Unlike typical cloud or desktop environments rich with computational resources, embedded devices introduce severe constraints around memory, processing power, energy efficiency, and specialized hardware. As a result, existing machine learning techniques and frameworks designed for server clusters with abundant resources do not directly translate to embedded systems. This section uncovers some of the challenges and opportunities for embedded systems and ML frameworks.

Fragmented Ecosystem

The lack of a unified ML framework led to a highly fragmented ecosystem. Engineers at companies like STMicroelectronics, NXP Semiconductors, and Renesas had to develop custom solutions tailored to their specific microcontroller and DSP architectures. These ad-hoc frameworks required extensive manual optimization for each low-level hardware platform. This made porting models extremely difficult, requiring redevelopment for new Arm, RISC-V or proprietary architectures.

Disparate Hardware Needs

Without a shared framework, there was no standard way to assess a hardware platform’s capabilities. Vendors like Intel, Qualcomm, and NVIDIA created integrated solutions blending model, software, and hardware improvements. This made it hard to discern the source of performance gains: whether new chip designs, such as Intel’s low-power x86 cores, or software optimizations were responsible. A standard framework was needed so vendors could evaluate their hardware’s capabilities in a fair, reproducible way.

Lack of Portability

Adapting models trained in common frameworks like TensorFlow or PyTorch to run efficiently on microcontrollers was very challenging without standardized tools. It required time-consuming manual translation of models to run on specialized DSPs from companies like CEVA or low-power Arm M-series cores. There were no turnkey tools enabling portable deployment across different architectures.

Incomplete Infrastructure

The infrastructure to support key model development workflows was lacking. There was minimal support for compression techniques to fit large models within constrained memory budgets. Tools for quantization to lower precision for faster inference were missing. Standardized APIs for integration into applications were incomplete. Essential functionality like on-device debugging, metrics, and performance profiling was absent. These gaps increased the cost and difficulty of embedded ML development.

No Standard Benchmark

Without unified benchmarks, there was no standard way to assess and compare the capabilities of different hardware platforms from vendors like NVIDIA, Arm, and Ambiq Micro. Existing evaluations relied on proprietary benchmarks tailored to showcase the strengths of particular chips. This made it impossible to objectively measure hardware improvements in a fair, neutral manner. This topic is discussed in more detail in the Benchmarking AI chapter.

Minimal Real-World Testing

Many of the benchmarks relied on synthetic data. Rigorously testing models on real-world embedded applications was difficult without standardized datasets and benchmarks. This raised questions about how performance claims would translate to real-world usage. More extensive testing was needed to validate chips in actual use cases.

The lack of shared frameworks and infrastructure slowed TinyML adoption, hampering the integration of ML into embedded products. Recent standardized frameworks have begun addressing these issues through improved portability, performance profiling, and benchmarking support. But ongoing innovation is still needed to enable seamless, cost-effective deployment of AI to edge devices.

Summary

The absence of standardized frameworks, benchmarks, and infrastructure for embedded ML has traditionally hampered adoption. However, recent progress has been made in developing shared frameworks like TensorFlow Lite Micro and benchmark suites like MLPerf Tiny that aim to accelerate the proliferation of TinyML solutions. But overcoming the fragmentation and difficulty of embedded deployment remains an ongoing process.

7.8 Examples

Machine learning deployment on microcontrollers and other embedded devices often requires specially optimized software libraries and frameworks to work within tight constraints on memory, compute, and power. Several options exist for performing inference on such resource-limited hardware, each with its own approach to optimizing model execution. This section explores the key characteristics and design principles behind TFLite Micro, TinyEngine, and CMSIS-NN, showing how each framework tackles the problem of high-accuracy yet efficient neural network execution on microcontrollers.

The following table summarizes the key differences and similarities between these three specialized machine learning inference frameworks for embedded systems and microcontrollers.

| Framework | TensorFlow Lite Micro | TinyEngine | CMSIS-NN |
|---|---|---|---|
| Approach | Interpreter-based | Static compilation | Optimized neural network kernels |
| Hardware Focus | General embedded devices | Microcontrollers | ARM Cortex-M processors |
| Arithmetic Support | Floating point | Floating point, fixed point | Floating point, fixed point |
| Model Support | General neural network models | Models co-designed with TinyNAS | Common neural network layer types |
| Code Footprint | Larger due to inclusion of interpreter and ops | Small, includes only ops needed for model | Lightweight by design |
| Latency | Higher due to interpretation overhead | Very low due to compiled model | Low latency focus |
| Memory Management | Dynamically managed by interpreter | Model-level optimization | Tools for efficient allocation |
| Optimization Approach | Some code generation features | Specialized kernels, operator fusion | Architecture-specific assembly optimizations |
| Key Benefits | Flexibility, portability, ease of updating models | Maximizes performance, optimized memory usage | Hardware acceleration, standardized API, portability |

In the following sections, we will dive into understanding each of these in greater detail.

7.8.1 Interpreter

TensorFlow Lite Micro (TFLM) is a machine learning inference framework designed for embedded devices with limited resources. It uses an interpreter to load and execute machine learning models, which provides flexibility and ease of updating models in the field (David et al. 2021).

Traditional interpreters often have significant branching overhead, which can reduce performance. However, machine learning model interpretation benefits from the efficiency of long-running kernels, where each kernel runtime is relatively large and helps mitigate interpreter overhead.

An alternative to an interpreter-based inference engine is to generate native code from a model during export. This can improve performance, but it sacrifices portability and flexibility, as the generated code needs recompilation for each target platform and must be replaced entirely to modify a model.

TFLM strikes a balance between the simplicity of code compilation and the flexibility of an interpreter-based approach by incorporating certain code-generation features. For example, the library can be constructed solely from source files, offering much of the compilation simplicity associated with code generation while retaining the benefits of an interpreter-based model execution framework.

An interpreter-based approach offers several benefits over code generation for machine learning inference on embedded devices:

Flexibility: Models can be updated in the field without recompiling the entire application.

Portability: The interpreter can be used to execute models on different target platforms without porting the code.

Memory efficiency: The interpreter can share code across multiple models, reducing memory usage.

Ease of development: Interpreters are easier to develop and maintain than code generators.

TensorFlow Lite Micro is a powerful and flexible framework for machine learning inference on embedded devices. Its interpreter-based approach offers several benefits over code generation, including flexibility, portability, memory efficiency, and ease of development.
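The interpreter idea can be sketched in a few lines of Python. This is a conceptual toy, not the TensorFlow Lite Micro API (which is a C++ library): the kernel table, the model layout, and all names below are assumptions made for illustration. The point it shows is that the model is plain data walked by a small dispatch loop, so swapping the model does not require changing or recompiling the kernels.

```python
# Toy interpreter: a model is a list of op records executed by a dispatch loop.
# All names and the model format are hypothetical.

def fully_connected(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in x]


# Kernel registry: the only "compiled" code in this sketch.
KERNELS = {"FULLY_CONNECTED": fully_connected, "RELU": relu}

# The model is data, so it can be replaced without touching the code above.
MODEL = [
    {"op": "FULLY_CONNECTED",
     "args": {"weights": [[1.0, -1.0], [0.5, 0.5]], "bias": [0.0, -1.0]}},
    {"op": "RELU", "args": {}},
]


def run(model, x):
    """Execute each node in order; the lookup per node is the interpreter overhead."""
    for node in model:
        kernel = KERNELS[node["op"]]
        x = kernel(x, **node["args"])
    return x


print(run(MODEL, [2.0, 1.0]))
```

The dispatch cost is paid once per node, while each kernel does work proportional to the layer size, which is why long-running kernels make the overhead comparatively small.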

7.8.2 Compiler-based

TinyEngine is an ML inference framework designed specifically for resource-constrained microcontrollers. It employs several optimizations to enable high-accuracy neural network execution within the tight constraints of memory, compute, and storage on microcontrollers (Lin et al. 2020).

Inference frameworks like TFLite Micro use interpreters to execute the neural network graph dynamically at runtime. This adds overhead in the form of memory to store metadata, interpretation latency, and missed optimization opportunities, although TFLite argues that the overhead is small. TinyEngine eliminates this overhead by employing a code generation approach. During compilation, it analyzes the network graph and generates specialized code to execute just that model. This code is natively compiled into the application binary, avoiding runtime interpretation costs.
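The code generation idea can be illustrated with a toy generator that turns a model description into straight-line source code with the weights baked in as constants. This is only a sketch of the concept: it emits Python rather than the native code a real framework would produce, the model format and function names are hypothetical, and it is not TinyEngine's actual generator.

```python
# Toy code generator: emit specialized straight-line code for one model.
# Model format and names are hypothetical.

MODEL = [
    {"op": "FULLY_CONNECTED",
     "weights": [[1.0, -1.0], [0.5, 0.5]], "bias": [0.0, -1.0]},
    {"op": "RELU"},
]


def generate_source(model, n_inputs):
    """Return source for infer(x) with no dispatch loop and constant weights."""
    lines = ["def infer(x):"]
    acts = [f"x[{i}]" for i in range(n_inputs)]  # expressions for live activations
    counter = 0
    for node in model:
        if node["op"] == "FULLY_CONNECTED":
            exprs = [
                " + ".join(f"{w!r} * {a}" for w, a in zip(row, acts)) + f" + {b!r}"
                for row, b in zip(node["weights"], node["bias"])
            ]
        elif node["op"] == "RELU":
            exprs = [f"max(0.0, {a})" for a in acts]
        else:
            raise ValueError(f"unsupported op: {node['op']}")
        acts = []
        for expr in exprs:
            name = f"t{counter}"
            counter += 1
            lines.append(f"    {name} = {expr}")
            acts.append(name)
    lines.append("    return [" + ", ".join(acts) + "]")
    return "\n".join(lines)


def compile_model(model, n_inputs):
    """Compile the generated source and return the specialized function."""
    namespace = {}
    exec(generate_source(model, n_inputs), namespace)
    return namespace["infer"]


source = generate_source(MODEL, 2)
print(source)
infer = compile_model(MODEL, 2)
print(infer([2.0, 1.0]))
```

The generated function contains only the operations this particular model needs, which mirrors the trade-off described above: less runtime overhead and a smaller footprint, but the code must be regenerated whenever the model changes.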

Conventional ML frameworks schedule memory per layer, trying to minimize usage for each layer separately. TinyEngine does model-level scheduling instead, analyzing memory usage across layers. It allocates a common buffer sized for the maximum memory needs across all layers. This buffer is then shared efficiently across layers to increase data reuse.
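A simplified way to see the benefit of a shared buffer is to size it for the most demanding layer and compare that with giving every activation tensor its own allocation. The sketch below assumes a purely sequential network in which only a layer's input and output need to be live at once; the layer names and byte counts are hypothetical, the baseline is a deliberately naive one chosen for contrast, and this is not TinyEngine's actual scheduling algorithm.

```python
# Shared-buffer sizing sketch for a sequential network.
# Each entry: (layer name, input activation bytes, output activation bytes).
# All sizes are hypothetical.

LAYERS = [
    ("conv1", 9_216, 18_432),
    ("conv2", 18_432, 9_216),
    ("conv3", 9_216, 4_608),
    ("fc", 4_608, 40),
]


def naive_total(layers):
    """Naive baseline: every activation tensor keeps its own buffer."""
    first_input = layers[0][1]
    return first_input + sum(out for _, _, out in layers)


def shared_buffer_size(layers):
    """One buffer sized for the layer with the largest input + output."""
    return max(inp + out for _, inp, out in layers)


print("separate buffers:", naive_total(LAYERS), "bytes")
print("shared buffer:   ", shared_buffer_size(LAYERS), "bytes")
```

For these example sizes the separate allocations total 41,512 bytes, while a single shared buffer needs 27,648 bytes, the peak demand of the largest layer.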