3 Deep Learning Primer

This section offers a brief introduction to deep learning, starting with an overview of its history, applications, and relevance to embedded AI systems. It examines core concepts of neural networks, including perceptrons, multilayer perceptrons, activation functions, and computational graphs. The primer also briefly surveys the major deep learning architectures and contrasts their applications. Finally, it compares deep learning with traditional machine learning so that readers can make informed choices between the two based on problem constraints, setting the stage for the more advanced techniques and applications in subsequent chapters.

Understand the basic concepts and definitions of deep neural networks.

Recognize there are different deep learning model architectures.

Compare deep learning and traditional machine learning approaches across various dimensions.

Acquire the basic conceptual building blocks to delve deeper into advanced deep learning techniques and applications.

3.1 Introduction

3.1.1 Definition and Importance

Deep learning, a specialized area within machine learning and artificial intelligence (AI), uses algorithms loosely modeled on the structure and function of the human brain, known as artificial neural networks. This field is a foundational element in AI, driving progress in diverse sectors such as computer vision, natural language processing, and self-driving vehicles. Its significance for embedded AI systems lies in its ability to perform intricate calculations and predictions on-device while making careful use of the limited resources available in embedded settings.

3.1.2 Brief History of Deep Learning

The idea of deep learning has its origins in early artificial neural networks. The field has experienced several cycles of interest, starting with the introduction of the Perceptron in the 1950s (Rosenblatt 1957), followed by the popularization of the backpropagation algorithm in the 1980s (Rumelhart, Hinton, and Williams 1986).

The term “deep learning” became prominent in the 2000s, a period characterized by advances in computational power and data accessibility. Important milestones include the successful training of deep networks such as AlexNet (Krizhevsky, Sutskever, and Hinton 2012), developed by Alex Krizhevsky and Ilya Sutskever with Geoffrey Hinton, a leading figure in AI, and the renewed focus on neural networks as effective tools for data analysis and modeling.

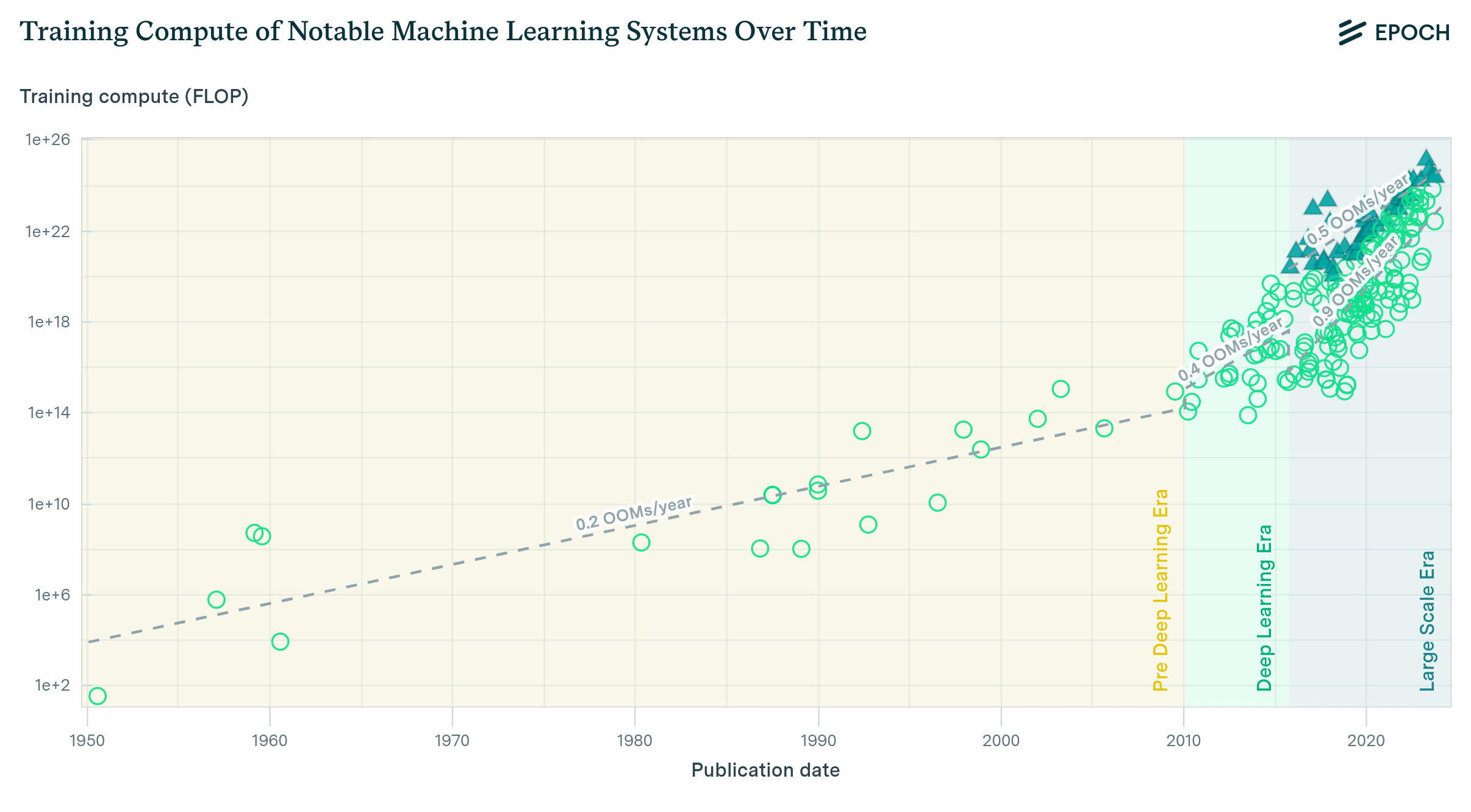

In recent years, deep learning has seen exponential growth, transforming various industries. As shown in Figure 3.1, the compute used to train models doubled roughly every 18 months from 1952 to 2010, then accelerated to a doubling roughly every 6 months from 2010 to 2022. Concurrently, large-scale models emerged between 2015 and 2022, requiring 2 to 3 orders of magnitude more training compute and following a 10-month doubling cycle.
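To make the doubling periods concrete, the short sketch below converts a doubling time into a total growth factor over a span of years. The function name `growth_factor` and the choice of a 12-year span are illustrative assumptions, not figures from the cited study.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Multiplicative growth over `years` for a quantity that doubles every `doubling_months`."""
    doublings = years * 12 / doubling_months
    return 2.0 ** doublings

# Compare the two doubling periods over the same illustrative 12-year span.
slow = growth_factor(12, 18)  # 8 doublings
fast = growth_factor(12, 6)   # 24 doublings
print(f"18-month doubling: x{slow:,.0f}")
print(f"6-month doubling:  x{fast:,.0f}")
```

Over the same span, shortening the doubling period from 18 to 6 months triples the number of doublings, which turns a growth factor in the hundreds into one in the tens of millions.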

Multiple factors have contributed to this surge, including advancements in computational power, the abundance of big data, and improvements in algorithmic designs. First, the growth of computational capabilities, especially the arrival of Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) (Jouppi et al. 2017), has significantly sped up the training and inference times of deep learning models. These hardware improvements have enabled the construction and training of more complex, deeper networks than what was possible in earlier years.

Second, the digital revolution has yielded a wealth of big data, offering rich material for deep learning models to learn from and excel in tasks such as image and speech recognition, language translation, and game playing. The presence of large, labeled datasets has been key in refining and successfully deploying deep learning applications in real-world settings.

Additionally, collaborations and open-source efforts have nurtured a dynamic community of researchers and practitioners, accelerating advancements in deep learning techniques. Innovations like deep reinforcement learning, transfer learning, and generative adversarial networks have broadened the scope of what is achievable with deep learning, opening new possibilities in various sectors including healthcare, finance, transportation, and entertainment.

Organizations around the world recognize the transformative potential of deep learning and are investing heavily in research and development to leverage its capabilities in providing innovative solutions, optimizing operations, and creating new business opportunities. As deep learning continues its upward trajectory, it is set to redefine how we interact with technology, enhancing convenience, safety, and connectivity in our lives.

3.1.3 Applications of Deep Learning

Deep learning finds extensive use across numerous industries today. In finance, it is employed for stock market prediction, risk assessment, and fraud detection. In marketing, it is used for customer segmentation, personalization, and content optimization. In healthcare, it aids in diagnosis, treatment planning, and patient monitoring. The transformative impact on society is evident.

For instance, deep learning algorithms can forecast stock market trends to inform investment strategies and financial decisions. Similarly, in healthcare, deep learning can make medical predictions that improve patient diagnosis and help save lives. On some well-defined tasks, these models can match or exceed human accuracy while producing predictions much more quickly.

In manufacturing, deep learning has had a significant impact. By continuously learning from vast amounts of data collected during the manufacturing process, companies can boost productivity while minimizing waste through improved efficiency. This financial benefit for companies translates to better quality products at lower prices for customers. Deep learning enables manufacturers to continually refine their processes, producing higher quality goods more efficiently.

Deep learning also enhances everyday products like Netflix recommendations and Google Translate text translations. Moreover, it helps companies like Amazon and Uber reduce customer service costs by swiftly identifying dissatisfied customers.

3.1.4 Relevance to Embedded AI

Embedded AI, the integration of AI algorithms directly into hardware devices, naturally benefits from the capabilities of deep learning. The combination of deep learning algorithms and embedded systems has laid the groundwork for intelligent, autonomous devices capable of advanced on-device data processing and analysis. Deep learning helps extract complex patterns and information from input data, making it an essential tool in the development of smart embedded systems, from household appliances to industrial machinery. This combination promises a new era of intelligent, interconnected devices that can learn and adapt to user behavior and environmental conditions, optimizing performance and offering new levels of convenience and efficiency.

3.2 Neural Networks

Neural networks serve as the foundation of deep learning. Inspired by the biological neural networks of the human brain, they process and analyze data hierarchically to find the patterns used in decision-making. This section covers the primary components and structures commonly found in neural networks, which underpin the more complex topics discussed later in this primer.

3.2.1 Perceptrons

The perceptron is the basic unit or node that serves as the foundation for more complex structures. A perceptron takes various inputs, applies weights and a bias to these inputs, and then uses an activation function to produce an output.

Conceived in the 1950s, perceptrons paved the way for the development of more intricate neural networks and have been a fundamental building block in the field of deep learning.
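As a minimal sketch of the computation described above, the following perceptron uses a step activation and hand-picked weights. The parameter values are assumptions chosen so that the unit behaves like a logical AND gate; they are not learned.

```python
def step(z: float) -> int:
    """Step activation: output 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0

def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(z)

# Hand-picked (not learned) parameters that make the unit act like logical AND.
weights, bias = [1.0, 1.0], -1.5
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron([a, b], weights, bias))
```

Only the input pair (1, 1) pushes the weighted sum above zero, so only that pair produces an output of 1.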

3.2.2 Multi-layer Perceptrons

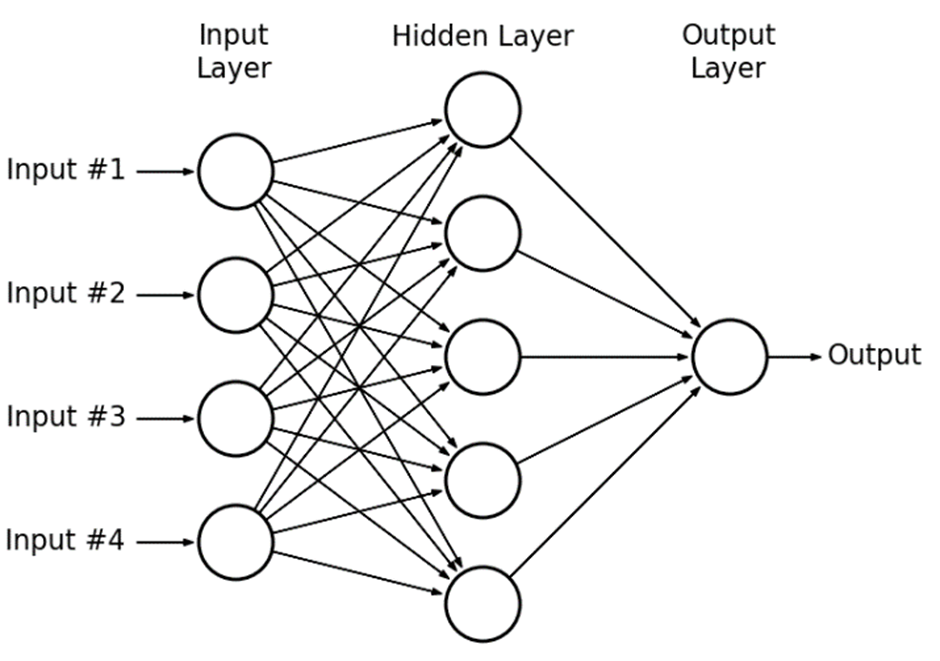

Multi-layer perceptrons (MLPs) are an evolution of the single-layer perceptron model, featuring multiple layers of nodes connected in a feedforward manner. These layers include an input layer that receives the data, one or more hidden layers that process it, and an output layer that produces the final result. MLPs can capture non-linear relationships and are trained with backpropagation, which computes the gradients that a gradient descent algorithm uses to update the weights.

Forward Pass

The forward pass is the initial phase, in which data moves through the network from the input layer to the output layer. During this phase, each layer computes a weighted sum of its inputs plus a bias, applies an activation function, and passes the resulting values to the next layer. The final output of this phase is used to compute the loss, which measures the difference between the predicted output and the actual target values.
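The forward pass can be sketched in a few lines of plain Python. The 2-2-1 layer sizes, the sigmoid activation, the mean squared error loss, and all parameter values below are illustrative assumptions; real projects would typically use a numerical library or framework instead of nested lists.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b), one row of W per neuron."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(x, params):
    """Pass x through every layer in order and return the final activations."""
    a = x
    for weights, biases in params:
        a = dense(a, weights, biases, sigmoid)
    return a

def mse(pred, target):
    """Mean squared error between prediction and target."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

# Illustrative, hand-picked parameters for a 2-2-1 network.
params = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1]),  # hidden layer: 2 neurons
    ([[1.0, -1.0]], [0.0]),                     # output layer: 1 neuron
]
prediction = forward([1.0, 2.0], params)
loss = mse(prediction, [1.0])
print(prediction, loss)
```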

Backward Pass (Backpropagation)

Backpropagation is a key algorithm in training deep neural networks. This phase calculates the gradient of the loss function with respect to each weight by applying the chain rule, effectively moving backward through the network. The gradients calculated in this step guide the adjustment of the weights with the objective of minimizing the loss function, thereby improving the network’s performance with each training iteration.
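The chain rule is easiest to follow on a very small network. The sketch below assumes a single input, one sigmoid hidden unit, a linear output, and a squared-error loss; the starting weights, learning rate, and number of steps are arbitrary illustrative choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w1, w2):
    """Tiny network: x -> sigmoid(w1 * x) -> w2 * h. Returns hidden value and output."""
    h = sigmoid(w1 * x)
    y = w2 * h
    return h, y

def loss_fn(x, target, w1, w2):
    _, y = forward(x, w1, w2)
    return 0.5 * (y - target) ** 2

def backward(x, target, w1, w2):
    """Apply the chain rule from the loss back toward the input."""
    h, y = forward(x, w1, w2)
    dL_dy = y - target             # derivative of 0.5 * (y - t)^2 with respect to y
    dL_dw2 = dL_dy * h             # because y = w2 * h
    dL_dh = dL_dy * w2
    dL_dz = dL_dh * h * (1.0 - h)  # sigmoid'(z) = h * (1 - h)
    dL_dw1 = dL_dz * x             # because z = w1 * x
    return dL_dw1, dL_dw2

x, target = 1.5, 0.8
w1, w2 = 0.3, -0.2  # arbitrary starting weights
lr = 0.5            # learning rate, chosen for illustration

losses = []
for _ in range(50):
    losses.append(loss_fn(x, target, w1, w2))
    g1, g2 = backward(x, target, w1, w2)
    w1 -= lr * g1   # gradient descent update
    w2 -= lr * g2
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.6f}")
```

Each line in `backward` is one link of the chain rule, and the loop shows how repeated forward and backward passes reduce the loss.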

Grasping these foundational concepts paves the way to understanding more intricate deep learning architectures and techniques, and to developing more sophisticated and effective applications, especially for embedded AI systems.

3.2.3 Model Architectures

Deep learning architectures refer to the various structured approaches that dictate how neurons and layers are organized and interact in neural networks. These architectures have evolved to tackle different problems and data types effectively. This section offers an overview of some well-known deep learning architectures and their characteristics.

Multi-Layer Perceptrons (MLPs)

MLPs are basic deep learning architectures, comprising three or more layers: an input layer, one or more hidden layers, and an output layer. These layers are fully connected, meaning each neuron in a layer is linked to every neuron in the preceding and following layers. MLPs can model intricate functions and are used in a broad array of tasks, such as regression, classification, and pattern recognition. Their capacity to learn non-linear relationships through backpropagation makes them a versatile instrument in the deep learning toolkit.

In embedded AI systems, MLPs can function as compact models for simpler tasks like sensor data analysis or basic pattern recognition, where computational resources are limited. Their ability to learn non-linear relationships with relatively low complexity makes them a suitable choice for embedded systems.
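To get a rough sense of how compact an MLP can be, the sketch below counts the parameters of a fully connected network and estimates the storage they need. The layer sizes describe a hypothetical sensor classifier, and the estimate covers parameters only, not activations or runtime overhead.

```python
def mlp_parameter_count(layer_sizes):
    """Weights plus biases for a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def model_size_bytes(layer_sizes, bytes_per_param=4):
    """Storage for the parameters alone (4 bytes each for 32-bit floats)."""
    return mlp_parameter_count(layer_sizes) * bytes_per_param

# Hypothetical sensor classifier: 16 input features, two hidden layers, 4 classes.
sizes = [16, 32, 16, 4]
print(mlp_parameter_count(sizes))  # number of parameters
print(model_size_bytes(sizes))     # bytes when stored as 32-bit floats
print(model_size_bytes(sizes, 1))  # bytes when stored as 8-bit integers
```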

Convolutional Neural Networks (CNNs)

CNNs are mainly used in image and video recognition tasks. This architecture employs convolutional layers that apply a series of filters to the input data to identify features like edges, corners, and textures. A typical CNN also includes pooling layers to reduce the spatial dimensions of the data, and fully connected layers for classification. CNNs have proven highly effective in tasks such as image recognition, object detection, and computer vision applications.
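The following sketch shows what a single convolutional filter and a pooling step compute. It assumes no padding, a stride of 1, a hand-written vertical-edge filter rather than a learned one, and a tiny synthetic image.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [[max(feature_map[i * size + di][j * size + dj]
                 for di in range(size) for dj in range(size))
             for j in range(cols)]
            for i in range(rows)]

# 6x6 synthetic image: dark left half (0), bright right half (1), so one vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-written filter that responds to left-to-right increases in brightness.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = conv2d(image, kernel)  # 4x4 feature map, non-zero only around the edge
pooled = max_pool2d(features)     # 2x2 map after 2x2 pooling
print(features)
print(pooled)
```

The filter responds only where its window straddles the edge, and pooling halves each spatial dimension while keeping the strongest response in each window.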

In embedded AI, CNNs are crucial for image and video recognition tasks, where real-time processing is often needed. They can be optimized for embedded systems by using techniques like quantization and pruning to minimize memory usage and computational demands, enabling efficient object detection and facial recognition functionalities in devices with limited computational resources.
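As one illustration of the quantization mentioned above, the sketch below applies symmetric linear quantization to a handful of made-up weights. This is only one of several quantization schemes, and the helper names are assumptions for this example rather than any library's API.

```python
def quantize_int8(values):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the shared scale."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.03, 0.89, -0.55]  # made-up floating-point weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight.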

Recurrent Neural Networks (RNNs)

RNNs are suitable for sequential data analysis, like time series forecasting and natural language processing. In this architecture, connections between nodes form a directed graph along a temporal sequence, allowing information to be carried across sequences through hidden state vectors. Variants of RNNs include Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), designed to capture longer dependencies in sequence data.
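The role of the hidden state can be sketched with a basic recurrent cell. The tanh activation, the layer sizes, and the parameter values below are illustrative assumptions; LSTM and GRU cells add gating on top of this basic pattern.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    return [math.tanh(sum(w * x for w, x in zip(W_xh[i], x_t))
                      + sum(w * h for w, h in zip(W_hh[i], h_prev))
                      + b_h[i])
            for i in range(len(b_h))]

def rnn_forward(sequence, W_xh, W_hh, b_h):
    """Apply the same cell to every element, carrying the hidden state forward."""
    h = [0.0] * len(b_h)
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Illustrative parameters: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

# Only the first time step carries a non-zero input.
states = rnn_forward([[1.0], [0.0], [0.0]], W_xh, W_hh, b_h)
for t, h in enumerate(states):
    print(t, h)
```

Even though the inputs at the second and third steps are zero, the hidden state stays non-zero, which is how information from earlier in the sequence reaches later steps.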

In embedded systems, these networks can be used in voice recognition systems, predictive maintenance, or in IoT devices where sequential data patterns are common. Optimizations specific to embedded platforms can assist in managing their typically high computational and memory requirements.

Generative Adversarial Networks (GANs)

GANs consist of two networks, a generator and a discriminator, trained simultaneously through adversarial training (Goodfellow et al. 2020). The generator produces data that tries to mimic the real data distribution, while the discriminator aims to distinguish between real and generated data. GANs are widely used in image generation, style transfer, and data augmentation.
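The opposing objectives of the two networks can be written as a pair of loss functions. The sketch below assumes the discriminator outputs a probability that a sample is real, uses binary cross-entropy, and adopts the commonly used non-saturating form of the generator loss; the probabilities are made-up values rather than outputs of trained networks.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: reward high scores on real data and low scores on fakes."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: reward fakes that the discriminator scores as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Made-up discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]
spotted = [0.1, 0.2]    # fakes the discriminator easily rejects
plausible = [0.6, 0.7]  # fakes that look realistic to the discriminator

print(discriminator_loss(d_real, spotted), generator_loss(spotted))
print(discriminator_loss(d_real, plausible), generator_loss(plausible))
```

When the fakes become more plausible, the generator's loss falls while the discriminator's loss rises, which is the tension that adversarial training exploits.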

In embedded settings, GANs could be used for on-device data augmentation to enhance the training of models directly on the embedded device, enabling continual learning and adaptation to new data without the need for cloud computing resources.

Autoencoders

Autoencoders are neural networks used for data compression and noise reduction (Bank, Koenigstein, and Giryes 2023). They are structured to encode input data into a lower-dimensional representation and then decode it back to its original form. Variants like Variational Autoencoders (VAEs) introduce probabilistic layers that allow for generative properties, finding applications in image generation and anomaly detection.
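The encode-then-decode structure can be illustrated with a deliberately tiny linear example. The weights below are set by hand, not trained, and assume that typical data lies on the line x2 = 2 * x1; a real autoencoder would learn its weights by minimizing reconstruction error.

```python
def encode(x, w_enc):
    """Compress a 2-D point into a single number (the latent code)."""
    return sum(w * v for w, v in zip(w_enc, x))

def decode(z, w_dec):
    """Expand the latent code back into a 2-D point."""
    return [w * z for w in w_dec]

def reconstruction_error(x, w_enc, w_dec):
    """Squared distance between the input and its reconstruction."""
    x_hat = decode(encode(x, w_enc), w_dec)
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

# Hand-set (not trained) weights suited to data on the line x2 = 2 * x1.
w_enc = [0.2, 0.4]
w_dec = [1.0, 2.0]

typical = [3.0, 6.0]   # on the line: compresses with almost no loss
unusual = [3.0, -1.0]  # off the line: reconstructed poorly

print(reconstruction_error(typical, w_enc, w_dec))
print(reconstruction_error(unusual, w_enc, w_dec))
```

Inputs that fit the pattern the autoencoder captures are reconstructed almost exactly, while inputs that do not fit produce a large reconstruction error, which is the basis for using autoencoders in anomaly detection.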

Using autoencoders can help in efficient data transmission and storage, improving the overall performance of embedded systems with limited computational and memory resources.

Transformer Networks

Transformer networks have emerged as a powerful architecture, especially in natural language processing (Vaswani et al. 2017). These networks use self-attention mechanisms to weigh the influence of different input words on each output word, enabling parallel computation and capturing intricate patterns in data. Transformer networks have led to state-of-the-art results in tasks like language translation, summarization, and text generation.
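Self-attention can be sketched as scaled dot-product attention over a few token vectors. The embeddings below are made-up numbers, and for brevity the same vectors serve as queries, keys, and values; an actual transformer layer would first apply learned projections and typically use several attention heads.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, computed row by row."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this position attends to each position
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three token embeddings of dimension 2 (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each output row is a weighted average of all value vectors, and because no row depends on another row's result, the rows can be computed in parallel.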

These networks can be optimized to perform language-related tasks directly on-device. For example, transformers can be used in embedded systems for real-time translation services or voice-assisted interfaces, where latency and computational efficiency are crucial. Techniques such as model distillation can be employed to deploy these networks on embedded devices with limited resources.

Each of these architectures serves specific purposes and excels in different domains, offering a rich toolkit for addressing diverse problems in the realm of embedded AI systems. Understanding the nuances of these architectures is crucial in designing effective and efficient deep learning models for various applications.

3.2.4 Traditional ML vs Deep Learning

To succinctly highlight the differences, a comparative table illustrates the contrasting characteristics between traditional ML and deep learning:

| Aspect | Traditional ML | Deep Learning |
|---|---|---|
| Data Requirements | Low to Moderate (efficient with smaller datasets) | High (requires large datasets for nuanced learning) |
| Model Complexity | Moderate (suitable for well-defined problems) | High (detects intricate patterns, suited for complex tasks) |
| Computational Resources | Low to Moderate (cost-effective, less resource-intensive) | High (demands substantial computational power and resources) |
| Deployment Speed | Fast (quicker training and deployment cycles) | Slow (prolonged training times, especially with larger datasets) |
| Interpretability | High (clear insights into decision pathways) | Low (complex layered structures, “black box” nature) |
| Maintenance | Easier (simple to update and maintain) | Complex (requires more efforts in maintenance and updates) |

3.2.5 Choosing Traditional ML vs. DL

Data Availability and Volume

Amount of Data: Traditional machine learning algorithms, such as decision trees or Naive Bayes, are often more suitable when data availability is limited, offering robust predictions even with smaller datasets. This is particularly true in cases like medical diagnostics for disease prediction and customer segmentation in marketing.

Data Diversity and Quality: Traditional machine learning algorithms are flexible in handling various data types and often require less preprocessing compared to deep learning models. They may also be more robust in situations with noisy data.

Complexity of the Problem

Problem Granularity: Problems that are simple to moderately complex, which may involve linear or polynomial relationships between variables, often find a better fit with traditional machine learning methods.

Hierarchical Feature Representation: Deep learning models are excellent in tasks that require hierarchical feature representation, such as image and speech recognition. However, not all problems require this level of complexity, and traditional machine learning algorithms may sometimes offer simpler and equally effective solutions.

Hardware and Computational Resources

Resource Constraints: The availability of computational resources often influences the choice between traditional ML and deep learning. The former is generally less resource-intensive and thus preferable in environments with hardware limitations or budget constraints.

Scalability and Speed: Traditional machine learning algorithms, like support vector machines (SVM), often allow for faster training times and easier scalability, particularly beneficial in projects with tight timelines and growing data volumes.

Regulatory Compliance

Regulatory compliance is crucial in various industries, requiring adherence to regulations such as the GDPR in the EU as well as to industry guidelines and best practices. Traditional ML models, due to their inherent interpretability, often align better with these requirements, especially in sectors like finance and healthcare.

Interpretability

Understanding the decision-making process is easier with traditional machine learning techniques compared to deep learning models, which function as “black boxes,” making it challenging to trace decision pathways.

3.2.6 Making an Informed Choice

Given the constraints of embedded AI systems, understanding the differences between traditional ML techniques and deep learning becomes essential. Both avenues offer unique advantages, and their distinct characteristics often dictate the choice of one over the other in different scenarios.

Despite this, deep learning has been steadily outperforming traditional machine learning methods in several key areas due to a combination of abundant data, computational advancements, and proven effectiveness in complex tasks.

Here are some specific reasons why we focus on deep learning in this text:

Superior Performance in Complex Tasks: Deep learning models, particularly deep neural networks, excel in tasks where the relationships between data points are highly intricate. Tasks like image and speech recognition, language translation, and playing complex games like Go and chess have seen significant advancements primarily through deep learning algorithms.

Efficient Handling of Unstructured Data: Unlike traditional machine learning methods, deep learning can process unstructured data more effectively. This is crucial in today’s data landscape, where a large majority of data is unstructured, such as text, images, and videos.

Leveraging Big Data: With the availability of big data, deep learning models have the capacity to continually learn and improve. These models excel at utilizing large datasets to enhance their predictive accuracy, whereas traditional machine learning approaches often benefit less from additional data.

Hardware Advancements and Parallel Computing: The advent of powerful GPUs and the availability of cloud computing platforms have enabled the rapid training of deep learning models. These advancements have addressed one of the significant challenges of deep learning: the need for substantial computational resources.

Dynamic Adaptability and Continuous Learning: Deep learning models can adapt to new information or data dynamically. They can be trained to generalize their learning to new, unseen data, which is crucial in rapidly evolving fields like autonomous driving or real-time language translation.

While deep learning has gained significant traction, it’s essential to understand that traditional machine learning is far from obsolete. As we delve deeper into the intricacies of deep learning, we will also highlight situations where traditional machine learning methods may be more appropriate due to their simplicity, efficiency, and interpretability. By focusing on deep learning in this text, we aim to equip readers with the knowledge and tools needed to tackle modern, complex problems across various domains, while also providing insights into the comparative advantages and appropriate application scenarios for both deep learning and traditional machine learning techniques.

3.3 Conclusion

Deep learning has risen as a potent set of techniques for addressing intricate pattern recognition and prediction challenges. Starting with an overview, we outlined the fundamental concepts and principles governing deep learning, laying the groundwork for more advanced studies.

We then explored the basic ideas of neural networks, the computational models at the heart of deep learning, which are inspired by the human brain’s interconnected neuron structure. This exploration allowed us to appreciate the capabilities and potential of neural networks in creating sophisticated algorithms capable of learning and adapting from data.

Understanding the role of libraries and frameworks was a key part of our discussion, offering insights into the tools that can facilitate the development and deployment of deep learning models. These resources not only ease the implementation of neural networks but also open avenues for innovation and optimization.

Next, we tackled the challenges one might face when embedding deep learning algorithms within embedded systems, providing a critical perspective on the complexities and considerations that come with bringing AI to edge devices.

Furthermore, we delved into an examination of the limitations of deep learning. Through a series of discussions, we unraveled the challenges faced in deep learning applications and outlined scenarios where traditional machine learning might outperform deep learning. These sections are crucial for fostering a balanced view of the capabilities and limitations of deep learning.

In this primer, we have equipped you with the knowledge to make informed choices between deploying traditional machine learning or deep learning techniques, depending on the unique demands and constraints of a specific problem.

As we conclude this chapter, we hope you are now well-equipped with the basic “language” of deep learning and prepared to delve deeper into the subsequent chapters with a solid understanding and critical perspective. The journey ahead is filled with exciting opportunities and challenges in embedding AI within systems.

3.4 Exercises

Now would be an excellent time to try some deep learning models:

Resources

Here is a curated list of resources to support both students and instructors in their learning and teaching journey. We are continuously working on expanding this collection and will be adding new exercises in the near future.